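Step 1: In a terminal, start a consumer that waits for the data produced by the producer.

Code implementation

Create a Maven project

- Add dependencies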

    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>2.4.1</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-api</artifactId>
        <version>1.7.25</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
        <version>1.7.25</version>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka_2.12</artifactId>
        <version>2.4.1</version>
    </dependency>

- Add log4j.properties under the resources directory

    ### Settings ###
    log4j.rootLogger = debug,stdout,D,E

    ### Write log output to the console ###
    log4j.appender.stdout = org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.Target = System.out
    log4j.appender.stdout.layout = org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern = [%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

    ### Write logs at DEBUG level and above to E://logs/log.log ###
    log4j.appender.D = org.apache.log4j.DailyRollingFileAppender
    log4j.appender.D.File = E://logs/log.log
    log4j.appender.D.Append = true
    log4j.appender.D.Threshold = DEBUG
    log4j.appender.D.layout = org.apache.log4j.PatternLayout
    log4j.appender.D.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

    ### Write logs at ERROR level and above to E://logs/error.log ###
    log4j.appender.E = org.apache.log4j.DailyRollingFileAppender
    log4j.appender.E.File = E://logs/error.log
    log4j.appender.E.Append = true
    log4j.appender.E.Threshold = ERROR
    log4j.appender.E.layout = org.apache.log4j.PatternLayout
    log4j.appender.E.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

Case 1: Create a producer

public class CustomerProducer {

// 配置信息来源:ProducerConfig

public static void main(String[] args) {

Properties props = new Properties();

// Kafka服务端的主机名和端口号

props.put("bootstrap.servers", "hcmaster:9092");

// 应答级别:等待所有副本节点的应答

props.put("acks", "all");

// 消息发送最大尝试次数

props.put("retries", 0);

// 一批消息处理大小

props.put("batch.size", 16384);//16k

// 请求延时

props.put("linger.ms", 1);

// 发送缓存区内存大小

props.put("buffer.memory", 33554432);//32M

// key序列化

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

// value序列化

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

KafkaProducer<String, String> producer = new KafkaProducer<>(props);

//循环发送数据

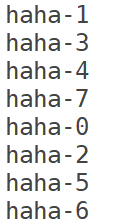

for (int i = 0; i < 8; i++) {

ProducerRecord<String, String> data = new ProducerRecord<>("first", Integer.toString(i), "haha-" + i);

producer.send(data);

}

producer.close();

}

}
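The producer settings in this example are repeated almost unchanged in the callback and custom-partitioner producers. As a rough sketch of how they could be collected in one place, the helper below builds the same `Properties`. The class name `ProducerProps` and the method `build` are hypothetical and do not appear in the original code; the sizes are written as products only to make the 16 KB and 32 MB values visible.

```java
import java.util.Properties;

// Hypothetical helper (not part of the original code): gathers the producer
// settings shared by the examples so each main method can reuse them.
final class ProducerProps {

    static final String STRING_SERIALIZER =
            "org.apache.kafka.common.serialization.StringSerializer";

    private ProducerProps() {
    }

    // Returns the settings used in the examples for the given broker list.
    static Properties build(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("acks", "all");                      // wait for all in-sync replicas
        props.put("retries", 0);                       // do not retry failed sends
        props.put("batch.size", 16 * 1024);            // 16384 bytes per batch
        props.put("linger.ms", 1);                     // wait up to 1 ms to fill a batch
        props.put("buffer.memory", 32 * 1024 * 1024);  // 33554432 bytes of send buffer
        props.put("key.serializer", STRING_SERIALIZER);
        props.put("value.serializer", STRING_SERIALIZER);
        return props;
    }
}
```

With such a helper, a producer could be created as `new KafkaProducer<>(ProducerProps.build("hcmaster:9092"))`, and the custom-partitioner case would only need to add `partitioner.class` to the returned object.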

Run the program and check the result in the consumer terminal:

Case 2: Create a producer with a callback

public class CallBackProducer {

public static void main(String[] args) throws InterruptedException {

Properties props = new Properties();

// Kafka服务端的主机名和端口号

props.put("bootstrap.servers", "hcmaster:9092,hcslave1:9092,hcslave2:9092");

// 等待所有副本节点的应答

props.put("acks", "all");

// 消息发送最大尝试次数

props.put("retries", 0);

// 一批消息处理大小

props.put("batch.size", 16384);

// 增加服务端请求延时

props.put("linger.ms", 1);

// 发送缓存区内存大小

props.put("buffer.memory", 33554432);

// key序列化

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

// value序列化

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(props);

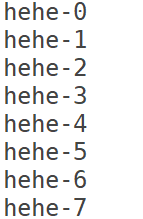

for (int i = 0; i < 8; i++) {

Thread.sleep(500);

ProducerRecord<String, String> pr = new ProducerRecord<>("first", Integer.toString(i), "hehe-" + i);

kafkaProducer.send(pr, new Callback() {

@Override

public void onCompletion(RecordMetadata metadata, Exception exception) {

if(exception != null){

System.out.println("发送失败");

}else {

System.out.print("发送成功: ");

if (metadata != null) {

System.out.println(metadata.topic()+" - "+metadata.partition() + " - " + metadata.offset());

}

}

}

});

}

kafkaProducer.close();

}

}
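The callback above only prints each outcome. If a summary of the whole run is wanted, the outcomes can be counted instead. The sketch below mirrors the `(metadata, exception)` shape of `onCompletion`, but it is a stand-in: the class `SendStats` is hypothetical, and a plain `String` replaces `RecordMetadata` so the example runs without Kafka on the classpath. Atomic counters are used because Kafka generally runs callbacks on the producer's I/O thread rather than on the thread that called `send`.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a send callback: counts successes and failures.
// A String takes the place of RecordMetadata to keep the sketch self-contained.
final class SendStats {

    private final AtomicInteger succeeded = new AtomicInteger();
    private final AtomicInteger failed = new AtomicInteger();

    // Same contract as in the example: a non-null exception means the send failed.
    void onCompletion(String metadata, Exception exception) {
        if (exception != null) {
            failed.incrementAndGet();
        } else {
            succeeded.incrementAndGet();
        }
    }

    int succeeded() {
        return succeeded.get();
    }

    int failed() {
        return failed.get();
    }

    // Short human-readable summary of all outcomes recorded so far.
    String summary() {
        return "sent=" + succeeded.get() + ", failed=" + failed.get();
    }
}
```

In the real producer, the same counting could be done inside `onCompletion`, with `summary()` printed after `kafkaProducer.close()` returns.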

Result in the IntelliJ console:

Result in the consumer terminal:

Case 3: Create a producer with a custom partitioner

- Custom Partitioner

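    // The package must match the partitioner.class value set in the producer
    package com.hc.producer.customerparitioner;

    import java.util.Map;

    import org.apache.kafka.clients.producer.Partitioner;
    import org.apache.kafka.common.Cluster;

    public class CustomPartitioner implements Partitioner {

        @Override
        public void configure(Map<String, ?> configs) {
            // No configuration needed
        }

        @Override
        public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) {
            // Route every record to partition 1 (the topic needs at least two partitions)
            return 1;
        }

        @Override
        public void close() {
            // Nothing to release
        }
    }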
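The partitioner above always returns partition 1, which is enough to show that the custom class is being used. A partitioner that spreads records would usually derive the partition from the key. The sketch below shows one simple way to do that in plain Java; the class `KeyHashPartitioning` and the method `choosePartition` are hypothetical, `Arrays.hashCode` is a stand-in rather than the murmur2 hash that Kafka's default partitioner applies to the key bytes, and sending keyless records to partition 0 is an assumption made only for this example.

```java
import java.util.Arrays;

// Hypothetical helper (not from the original): maps key bytes to a partition.
final class KeyHashPartitioning {

    private KeyHashPartitioning() {
    }

    // Returns a partition in the range [0, numPartitions) for the given key bytes.
    static int choosePartition(byte[] keyBytes, int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        if (keyBytes == null) {
            return 0; // assumption for this sketch: keyless records go to partition 0
        }
        // floorMod keeps the result non-negative even when the hash is negative
        return Math.floorMod(Arrays.hashCode(keyBytes), numPartitions);
    }
}
```

Inside a real `partition(...)` method, the partition count could be taken from `cluster.partitionsForTopic(topic).size()` and passed in together with `keyBytes`.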

- Custom Producer

public class CallBackProducer {

public static void main(String[] args) throws InterruptedException {

Properties props = new Properties();

// Kafka服务端的主机名和端口号

props.put("bootstrap.servers", "hcmaster:9092,hcslave1:9092,hcslave2:9092");

// 等待所有副本节点的应答

props.put("acks", "all");

// 消息发送最大尝试次数

props.put("retries", 0);

// 一批消息处理大小

props.put("batch.size", 16384);

// 增加服务端请求延时

props.put("linger.ms", 1);

// 发送缓存区内存大小

props.put("buffer.memory", 33554432);

// key序列化

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

// value序列化

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

// 自定义分区

props.put("partitioner.class", "com.hc.producer.customerparitioner.CustomPartitioner");

KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(props);

for (int i = 0; i < 8; i++) {

Thread.sleep(500);

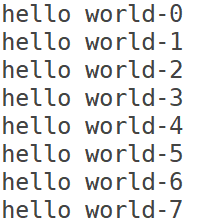

ProducerRecord<String, String> pr = new ProducerRecord<>("first", Integer.toString(i), "hello world-" + i);

kafkaProducer.send(pr, (metadata, exception) -> {

if (exception != null) {

System.out.println("发送失败");

} else {

System.out.print("发送成功: ");

if (metadata != null) {

System.out.println(metadata.partition() + " --- " + metadata.offset());

}

}

});

}

kafkaProducer.close();

}

}

- Result

Result in the IntelliJ console:

Result in the consumer terminal: