Below are some errors I ran into while building a convolutional neural network. I hope sharing them is useful to others.

Error1

ResourceExhaustedError (see above for traceback): OOM when allocating tensor with shape[32,1,5,5] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc

[[node Conv2D (defined at /home/xiaoshumiao/PycharmProjects/tensorflow/modular/cnn_define.py:30) = Conv2D[T=DT_FLOAT, data_format="NCHW", dilations=[1, 1, 1, 1], padding="SAME", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true, _device="/job:localhost/replica:0/task:0/device:GPU:0"](Conv2D-0-TransposeNHWCToNCHW-LayoutOptimizer, Variable/read)]]

Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.

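Solution:

The dataset I had imported earlier stored each training sample as a one-dimensional vector, whereas the data loaded through Keras are two-dimensional images. That suits a CNN well, but the functions I had defined for one-dimensional input no longer match the data format. I therefore replaced the single input size in_size with two parameters, in_size_x and in_size_y: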

# Assumes the TensorFlow 1.x names used below (placeholder, float32, reshape, ...)
# are already imported, e.g. via `from tensorflow import *`.
class cnn_layer2(object):
    def __init__(self, in_size_x, in_size_y, out_size):
        self.xs = placeholder(float32, [None, in_size_x, in_size_y])
        self.ys = placeholder(float32, [None, out_size])  # !!! transform format
        self.keep_prob = placeholder(float32)
        # -1: batch size is inferred; 28, 28: picture size; 1: channel
        self.x_image = reshape(self.xs, [-1, 28, 28, 1])
        self.cnn1 = add_layer_5x5(self.x_image, 1, 32)
        self.cnn2 = add_layer_5x5(self.cnn1, 32, 64)
        # !!! transform format
        self.fnn1 = add_layer_dropput(transpose(reshape(self.cnn2, [-1, 7*7*64])), 7*7*64, 1024, keep_prob=self.keep_prob)
        self.fnn2 = add_layer_dropput(self.fnn1, 1024, 10, keep_prob=self.keep_prob, activation_function=nn.softmax)
        # cross-entropy
        self.loss = reduce_mean(-reduce_sum(multiply(transpose(self.ys), log(self.fnn2)), reduction_indices=[0]))
        self.train_step = train.AdamOptimizer(0.0001).minimize(self.loss)
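The constants 28, 28, 1 and 7*7*64 in the class above follow from the layer geometry. As a rough check, the sketch below traces the spatial size through two blocks of stride-1 SAME convolution followed by 2x2 max pooling with stride 2, which is what add_layer_5x5 (defined at the end of this post) does. The helper names same_out and trace_shapes are made up for this illustration.

```python
import math


def same_out(size, stride):
    """Spatial output size under SAME padding: ceil(size / stride)."""
    return math.ceil(size / stride)


def trace_shapes(height, width, channels_per_layer):
    """Follow (H, W, C) through conv(SAME, stride 1) + max_pool(2x2, stride 2) blocks."""
    shapes = []
    for out_channels in channels_per_layer:
        # A stride-1 convolution with SAME padding keeps H and W unchanged.
        height, width = same_out(height, 1), same_out(width, 1)
        # 2x2 max pooling with stride 2 halves H and W (rounding up).
        height, width = same_out(height, 2), same_out(width, 2)
        shapes.append((height, width, out_channels))
    return shapes


shapes = trace_shapes(28, 28, [32, 64])
print(shapes)  # [(14, 14, 32), (7, 7, 64)]

h, w, c = shapes[-1]
flat_size = h * w * c
print(flat_size)  # 3136, i.e. 7*7*64
```

The first tuple also explains the 14*14*32 used later when the first pooling output is reshaped for inspection.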

For the format of the data loaded through Keras, see the post linked below:

Fixing the TF upgrade warning: Please use alternatives such as official/mnist/dataset.py from tensorflow/models

Error2

Traceback (most recent call last):

File "/home/xiaoshumiao/PycharmProjects/tensorflow/main/Convolutional_Neural_Network.py", line 22, in <module>

train_x_betch = train_data.row(train_data)

File "/home/xiaoshumiao/PycharmProjects/tensorflow/modular/random_choose.py", line 23, in row

y = y_data[self.num[0:self.n],: ]

TypeError: 'corresponding_choose' object is not subscriptable

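The main cause here is that the random-sampling helper I wrote earlier was also designed around one-dimensional samples, so it did not fit random sampling of two-dimensional image data either. (The traceback also shows the corresponding_choose object itself, train_data, being passed to row, which is what makes Python report that the object is not subscriptable.)

Original code: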

class corresponding_choose(object):
    def __init__(self, data, n, m=1):
        self.num = arange(data.shape[m])
        random.shuffle(self.num)
        self.n = n

    def col(self, x_data):
        self.x = x_data[:, self.num[0:self.n]]
        return self.x

    def row(self, y_data):
        y = y_data[self.num[0:self.n], :]
        return y

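Later, following the blog post "Python二维数组与三维数组切片详解" (a detailed guide to slicing two- and three-dimensional arrays in Python), I added two methods for slicing three-dimensional arrays: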

    # Note: in NumPy indexing, `::` selects the full axis, exactly like `:`.
    def col_2(self, x_data):
        self.x = x_data[::, self.num[0:self.n]]
        return self.x

    def row_2(self, y_data):
        y = y_data[self.num[0:self.n], ::]
        return y

It works!
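To see what these slices return on image data, here is a small NumPy sketch. The arrays are random stand-ins, not MNIST, and the variable names are chosen only for this illustration. It suggests that `[idx, :]`, `[idx, ::]` and plain `[idx]` all select the same samples from a three-dimensional array, so the important requirement is that the object being indexed is the array itself.

```python
import numpy as np

rng = np.random.default_rng(0)
images = rng.random((10, 28, 28))  # stand-in for 10 grayscale images
labels = rng.random((10, 10))      # stand-in for 10 label rows

# Shuffle the sample indices and keep the first 4, as corresponding_choose does.
idx = np.arange(images.shape[0])
rng.shuffle(idx)
batch_idx = idx[:4]

batch_a = images[batch_idx, :]   # single colon
batch_b = images[batch_idx, ::]  # double colon, as in row_2
batch_c = images[batch_idx]      # no trailing slice at all

print(batch_a.shape)  # (4, 28, 28)
print(np.array_equal(batch_a, batch_b))  # True
print(np.array_equal(batch_a, batch_c))  # True

# The same indices pick the matching label rows.
label_batch = labels[batch_idx, :]
print(label_batch.shape)  # (4, 10)
```

Using one shared index array for images and labels is what keeps each sampled image paired with its own label.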

Error3

tensorflow.python.framework.errors_impl.ResourceExhaustedError: OOM when allocating tensor with shape[10000,32,28,28] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc

[[{{node Conv2D}} = Conv2D[T=DT_FLOAT, data_format="NCHW", dilations=[1, 1, 1, 1], padding="SAME", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true, _device="/job:localhost/replica:0/task:0/device:GPU:0"](Conv2D-0-TransposeNHWCToNCHW-LayoutOptimizer, Variable/read)]]

Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.
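The size of the tensor named in this message can be checked with simple arithmetic. The sketch below assumes 4 bytes per element (the message says type float, i.e. float32); tensor_bytes is a helper written for this illustration, and the batch of 500 is included for comparison because that is the sample size used in the fix.

```python
from functools import reduce
from operator import mul


def tensor_bytes(shape, bytes_per_element=4):
    """Bytes needed to store one dense tensor of the given shape."""
    return reduce(mul, shape, 1) * bytes_per_element


full = tensor_bytes([10000, 32, 28, 28])
small = tensor_bytes([500, 32, 28, 28])

print(full)                       # 1003520000 bytes
print(round(full / 2**30, 2))     # 0.93 (GiB)
print(round(small / 2**20, 1))    # 47.9 (MiB)
```

This counts a single activation tensor only. The second convolution, the fully connected layers and the workspace used by cuDNN all need memory on top of it.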

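This error means the GPU ran out of memory. It was triggered because I fed the entire test set when evaluating the trained model, i.e. an effective batch size of 10000. Drawing a sample from the test set, just as for the training batches, resolves it: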

test_data = corresponding_choose(test_x_image, 500, m=0)

test_x_betch = test_data.row_2(test_x_image)

Note that the label data are a two-dimensional array, not images, so for the labels use the original row method:

test_y_betch = test_data.row(test_y)
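Sampling 500 test images gives an accuracy estimate on that sample only. If accuracy on the whole test set is wanted without exceeding GPU memory, one option is to feed the test set in consecutive chunks and accumulate the number of correct predictions. The sketch below is framework-agnostic: predict_fn is a hypothetical stand-in for whatever call returns class scores (for example a sess.run on the output layer), and it assumes scores and labels are laid out as (samples, classes), which may differ from the layout used in this post.

```python
import numpy as np


def evaluate_in_chunks(predict_fn, x, y, chunk_size=500):
    """Accuracy over all of x, feeding at most chunk_size samples at a time."""
    correct = 0
    for start in range(0, len(x), chunk_size):
        x_chunk = x[start:start + chunk_size]
        y_chunk = y[start:start + chunk_size]
        predicted = np.argmax(predict_fn(x_chunk), axis=1)
        expected = np.argmax(y_chunk, axis=1)
        correct += int(np.sum(predicted == expected))
    return correct / len(x)


def dummy_predict(x_chunk):
    """Hypothetical model that always predicts class 0."""
    scores = np.zeros((len(x_chunk), 10))
    scores[:, 0] = 1.0
    return scores


x = np.zeros((1200, 28, 28))           # stand-in images
y = np.eye(10)[np.arange(1200) % 10]   # one-hot labels cycling through 0..9
acc = evaluate_in_chunks(dummy_predict, x, y, chunk_size=500)
print(acc)  # 0.1
```

The last chunk here holds only 200 samples; slicing handles that without special cases.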

Error4

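Although the program now ran, the accuracy stayed at around 0.1, which is clearly wrong: with ten classes that is chance level. I printed the output of the last layer of the fully connected network, with the following result:

An array for a batch of 100 samples in which every value is nan.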

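Attempts

1. Tried changing the input data from two-dimensional to one-dimensional: ×.

2. Inspected the output of each layer with print or pandas (one test sample, one iteration).

debug 1: output of the first pooling layer

Using print: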

# SAME-padded convolution, 32 kernels
b = sess.run(n.cnn1, feed_dict={n.xs: train_x_betch, n.ys: train_y_betch, n.keep_prob: 1}).reshape(-1,14*14*32)

print(b)

Using pandas:

b = sess.run(n.cnn1, feed_dict={n.xs: train_x_betch, n.ys: train_y_betch, n.keep_prob: 1}).reshape(-1,14*14*32)

a = pd.DataFrame(data=b)

a.to_csv('/home/xiaoshumiao/PycharmProjects/tensorflow/main/0.csv')

The values obtained were -3.4028235e+38, i.e. the most negative finite value a float32 can hold.
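That this number really is the float32 lower limit can be confirmed with NumPy. This is only a sanity check on the printed value; it says nothing about why the pooling layer produced it.

```python
import numpy as np

lowest = np.finfo(np.float32).min
print(lowest)

# The value seen in the pooling output rounds to exactly this limit in float32.
print(np.float32(-3.4028235e+38) == lowest)  # True

# It is a finite number, so a plain isnan/isinf check would not flag it.
print(bool(np.isfinite(lowest)))  # True
```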

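debug 2: output of the first convolutional layer

Because the convolution output is wrapped inside a function as a local variable, inspecting it takes an extra step. For how to reach such a variable, see the post "python在一个函数中调用另一函数中的变量" (using a variable from one Python function inside another).

The result was nan.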
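Scanning printed arrays or a CSV by eye gets tedious once several layers are involved. A small helper like the one below can summarize each fetched array instead. It is a sketch written for this illustration (the name summarize and the report fields are not from the original code), and it also counts values sitting at the float32 limits, since those are finite and would pass an isnan check.

```python
import numpy as np


def summarize(name, values):
    """Count nan, inf and float32-limit entries in one layer's output."""
    arr = np.asarray(values, dtype=np.float32)
    limits = np.finfo(np.float32)
    return {
        "name": name,
        "nan": int(np.isnan(arr).sum()),
        "inf": int(np.isinf(arr).sum()),
        "at_float32_limit": int(np.sum((arr == limits.min) | (arr == limits.max))),
    }


# Stand-in values; in practice this would be the array returned by sess.run.
sample = [0.5, float("nan"), float(np.finfo(np.float32).min)]
report = summarize("cnn1", sample)
print(report)
```

Calling it on each layer's output in order shows the first layer at which bad values appear.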

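debug 3: weight values

(1) The weights and biases are initialized normally, but their values are nan after one pass of the convolutional network with training.

(2) When tf.train is not run, i.e. no gradient update is performed, the parameters stay normal.

(3) Once training is added, the parameter values become nan.

So the problem most likely arises during backpropagation of the gradients.

(1) Removed max pooling: ×.

(2) Replaced the fully connected layer function that includes dropout with one that does not: ×.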

…

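Just when I was completely stuck, I came across a sentence in the blog post "tensorflow NAN常见原因和解决方法" (common causes of NaN in TensorFlow and how to fix them): if there are too many relu activation functions…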

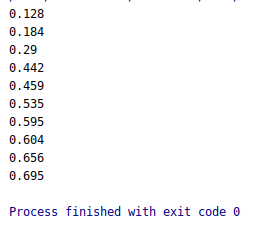

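So I switched the hidden-layer activation function to sigmoid, and finally…

The accuracy can be improved later with various tricks. The most important thing is that it finally works.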
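Apart from the choice of activation, a frequently reported source of nan with a hand-written cross-entropy of the form -sum(ys * log(softmax_output)), as used in cnn_layer2, is a softmax output that reaches exactly 0: log(0) is -inf, and 0 * -inf is nan. Whether that was part of the cause here is not established by this post. The pure-Python sketch below only illustrates the mechanism and one common mitigation, clipping the probability from below; the eps value is an arbitrary choice for the example.

```python
import math


def cross_entropy(labels, probs, eps=0.0):
    """-sum(y * log(p)), with probabilities clipped from below at eps."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = max(p, eps)
        # math.log(0.0) raises an exception, so mimic the -inf that
        # array libraries return for log(0).
        log_p = math.log(p) if p > 0.0 else float("-inf")
        total += y * log_p
    return -total


labels = [1.0, 0.0, 0.0]
probs = [1.0, 0.0, 0.0]  # a saturated softmax that is even correct

unclipped = cross_entropy(labels, probs)
clipped = cross_entropy(labels, probs, eps=1e-10)

print(math.isnan(unclipped))   # True: 0 * -inf poisons the sum
print(math.isfinite(clipped))  # True
```

In TensorFlow 1.x the same idea is usually expressed with tf.clip_by_value on the softmax output before taking the log, or by using a loss that works on logits directly.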

Below is the code that defines the convolutional layers:

def weight_variable(shape):
    initial = truncated_normal(shape, stddev=0.1)
    return Variable(initial)

def bias_variable(shape):
    initial = constant(0.1, shape=shape)
    return Variable(initial)

def conv2d_conventional(x, W):
    # strides = [batch, height, width, channel], all 1;
    # same as the figure on p. 214 of 《深度学习》 (Deep Learning)
    return nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2_nonrepeat(x):
    # 2x2 window with stride 2, so pooling windows do not overlap
    return nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

def add_layer_5x5(x, in_layer, out_layer):
    # 《深度学习》 (Deep Learning) p. 212; patch: 5x5;
    # in_layer: input channels (e.g. 1); out_layer: output channels (e.g. 32)
    W_conv = weight_variable([5, 5, in_layer, out_layer])
    b_conv = bias_variable([out_layer])
    output = nn.relu(conv2d_conventional(x, W_conv) + b_conv)
    a = max_pool_2x2_nonrepeat(output)
    return a

For an analysis of the structure of convolutional neural networks, follow my blog; more updates are coming!

May the Force be with you.