Article Directory

- 1 How volatile ensures visibility

- 2 Why volatile cannot guarantee atomicity

- 3 Why volatile can guarantee ordering

- 3.1 The single-threaded rule that prohibits reordering: as-if-serial

- 3.1 The multi-threaded rule that prohibits reordering: happens-before

- 3.1.1 Definition of happens-before and further understanding

- 3.1.2 Details of the happens-before rules

- 3.2 Revisiting the earlier examples: how volatile ensures ordering

- 4 Postscript

Source Address: https://github.com/nieandsun/concurrent-study.git

1 How volatile ensures visibility

The previous article, "[Concurrent Programming] - Visibility, atomicity, and ordering problems in concurrent programming", reproduced the problem in which a change made by one thread to a shared variable is invisible to another thread. As you probably know, one way to solve it is to add the volatile keyword when declaring the shared variable.

So what is the underlying principle?

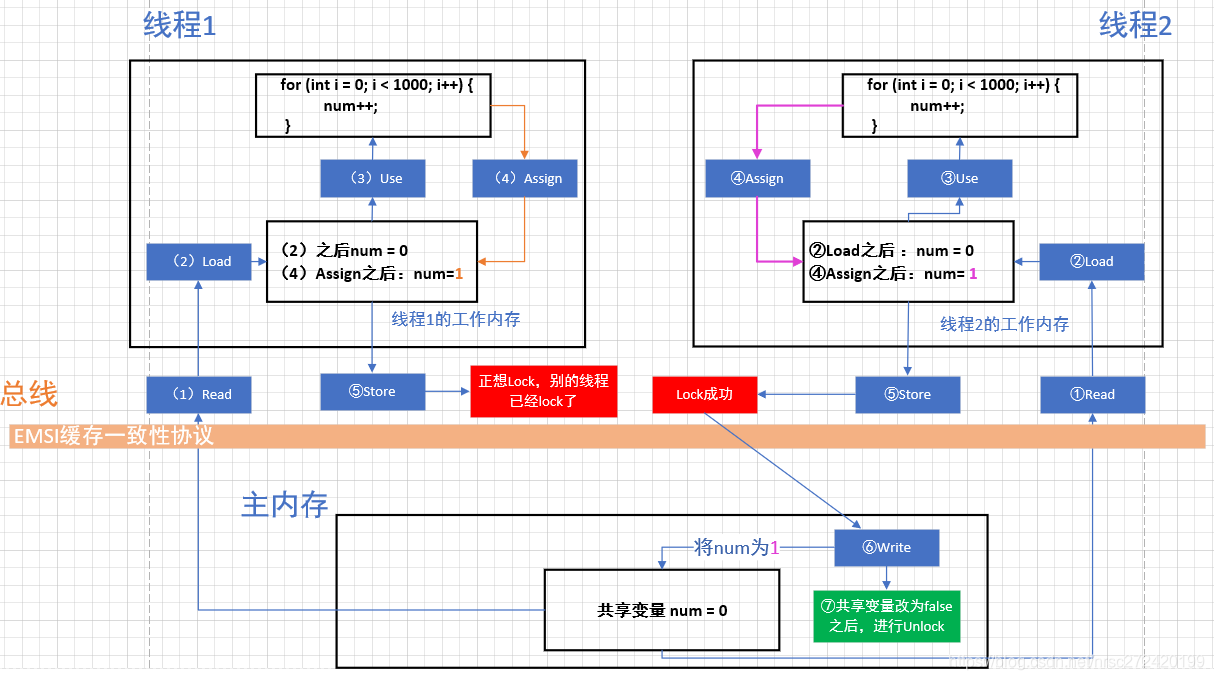

First, be aware that without the volatile keyword, even if thread 2 has modified the value of the shared variable, thread 1 keeps using its own copy of the shared variable (without re-synchronizing with main memory at any point). Thread 1 therefore cannot perceive thread 2's modification of the shared variable (see Figure 1 above).

At this point you are surely thinking: if only thread 2 could notify thread 1 directly after modifying the shared variable.

The volatile keyword does exactly this. Its implementation principle can be explained by the following figure:

Note: several readers have told me that the CPU does not actually have to use the bus. I am not sure about this point, but based on the material I have read and my own understanding, even if the bus is not used, the underlying principle is the same.

In fact, there is a bus between main memory and each thread. volatile can ensure visibility between threads because of three key points:

- (1) After thread 2 modifies its working-memory copy of the shared variable, it performs a lock operation on the bus before writing the value back to main memory.

- (2) Every thread monitors the bus through the bus snooping mechanism. Once a thread detects a lock operation on a variable of which it holds a copy in its own working memory, it invalidates that copy and re-reads the value from main memory.

Of course, these two points alone are not enough. Thread 1 might detect that the shared variable has changed and immediately pull the data from main memory at a moment when thread 2 has not yet written the modified variable back. Thread 1 would then still get the previous value, which is the same as not noticing thread 2's modification at all, so visibility between threads would not be guaranteed. Hence a third key point:

- (3) Until thread 2 has written the modified variable back to main memory, reads of that shared variable from main memory by other threads are blocked. After thread 2 has written the variable back, an unlock is performed and the other threads can read it. Of course, this takes very little time.

Based on the above three points, volatile can guarantee visibility between threads.

By the way, the above is only the theory. The concrete implementation uses the Lock prefix instruction of assembly language.
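The three points above can be observed from Java with a very small experiment. The following is only a sketch: the class name `VolatileVisibilityDemo` and the method `workerStopsWithin` are made up for illustration, and the 50 ms warm-up is an arbitrary assumption. The worker plays the role of thread 1, and the caller plays the role of thread 2.

```java
import java.util.concurrent.TimeUnit;

/** Hypothetical demo: a spinning worker notices a volatile flag set by another thread. */
final class VolatileVisibilityDemo {
    // Declared volatile so that the write by one thread becomes visible to the other.
    // Without volatile, the worker is allowed to keep using a stale value and may spin forever.
    private static volatile boolean stop = false;

    /** Returns true if the worker observed the flag and exited within timeoutMillis. */
    static boolean workerStopsWithin(long timeoutMillis) {
        stop = false;
        Thread worker = new Thread(() -> {
            while (!stop) {
                // busy-wait: every iteration re-reads the volatile flag
            }
        });
        worker.setDaemon(true); // do not keep the JVM alive if the worker never exits
        worker.start();
        try {
            TimeUnit.MILLISECONDS.sleep(50); // give the worker time to enter its loop
            stop = true;                      // the "thread 2" write
            worker.join(timeoutMillis);       // wait for the "thread 1" reader to notice
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + workerStopsWithin(2000));
    }
}
```

If you remove the volatile modifier and run it again, the worker may never stop on some JVMs. That behaviour is allowed but not guaranteed, so treat it as an experiment rather than a proof.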

2 Why volatile cannot guarantee atomicity

For the example, refer to the second example in the previous article, "[Concurrent Programming] - Visibility, atomicity, and ordering problems in concurrent programming", in which two threads increment a shared variable.

The reason volatile cannot guarantee atomicity can be explained with the following figure:

As shown, suppose thread 1 and thread 2 both execute num++ in a loop. When thread 1 performs one iteration of num++, it goes through assign -> store -> and then tries to lock. If at that moment it suddenly snoops that thread 2 has performed a lock operation on the shared variable num, thread 1 has to pull the up-to-date value of num from main memory and then start a new iteration. In other words, thread 1 has effectively wasted one iteration. So even with volatile, the atomicity of a multi-threaded program cannot be guaranteed.
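A quick way to see the lost increments is to count with a volatile int and with an AtomicInteger side by side. This is a minimal sketch, not the example from the previous article. The class name `VolatileAtomicityDemo` and the method `run` are hypothetical, and AtomicInteger is used here only as a contrast.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical demo: num++ on a volatile field can lose updates, AtomicInteger cannot. */
final class VolatileAtomicityDemo {
    private static volatile int volatileNum = 0;
    private static final AtomicInteger atomicNum = new AtomicInteger();

    /** Two threads each increment both counters perThread times; returns {volatileTotal, atomicTotal}. */
    static int[] run(int perThread) {
        volatileNum = 0;
        atomicNum.set(0);
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                volatileNum++;               // read, add, write back: three steps, not atomic
                atomicNum.incrementAndGet(); // a single atomic read-modify-write
            }
        };
        Thread t1 = new Thread(task);
        Thread t2 = new Thread(task);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] {volatileNum, atomicNum.get()};
    }

    public static void main(String[] args) {
        int[] totals = run(100_000);
        System.out.println("volatile total = " + totals[0] + ", atomic total = " + totals[1]);
    }
}
```

The atomic total is always 2 * perThread. The volatile total is usually smaller, although how much smaller varies from run to run.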

3 Why volatile can guarantee ordering

The previous article, "[Concurrent Programming] - Visibility, atomicity, and ordering problems in concurrent programming", reproduced the ordering problems that arise between multiple threads because of code reordering, and described the benefits and the types of reordering.

But imagine that the code of every thread could be reordered in any way at all. How many cases would we programmers then have to consider in order to write code that behaves as we intend? -> This would seriously increase the burden on programmers! -> Therefore it became imperative to specify that reordering is allowed in some cases and absolutely forbidden in others.

Before looking at why volatile can guarantee ordering in the multi-threaded case, let's first look at when reordering is forbidden in the single-threaded case.

3.1 The single-threaded rule that prohibits reordering: as-if-serial

The code we write behaves as we intend in the single-threaded case because of a rule called as-if-serial. As-if-serial literally means "as if executed serially": our code appears to execute line by line from top to bottom, as though no reordering had taken place.

The as-if-serial semantics are: no matter how the compiler and the CPU reorder, the result of a single-threaded program must remain correct.

Operations with the following data dependencies cannot be reordered.

- Read after write:

int a = 1;

int b = a;

- Write after write:

int a = 1;

a = 2;

- Write after read:

int a = 1;

int b = a;

a = 2;

The compiler and the processor must not reorder operations that have a data dependency between them. In fact, this is the concrete rule behind as-if-serial, because such a reordering would change the result. However, if there is no data dependency between operations, the compiler and the processor may reorder them. For example:

int a = 1;

int b = 2;

int c = a + b;

There is a data dependency between a and c, and also between b and c. Therefore, in the final instruction sequence, c cannot be reordered ahead of a or b. But there is no data dependency between a and b, so the compiler and the processor may reorder the execution of a and b. The following are the two possible execution orders of the program:

It can run like this:

int a = 1;

int b = 2;

int c = a + b;

Or it can be reordered like this:

int b = 2;

int a = 1;

int c = a + b;

As the example shows, the as-if-serial rule protects single-threaded programs. A compiler, runtime, and processor that obey as-if-serial semantics give us the impression that a single-threaded program executes line by line in the order in which it was written. -> So we do not have to worry about reordering in single-threaded programs.
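To make the point concrete, the two execution orders can simply be written out by hand and compared. This is only an illustration: real reordering is done by the compiler and the CPU and cannot be observed from inside a single thread, and the class and method names below are made up.

```java
/** Hypothetical illustration: both legal orders of the a/b/c example give the same result. */
final class AsIfSerialDemo {
    /** The order in which the program was written. */
    static int writtenOrder() {
        int a = 1;
        int b = 2;
        int c = a + b; // depends on both a and b, so it must stay last
        return c;
    }

    /** A reordering that swaps the two independent assignments. */
    static int reorderedOrder() {
        int b = 2;
        int a = 1;
        int c = a + b; // still last, so the data dependencies are respected
        return c;
    }

    public static void main(String[] args) {
        System.out.println(writtenOrder() + " " + reorderedOrder()); // prints "3 3"
    }
}
```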

However, the compiler and the processor do not take into account data dependencies between different processors or between different threads. -> This is why reordering causes ordering problems in multi-threaded programs.

3.1 The multi-threaded rule that prohibits reordering: happens-before

3.1.1 Definition of happens-before and further understanding

The happens-before rule is really a rule about memory visibility between multiple operations. It is defined as follows:

happens-before describes the memory visibility between operations. In the JMM, if the result of one operation needs to be visible to another operation, a happens-before relationship must exist between the two operations.

However, a happens-before relationship between two operations does not mean that the first operation must actually execute before the second! happens-before only requires that the result of the first operation is visible to the second, and that the first operation is ordered before the second (the first is visible to and ordered before the second).

Further understanding:

The definition above looks contradictory, but it is simply stated from two different points of view.

- (1) From the Java programmer's point of view: the JMM guarantees that if one operation happens-before another, the result of the first operation is visible to the second, and the first operation is ordered before the second.

- (2) From the point of view of compilers and processors: the JMM allows that, when a happens-before relationship exists between two operations, a concrete implementation of the Java platform does not have to execute them in the order the happens-before relationship specifies. If the result after reordering is the same as the result of executing in happens-before order, the reordering is allowed.

3.1.2 Details of the happens-before rules

After reading 3.1.1, I guess many readers are still confused. What on earth does it all mean?

After thinking about it for a long time, I feel it is easiest to understand from the perspective of prohibiting reordering.

So let's look at the concrete happens-before rules, together with an interpretation of each rule in terms of which reorderings it prohibits:

- (1) Program order rule (single-thread rule): each operation in a thread happens-before any subsequent operation in that thread.

This is really another way of stating the as-if-serial rule: in the single-threaded case, the compiler and the processor must not reorder operations that have a data dependency.

- (2) Monitor lock rule: unlocking a lock happens-before every subsequent locking of the same lock.

In other words, a thread must wait until another thread has released the lock before it can acquire it -> the two operations cannot be reordered.

- (3) volatile variable rule: a write to a volatile field happens-before any subsequent read of that volatile field.

See 3.1.2.1.

- (4) Transitivity: if A happens-before B, and B happens-before C, then A happens-before C.

Not much to say about this one.

- (5) start() rule: if thread A executes ThreadB.start() (starting thread B), then thread A's ThreadB.start() operation happens-before any operation in thread B.

In other words, the operations executed by thread B must occur after thread B has been started -> the two cannot be swapped, that is, they cannot be reordered.

- (6) join() rule: if thread A executes ThreadB.join() and it returns successfully, then any operation in thread B happens-before thread A's successful return from ThreadB.join().

In other words, the code after ThreadB.join() must run after thread B has finished -> the two cannot be reordered. See another of my articles, "[Concurrent Programming] - The join method of the Thread class".

- (7) Thread interruption rule: a call to a thread's interrupt method happens-before the code in the interrupted thread detects that the interrupt has occurred.

In other words, only after an interrupt has been issued for a thread can that thread perceive the interrupt request -> the two cannot be reordered. See another of my articles, "[Concurrent Programming] - interrupt, interrupted, and isInterrupted explained in detail".

I believe that reading each happens-before rule together with the explanation beneath it makes the rules much easier to understand. You may also feel that many of these rules state the obvious, especially (2), (4), (5), (6), and (7). -> But what we call causality has to be spelled out before a computer can understand it -> and it is precisely because of these rules that the code we write executes the way we intend.
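Rules (5) and (6) are easy to try out. In the sketch below, both fields are deliberately plain (not volatile and not guarded by a lock), so the only things that make the values visible across threads are the start() rule and the join() rule. The class name `StartJoinDemo` and the method `doubleInOtherThread` are hypothetical.

```java
/** Hypothetical demo: plain fields handed across threads using only start() and join(). */
final class StartJoinDemo {
    private static int input;  // written by the caller before start()
    private static int output; // written by thread B, read by the caller after join()

    static int doubleInOtherThread(int value) {
        input = value; // happens-before everything in thread B (start() rule)
        Thread b = new Thread(() -> output = input * 2);
        b.start();
        try {
            b.join(); // everything in thread B happens-before this return (join() rule)
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1; // hypothetical error value for this sketch
        }
        return output;
    }

    public static void main(String[] args) {
        System.out.println(doubleInOtherThread(21)); // prints 42
    }
}
```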

3.1.2.1 The volatile variable rule, and why volatile can guarantee ordering ★★★

I suspect that from the description of the volatile variable rule in 3.1.2 alone, you cannot make head or tail of it.

So let's start directly from the reordering rules for volatile variables.

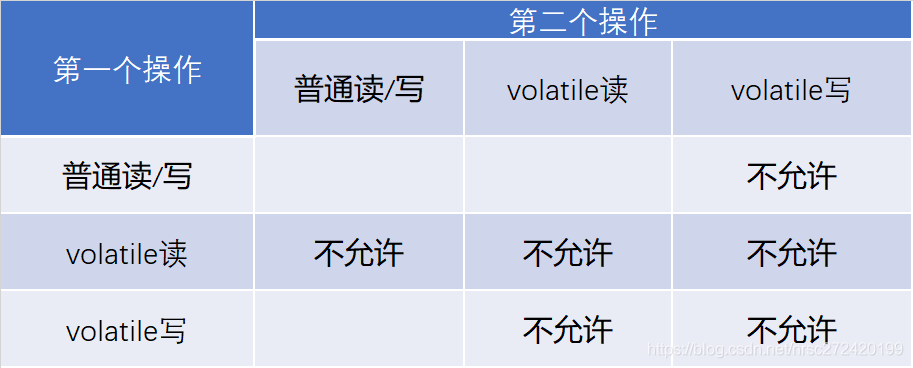

The reordering rules for volatile variables are shown in the following table:

To sum up:

- (1) When the second operation is a volatile write, no reordering is allowed, no matter what the first operation is. This rule ensures that operations before a volatile write are not reordered by the compiler to after the volatile write.

- (2) When the first operation is a volatile read, no reordering is allowed, no matter what the second operation is. This rule ensures that operations after a volatile read are not reordered by the compiler to before the volatile read.

- (3) When the first operation is a volatile write and the second operation is a volatile read, no reordering is allowed.

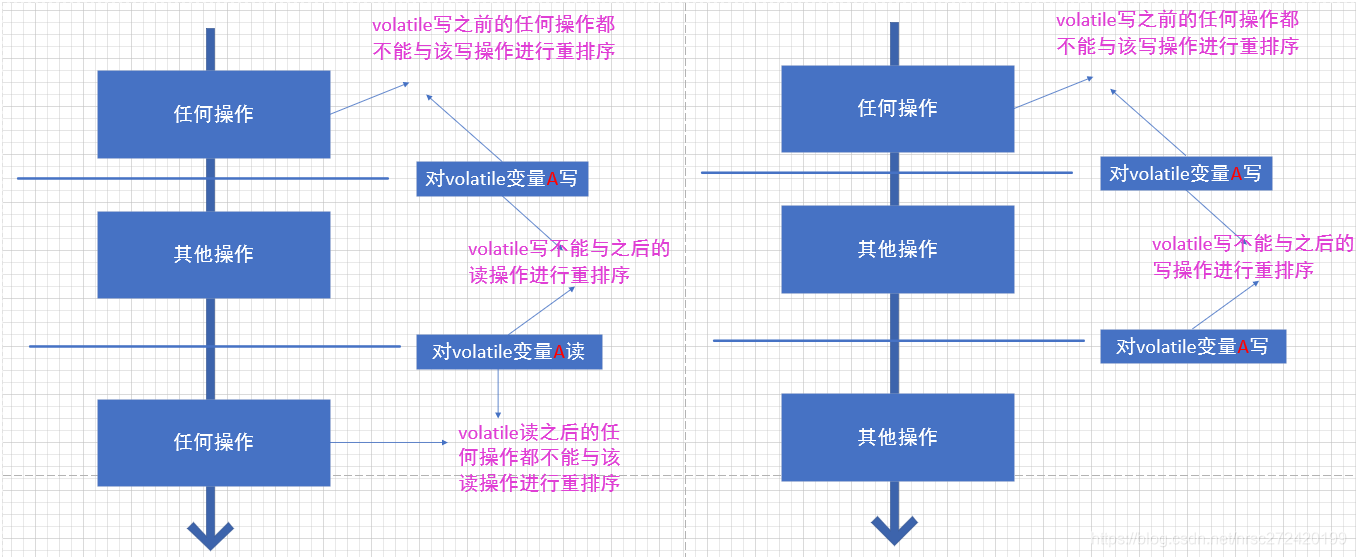

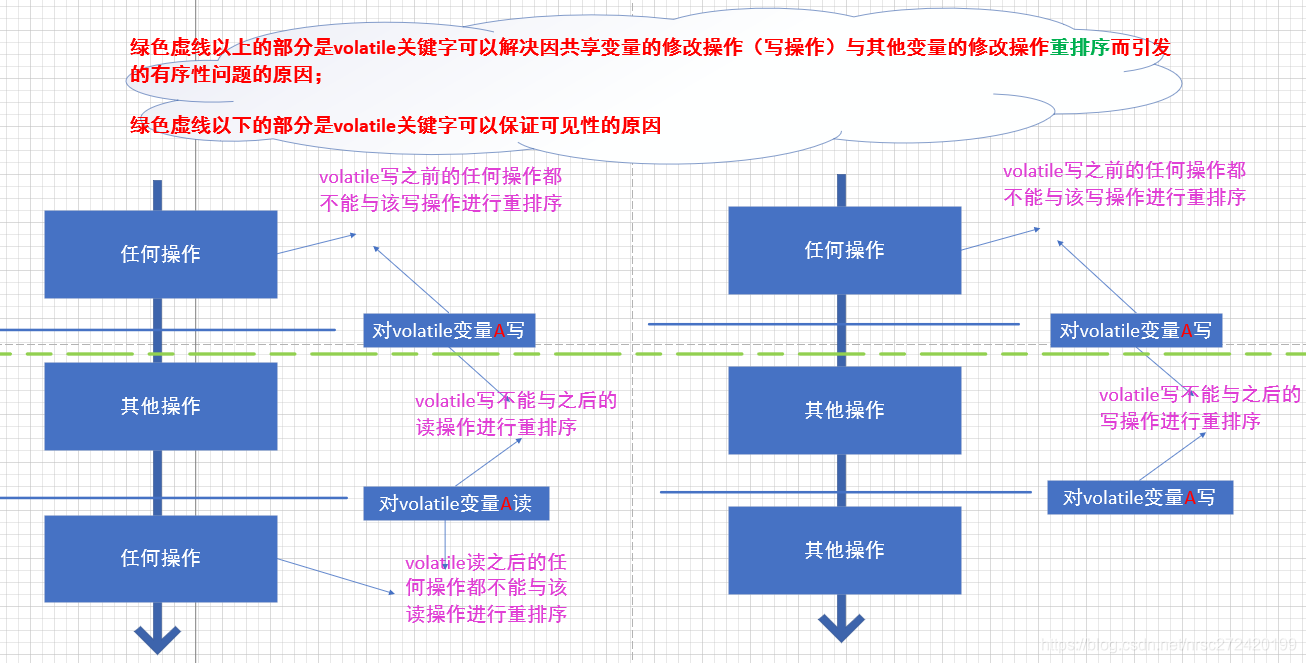

In fact, the rules above can be expressed by the following figure:

From this figure you can also draw a conclusion: as long as something was written before the write to the volatile shared variable, then whatever comes after the read of that variable, whether a read or a write, can perceive what was written earlier ->

In fact, this angle also explains why volatile can guarantee visibility between threads ★★★

Now let's analyze, purely in theory, why volatile can guarantee ordering:

Looking at the two examples from the previous article, the ordering problem appears because the modification (or write) of other variables was reordered with the modification of the target shared variable. Once the target shared variable is declared volatile, modifications of other variables can no longer be reordered with the modification of the volatile target variable. So the problem of the run-time result being inconsistent with the code we wrote, because of reordering, no longer appears.

In fact, you can carry out the same analysis using the annotations I drew on the diagram above: ★★★

3.1.2.2 The underlying principle (or theory) of how volatile prohibits reordering: memory barriers

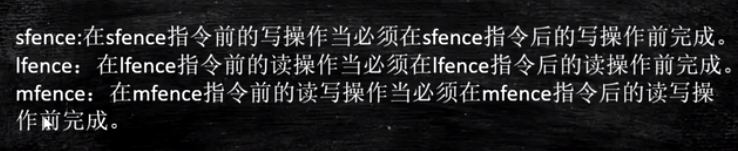

For volatile variables, when the Java compiler generates bytecode, it inserts memory barriers into the instruction sequence to prohibit particular types of processor reordering.

There are four types of memory barrier:

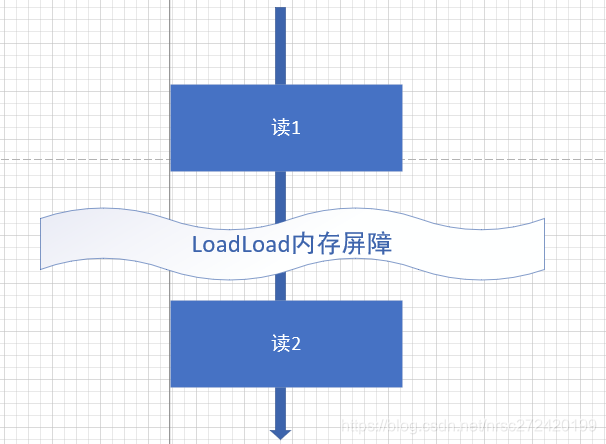

So what on earth is a memory barrier? Take the LoadLoad barrier as an example; the following diagram helps to understand it:

If a LoadLoad memory barrier is inserted between read 1 and read 2, then read 1 and read 2 can no longer be reordered. -> This is the so-called memory barrier.

For the volatile keyword, the following barriers are inserted according to the specification (apparently every source says so):

Before each volatile write, insert a StoreStore barrier; after the write, insert a StoreLoad barrier.

After each volatile read, insert a LoadLoad barrier and a LoadStore barrier.
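The barrier placement described above can be sketched with the static fence methods of java.lang.invoke.VarHandle (available since Java 9). This is only an illustration of where the barriers sit and not how the JVM actually compiles volatile. The class `FenceSketch` and its methods are hypothetical, and because VarHandle has no dedicated StoreLoad or LoadStore fence, the nearest stronger fences are used as an assumption. In real code, simply declare the field volatile.

```java
import java.lang.invoke.VarHandle;

/** Hypothetical sketch: plain fields plus explicit fences where volatile would put barriers. */
final class FenceSketch {
    private static int data;      // ordinary field
    private static boolean ready; // plain on purpose; the fences stand in for volatile

    static void publish(int value) {
        data = value;
        VarHandle.storeStoreFence(); // StoreStore: the write to data stays before the write to ready
        ready = true;                // plays the role of the volatile write
        VarHandle.fullFence();       // a full fence includes the StoreLoad effect
    }

    static int consume() {
        boolean seen = ready;        // plays the role of the volatile read
        VarHandle.acquireFence();    // acquire covers LoadLoad and LoadStore after the read
        return seen ? data : -1;     // -1 is a made-up "not ready yet" marker
    }

    public static void main(String[] args) {
        publish(7);
        System.out.println(consume()); // prints 7 when run in a single thread like this
    }
}
```

As a side note, VarHandle.releaseFence() orders earlier loads as well as earlier stores against later stores (LoadStore plus StoreStore), so it is the fence to look at if you want both reads and writes before the "volatile write" to stay in front of it.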

Actually, I have a question here. Section 3.1.2.1 above says:

When the second operation is a volatile write, no reordering is allowed, no matter what the first operation is. This rule ensures that operations before a volatile write are not reordered by the compiler to after the volatile write.

But if only a StoreStore barrier is inserted before a volatile write, doesn't that only guarantee that the volatile write cannot be reordered with the writes before it? How does it guarantee that the reads before the volatile write cannot be reordered with it? -> Drawn as follows:

Your comments are welcome!

One more thing: the processors we usually use are x86, which do not actually need so many barrier instructions; only StoreLoad is needed.

3.1.2.3 The concrete implementation of volatile: the lock prefix instruction

The following content comes from an open-class video:

By analyzing unsafe.cpp in the OpenJDK source code, you will find that variables modified with the volatile keyword carry a "lock:" prefix.

The Lock prefix instruction is not a memory barrier, but it can accomplish something similar to a memory barrier. Lock locks the CPU bus and the cache, and can be understood as a lock at the CPU instruction level. -> In other words, what really implements the memory-barrier function is the Lock instruction!

Meanwhile, this instruction writes the data in the current processor's cache line directly back to system memory, and this write-back invalidates the data cached for that address in other CPUs.

In the concrete implementation, it first locks the bus and the cache, then executes the subsequent instruction, and finally, after releasing the lock, flushes all dirty data in the cache back to main memory. While Lock holds the bus, read and write requests from other CPUs are blocked until the lock is released.

In fact, the two paragraphs above say the same thing as my analysis in Section 1 of this article.

One more thing: today I watched another open class, which said that on Windows the underlying implementation of volatile is the lock prefix instruction,

while on Linux it is accomplished by the following three system-level instructions:

How exactly this works, your comments are welcome!

3.2 Revisiting the earlier examples: how volatile ensures ordering

Example 1 from the previous article:

@Outcome(id = {"0, 1", "1, 0", "1, 1"}, expect = ACCEPTABLE, desc = "ok")

@Outcome(id = "0, 0", expect = ACCEPTABLE_INTERESTING, desc = "danger")

@State

public class OrderProblem2 {

int x, y;

/****

* Code executed by thread 1

* @param r

*/

@Actor

public void actor1(II_Result r) {

x = 1;

r.r2 = y;

}

/****

* Code executed by thread 2

* @param r

*/

@Actor

public void actor2(II_Result r) {

y = 1;

r.r1 = x;

}

}

r.r1 and r.r2 can both be 0 because the statements in thread 1 and thread 2 may have been reordered: thread 1 happens to execute r.r2 = y first, and thread 2 then executes r.r1 = x. At that moment neither x nor y has been assigned yet, so both are still 0, and r.r1 and r.r2 both end up as 0.

Now suppose the shared variables x and y are declared volatile. Then in each thread a volatile write (x = 1 or y = 1) is followed by a volatile read (of y or x), and by rule (3) in 3.1.2.1 a volatile write followed by a volatile read cannot be reordered. This guarantees that r.r1 and r.r2 cannot both be 0 -> which solves the problem, in this case, of the run-time result being inconsistent with the code we wrote because of reordering.
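Here is a plain-Java variant of Example 1 that does not need JCStress, assuming the shared variables x and y themselves are declared volatile. It is a rough sketch rather than a proper stress test: the class name `VolatileOrderDemo` and the method `countBothZero` are made up, and a handful of rounds with freshly started threads explores far fewer interleavings than JCStress does.

```java
/** Hypothetical demo: with volatile x and y, the outcome (0, 0) must never appear. */
final class VolatileOrderDemo {
    private static volatile int x, y;
    private static int r1, r2; // plain result fields, only read after join()

    /** Runs both actors `rounds` times and returns how often r1 and r2 were both 0. */
    static int countBothZero(int rounds) {
        int bothZero = 0;
        for (int i = 0; i < rounds; i++) {
            x = 0;
            y = 0;
            Thread t1 = new Thread(() -> { x = 1; r2 = y; }); // actor1: volatile write, then volatile read
            Thread t2 = new Thread(() -> { y = 1; r1 = x; }); // actor2: volatile write, then volatile read
            t1.start();
            t2.start();
            try {
                t1.join();
                t2.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return -1; // hypothetical error value for this sketch
            }
            if (r1 == 0 && r2 == 0) {
                bothZero++;
            }
        }
        return bothZero;
    }

    public static void main(String[] args) {
        System.out.println("rounds with (0, 0): " + countBothZero(2000));
    }
}
```

If you remove volatile from x and y, (0, 0) becomes a legal outcome again, although this simple loop may or may not actually catch it.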

Example 2 from the previous article is the following code. If you are interested, analyze it yourself.

@Outcome(id = {"1", "4"}, expect = Expect.ACCEPTABLE, desc = "ok")

@Outcome(id = "0", expect = Expect.ACCEPTABLE_INTERESTING, desc = "danger")

@State

public class OrderProblem1 {

int num = 0;

boolean ready = false;

/***

* Code executed by thread 1

* @param r

*/

@Actor

public void actor1(I_Result r) {

if (ready) {

r.r1 = num + num;

} else {

r.r1 = 1;

}

}

/***

* Code executed by thread 2

* @param r

*/

@Actor

public void actor2(I_Result r) {

num = 2;

ready = true;

}

}
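If you want to check your analysis, the following plain-Java sketch mirrors Example 2 with ready declared volatile. It is my own assumption about the fix, not code from the repository, and the class name `VolatileReadyDemo` and the method `sawZero` are hypothetical. With volatile ready, the write num = 2 cannot be reordered after ready = true, and the read of num cannot be reordered before the read of ready, so the result is 1 or 4 but never 0.

```java
/** Hypothetical demo: with a volatile ready flag, the "danger" outcome 0 must never appear. */
final class VolatileReadyDemo {
    private static int num;                // plain field, published through ready
    private static volatile boolean ready; // volatile flag
    private static int r1;                 // plain result field, only read after join()

    /** Runs both actors `rounds` times and reports whether the result 0 was ever observed. */
    static boolean sawZero(int rounds) {
        for (int i = 0; i < rounds; i++) {
            num = 0;
            ready = false;
            Thread t1 = new Thread(() -> {
                if (ready) {        // volatile read
                    r1 = num + num; // ready was true, so num = 2 is visible here
                } else {
                    r1 = 1;
                }
            });
            Thread t2 = new Thread(() -> {
                num = 2;      // ordinary write, stays before the volatile write
                ready = true; // volatile write
            });
            t1.start();
            t2.start();
            try {
                t1.join();
                t2.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
            if (r1 == 0) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("saw 0: " + sawZero(2000));
    }
}
```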

4 Postscript

I read a lot of material and watched many open-class videos while writing this article. However, no single source or video convinced me completely on its own, so this article combines the views of many sources and videos and, of course, contains a lot of my own understanding.

Dear readers, if you find anything wrong, you are very welcome to point it out to me!