1. Introduction

The volatile keyword guarantees visibility and ordering across threads, but it does not guarantee atomicity. At the CPU level volatile is implemented with cache locking, which you do not need to manage at the Java level; to make compound updates thread-safe you still need to add CAS.

Although volatile alone cannot guarantee thread safety, it fits the case where one thread writes and multiple threads read: every read returns the current value in memory rather than a stale copy held in a register.
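The one-writer, many-readers case can be sketched as follows. This is a minimal illustration, not a benchmark; the class name `SingleWriterDemo`, the field `latest`, and the thread counts are assumptions made for the example. Because only one thread ever writes, no update can be lost, and each reader only ever sees values that move forward.

```java
import java.util.ArrayList;
import java.util.List;

class SingleWriterDemo {
    // Only the writer thread modifies this field, so volatile alone is enough here.
    private static volatile int latest = 0;

    /** Runs one writer and several readers; returns true if no reader saw a value go backwards. */
    static boolean run(int readers, int updates) throws InterruptedException {
        latest = 0;
        boolean[] monotonic = new boolean[readers];
        List<Thread> threads = new ArrayList<>();
        for (int r = 0; r < readers; r++) {
            final int id = r;
            Thread t = new Thread(() -> {
                int previous = 0;
                boolean ok = true;
                int seen;
                do {
                    seen = latest;            // volatile read: never a stale private copy
                    ok &= seen >= previous;   // values must never move backwards
                    previous = seen;
                } while (seen < updates);
                monotonic[id] = ok;
            });
            threads.add(t);
            t.start();
        }
        Thread writer = new Thread(() -> {
            for (int i = 1; i <= updates; i++) {
                latest = i;                   // single writer: no lost updates are possible
            }
        });
        writer.start();
        writer.join();
        for (Thread t : threads) {
            t.join();
        }
        for (boolean ok : monotonic) {
            if (!ok) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("readers saw consistent values: " + run(3, 100_000));
    }
}
```

If a second writer were added, the plain assignment would still be visible, but any read-modify-write such as `latest++` would no longer be safe.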

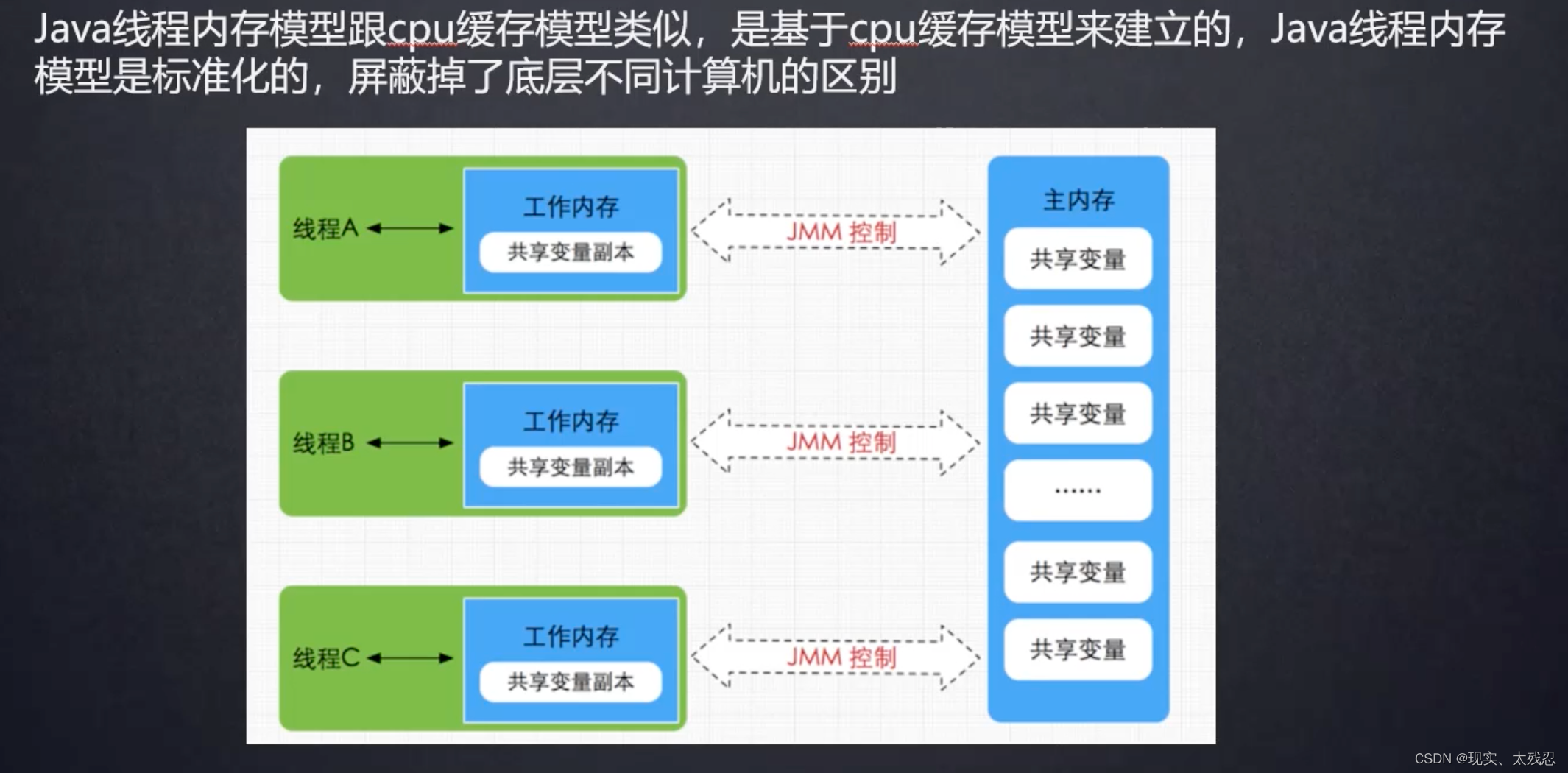

2. JMM memory model

JMM stands for Java Memory Model.

Because memory access behaves differently across hardware vendors and operating systems, the same code can run into different problems on different systems. The Java memory model (JMM) therefore hides the memory access differences of the various hardware and operating systems, so that Java programs get consistent concurrency behavior on every platform.

The JMM memory model is shown in the figure:

Each Java thread has its own working memory. Before a thread can operate on a variable, it must load that variable from main memory into its own working memory.

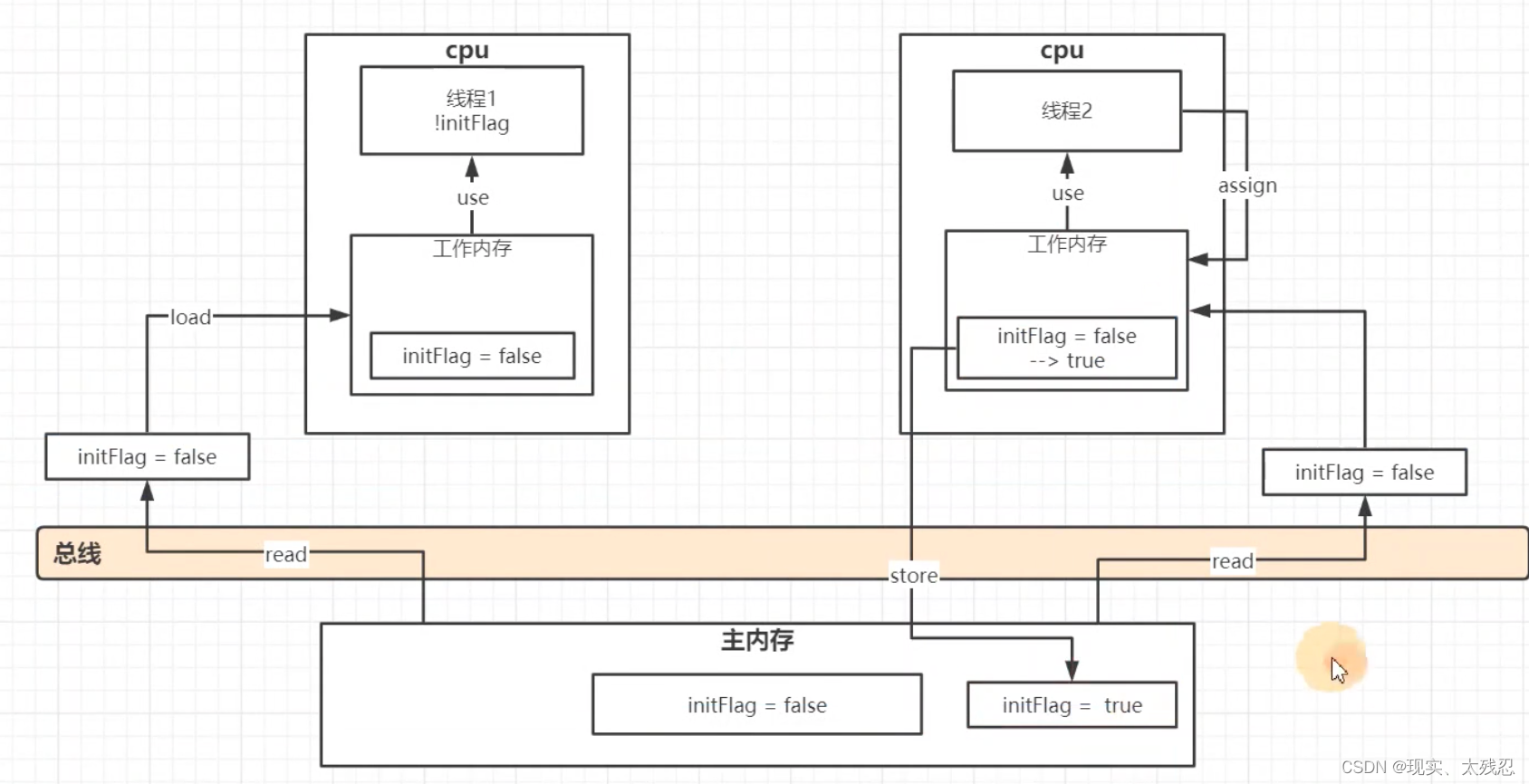

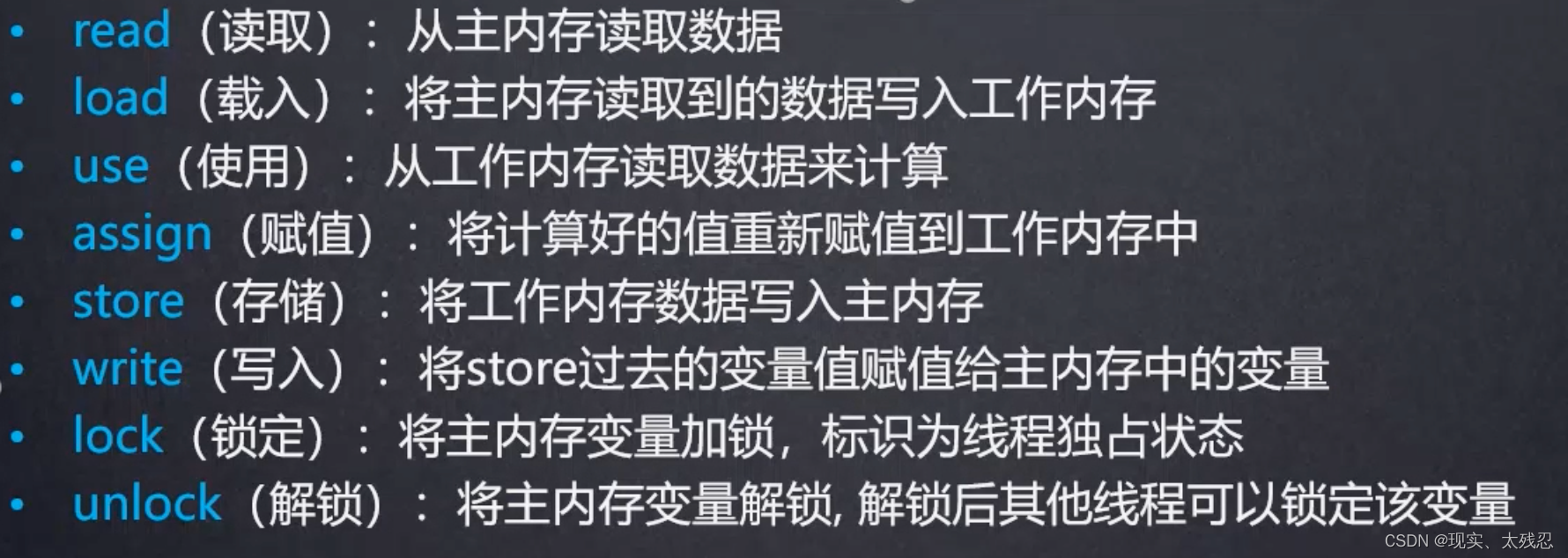

JMM atomic operations

3. Memory barrier

There are two types of memory barriers at the hardware layer: Load Barrier and Store Barrier, namely read barriers and write barriers.

The memory barrier has two functions:

- Prevent reordering of instructions on either side of the barrier.

- Force dirty data in the write buffer/cache to be written back to main memory, or invalidate the corresponding data in the cache.

For a Load Barrier, inserting it before an instruction invalidates the data in the cache and forces the data to be reloaded from main memory. For a Store Barrier, inserting it after an instruction writes the latest updates in the cache back to main memory and makes them visible to other threads.

Memory barriers in Java

The four types usually cited, LoadLoad, StoreStore, LoadStore, and StoreLoad, are combinations of the two barriers above. Together they provide the ordering and data synchronization functions.

- LoadLoad barrier: For the sequence Load1; LoadLoad; Load2, the data read by Load1 is guaranteed to be loaded before the data read by Load2 and all subsequent read operations is accessed.

- StoreStore barrier: For the sequence Store1; StoreStore; Store2, the write of Store1 is guaranteed to be visible to other processors before Store2 and all subsequent write operations are executed.

- LoadStore barrier: For the sequence Load1; LoadStore; Store2, the data read by Load1 is guaranteed to be fully loaded before Store2 and all subsequent write operations are flushed.

- StoreLoad barrier: For the sequence Store1; StoreLoad; Load2, the write of Store1 is guaranteed to be visible to all processors before Load2 and all subsequent read operations are executed. Its overhead is the largest of the four barriers. In most processor implementations, this barrier is a general-purpose barrier that also provides the function of the other three.
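Since Java 9, `java.lang.invoke.VarHandle` exposes static fence methods that correspond roughly to these barriers. The sketch below places one fence in each of the four sequences described above. The class `FenceSketch` and the fields `a` and `b` are hypothetical. `VarHandle` has no standalone LoadStore or StoreLoad fence, so the example assumes the nearest stronger fence instead: `acquireFence()` (LoadLoad plus LoadStore) and `fullFence()` (all four).

```java
import java.lang.invoke.VarHandle;

class FenceSketch {
    static int a; // plain (non-volatile) fields, hypothetical names
    static int b;

    /** Load1; LoadLoad; Load2 */
    static int loadLoad() {
        int first = a;
        VarHandle.loadLoadFence();   // loads above are not reordered with loads below
        int second = b;
        return first + second;
    }

    /** Store1; StoreStore; Store2 */
    static void storeStore(int x, int y) {
        a = x;
        VarHandle.storeStoreFence(); // stores above are not reordered with stores below
        b = y;
    }

    /** Load1; LoadStore; Store2 (acquireFence is stronger: LoadLoad + LoadStore) */
    static void loadStore() {
        int first = a;
        VarHandle.acquireFence();    // loads above are not reordered with loads/stores below
        b = first + 1;
    }

    /** Store1; StoreLoad; Load2 (only fullFence covers StoreLoad) */
    static int storeLoad(int x) {
        a = x;
        VarHandle.fullFence();       // nothing above is reordered with anything below
        return b;
    }

    public static void main(String[] args) {
        storeStore(1, 2);
        System.out.println("loadLoad  -> " + loadLoad());
        loadStore();
        System.out.println("storeLoad -> " + storeLoad(10));
    }
}
```

Run on a single thread, the fences have no observable effect; they only matter when another thread reads or writes `a` and `b` concurrently. In ordinary application code, a volatile field is usually the simpler choice.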

4. The role of the volatile keyword

1. Guarantee visibility in concurrent programs (atomicity is not guaranteed)

With a single thread, the Java memory model causes no problems when data is modified and read. With multiple threads, however, a thread may read stale data, so shared variables of this kind need to be declared volatile. Every time a thread uses a volatile variable, it reads the latest value of that variable.
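The "atomicity is not guaranteed" caveat can be seen with a shared counter. In the sketch below (the class `CounterDemo` and its field names are assumptions for illustration), several threads increment both a volatile `int` and an `AtomicInteger`. The volatile counter may lose updates because `++` is a read, an add, and a write; the atomic counter, which relies on CAS-style hardware instructions, never does.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CounterDemo {
    private static volatile int volatileCount = 0;                        // visible, but ++ is not atomic
    private static final AtomicInteger atomicCount = new AtomicInteger(); // atomic read-modify-write

    /** Returns {volatile result, atomic result} after each thread increments perThread times. */
    static int[] run(int threads, int perThread) throws InterruptedException {
        volatileCount = 0;
        atomicCount.set(0);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    volatileCount++;               // three steps that can interleave across threads
                    atomicCount.incrementAndGet(); // one indivisible update
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return new int[] { volatileCount, atomicCount.get() };
    }

    public static void main(String[] args) throws InterruptedException {
        int[] result = run(4, 100_000);
        System.out.println("expected: " + (4 * 100_000));
        System.out.println("volatile: " + result[0] + " (may be lower)");
        System.out.println("atomic:   " + result[1]);
    }
}
```

The volatile result varies from run to run and may occasionally match the expected total; the atomic result always does.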

How visibility is implemented

The underlying implementation relies mainly on the lock prefix on the generated assembly instructions, which locks the cache holding this memory region (cache line locking) and writes the data back to main memory.

The Intel 64 and IA-32 Architectures Software Developer's Manual explains the lock instruction:

- It immediately writes the data in the current processor's cache line back to system memory.

- This write-back invalidates the data cached at this memory address in other CPUs (MESI protocol).

- It acts as a memory barrier, so instructions before and after the lock cannot be reordered across it.

View the disassembly code

-server -Xcomp -XX:+UnlockDiagnosticVMOptions -XX:+PrintAssembly -XX:CompileCommand=compileonly,*VolatileVisibilityTest.prepareData
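The options above name a class `VolatileVisibilityTest` with a method `prepareData`, but the class itself is not shown. The following is one guess at what such a test might look like; the field `initFlag`, the `run` helper, and the timings are assumptions, not the original code. The volatile store inside `prepareData` is the instruction whose lock prefix you would look for in the printed assembly.

```java
class VolatileVisibilityTest {
    // Hypothetical flag; without volatile the waiting loop may never observe the change.
    private static volatile boolean initFlag = false;

    /** The method selected by the CompileCommand filter; it performs the volatile store. */
    static void prepareData() {
        initFlag = true;
    }

    /** Returns true if the waiting thread noticed the flag within timeoutMillis. */
    static boolean run(long timeoutMillis) throws InterruptedException {
        initFlag = false;
        Thread waiter = new Thread(() -> {
            while (!initFlag) {       // volatile read on every iteration
                Thread.onSpinWait();
            }
        });
        waiter.setDaemon(true);       // do not keep the JVM alive if the loop never ends
        waiter.start();
        Thread.sleep(50);             // give the waiter time to start spinning
        prepareData();
        waiter.join(timeoutMillis);
        return !waiter.isAlive();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("waiter saw the update: " + run(2_000));
    }
}
```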

2. Prevent instruction reordering

Instructions are not necessarily executed in the order in which we write them. To improve execution efficiency, the JVM (and the CPU) may reorder instructions. Reordering has no visible effect in a single thread, but in multi-threaded code a changed order can produce incorrect results. Variables whose access order must be preserved can be declared volatile.

Typical example: the lazy, double-checked locking implementation of the singleton pattern

Reference: Singleton mode singleton of java design pattern_Reality, Too Cruel Blog-CSDN Blog
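A minimal sketch of double-checked locking is shown here; the class name `LazySingleton` is an assumption. Without volatile, the assignment to `instance` could in principle be reordered ahead of the constructor's writes, so another thread might see a non-null reference to a partially initialized object.

```java
class LazySingleton {
    // volatile forbids publishing the reference before the constructor has finished.
    private static volatile LazySingleton instance;

    private LazySingleton() {
    }

    static LazySingleton getInstance() {
        LazySingleton local = instance;          // first check, without the lock
        if (local == null) {
            synchronized (LazySingleton.class) {
                local = instance;                // second check, under the lock
                if (local == null) {
                    local = new LazySingleton();
                    instance = local;            // volatile write publishes the finished object
                }
            }
        }
        return local;
    }

    public static void main(String[] args) {
        System.out.println(getInstance() == getInstance()); // prints true
    }
}
```

Reading the field into `local` once keeps the fast path down to a single volatile read.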

Instruction reordering follows two principles

as-if-serial principle

No matter how instructions are reordered (by the compiler and the processor, to improve parallelism), the execution result of a single-threaded program must not change.

happens-before principle

The happens-before relationship is the main basis for judging whether a data race exists and whether code is thread-safe. It also constrains instruction reordering and ensures visibility under multi-threading.

Reads and writes of a volatile variable establish a happens-before relationship: a write to a volatile variable happens-before every subsequent read of that variable.
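The relationship established by a volatile variable also covers ordinary writes made before it. In this sketch (the class `HappensBeforeDemo` and the fields `data` and `ready` are illustrative assumptions), the plain write to `data` comes before the volatile write to `ready` in program order, so a thread that reads `ready == true` is guaranteed to see `data == 42` as well.

```java
class HappensBeforeDemo {
    private static int data = 0;                   // plain field
    private static volatile boolean ready = false; // volatile flag

    /** Returns the value of data that the reader thread observed after seeing ready == true. */
    static int run() throws InterruptedException {
        data = 0;
        ready = false;
        int[] observed = new int[1];
        Thread reader = new Thread(() -> {
            while (!ready) {          // volatile read
                Thread.onSpinWait();
            }
            observed[0] = data;       // ordered after the volatile read, so it sees the write below
        });
        reader.start();
        data = 42;                    // (1) plain write
        ready = true;                 // (2) volatile write; (1) happens-before (2)
        reader.join();
        return observed[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("reader observed data = " + run()); // prints 42
    }
}
```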

The difference between as-if-serial and happens-before

- The as-if-serial semantics guarantee that the execution result of the program in a single thread is not changed, and the happens-before relationship ensures that the execution result of a correctly synchronized multi-threaded program is not changed.

- The as-if-serial semantics create an illusion for programmers writing single-threaded programs: single-threaded programs are executed in the order of the program. The happens-before relationship creates an illusion for programmers who write correctly synchronized multithreaded programs: correctly synchronized multithreaded programs are executed in the order specified by happens-before.

- The purpose of as-if-serial semantics and happens-before is to improve the parallelism of program execution as much as possible without changing the program execution results.

How instruction reordering is prevented

The JMM's conservative strategy inserts the following barriers around every access to a volatile variable A:

- A StoreStore barrier before each volatile write, so that all ordinary writes made earlier by the current thread are flushed and visible to other threads before the write to A.

- A StoreLoad barrier after each volatile write, so that the write to A is visible to other threads before the current thread performs any later volatile read or write.

- A LoadLoad barrier after each volatile read, so that later reads cannot be reordered ahead of the read of A and all threads observe the same value.

- A LoadStore barrier after each volatile read, so that later writes cannot be reordered ahead of the read of A.
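The conservative barrier placement usually described for volatile accesses can be annotated on a small class. The barriers are not written in Java source; the comments below only mark where a JIT compiler would conceptually place them. The class `BarrierPlacement` and its fields are assumptions. Real JVMs may emit fewer barriers than shown; on x86, for example, only StoreLoad needs an actual instruction.

```java
class BarrierPlacement {
    private int a;          // plain field
    private volatile int v; // volatile field

    void writer() {
        a = 1;
        // [StoreStore] earlier plain stores are committed before the volatile store
        v = 2;
        // [StoreLoad]  the volatile store is not reordered with later volatile loads or stores
    }

    int reader() {
        int seen = v;
        // [LoadLoad]   later loads are not moved above the volatile load
        // [LoadStore]  later stores are not moved above the volatile load
        return seen == 2 ? a : -1; // if the volatile write was seen, a == 1 is visible too
    }

    public static void main(String[] args) {
        BarrierPlacement p = new BarrierPlacement();
        System.out.println("before writer: " + p.reader());
        p.writer();
        System.out.println("after writer:  " + p.reader());
    }
}
```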