If you are interested in learning more about it, please visit my personal website: Yetong Space

One: Basic concepts

Using synchronized for thread synchronization means taking a lock, and it is a form of pessimistic locking. The lock allows only one thread at a time to execute a piece of code. This improves thread safety, but it costs execution performance.
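As a minimal sketch of what "only one thread at a time" means in practice, the following counter guards its state with synchronized methods. The class name `SynchronizedCounterSketch` and the helper `runDemo` are made up for this illustration. Which internal lock state the JVM uses while this runs is up to the JVM and is not visible from the code.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example class: a counter protected by the instance monitor.
class SynchronizedCounterSketch {
    private int count = 0;

    // Only one thread at a time can run a synchronized method on the same instance.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }

    // Starts `threads` workers that each call increment() `perThread` times.
    static int runDemo(int threads, int perThread) {
        SynchronizedCounterSketch counter = new SynchronizedCounterSketch();
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread worker = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers.add(worker);
            worker.start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        // No increment is lost, so this prints 40000.
        System.out.println(runDemo(4, 10_000));
    }
}
```

Without the synchronized keyword on `increment`, the same run could lose updates, because `count++` is a read, an add and a write rather than one atomic step.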

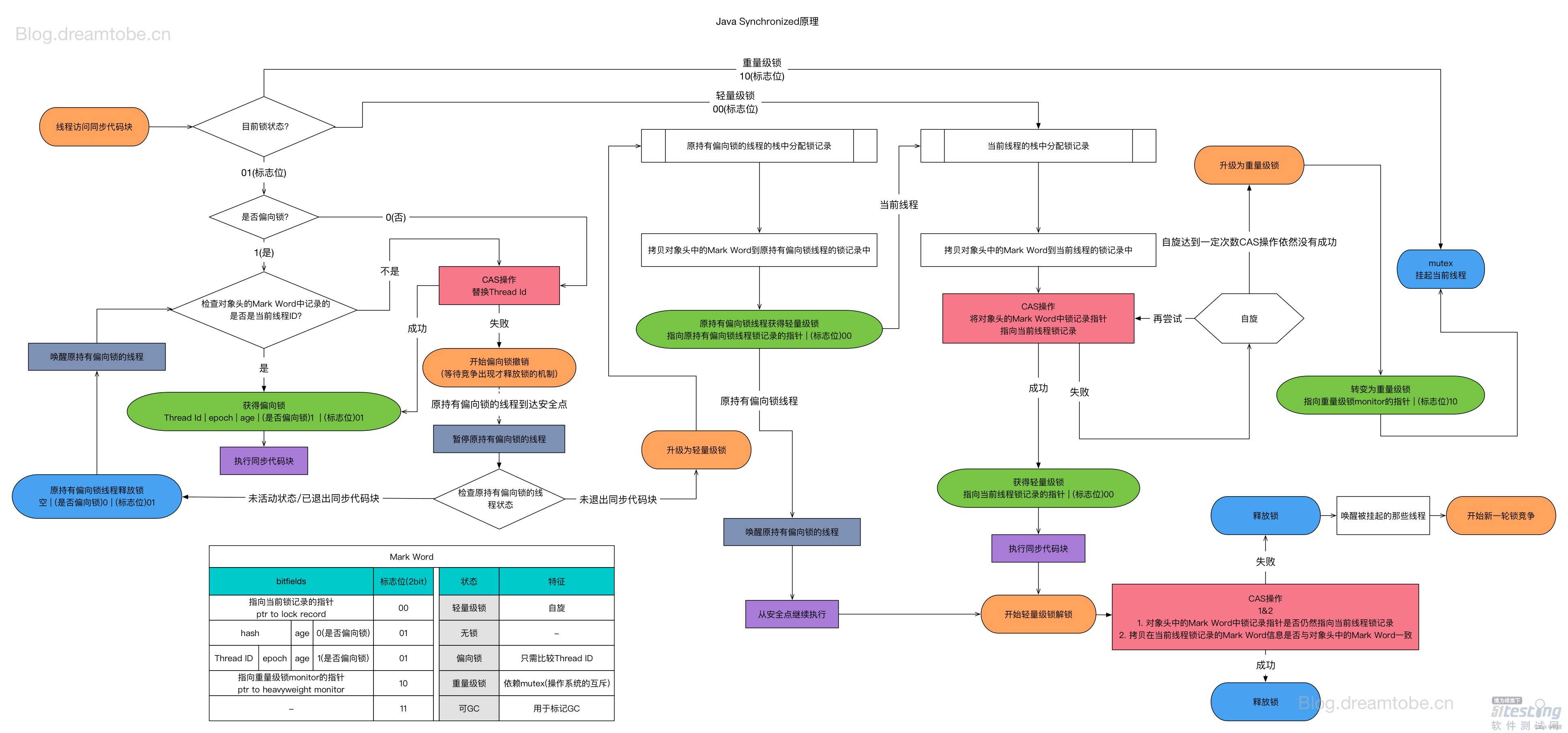

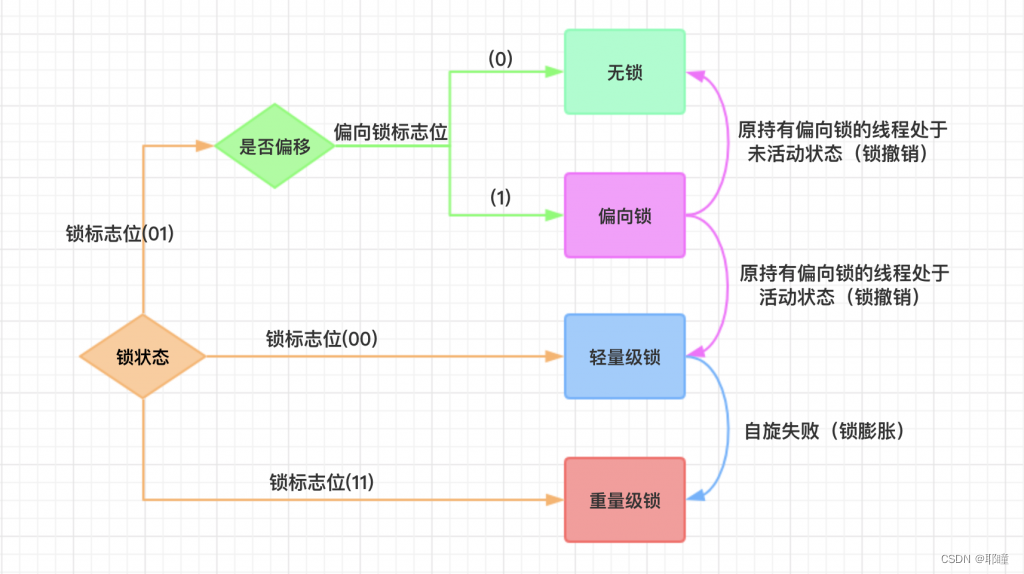

To reduce the performance cost of acquiring and releasing locks, JDK 6 introduced "biased locks" and "lightweight locks". As a result, a lock in Java can be in one of four states, from lowest to highest: the lock-free state, the biased lock state, the lightweight lock state, and the heavyweight lock state. The state escalates gradually as competition increases. Locks can be upgraded but not downgraded, which means that once a biased lock has been upgraded to a lightweight lock, it cannot go back to being a biased lock. The purpose of this upgrade-only strategy is to improve the efficiency of acquiring and releasing locks.

Lock competition: if several threads acquire a lock in turn, and every acquisition succeeds without blocking, there is no lock competition. Lock competition occurs only when a thread tries to acquire a lock, finds that it is already held, and has to wait for it to be released.

Two: Lock-free

Lock-free means that the resource is not locked: all threads can access the same resource and try to modify it, but only one of them can succeed in modifying it at any one time.

The defining feature of lock-free code is that the modification is performed in a loop, in which the thread keeps trying to modify the shared resource. If there is no conflict, the modification succeeds and the loop exits; otherwise the loop continues. If several threads modify the same value, exactly one of them succeeds in each round, and the threads that failed keep retrying until their own modification succeeds.
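The retry loop described above can be sketched with `AtomicInteger.compareAndSet` from the JDK. The class `CasCounterSketch` and its methods are names invented for this example; in real code `AtomicInteger.addAndGet` already does this for you.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example class: a lock-free counter built on a CAS retry loop.
class CasCounterSketch {
    private final AtomicInteger value = new AtomicInteger(0);

    // Read the current value, compute the new one, and publish it with CAS.
    // If another thread changed the value in between, the CAS fails and we retry.
    int add(int delta) {
        while (true) {
            int current = value.get();
            int next = current + delta;
            if (value.compareAndSet(current, next)) {
                return next; // no conflict: modification succeeded, exit the loop
            }
        }
    }

    int get() {
        return value.get();
    }

    // Starts `threads` workers that each add 1 to the counter `perThread` times.
    static int runDemo(int threads, int perThread) {
        CasCounterSketch counter = new CasCounterSketch();
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread worker = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.add(1);
                }
            });
            workers.add(worker);
            worker.start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runDemo(4, 10_000)); // prints 40000
    }
}
```

No thread ever blocks here: a thread that loses the race simply loops and tries again with the fresh value.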

Three: Biased lock

A biased lock, as the name suggests, is biased towards the first thread that acquires it. If only one thread ever uses the synchronized lock at run time, and there is no contention from other threads, that thread does not need to perform real synchronization, so the lock is biased towards it. When the thread reaches the synchronized block a second time, it checks whether the thread the lock is biased towards is itself, and if so it simply carries on executing. Because the bias was never released, there is no need to lock again. If a single thread uses the lock from beginning to end, a biased lock adds almost no overhead and performance is very high.

The acquisition process of biased locks:

- Check the biased-lock flag and the lock flag in the Mark Word. If the biased-lock flag is 1 and the lock flag is 01, the lock is biasable.

- If it is biasable, test whether the thread ID in the Mark Word is the current thread's ID. If it is, execute the synchronized code directly; otherwise go to the next step.

- The current thread competes for the lock with a CAS operation. If the CAS succeeds, the thread ID in the Mark Word is set to the current thread's ID and the synchronized code is executed. If the CAS fails, go to the next step.

- A failed CAS means that there is competition. When the global safepoint is reached, the thread that holds the biased lock is suspended and the biased lock is upgraded to a lightweight lock. The thread that was blocked at the safepoint then continues executing the synchronized code.
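The decision logic of the steps above can be modelled with a toy Mark Word held in an `AtomicLong`. This is only a sketch under loud assumptions: the bit layout (three low flag bits, thread ID above them), the class `BiasedLockSketch`, the `Outcome` enum, and the rule that the CAS can only succeed while no thread ID is recorded yet are all simplifications invented for this example, not HotSpot's implementation. Thread IDs passed in must be non-zero, since 0 stands for "not yet biased to anyone".

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical toy model of the biased-lock acquisition steps (not JVM code).
class BiasedLockSketch {
    enum Outcome { NOT_BIASABLE, ALREADY_BIASED_TO_ME, BIAS_ACQUIRED, REVOCATION_NEEDED }

    // Toy layout (assumption): bit 2 = biased-lock flag, bits 1..0 = lock flag.
    static final long FLAG_MASK = 0b111L;
    static final long BIASABLE_PATTERN = 0b101L; // biased-lock flag 1, lock flag 01
    static final int THREAD_ID_SHIFT = 3;        // thread ID stored above the flags

    // Starts biasable with no thread ID recorded.
    final AtomicLong markWord = new AtomicLong(BIASABLE_PATTERN);

    Outcome tryAcquire(long threadId) {
        long mark = markWord.get();

        // Step 1: is the lock biasable (biased-lock flag 1, lock flag 01)?
        if ((mark & FLAG_MASK) != BIASABLE_PATTERN) {
            return Outcome.NOT_BIASABLE;
        }

        // Step 2: is it already biased towards the current thread?
        long owner = mark >>> THREAD_ID_SHIFT;
        if (owner == threadId) {
            return Outcome.ALREADY_BIASED_TO_ME;
        }

        // Step 3: compete with CAS; in this toy model it can only win
        // while no other thread ID has been recorded.
        if (owner == 0) {
            long biasedToMe = (threadId << THREAD_ID_SHIFT) | BIASABLE_PATTERN;
            if (markWord.compareAndSet(mark, biasedToMe)) {
                return Outcome.BIAS_ACQUIRED;
            }
        }

        // Step 4: competition detected; a real JVM would revoke the bias
        // at a safepoint and upgrade to a lightweight lock.
        return Outcome.REVOCATION_NEEDED;
    }
}
```

For example, on a fresh `BiasedLockSketch`, `tryAcquire(1)` returns `BIAS_ACQUIRED`, a second `tryAcquire(1)` returns `ALREADY_BIASED_TO_ME`, and `tryAcquire(2)` returns `REVOCATION_NEEDED`.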

If another thread tries to take the lock while it is biased, the thread holding the biased lock is suspended, and the JVM revokes the bias and restores the lock to a standard lightweight lock. Revoking a biased lock causes an STW (stop-the-world) pause.

The release process of biased locks: a thread never releases a biased lock on its own initiative; the bias is revoked only when another thread tries to compete for the lock. Revocation has to wait for a global safepoint (a point at which no bytecode is being executed). The thread that owns the biased lock is suspended, and after revocation the lock returns to either the unlocked state or the lightweight lock state.

Four: Lightweight lock

In the lightweight lock state, if lock competition continues, a thread that failed to grab the lock spins: it loops continuously, checking whether it can now acquire the lock. Spinning for a long time is expensive. While one thread holds the lock, the other threads burn CPU in place without doing any useful work, which is called busy-waiting. If the competition is heavy, a thread that reaches the maximum number of spins upgrades the lightweight lock to a heavyweight lock.

Lock spin: if the thread holding the lock can release it within a short time, the threads waiting for the lock do not need to switch between kernel mode and user mode to enter a blocked, suspended state. They only need to wait briefly (spin), and they can acquire the lock as soon as the holder releases it, which avoids the cost of switching between user threads and the kernel.
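To make spinning and the "maximum number of spins" concrete, here is a minimal user-level spin lock. It is a sketch, not the JVM's internal mechanism: `SpinLockSketch`, `tryLock(int maxSpins)` and `runDemo` are hypothetical names, the lock is deliberately non-reentrant, and `Thread.onSpinWait()` requires Java 9 or later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical example class: a non-reentrant spin lock built on CAS.
class SpinLockSketch {
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    // Busy-wait until the CAS from "no owner" to "me" succeeds.
    void lock() {
        Thread me = Thread.currentThread();
        while (!owner.compareAndSet(null, me)) {
            Thread.onSpinWait(); // hint to the CPU that we are spinning (Java 9+)
        }
    }

    // Spin at most maxSpins times; false means the spin budget ran out,
    // which is the point where a real JVM would fall back to blocking.
    boolean tryLock(int maxSpins) {
        Thread me = Thread.currentThread();
        for (int i = 0; i < maxSpins; i++) {
            if (owner.compareAndSet(null, me)) {
                return true;
            }
            Thread.onSpinWait();
        }
        return false;
    }

    // Only the owning thread can clear the owner field.
    void unlock() {
        owner.compareAndSet(Thread.currentThread(), null);
    }

    // Starts `threads` workers that increment a shared counter under the spin lock.
    static int runDemo(int threads, int perThread) {
        SpinLockSketch lock = new SpinLockSketch();
        int[] counter = {0};
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread worker = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    lock.lock();
                    try {
                        counter[0]++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers.add(worker);
            worker.start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
        return counter[0];
    }
}
```

The critical section here is a single increment, which is the situation where spinning pays off. With a long critical section, the waiting threads would waste CPU for the whole duration.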

The locking process of lightweight locks:

- When a thread enters the synchronized block and the Mark Word is in the lock-free state, the virtual machine first creates a space called the Lock Record in the current thread's stack frame. It is used to store a copy of the object's current Mark Word, officially called the "Displaced Mark Word".

- Copy the Mark Word in the object header to the Lock Record.

- After the copy succeeds, the virtual machine uses a CAS operation to try to update the object's Mark Word to a pointer to the Lock Record, and points the owner pointer in the Lock Record to the object's Mark Word. If the update succeeds, continue with the fourth step; otherwise go to the fifth step.

- If the update succeeds, the thread owns the lock, and the lock flag is set to 00, indicating that the object is in the lightweight lock state.

- If the update fails, the virtual machine checks whether the object's Mark Word points into the stack frame of the current thread. If it does, the current thread already owns the lock and can go ahead and execute the synchronized code. Otherwise, multiple threads are competing, and the lightweight lock inflates into a heavyweight lock: the Mark Word stores a pointer to the heavyweight lock (a mutex), and threads waiting for the lock enter the blocked state.
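The locking steps above can be mimicked with a toy model in which an `AtomicReference` plays the role of the Mark Word and a small object plays the role of the Lock Record. Everything here is an assumption made for illustration: the class `LightweightLockSketch`, the `LockRecord` type, the `Outcome` enum and the helper `tryLockFromNewThread` do not exist in the JVM, and a real Lock Record lives in the thread's stack frame rather than on the heap.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical toy model of lightweight locking (not JVM code).
class LightweightLockSketch {
    // Stand-in for the Lock Record that holds the Displaced Mark Word.
    static final class LockRecord {
        final Thread ownerThread;
        final Object displacedMarkWord;

        LockRecord(Thread ownerThread, Object displacedMarkWord) {
            this.ownerThread = ownerThread;
            this.displacedMarkWord = displacedMarkWord;
        }
    }

    enum Outcome { ACQUIRED, REENTERED, INFLATE_TO_HEAVYWEIGHT }

    // Sentinel standing for a Mark Word in the lock-free state.
    static final Object UNLOCKED_MARK = new Object();

    final AtomicReference<Object> markWord = new AtomicReference<>(UNLOCKED_MARK);

    Outcome tryLock() {
        Thread me = Thread.currentThread();
        Object mark = markWord.get();
        if (mark == UNLOCKED_MARK) {
            // Steps 1 and 2: create the Lock Record and copy the Mark Word into it.
            LockRecord record = new LockRecord(me, mark);
            // Steps 3 and 4: CAS the Mark Word to point at the Lock Record.
            if (markWord.compareAndSet(mark, record)) {
                return Outcome.ACQUIRED;
            }
            mark = markWord.get();
        }
        // Step 5: does the Mark Word already point at a record of this thread?
        if (mark instanceof LockRecord && ((LockRecord) mark).ownerThread == me) {
            return Outcome.REENTERED;
        }
        // Another thread holds the lock: a real JVM would inflate it here.
        return Outcome.INFLATE_TO_HEAVYWEIGHT;
    }

    // Restores the Displaced Mark Word; only the owning thread can do this.
    boolean unlock() {
        Object mark = markWord.get();
        if (mark instanceof LockRecord
                && ((LockRecord) mark).ownerThread == Thread.currentThread()) {
            return markWord.compareAndSet(mark, ((LockRecord) mark).displacedMarkWord);
        }
        return false;
    }

    // Demo helper: calls tryLock() on a fresh thread and returns what it saw.
    // Meant to be used while the calling thread holds the lock.
    Outcome tryLockFromNewThread() {
        Outcome[] result = new Outcome[1];
        Thread other = new Thread(() -> result[0] = tryLock());
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return result[0];
    }
}
```

In this model the owning thread sees `ACQUIRED` on its first `tryLock()` and `REENTERED` on the second, while `tryLockFromNewThread()` called in between reports `INFLATE_TO_HEAVYWEIGHT`.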

Five: Heavyweight lock

When a thread tries to acquire a lock and finds that the lock is held as a heavyweight lock, it suspends itself immediately and waits to be woken up later. Before JDK 1.6, synchronized always used a heavyweight lock directly; the lock states described above are the optimization that replaced this behavior.

The characteristics of heavyweight locks: other threads that try to acquire the lock are blocked, and they are woken up only after the thread holding the lock releases it.
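The blocking behaviour is visible from Java code through `Thread.getState()`: a thread waiting to enter a synchronized block held by another thread reports `BLOCKED`. The sketch below only shows that observable effect. It does not prove which internal lock state the JVM is using, and `BlockedStateSketch` and `observeContendedState` are names invented for the example.

```java
// Hypothetical example class: observes a thread blocked on a held monitor.
class BlockedStateSketch {
    // Returns the state observed for a thread that waits on a monitor
    // while the calling thread holds it.
    static Thread.State observeContendedState() {
        final Object monitor = new Object();
        Thread waiter = new Thread(() -> {
            synchronized (monitor) {
                // Reached only after the holder has released the monitor.
            }
        });

        Thread.State observed;
        synchronized (monitor) {
            waiter.start();
            // Poll until the waiter is blocked trying to enter the monitor.
            while (waiter.getState() != Thread.State.BLOCKED) {
                Thread.yield();
            }
            observed = waiter.getState();
        } // Releasing the monitor lets the waiter wake up and proceed.

        try {
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return observed;
    }

    public static void main(String[] args) {
        System.out.println(observeContendedState()); // prints BLOCKED
    }
}
```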

Six: Summary

| Lock | Advantages | Disadvantages | Applicable scenarios |
|---|---|---|---|
| Biased lock | Locking and unlocking need no extra work; there is only a nanosecond-level gap compared with executing a non-synchronized method | If threads compete for the lock, revoking the bias adds extra cost | Only one thread ever accesses the synchronized block |
| Lightweight lock | Competing threads are not blocked, which improves the program's response time | A competing thread that never gets the lock keeps spinning and consumes CPU | Response time matters most; the synchronized block executes very quickly |
| Heavyweight lock | Competing threads do not spin, so they do not consume CPU | Threads are blocked, so response time is slow | Throughput matters most; the synchronized block takes longer to execute |