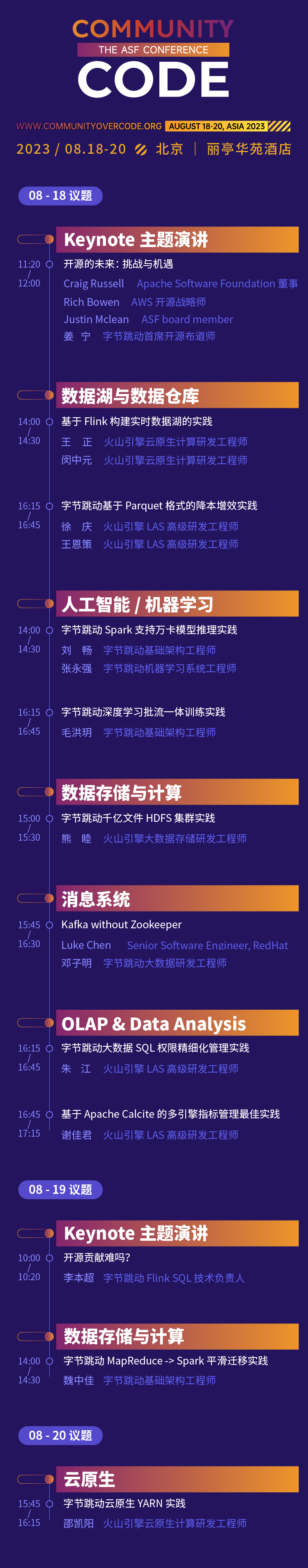

August 18-20, 2023, Park Plaza Hotel, Beijing

CommunityOverCode Asia (formerly ApacheCon Asia), the official global conference series of the Apache Software Foundation, will be held for the first time in China on August 18-20, 2023 at the Park Plaza Hotel in Beijing. The conference will cover 17 forum tracks and hundreds of cutting-edge topics.

ByteDance's open source journey has gone through the stages of "using open source, participating in open source, and actively open-sourcing its own projects," and its attitude towards open source has always been open and encouraging. At this CommunityOverCode Asia summit, 15 ByteDance engineers will present 10 talks across 6 topics on their practical experience with Apache open source projects in ByteDance's business. In addition, an Apache Calcite PMC Member will give a keynote speech, sharing his experience and lessons from contributing to open source with on-site attendees.

Keynote Speech: Is it difficult to contribute to open source?

Many developers have probably thought about contributing to open source to improve their technical skills and influence. But there is usually a gap between the ideal and reality: work is too busy to leave any time; the barrier to entry for open source projects seems too high and it is unclear how to get started; or a few contributions were attempted, the community response was lukewarm, and the effort was abandoned. In this keynote, Li Benchao will draw on his own experience to share short stories and reflections on contributing to the open source community: how to overcome these difficulties, eventually achieve a breakthrough in the community, and strike a balance between work and open source contribution.

Li Benchao

ByteDance Flink SQL Technical Director

Apache Calcite PMC Member, Apache Flink Committer, graduated from Peking University, currently works in the ByteDance streaming computing team, and is the technical leader of Flink SQL.

Topic Talks

Topic: Data Lakes and Data Warehouses

The practice of building a real-time data lake based on Flink

Real-time data lakes are a core component of modern data architecture, allowing enterprises to analyze and query large amounts of data in real time. In this talk, we will first introduce the current pain points of real-time data lakes, such as demanding requirements for data timeliness, diversity, consistency, and accuracy. We will then explain how we built a real-time data lake based on Flink and Iceberg, in two parts: how to ingest data into the lake in real time, and how to use Flink for OLAP ad hoc queries. Finally, we will present the practical benefits ByteDance has gained from its real-time data lake.

Wang Zheng

Volcano Engine Cloud Native Computing R&D Engineer

Joined ByteDance in 2021 and works in the infrastructure open platform team, mainly responsible for the research and development of Serverless Flink and other directions.

Min Zhongyuan

Volcano Engine Cloud Native Computing R&D Engineer

Joined ByteDance in 2021 and works in the infrastructure open platform team, mainly responsible for the research and development of Serverless Flink, Flink OLAP and other directions.

ByteDance’s practice of cost reduction and efficiency improvement based on Parquet format

ByteDance's offline data warehouse stores data in Parquet format by default. In production, however, we ran into problems such as too many small files and high data storage costs. For the small-file problem, the usual solution is to read multiple small Parquet files through Spark and rewrite the data into one or more large files. For storage costs, the offline data warehouse currently offers only partition-level, row-level TTL solutions. To delete detailed field data that is no longer used but accounts for a large share of a partition (column-level TTL), you have to read the data through Spark and rewrite it with the fields to be deleted set to NULL. Both small-file merging and column-level TTL therefore involve a large number of rewrite operations on Parquet data files. Because Parquet has its own encoding rules, reading and writing it requires (de)serialization, (de)compression, and encoding/decoding, and these steps are CPU-intensive and consume a large amount of computing resources. To make rewriting Parquet files more efficient, we studied the Parquet file format definition in depth and used a binary copy method to optimize the rewrite, skipping the redundant encoding and decoding steps of an ordinary rewrite. Compared with the traditional approach, rewriting is more than 10 times faster. To improve ease of use, we also provide new SQL syntax so that users can conveniently perform operations such as small-file merging and column-level TTL.

Xu Qing

Volcano Engine LAS Senior R&D Engineer

He has been engaged in the research and development of big data related components such as Hive Metastore, SparkSQL, Hudi and so on for many years.

Wang Ence

Volcano Engine LAS Senior R&D Engineer

Responsible for the design and development of ByteDance's big data distributed computing engine, helping the company mine high-value information from massive data.

Topic: Artificial Intelligence/Machine Learning

ByteDance deep learning batch-stream integrated training practice

As the company's business grows, algorithms keep getting more complex, and more and more models are exploring real-time training on top of offline updates to improve model quality. To orchestrate complex offline and real-time training flexibly, switch freely between them, and schedule offline computing resources over a wider range, machine learning model training is gradually converging on batch-stream integration. In this talk, we will share the architectural evolution of ByteDance's machine learning training scheduling framework, our batch-stream integration practice, heterogeneous elastic training, and more. We will focus on practical experience with hybrid orchestration of multiple stages and multiple data sources, global shuffle of streaming samples, end-to-end nativization, and training data insights in the MFTC (batch-stream integrated collaborative training) scenario.

Mao Hongyue

ByteDance Infrastructure Engineer

Joined ByteDance in 2022 and works on machine learning training research and development. He is mainly responsible for the large-scale cloud-native batch-stream integrated AI model training engine, which supports businesses including Douyin video recommendation, Toutiao recommendation, Pangolin advertising, and Qianchuan graphic advertising.

ByteDance's practice of supporting 10,000-GPU model inference with Spark

Liu Chang

ByteDance Infrastructure Engineer

Joined ByteDance in 2020 and works in the infrastructure batch computing team. He is mainly responsible for the development of Spark cloud native and Spark On Kubernetes.

Zhang Yongqiang

ByteDance Machine Learning System Engineer

Joined ByteDance in 2022, works on the AML machine learning system team, and participates in building a large-scale machine learning platform.

Topic: Data Storage and Computing

ByteDance MapReduce -> Spark smooth migration practice

As the business has grown, ByteDance now runs about 1.2 million Spark jobs online every day. By contrast, about 20,000 to 30,000 MapReduce tasks still run online every day. MapReduce is a batch processing framework with a long history, and from the perspective of big data platform development, operating and maintaining the engine raises a series of problems: for example, the ROI of iterating on the framework is low, and it adapts poorly to the new computing scheduling framework. Users face their own problems with the MapReduce engine: computing performance is poor, and additional pipeline tools are needed to manage jobs that run serially. Users want to migrate to Spark, but there are a large number of existing jobs, and many of them use scripts that Spark itself does not support. Against this background, the ByteDance Batch team designed and implemented a solution for smoothly migrating MapReduce tasks to Spark. With this solution, users can complete the migration by adding only a small number of parameters or environment variables to existing jobs, which greatly reduces migration costs and delivers good cost benefits.

Wei Zhongjia

ByteDance Infrastructure Engineer

He joined ByteDance in 2018 and is currently a big data development engineer of ByteDance infrastructure. He focuses on the field of big data distributed computing and is mainly responsible for the development of Spark kernel and the development of ByteDance's self-developed Shuffle Service.

ByteDance’s practice of running HDFS clusters with hundreds of billions of files

As big data technology matures, data scale and usage complexity keep growing, and Apache HDFS faces new challenges. At ByteDance, HDFS is the storage layer for the traditional Hadoop data warehouse business, the foundation for computing engines in the storage-compute separation architecture, and the storage foundation for machine learning model training. Within ByteDance, HDFS has built storage scheduling capabilities that serve large-scale computing resource scheduling across multiple regions and improve the stability of computing tasks. It also provides data identification and hot/cold scheduling capabilities that integrate user-side cache, conventional three-replica storage, and cold storage. This talk introduces how ByteDance understands the new requirements that emerging scenarios place on traditional big data storage, and how it supports system stability in different scenarios through technical evolution and the construction of an operation and maintenance system.

Xiong Mu

Volcano Engine Big Data Storage R&D Engineer

Mainly responsible for the evolution of HDFS metadata services for big data storage and for supporting the upper-layer computing ecosystem.

Topic: OLAP & Data Analysis

Best practices for multi-engine indicator management based on Apache Calcite

Topic introduction

There are various indicators in data analysis. When maintaining massive indicators, there are often the following pain points:

- Repeated SQL fragments cannot be reused

- Different engines require writing different SQL

- Changes to an indicator's definition (its statistical caliber) are difficult to propagate to all downstream consumers

In order to solve these problems, ByteDance has tried to use existing technical capabilities to design solutions:

- Storing indicators in Hive tables as much as possible: this greatly increases storage costs and backfill costs, so it is not feasible

- Encapsulating indicators in views: this generates additional table information in Hive, doubling the number of tables, and it supports partitioning poorly. The query experience is poor, so it is difficult to roll out

Because the current technology is not enough to solve the above problems, ByteDance designed and implemented two sets of new syntax capabilities based on Apache Calcite:

- Virtual column: a column-level view that reuses the table's column permissions and is easy to roll out

- SQL Define Function: defines functions directly in SQL, making it easy to reuse SQL fragments

The combination of these two capabilities can effectively reduce the cost of indicator management. For example:

- The indicator only needs to be modified once, and there is no need to modify it synchronously downstream.

- Fields in collection types such as MAP and JSON can be defined as virtual columns, making the logic clearer and more convenient to use.
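The idea behind virtual columns can be illustrated with a minimal Python sketch. This is not ByteDance's implementation and does not use Apache Calcite: the `VIRTUAL_COLUMNS` mapping, the column names, and the `expand_virtual_columns` helper are all hypothetical, and the sketch substitutes identifiers with a regular expression where a real system would rewrite the parsed SQL tree. It only shows why a definition that lives in one place needs no downstream changes when it is modified.

```python
import re
from typing import Dict

# Hypothetical virtual-column definitions: name -> SQL expression over
# physical columns. Changing an entry here changes every query that uses it.
VIRTUAL_COLUMNS: Dict[str, str] = {
    "city": "params['city']",           # a field pulled out of a MAP column
    "unit_price": "amount / quantity",  # a derived indicator
}

IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z_0-9]*")


def expand_virtual_columns(sql: str, virtual_columns: Dict[str, str]) -> str:
    """Replace each virtual-column identifier with its defining expression.

    Simplification (assumption): plain token substitution, so it would also
    rewrite a matching word inside a string literal. A real implementation
    would operate on the parsed query instead.
    """
    def substitute(match):
        name = match.group(0)
        expression = virtual_columns.get(name)
        # Unknown identifiers (keywords, physical columns) pass through as-is.
        return f"({expression})" if expression is not None else name

    return IDENTIFIER.sub(substitute, sql)


if __name__ == "__main__":
    query = "SELECT city, unit_price FROM orders"
    print(expand_virtual_columns(query, VIRTUAL_COLUMNS))
```

Because the query text only mentions `city` and `unit_price`, editing the expression in the mapping is enough to change the indicator for every query that references it.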

Typical cases and implementation principles will be covered in the talk slides.

Xie Jiajun

Volcano Engine LAS Senior R&D Engineer

Spoke at ApacheCon Asia 2022. An open source enthusiast who frequently takes part in community work, he is currently an active Apache Calcite committer and a LinkedIn Coral contributor.

Practice of refined management of mixed permissions in big data queues

Background:

In recent years, data security has increasingly drawn the attention of governments and enterprises around the world. With the enactment of national data security and personal information protection laws, clear requirements have been set for the principle of data minimization. How to control permissions at a finer granularity has therefore become a problem that every enterprise must solve.

Current issues:

The industry usually extracts permission points in SQL based on rules, and manages these permission points horizontally according to the row dimension, or vertically according to the column dimension.

This single-dimensional permission control is too coarse-grained and cannot express combinations of multiple permissions. In a middle-platform scenario with large wide tables like ByteDance's, where data from multiple business lines is stored in a unified manner, it is difficult to meet the demand for fine-grained permission control.

Solution:

Based on the above problems, ByteDance designed a refined management solution for mixed permissions in rows and columns based on Apache Calcite and its self-developed permission service Gemini.

- Accurate permission point extraction based on Calcite lineage

  - Using data lineage, the solution precisely locates the permission points (table, row, column, etc.) that a SQL statement actually uses and extracts them at fine granularity.

- Multi-dimensional permission control with mixed row and column permissions

  - On top of the traditional database, table, and column permissions, a new row restriction permission is added. Row permissions can be attached to table or column permissions as a special resource.

  - Each table or column permission can be bundled with multiple row permission resources at the same time, and the row restrictions of different table or column permissions are independent of each other.

  - By bundling horizontal and vertical permission points, query resources are pinned to "resource cells" where rows and columns intersect, achieving finer-grained, resource-level permissions.
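The "resource cell" idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Gemini permission service: `ColumnGrant`, `visible_cells`, and the sample columns are hypothetical names, and the sketch assumes that when several row restrictions are attached to one column permission, a row is visible if any of them admits it (the source does not say how multiple row restrictions combine).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Row = Dict[str, object]
RowFilter = Callable[[Row], bool]


@dataclass
class ColumnGrant:
    """A column permission with optional row restrictions attached to it."""
    column: str
    row_filters: List[RowFilter] = field(default_factory=list)

    def allows(self, row: Row) -> bool:
        # No row restriction: every row of this column is visible.
        # Assumption: with several restrictions, any one of them may admit the row.
        return not self.row_filters or any(f(row) for f in self.row_filters)


def visible_cells(rows: List[Row], grants: List[ColumnGrant]) -> List[Row]:
    """Keep only the cells (row x column intersections) the grants allow."""
    by_column = {grant.column: grant for grant in grants}
    return [
        {
            column: value
            for column, value in row.items()
            if column in by_column and by_column[column].allows(row)
        }
        for row in rows
    ]


if __name__ == "__main__":
    table = [
        {"region": "CN", "gmv": 10, "uid": 1},
        {"region": "US", "gmv": 20, "uid": 2},
    ]
    grants = [
        ColumnGrant("region"),                                 # all rows
        ColumnGrant("gmv", [lambda r: r["region"] == "CN"]),   # CN rows only
    ]
    for cells in visible_cells(table, grants):
        print(cells)
```

Each column carries its own row restrictions, so the `gmv` restriction does not affect `region`; this mirrors the statement that row restrictions of different permissions are independent of each other.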

Solution advantages:

- Under the new solution, precise fine-grained permission point extraction and multi-dimensional mixed row and column permissions refine resource control from a horizontal row or a vertical column down to "resource cells" where rows and columns intersect.

- The scope of permission control is further narrowed, and the required permissions are granted at the smallest granularity while users can still work normally.

- Typical cases and implementation principles will be covered in the talk slides.

Zhu Jiang

Volcano Engine LAS Senior R&D Engineer

Topic: Cloud Native

ByteDance Cloud Native YARN Practice

ByteDance's internal offline business is huge: hundreds of thousands of nodes and millions of tasks run online every day, and daily resource usage reaches the tens of millions. Internally, an offline scheduling system and an online scheduling system manage the scheduling of offline and online workloads respectively. As the business has grown, however, this setup has exposed several shortcomings: online and offline are two separate systems, so major events require operations staff to convert resources between them, which is a heavy operational burden with a long conversion cycle; the resource pools are not unified, so overall resource utilization is low and quota management, machine operations, and so on cannot be reused; and big data jobs cannot enjoy the benefits of cloud native, such as reliable and stable isolation and convenient operations. The online and offline systems urgently need to be unified, but traditional big data engines were not designed for cloud native and are hard to deploy on it directly. Each computing engine and task would need deep changes to support the features it originally relied on in YARN, at a huge cost. Against this background, ByteDance proposes a cloud-native YARN solution, Serverless YARN, which is 100% compatible with the Hadoop YARN protocol. Big data jobs in the Hadoop ecosystem can migrate transparently to the cloud-native system without modification, online and offline resources can be converted efficiently and flexibly and reused in a time-sharing manner, and overall cluster resource utilization improves significantly.

Shao Kaiyang

Volcano Engine Cloud Native Computing R&D Engineer

Responsible for offline scheduling related work in ByteDance infrastructure and has many years of experience in engineering architecture.

Topic: Messaging system

Kafka without ZooKeeper

Currently, Kafka relies on ZooKeeper to store its metadata, such as broker information, topics, and partitions. KRaft is the new generation of Kafka that runs without ZooKeeper. This talk will cover:

- Why Kafka needs to develop new KRaft features

- Architecture of old (with ZooKeeper) Kafka and new (without ZooKeeper) Kafka

- Benefits of adopting KRaft

- How it works internally

- Monitoring indicators

- Tools to help solve Kafka issues

- A demo to show what we have achieved so far

- Kafka community’s roadmap towards KRaft

After this talk, the audience will have a better understanding of what KRaft is, how it works, how it differs from ZooKeeper-based Kafka, and most importantly, how to monitor and troubleshoot it.

Luke Chen

Red Hat, Senior Software Engineer

Senior software engineer at Red Hat, dedicated to running Apache Kafka products on the cloud. Apache Kafka Committer and PMC member who has contributed to Apache Kafka for more than 3 years.

Deng Ziming

ByteDance Big Data R&D Engineer, Apache Kafka Committer

Visit our interactive booth

ByteDance Open Source will set up an interactive booth at the conference venue to showcase ByteDance’s open source projects and interact with attendees. There will be plenty of community merchandise on site, as well as a variety of interactive activities. Attendees are welcome to stop by and check in~

Follow the ByteDance Open Source official account for surprises on site!

View the full schedule: https://apachecon.com/acasia2023/en/tracks.html
