every blog every motto: You can do more than you think.

https://blog.csdn.net/weixin_39190382?type=blog

0. Preface

Spectral clustering spectral clustering overview

description: no

1. Text

1.1 Overall understanding

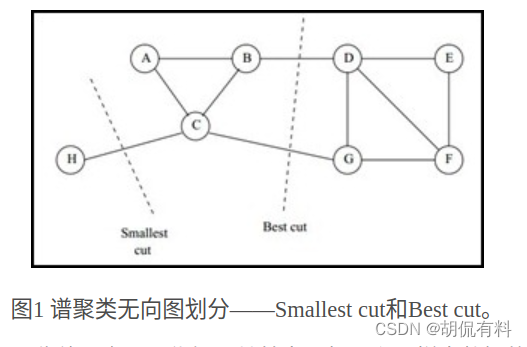

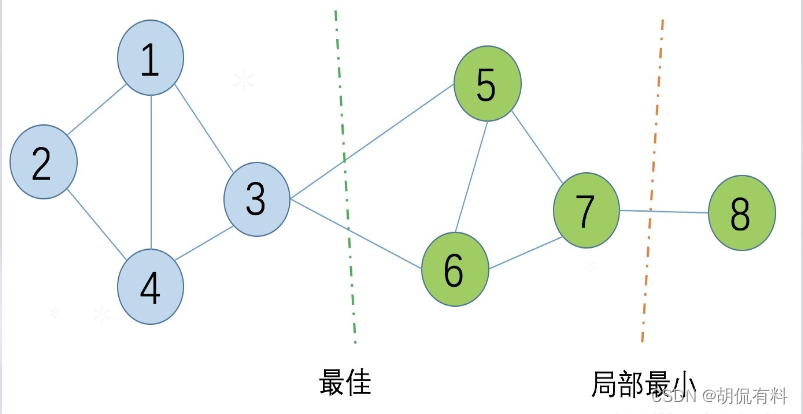

Spectral Clustering is a clustering method based on graph theory, which divides a weighted undirected graph into two or more optimal subgraphs. Make the subgraphs as similar as possible, and the distance between subgraphs as far as possible . The optimal means that the optimal objective functions are different. There are two types:

- smallest cut

- The best cut

is shown in the figure below:

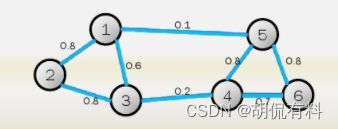

1.1.1 Undirected graph

As shown in the figure below, it consists of several vertices and edges . Because the edges have no direction, it is called an undirected graph. in,

Set of points: V = { v 1 , v 2 , . . . . , v 3 } V = \{v1,v2,...,v3\}V={

v 1 ,v2 , _.....,v 3 }

edge set:E = { e 1 , e 2 , . . . . , e 3 } E = \{e1,e2,...,e3\}E={

e 1 ,e 2 ,.....,e 3 }

So, the graph is expressed as G ( V , E ) G(V,E)G ( V ,E)

Weight matrix (adjacency matrix) WWW, W i j W_{ij} WijDisplayii _i andjjThe weight between j , since it is an undirected graph, so

W ij = W ji W_{ij}=W_{ji}Wij=Wji

1.1.2 Degree and degree matrix

In data structures, degree is defined as the number of vertices directly connected to the point.

Here, the definition is as follows:

di = ∑ j = 1 n W ij d_i = \sum_{j=1}^{n}W_{ij}di=j=1∑nWij

That is, the sum of weights for a row (or column).

The degree matrix is a diagonal matrix composed of n degrees, as follows:

[ d 1 0 ⋯ 0 0 d 2 ⋯ 0 ⋮ ⋮ ⋱ ⋮ 0 0 ⋯ d n ] \begin{bmatrix} {d_{1}}&{0}&{\cdots}&{0}\\ {0}&{d_{2}}&{\cdots}&{0}\\ {\vdots}&{\vdots}&{\ddots}&{\vdots}\\ {0}&{0}&{\cdots}&{d_{n}}\\ \end{bmatrix} d10⋮00d2⋮0⋯⋯⋱⋯00⋮dn

1.1.3 Similarity Matrix

Note: We can calculate the distance between two points and generate an adjacency matrix to represent the relationship between our points (that is, what we call a graph ). The similarity matrix here is based on the "distance matrix" (according to the distance Judging whether it is similar) According to a certain method (the following three methods) to further "screen"

(it can be roughly understood, some points are far away)

The above weight matrix is composed of weights between any two points. In practice, we cannot directly obtain weights, only the definition of data points, and the weights are usually calculated by the distance between two points . The distance is far away and the weight is high, and the distance is close and the weight is high. Usually by three methods

(1). ϵ ϵϵ - proximity method (less used)

Set a threshold ϵ ϵϵ , calculate the Euclidean distance between two points and compare with the threshold,

W i j = { 0 , i f s i j > ϵ ϵ , i f s i j ⩽ ϵ W_{ij}=\left\{ \begin{matrix} 0 , & if &s_{ij}>ϵ \\ ϵ, &if &s_{ij}\leqslantϵ \end{matrix} \right. Wij={

0,ϵ ,ififsij>ϵsij⩽ϵ

Use the threshold to filter the distance, the comparison of the card is dead, the weight between the samples is 0, and a lot of information is missing

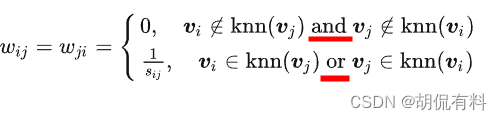

(2). k-nearest neighbor method

For any point , find the k vertices closest to it, the weights of this vertex and k vertices are obtained by calculation (all are greater than 0, and the distance of the rest of the vertices is 0), but the obtained similarity matrix will be asymmetric. Such as: iii injjj 'skkAmong the k nearest neighbors, but jjj may not be iniii 'skkamong the k nearest neighbors. There are two solutions to this problem:

Description: sij s_{ij}sijIndicates the distance

a. Method 1

In the loose version,

as long as one satisfies k-nearest neighbors , then let W ij = W ji W_{ij}=W_{ji}Wij=Wji, it is 0 only if k-nearest neighbors are not satisfied at the same time.

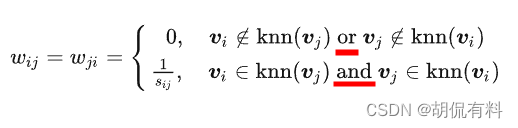

b. Method 2

Strict version Only when

two vertices are close neighbors , the distance is calculated, otherwise the weight is 0.

(3). Fully connected

Calculate the interconnection between all points , so the weight is greater than 0, you can choose different kernel functions to calculate the distance, commonly used are:

- polynomial kernel function

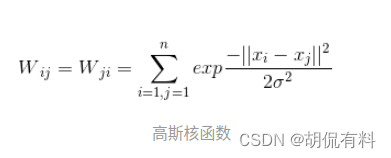

- Gaussian kernel function

- sigmoid kernel function

1.1.4 Laplace matrix

L = D − W L = D - W L=D−W

D D D is the degree matrix,WWW is the adjacency matrix above

1.1.5 Cut graph, cluster

Let's get into the key point, now we have to cut the undirected graph mentioned above .

Cut optimization objective:

C ost ( G 1 , . . . G 2 ) = ∑ i C ( G i , G i ^ ) Cost(G1,...G2) = \sum_{i}C(G_i,\hat{ G_{i}})Cost(G1,...G2)=i∑C(Gi,Gi^)

C ( G 1 , G 2 ) = ∑ i ∈ G 1 , j ∈ G 2 w i j C(G_1,G_2) = \sum_{i\in G_1,j\in G_2}w_{ij} C(G1,G2)=i∈G1,j∈G2∑wij

- The goal is to minimize the sum of weights for cutting subgraph times , i.e., cut the fewest edges.

- Local optima may appear in cutting, so there are different graph cutting methods according to different cutting methods.

(1). RatioCut

Goal: Make the number of nodes in the subgraph as large as possible

Ration C ut ( G 1 , . . . G 2 ) = ∑ i C ( G i , G i ^ ) ∣ G i ^ ∣ RationCut(G1,... G2) = \sum_i{C(G_i,\hat{G_i}) \over \mid \hat{G_i} \mid }RationCut(G1,...G2)=i∑∣Gi^∣C(Gi,Gi^)

The denominator is the number of nodes in the subgraph

(2). NCut cut image

Goal: Consider the weight sum of each subgraph edge

NC ut ( C 1 , . . . C k ) = ∑ i C ( G i , GI ^ ) vol ( G i ^ ) NCut(C_1,...C_k) = \ sum_i{C(G_i,\hat{G_I} )\over vol(\hat{G_i})}NCut(C1,...Ck)=i∑vol(Gi^)C(Gi,GI^)

The denominator is the weight sum of each side of the subgraph

1.2 Calculation process

1.2.1 There are three main parts of the core:

- Generation of similarity matrix (commonly used: full connection method)

- Cutting method (commonly used: NCut)

- The final clustering method (commonly used: K-Means)

1.2.2 Algorithm flow:

- Construct similarity matrix S, degree matrix D, and calculate Laplacian matrix L = D − WL = D - WL=D−W

- Construct the standardized Laplacian matrix D − 1 2 ⋅ L ⋅ D − 1 2 D^{-1 \over 2} ·L ·D^{-1 \over 2}D2−1⋅L⋅D2−1

- Calculate D − 1 2 ⋅ L ⋅ D − 1 2 D^{-1 \over 2} · L · D^{-1 \over 2}D2−1⋅L⋅D2−1The smallest k 1 k_1k1The eigenvectors ff corresponding to each eigenvaluef

- The respective eigenvectors ffThe matrix composed of f is standardized by row, and the final composition isn ⋅ k 1 n k_1n⋅k1The characteristic matrix FF of dimensionF

- For each row in F as a k 1 k_1k1Dimensional samples, a total of n samples, clustered with the input clustering method, the clustering dimension is k 2 k_2k2

- Get the cluster partition C ( c 1 , c 2 , . . . ck 2 ) C(c_1,c_2,...c_{k2})C(c1,c2,...ck2 _)

1.2.3 python implementation

def calculate_w_ij(a,b,sigma=1):

w_ab = np.exp(-np.sum((a-b)**2)/(2*sigma**2))

return w_ab

# 计算邻接矩阵

def Construct_Matrix_W(data,k=5):

rows = len(data) # 取出数据行数

W = np.zeros((rows,rows)) # 对矩阵进行初始化:初始化W为rows*rows的方阵

for i in range(rows): # 遍历行

for j in range(rows): # 遍历列

if(i!=j): # 计算不重复点的距离

W[i][j] = calculate_w_ij(data[i],data[j]) # 调用函数计算距离

t = np.argsort(W[i,:]) # 对W中进行行排序,并提取对应索引

for x in range(rows-k): # 对W进行处理

W[i][t[x]] = 0

W = (W+W.T)/2 # 主要是想处理可能存在的复数的虚部,都变为实数

return W

def Calculate_Matrix_L_sym(W): # 计算标准化的拉普拉斯矩阵

degreeMatrix = np.sum(W, axis=1) # 按照行对W矩阵进行求和

L = np.diag(degreeMatrix) - W # 计算对应的对角矩阵减去w

# 拉普拉斯矩阵标准化,就是选择Ncut切图

sqrtDegreeMatrix = np.diag(1.0 / (degreeMatrix ** (0.5))) # D^(-1/2)

L_sym = np.dot(np.dot(sqrtDegreeMatrix, L), sqrtDegreeMatrix) # D^(-1/2) L D^(-1/2)

return L_sym

def normalization(matrix): # 归一化

sum = np.sqrt(np.sum(matrix**2,axis=1,keepdims=True)) # 求数组的正平方根

nor_matrix = matrix/sum # 求平均

return nor_matrix

W = Construct_Matrix_W(your_data) # 计算邻接矩阵

L_sym = Calculate_Matrix_L_sym(W) # 依据W计算标准化拉普拉斯矩阵

lam, H = np.linalg.eig(L_sym) # 特征值分解

t = np.argsort(lam) # 将lam中的元素进行排序,返回排序后的下标

H = np.c_[H[:,t[0]],H[:,t[1]]] # 0和1类的两个矩阵按行连接,就是把两矩阵左右相加,要求行数相等。

H = normalization(H) # 归一化处理

model = KMeans(n_clusters=20) # 新建20簇的Kmeans模型

model.fit(H) # 训练

labels = model.labels_ # 得到聚类后的每组数据对应的标签类型

res = np.c_[your_data,labels] # 按照行数连接data和labels

1.3 Summary

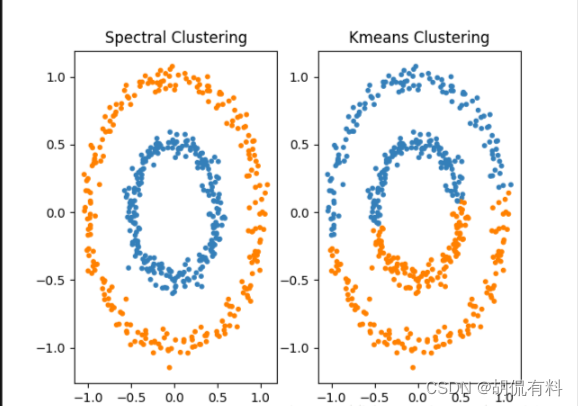

The main advantages of the spectral clustering algorithm are:

- Spectral clustering only requires a similarity matrix between the data, so it is effective for clustering dealing with sparse data. This is difficult for traditional clustering algorithms such as K-Means.

- Due to the use of dimensionality reduction, the complexity of dealing with high-dimensional data clustering is better than traditional clustering algorithms.

The main disadvantages of spectral clustering algorithms are:

- If the dimension of the final clustering is very high, the running speed of spectral clustering and the final clustering effect are not good due to insufficient dimensionality reduction.

- The clustering effect depends on the similarity matrix, and the final clustering effect obtained by different similarity matrices may be very different.

reference

[1] https://zhuanlan.zhihu.com/p/387483956

[2] https://blog.csdn.net/songbinxu/article/details/80838865

[3] https://blog.csdn.net/weixin_45591044/article/details/122747024

[4] https://blog.csdn.net/yftadyz/article/details/108933660?ops_request_misc=%257B%2522request%255Fid%2522%253A%2522164345675116780264032305%2522%252C%2522scm%2522%253A%252220140713.130102334…%2522%257D&request_id=164345675116780264032305&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2alltop_ulrmf~default-2-108933660.pc_search_insert_ulrmf&utm_term=%E8%B0%B1%E5%9B%BE%E7%90%86%E8%AE%BA&spm=1018.2226.3001.4187

[5] https://blog.csdn.net/katrina1rani/article/details/108451882

[6] https://zhuanlan.zhihu.com/p/392736238

[7] https://zhuanlan.zhihu.com/p/91154535

[8] https://www.cnblogs.com/tychyg/p/5277137.html

[9] https://www.cnblogs.com/kang06/p/9468647.html

[10] https://zhuanlan.zhihu.com/p/29849122

[11] https://www.cnblogs.com/xiximayou/p/13548514.html

[12] https://www.cnblogs.com/pinard/p/6221564.html

[13] https://zhuanlan.zhihu.com/p/54348180

[14] https://blog.csdn.net/qq_43391414/article/details/112277987

[15] https://blog.csdn.net/jxlijunhao/article/details/116443245

[16] https://zhuanlan.zhihu.com/p/368878987

[17] https://blog.csdn.net/qq280929090/article/details/103591577