One article to understand the whole process of Netty sending data | All the details you want to know are here

This series of Netty source code analysis articles is based on version 4.1.56.Final

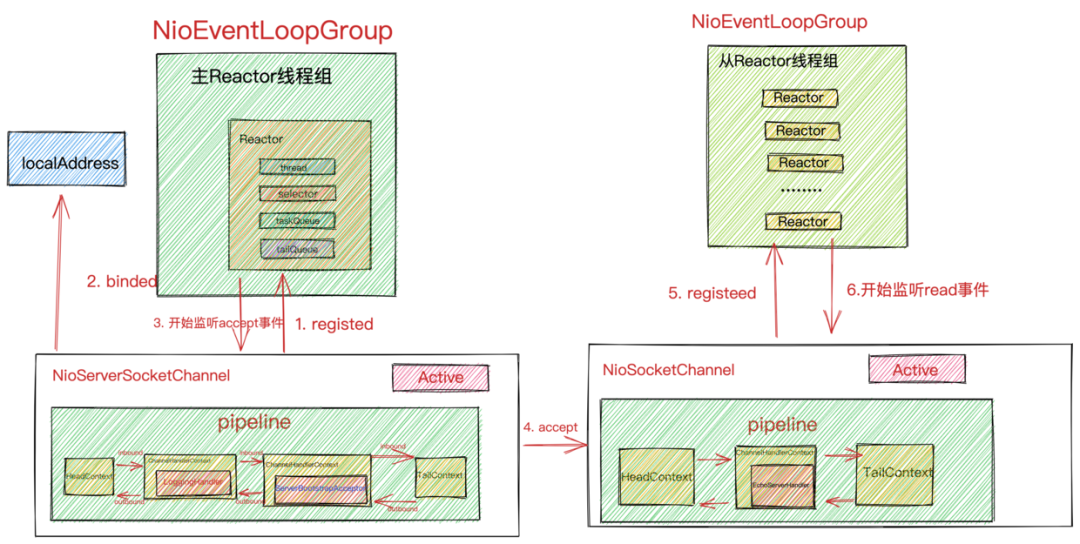

The complete structure of the master-slave Reactor group.png

In the article "How Netty Receives Network Data Efficiently" , we introduced the complete process of Netty's SubReactor processing network data reading. When Netty reads the network request data for us, and we complete the business processing in our own business thread After that, it is necessary to return the business processing results to the client. In this article, we will introduce how SubReactor handles the entire process of sending network data.

We all know that Netty is a high-performance asynchronous event-driven network communication framework. Since it is a network communication framework, its main tasks are:

-

Receive client connections.

-

Read network request data on the connection.

-

Send network response data to the connection.

In the previous series of articles , in the process of introducing Netty's startup and receiving connections , we only saw the registration of the OP_ACCEPT event and the OP_READ event, but did not see the registration of the OP_WRITE event.

-

So under what circumstances will Netty register the OP_WRITE event with SubReactor?

-

How does Netty asynchronously process write operations?

The author of this article will reveal the answers to these mysteries one by one. Let's take the previous EchoServer as an example for illustration.

@Sharable

public class EchoServerHandler extends ChannelInboundHandlerAdapter {

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) {

//此处的msg就是Netty在read loop中从NioSocketChannel中读取到的ByteBuffer

ctx.write(msg);

}

}

We will send the ByteBuffer (Object msg here) read in the article "How Netty Efficiently Receives Network Data" to the client directly, and use this simple example to unveil the prelude to how Netty sends data ~~

In actual development, we first decode and convert the read ByteBuffer into our business Request class through the decoder, then do business processing in the business thread, encode the business Response class into ByteBuffer through the encoder, and finally use ChannelHandlerContext A reference to ctx to send response data.

In this article, we only focus on the process of writing data in Netty. The author will specifically introduce the content related to Netty encoding and decoding in subsequent articles.

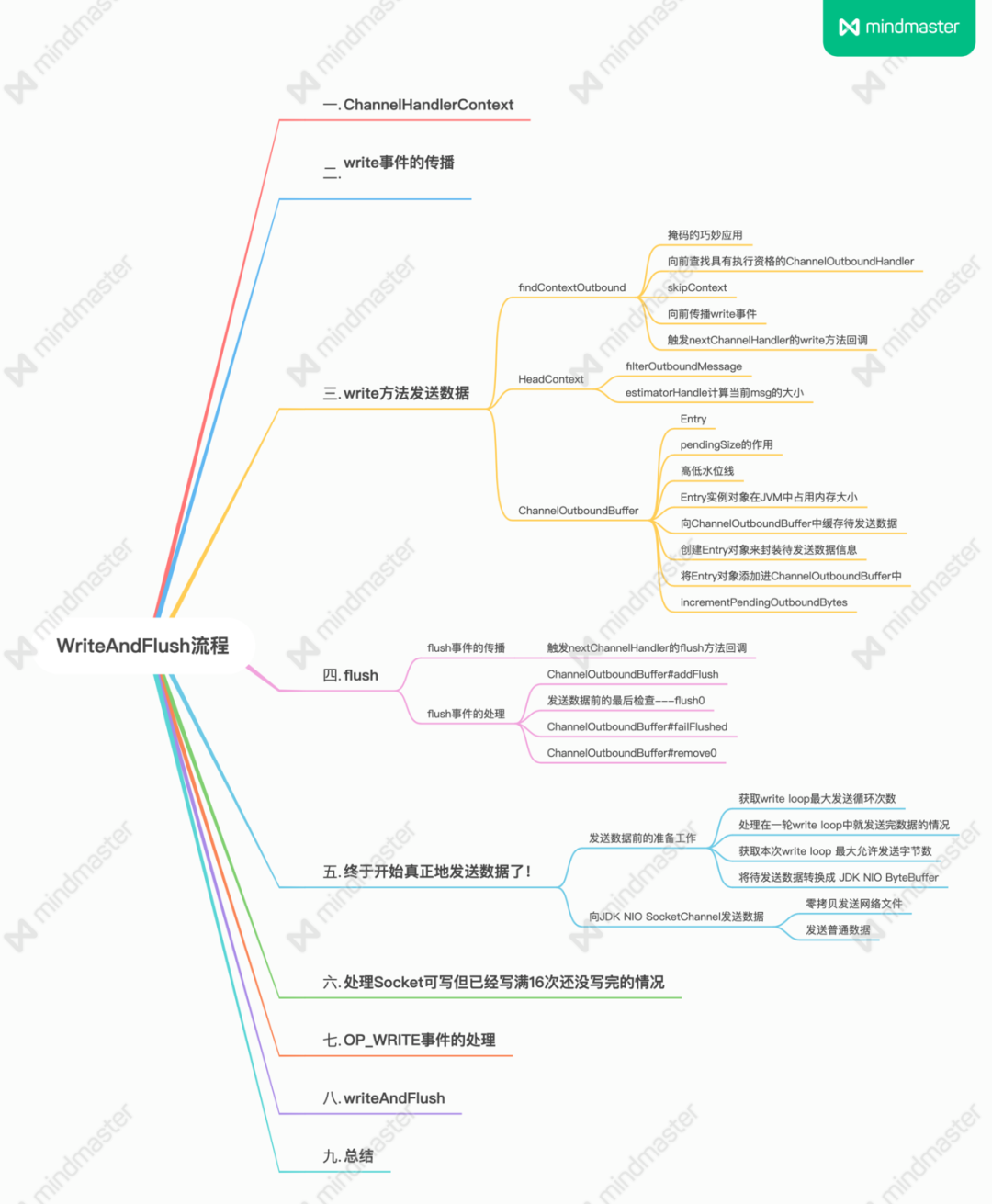

Summary of this article.png

1. ChannelHandlerContext

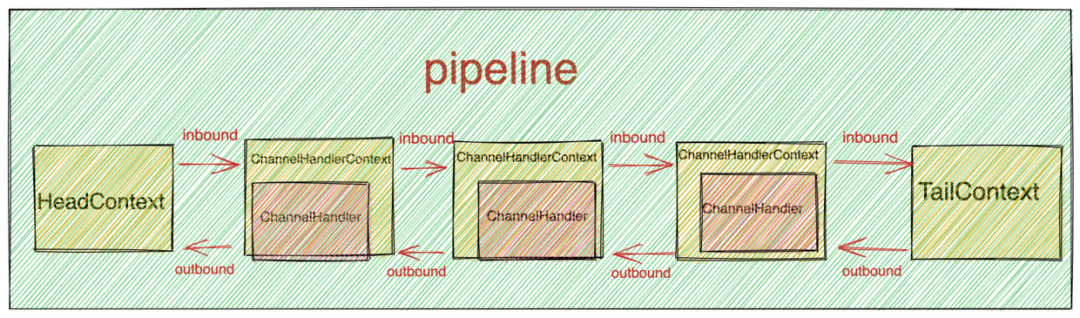

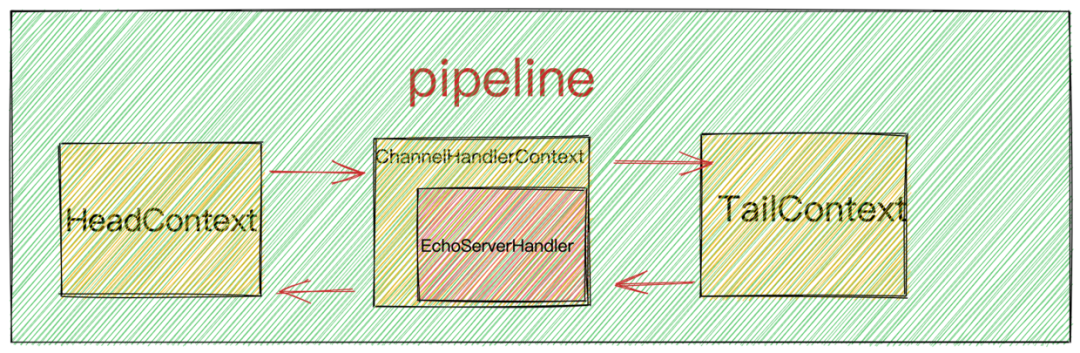

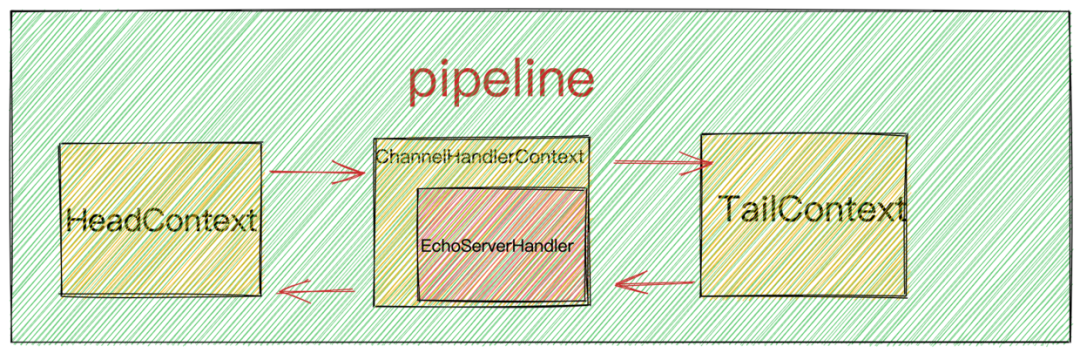

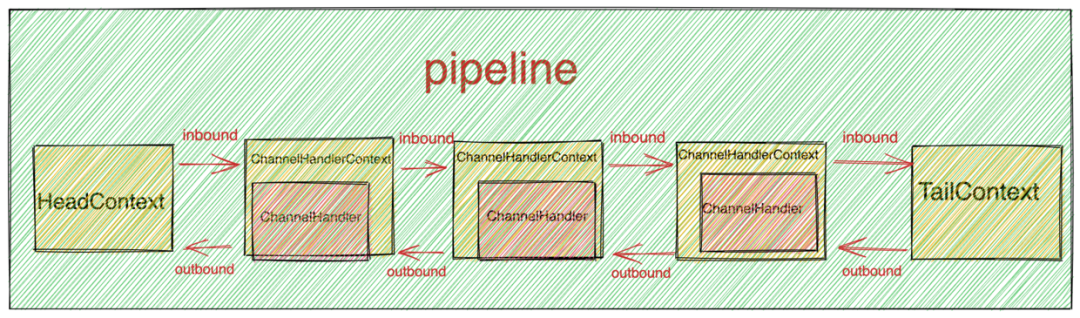

pipeline structure.png

Through the introduction of the previous articles, we know that Netty will allocate a pipeline for each Channel, and the pipeline is a doubly linked list structure. The IO asynchronous events generated in Netty will be propagated in this pipeline.

IO asynchronous events in Netty are roughly divided into two categories:

-

Inbound event: Inbound events, such as the ChannelActive event and ChannelRead event introduced earlier, will propagate backwards from the head node of the pipeline, HeadContext.

-

outbound event: outbound events, such as the write event and flush event that will be introduced in this article, outbound events will propagate from back to front in the opposite direction until HeadContext. The processing of outbound events will eventually be done in the HeadContext.

-

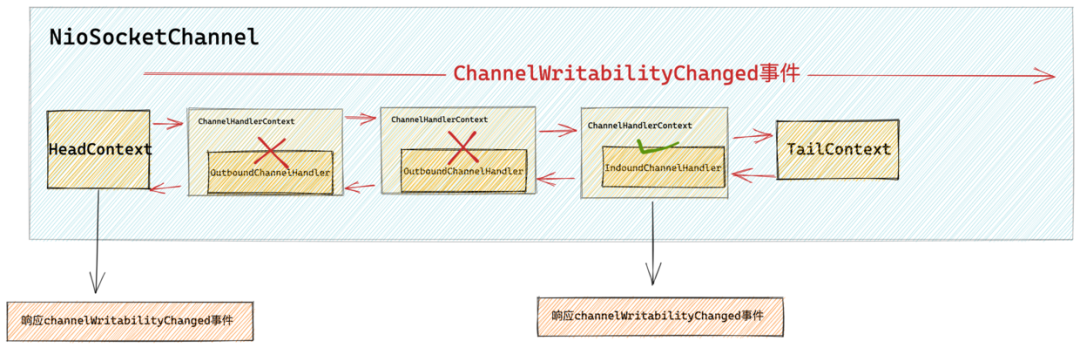

The channelHandlerContext.write() used in this example will cause the write event to propagate forward along the pipeline from the current ChannelHandler, which is the EchoServerHandler here.

-

And channelHandlerContext.channel().write() will make the write event propagate forward from the tail node of the pipeline, TailContext, until HeadContext.

-

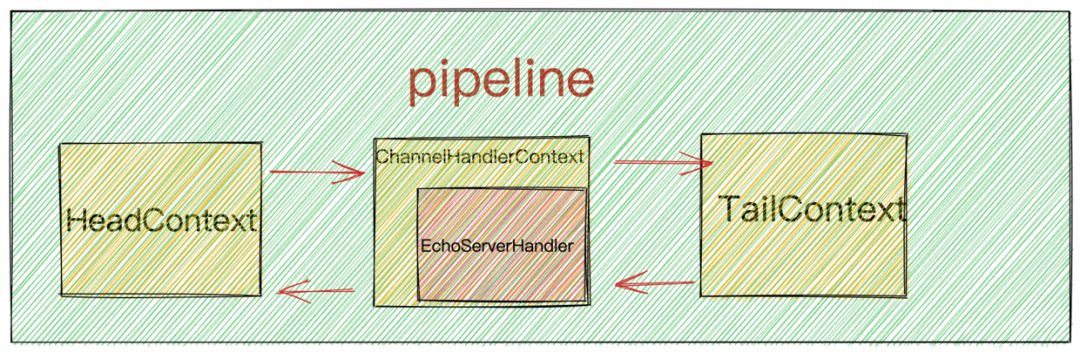

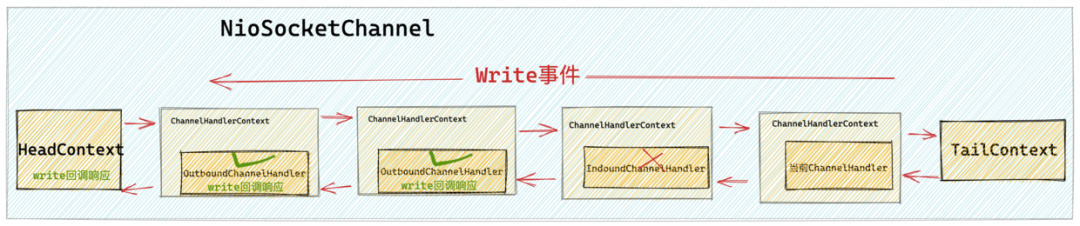

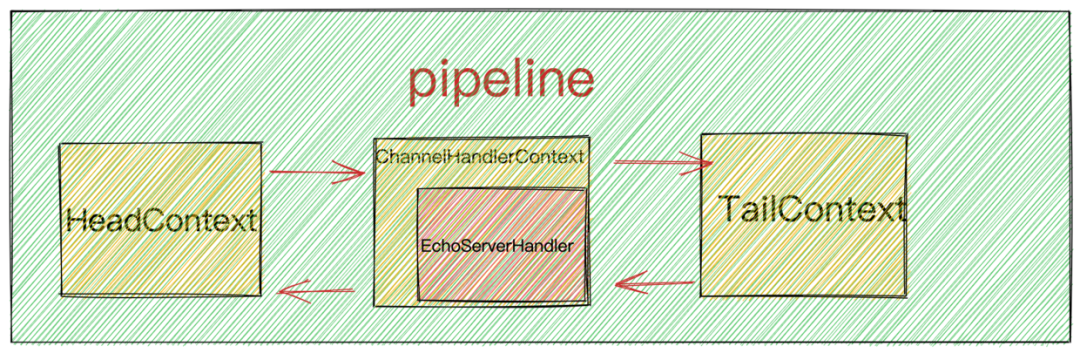

Client channel pipeline structure.png

And the type in a doubly linked list data structure like pipeline is ChannelHandlerContext, which wraps our custom IO processing logic ChannelHandler.

ChannelHandler does not need to perceive the context information in the pipeline it is in, it only needs to concentrate on handling the IO logic. The context information about the pipeline is all encapsulated in ChannelHandlerContext.

The role of ChannelHandler in Netty is only responsible for processing IO logic, such as encoding and decoding. It does not perceive its position in the pipeline, let alone the two ChannelHandlers adjacent to it. In fact, ChannelHandler does not need to care about these, the only thing it needs to care about is to handle the asynchronous events it cares about

而 ChannelHandlerContext 中维护了 pipeline 这个双向链表中的 pre 以及 next 指针,这样可以方便的找到与其相邻的 ChannelHandler ,并可以过滤出一些符合执行条件的 ChannelHandler。正如它的命名一样, ChannelHandlerContext 正是起到了维护 ChannelHandler 上下文的一个作用。而 Netty 中的异步事件在 pipeline 中的传播靠的就是这个 ChannelHandlerContext 。

这样设计就使得 ChannelHandlerContext 和 ChannelHandler 的职责单一,各司其职,具有高度的可扩展性。

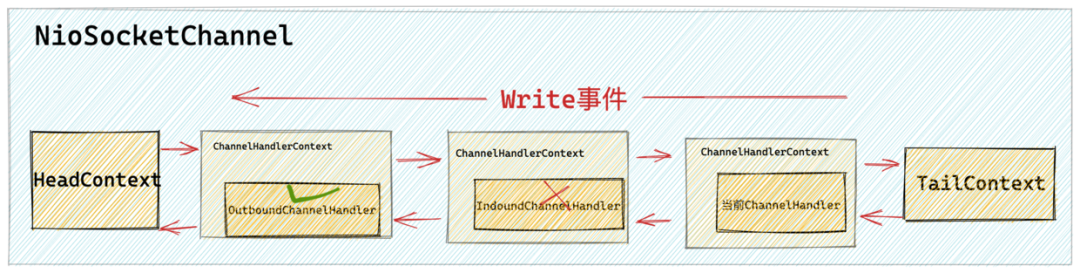

2. write事件的传播

我们无论是在业务线程或者是在 SubReactor 线程中完成业务处理后,都需要通过 channelHandlerContext 的引用将 write事件在 pipeline 中进行传播。然后在 pipeline 中相应的 ChannelHandler 中监听 write 事件从而可以对 write事件进行自定义编排处理(比如我们常用的编码器),最终传播到 HeadContext 中执行发送数据的逻辑操作。

前边也提到 Netty 中有两个触发 write 事件传播的方法,它们的传播处理逻辑都是一样的,只不过它们在 pipeline 中的传播起点是不同的。

-

channelHandlerContext.write() 方法会从当前 ChannelHandler 开始在 pipeline 中向前传播 write 事件直到 HeadContext。

-

channelHandlerContext.channel().write() 方法则会从 pipeline 的尾结点 TailContext 开始在 pipeline 中向前传播 write 事件直到 HeadContext 。

客户端channel pipeline结构.png

在我们清楚了 write 事件的总体传播流程后,接下来就来看看在 write 事件传播的过程中Netty为我们作了些什么?这里我们以 channelHandlerContext.write() 方法为例说明。

3. write方法发送数据

write事件传播流程.png

abstract class AbstractChannelHandlerContext implements ChannelHandlerContext, ResourceLeakHint {

@Override

public ChannelFuture write(Object msg) {

return write(msg, newPromise());

}

@Override

public ChannelFuture write(final Object msg, final ChannelPromise promise) {

write(msg, false, promise);

return promise;

}

}

这里我们看到 Netty 的写操作是一个异步操作,当我们在业务线程中调用 channelHandlerContext.write() 后,Netty 会给我们返回一个 ChannelFuture,我们可以在这个 ChannelFutrue 中添加 ChannelFutureListener ,这样当 Netty 将我们要发送的数据发送到底层 Socket 中时,Netty 会通过 ChannelFutureListener 通知我们写入结果。

@Override

public void channelRead(final ChannelHandlerContext ctx, final Object msg) {

//此处的msg就是Netty在read loop中从NioSocketChannel中读取到的ByteBuffer

ChannelFuture future = ctx.write(msg);

future.addListener(new ChannelFutureListener() {

@Override

public void operationComplete(ChannelFuture future) throws Exception {

Throwable cause = future.cause();

if (cause != null) {

处理异常情况

} else {

写入Socket成功后,Netty会通知到这里

}

}

});

}

当异步事件在 pipeline 传播的过程中发生异常时,异步事件就会停止在 pipeline 中传播。所以我们在日常开发中,需要对写操作异常情况进行处理。

-

其中 inbound 类异步事件发生异常时,会触发exceptionCaught事件传播。exceptionCaught 事件本身也是一种 inbound 事件,传播方向会从当前发生异常的 ChannelHandler 开始一直向后传播直到 TailContext。

-

When an outbound asynchronous event occurs, the exceptionCaught event propagation will not be triggered . Generally just notify the related ChannelFuture. However, if an exception occurs during the propagation of the flush event, the exceptionCaught event callback in the ChannelHandler where the current exception occurs will be triggered.

We continue to return to the main line of writing operations~~~

private void write(Object msg, boolean flush, ChannelPromise promise) {

ObjectUtil.checkNotNull(msg, "msg");

................省略检查promise的有效性...............

//flush = true 表示channelHandler中调用的是writeAndFlush方法,这里需要找到pipeline中覆盖write或者flush方法的channelHandler

//flush = false 表示调用的是write方法,只需要找到pipeline中覆盖write方法的channelHandler

final AbstractChannelHandlerContext next = findContextOutbound(flush ?

(MASK_WRITE | MASK_FLUSH) : MASK_WRITE);

//用于检查内存泄露

final Object m = pipeline.touch(msg, next);

//获取pipeline中下一个要被执行的channelHandler的executor

EventExecutor executor = next.executor();

//确保OutBound事件由ChannelHandler指定的executor执行

if (executor.inEventLoop()) {

//如果当前线程正是channelHandler指定的executor则直接执行

if (flush) {

next.invokeWriteAndFlush(m, promise);

} else {

next.invokeWrite(m, promise);

}

} else {

//如果当前线程不是ChannelHandler指定的executor,则封装成异步任务提交给指定executor执行,注意这里的executor不一定是reactor线程。

final WriteTask task = WriteTask.newInstance(next, m, promise, flush);

if (!safeExecute(executor, task, promise, m, !flush)) {

task.cancel();

}

}

}

For the write event to propagate forward in the pipeline, it is necessary to find the next ChannelHandler that is eligible for execution on the pipeline, because the one in front of the current ChannelHandler may be a ChannelInboundHandler type or a ChannelOutboundHandler type ChannelHandler, or it may not care at all ChannelHandler for the write event (the write callback method is not implemented).

Propagation of write event.png

Here we need to find the ChannelOutboundHandler type in front of the current ChannelHandler through the findContextOutbound method and overwrite the ChannelHandler that implements the write callback method as the next object to be executed.

3.1 findContextOutbound

private AbstractChannelHandlerContext findContextOutbound(int mask) {

AbstractChannelHandlerContext ctx = this;

//获取当前ChannelHandler的executor

EventExecutor currentExecutor = executor();

do {

//获取前一个ChannelHandler

ctx = ctx.prev;

} while (skipContext(ctx, currentExecutor, mask, MASK_ONLY_OUTBOUND));

return ctx;

}

//判断前一个ChannelHandler是否具有响应Write事件的资格

private static boolean skipContext(

AbstractChannelHandlerContext ctx, EventExecutor currentExecutor, int mask, int onlyMask) {

return (ctx.executionMask & (onlyMask | mask)) == 0 ||

(ctx.executor() == currentExecutor && (ctx.executionMask & mask) == 0);

}

The parameter received by the findContextOutbound method is a mask, which indicates that the ChannelHandler that has the execution qualifications is to be searched forward. Because what we call here is the write method of ChannelHandlerContext, so flush = false, and the mask passed in is MASK_WRITE, which means that we want to look forward and cover the ChannelOutboundHandler that implements the write callback method.

3.1.1 Clever application of masks

In Netty, some asynchronous event callback methods overridden by ChannelHandler are represented by int-type masks, so that we can use this mask to determine what execution qualifications the current ChannelHandler has.

final class ChannelHandlerMask {

....................省略......................

static final int MASK_CHANNEL_ACTIVE = 1 << 3;

static final int MASK_CHANNEL_READ = 1 << 5;

static final int MASK_CHANNEL_READ_COMPLETE = 1 << 6;

static final int MASK_WRITE = 1 << 15;

static final int MASK_FLUSH = 1 << 16;

//outbound事件掩码集合

static final int MASK_ONLY_OUTBOUND = MASK_BIND | MASK_CONNECT | MASK_DISCONNECT |

MASK_CLOSE | MASK_DEREGISTER | MASK_READ | MASK_WRITE | MASK_FLUSH;

....................省略......................

}

When a ChannelHandler is added to the pipeline, Netty will | 运算 add the ChannelHandler's execution qualification to the ChannelHandlerContext#executionMask field according to the type of the current ChannelHandler and the asynchronous event callback method implemented by it.

abstract class AbstractChannelHandlerContext implements ChannelHandlerContext, ResourceLeakHint {

//ChannelHandler执行资格掩码

private final int executionMask;

....................省略......................

}

Similar mask usage is actually mentioned in the previous article ? "A Discussion on the Operating Architecture of the Netty Core Engine Reactor" , when the Channel registers the IO events of interest to the corresponding Reactor, an int is also used. A mask of type interestOps represents the set of IO events that the Channel is interested in.

@Override

protected void doBeginRead() throws Exception {

final SelectionKey selectionKey = this.selectionKey;

if (!selectionKey.isValid()) {

return;

}

readPending = true;

final int interestOps = selectionKey.interestOps();

/**

* 1:ServerSocketChannel 初始化时 readInterestOp设置的是OP_ACCEPT事件

* 2:SocketChannel 初始化时 readInterestOp设置的是OP_READ事件

* */

if ((interestOps & readInterestOp) == 0) {

//注册监听OP_ACCEPT或者OP_READ事件

selectionKey.interestOps(interestOps | readInterestOp);

}

}

-

Use the & operation to determine whether an event is in the event collection:

(readyOps & SelectionKey.OP_CONNECT) != 0 -

Use the | operator to add events to the event collection:

interestOps | readInterestOp -

To delete an event from the event collection, first invert the event to be deleted ~, and then perform & operation with the event collection:

ops &= ~SelectionKey.OP_CONNECT

The author will explain this part in detail when the next article fully introduces the pipeline. You only need to know that the mask here represents a collection of execution qualifications. The execution qualification of the current ChannelHandler is stored in the executionMask field in its ChannelHandlerContext.

3.1.2 Look forward to the ChannelOutboundHandler with execution qualification

private AbstractChannelHandlerContext findContextOutbound(int mask) {

//当前ChannelHandler

AbstractChannelHandlerContext ctx = this;

//获取当前ChannelHandler的executor

EventExecutor currentExecutor = executor();

do {

//获取前一个ChannelHandler

ctx = ctx.prev;

} while (skipContext(ctx, currentExecutor, mask, MASK_ONLY_OUTBOUND));

return ctx;

}

//判断前一个ChannelHandler是否具有响应Write事件的资格

private static boolean skipContext(

AbstractChannelHandlerContext ctx, EventExecutor currentExecutor, int mask, int onlyMask) {

return (ctx.executionMask & (onlyMask | mask)) == 0 ||

(ctx.executor() == currentExecutor && (ctx.executionMask & mask) == 0);

}

前边我们提到 ChannelHandlerContext 不仅封装了 ChannelHandler 的执行资格掩码还可以感知到当前 ChannelHandler 在 pipeline 中的位置,因为 ChannelHandlerContext 中维护了前驱指针 prev 以及后驱指针 next。

这里我们需要在 pipeline 中传播 write 事件,它是一种 outbound 事件,所以需要向前传播,这里通过 ChannelHandlerContext 的前驱指针 prev 拿到当前 ChannelHandler 在 pipeline 中的前一个节点。

ctx = ctx.prev;

通过 skipContext 方法判断前驱节点是否具有执行的资格。如果没有执行资格则跳过继续向前查找。如果具有执行资格则返回并响应 write 事件。

在 write 事件传播场景中,执行资格指的是前驱 ChannelHandler 是否是ChannelOutboundHandler 类型的,并且它是否覆盖实现了 write 事件回调方法。

public class EchoChannelHandler extends ChannelOutboundHandlerAdapter {

@Override

public void write(ChannelHandlerContext ctx, Object msg, ChannelPromise promise) throws Exception {

super.write(ctx, msg, promise);

}

}

3.1.3 skipContext

该方法主要用来判断当前 ChannelHandler 的前驱节点是否具有 mask 掩码中包含的事件响应资格。

方法参数中有两个比较重要的掩码:

-

int onlyMask:用来指定当前 ChannelHandler 需要符合的类型。其中MASK_ONLY_OUTBOUND 为 ChannelOutboundHandler 类型的掩码, MASK_ONLY_INBOUND 为 ChannelInboundHandler 类型的掩码。

final class ChannelHandlerMask {

//outbound事件的掩码集合

static final int MASK_ONLY_OUTBOUND = MASK_BIND | MASK_CONNECT | MASK_DISCONNECT |

MASK_CLOSE | MASK_DEREGISTER | MASK_READ | MASK_WRITE | MASK_FLUSH;

//inbound事件的掩码集合

static final int MASK_ONLY_INBOUND = MASK_CHANNEL_REGISTERED |

MASK_CHANNEL_UNREGISTERED | MASK_CHANNEL_ACTIVE | MASK_CHANNEL_INACTIVE | MASK_CHANNEL_READ |

MASK_CHANNEL_READ_COMPLETE | MASK_USER_EVENT_TRIGGERED | MASK_CHANNEL_WRITABILITY_CHANGED;

}

比如本小节中我们是在介绍 write 事件的传播,那么就需要在当前ChannelHandler 前边首先是找到一个 ChannelOutboundHandler 类型的ChannelHandler。

ctx.executionMask & (onlyMask | mask)) == 0 用于判断前一个 ChannelHandler 是否为我们指定的 ChannelHandler 类型,在本小节中我们指定的是 onluMask = MASK_ONLY_OUTBOUND 即 ChannelOutboundHandler 类型。如果不是,这里就会直接跳过,继续在 pipeline 中向前查找。

-

int mask:用于指定前一个 ChannelHandler 需要实现的相关异步事件处理回调。在本小节中这里指定的是 MASK_WRITE ,即需要实现 write 回调方法。通过(ctx.executionMask & mask) == 0条件来判断前一个ChannelHandler 是否实现了 write 回调,如果没有实现这里就跳过,继续在 pipeline 中向前查找。

关于 skipContext 方法的详细介绍,笔者还会在下篇文章全面介绍 pipeline的时候再次进行介绍,这里大家只需要明白该方法的核心逻辑即可。

3.1.4 向前传播write事件

通过 findContextOutbound 方法我们在 pipeline 中找到了下一个具有执行资格的 ChannelHandler,这里指的是下一个 ChannelOutboundHandler 类型并且覆盖实现了 write 方法的 ChannelHandler。

Netty 紧接着会调用这个 nextChannelHandler 的 write 方法实现 write 事件在 pipeline 中的传播。

//获取下一个要被执行的channelHandler指定的executor

EventExecutor executor = next.executor();

//确保outbound事件的执行 是由 channelHandler指定的executor执行的

if (executor.inEventLoop()) {

//如果当前线程是指定的executor 则直接操作

if (flush) {

next.invokeWriteAndFlush(m, promise);

} else {

next.invokeWrite(m, promise);

}

} else {

//如果当前线程不是channelHandler指定的executor,则封装程异步任务 提交给指定的executor执行

final WriteTask task = WriteTask.newInstance(next, m, promise, flush);

if (!safeExecute(executor, task, promise, m, !flush)) {

task.cancel();

}

}

在我们向 pipeline 添加 ChannelHandler 的时候可以通过ChannelPipeline#addLast(EventExecutorGroup,ChannelHandler......) 方法指定执行该 ChannelHandler 的executor。如果不特殊指定,那么执行该 ChannelHandler 的executor默认为该 Channel 绑定的 Reactor 线程。

执行 ChannelHandler 中异步事件回调方法的线程必须是 ChannelHandler 指定的executor。

So here first we need to obtain the executor specified by the next ChannelHandler that meets the execution conditions found in the findContextOutbound method.

EventExecutor executor = next.executor()

And use executor.inEventLoop() the method to judge whether the current thread is the executor specified by the ChannelHandler.

If yes, then we directly execute the write method in ChannelHandler in the current thread.

If not, we need to encapsulate the callback operation of the write event by ChannelHandler into an asynchronous task WriteTask and submit it to the executor specified by ChannelHandler, which is responsible for execution.

It should be noted here that the executor is not necessarily the reactor thread bound to the channel. It can be our custom thread pool, but we need to specify it through the ChannelPipeline#addLast method. If we don't specify it, the executor that executes the ChannelHandler is the reactor thread bound to the channel by default.

Here Netty needs to ensure that the outbound event is executed by the executor specified by channelHandler.

Some students here may have questions. If we add ChannelHandlers to pipieline and specify different executors for each ChannelHandler, how can Netty ensure thread safety ? ?

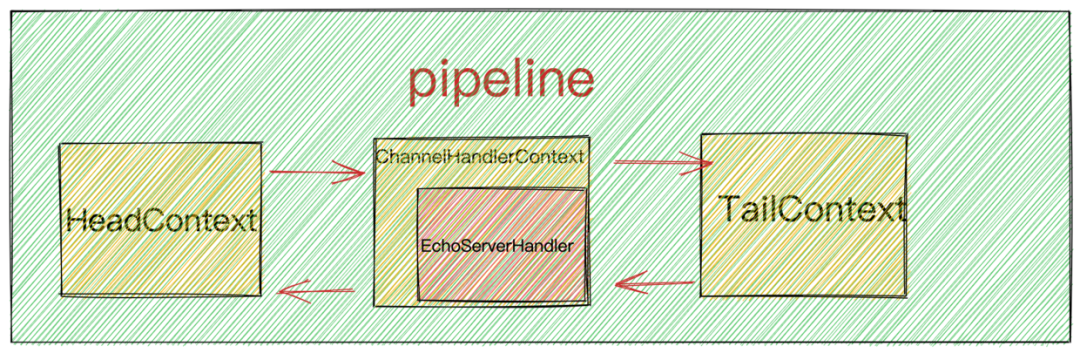

Do you still remember the structure in the pipeline?

Client channel pipeline structure.png

outbound 事件在 pipeline 中的传播最终会传播到 HeadContext 中,之前的系列文章我们提到过,HeadContext 中封装了 Channel 的 Unsafe 类负责 Channel 底层的 IO 操作。而 HeadContext 指定的 executor 正是对应 channel 绑定的 reactor 线程。

所以最终在 netty 内核中执行写操作的线程一定是 reactor 线程从而保证了线程安全性。

忘记这段内容的同学可以在回顾下?《Reactor在Netty中的实现(创建篇)》,类似的套路我们在介绍 NioServerSocketChannel 进行 bind 绑定以及 register 注册的时候都介绍过,只不过这里将 executor 扩展到了自定义线程池的范围。

3.1.5 触发nextChannelHandler的write方法回调

write事件的传播1.png

//如果当前线程是指定的executor 则直接操作

if (flush) {

next.invokeWriteAndFlush(m, promise);

} else {

next.invokeWrite(m, promise);

}

由于我们在示例 ChannelHandler 中调用的是 ChannelHandlerContext#write 方法,所以这里的 flush = false 。触发调用 nextChannelHandler 的 write 方法。

void invokeWrite(Object msg, ChannelPromise promise) {

if (invokeHandler()) {

invokeWrite0(msg, promise);

} else {

// 当前channelHandler虽然添加到pipeline中,但是并没有调用handlerAdded

// 所以不能调用当前channelHandler中的回调方法,只能继续向前传递write事件

write(msg, promise);

}

}

这里首先需要通过 invokeHandler() 方法判断这个 nextChannelHandler 中的 handlerAdded 方法是否被回调过。因为 ChannelHandler 只有被正确的添加到对应的 ChannelHandlerContext 中并且准备好处理异步事件时, ChannelHandler#handlerAdded 方法才会被回调。

The author will introduce this part in detail in the next article. Here you only need to understand that the purpose of calling the invokeHandler() method is to determine whether the ChannelHandler is correctly initialized.

private boolean invokeHandler() {

// Store in local variable to reduce volatile reads.

int handlerState = this.handlerState;

return handlerState == ADD_COMPLETE || (!ordered && handlerState == ADD_PENDING);

}

Only when the handlerAdded callback is triggered, the state of ChannelHandler can become ADD_COMPLETE.

If the invokeHandler() method returns false, then we need to skip the nextChannelHandler and call the ChannelHandlerContext#write method to continue propagating the write event forward.

@Override

public ChannelFuture write(final Object msg, final ChannelPromise promise) {

//继续向前传播write事件,回到流程起点

write(msg, false, promise);

return promise;

}

If invokeHandler() returns true, it means that the nextChannelHandler has been correctly initialized in the pipeline, and Netty directly calls the write method of the ChannelHandler, so that the write event is propagated from the current ChannelHandler to the nextChannelHandler.

private void invokeWrite0(Object msg, ChannelPromise promise) {

try {

//调用当前ChannelHandler中的write方法

((ChannelOutboundHandler) handler()).write(this, msg, promise);

} catch (Throwable t) {

notifyOutboundHandlerException(t, promise);

}

}

Here we see that if an exception occurs during the propagation of the write event, the write event will stop propagating in the pipeline and notify the registered ChannelFutureListener.

Client channel pipeline structure.png

From the pipeline structure of the example in this article, we can see that after the EchoServerHandler calls the ChannelHandlerContext#write method, the write event will propagate forward in the pipeline to the HeadContext, and the HeadContext is where Netty actually handles the write event.

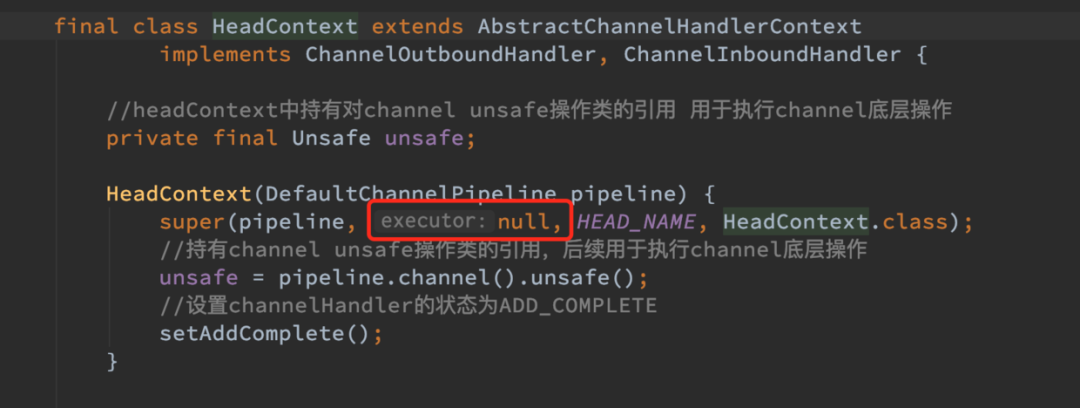

3.2 HeadContext

final class HeadContext extends AbstractChannelHandlerContext

implements ChannelOutboundHandler, ChannelInboundHandler {

@Override

public void write(ChannelHandlerContext ctx, Object msg, ChannelPromise promise) {

unsafe.write(msg, promise);

}

}

The write event will eventually propagate to HeadContext in the pipeline and call back the write method of HeadContext. And call the channel's unsafe class in the write callback to perform the underlying write operation. This is the end point of the propagation of the write event in the pipeline.

Cache data to be sent in ChannelOutboundBuffer.png

protected abstract class AbstractUnsafe implements Unsafe {

//待发送数据缓冲队列 Netty是全异步框架,所以这里需要一个缓冲队列来缓存用户需要发送的数据

private volatile ChannelOutboundBuffer outboundBuffer = new ChannelOutboundBuffer(AbstractChannel.this);

@Override

public final void write(Object msg, ChannelPromise promise) {

assertEventLoop();

//获取当前channel对应的待发送数据缓冲队列(支持用户异步写入的核心关键)

ChannelOutboundBuffer outboundBuffer = this.outboundBuffer;

..........省略..................

int size;

try {

//过滤message类型 这里只会接受DirectBuffer或者fileRegion类型的msg

msg = filterOutboundMessage(msg);

//计算当前msg的大小

size = pipeline.estimatorHandle().size(msg);

if (size < 0) {

size = 0;

}

} catch (Throwable t) {

..........省略..................

}

//将msg 加入到Netty中的待写入数据缓冲队列ChannelOutboundBuffer中

outboundBuffer.addMessage(msg, size, promise);

}

}

As we all know, Netty is an asynchronous event-driven network framework. All IO operations in Netty are asynchronous, including the write operation introduced in this section. In order to ensure asynchronous execution of write operations, Netty defines a data buffer queue to be sent ChannelOutboundBuffer, Netty caches the network data that these users need to send in ChannelOutboundBuffer before writing them to the Socket.

Each client NioSocketChannel corresponds to a ChannelOutboundBuffer data buffer queue to be sent

3.2.1 filterOutboundMessage

ChannelOutboundBuffer only accepts msg data of ByteBuffer type and FileRegion type.

FileRegion is defined by Netty to transfer file data over the network in a zero-copy manner. In this article, we mainly focus on the sending of common network data ByteBuffer.

So before writing msg to ChannelOutboundBuffer, we need to check the type of msg to be written. Make sure it is an acceptable type for ChannelOutboundBuffer.

@Override

protected final Object filterOutboundMessage(Object msg) {

if (msg instanceof ByteBuf) {

ByteBuf buf = (ByteBuf) msg;

if (buf.isDirect()) {

return msg;

}

return newDirectBuffer(buf);

}

if (msg instanceof FileRegion) {

return msg;

}

throw new UnsupportedOperationException(

"unsupported message type: " + StringUtil.simpleClassName(msg) + EXPECTED_TYPES);

}

In the process of network data transmission, in order to reduce the copying of data from the on-heap memory to the off-heap memory and relieve the pressure of GC, Netty must use DirectByteBuffer to use the off-heap memory to store the data sent by the network.

3.2.2 estimatorHandle calculates the size of the current msg

public class DefaultChannelPipeline implements ChannelPipeline {

//原子更新estimatorHandle字段

private static final AtomicReferenceFieldUpdater<DefaultChannelPipeline, MessageSizeEstimator.Handle> ESTIMATOR =

AtomicReferenceFieldUpdater.newUpdater(

DefaultChannelPipeline.class, MessageSizeEstimator.Handle.class, "estimatorHandle");

//计算要发送msg大小的handler

private volatile MessageSizeEstimator.Handle estimatorHandle;

final MessageSizeEstimator.Handle estimatorHandle() {

MessageSizeEstimator.Handle handle = estimatorHandle;

if (handle == null) {

handle = channel.config().getMessageSizeEstimator().newHandle();

if (!ESTIMATOR.compareAndSet(this, null, handle)) {

handle = estimatorHandle;

}

}

return handle;

}

}

In the pipeline, there will be an estimatorHandle specially used to calculate the size of the ByteBuffer to be sent. This estimatorHandle will be initialized when the configuration class in the Channel corresponding to the pipeline is created.

Here the actual type of estimatorHandle is DefaultMessageSizeEstimator#HandleImpl.

public final class DefaultMessageSizeEstimator implements MessageSizeEstimator {

private static final class HandleImpl implements Handle {

private final int unknownSize;

private HandleImpl(int unknownSize) {

this.unknownSize = unknownSize;

}

@Override

public int size(Object msg) {

if (msg instanceof ByteBuf) {

return ((ByteBuf) msg).readableBytes();

}

if (msg instanceof ByteBufHolder) {

return ((ByteBufHolder) msg).content().readableBytes();

}

if (msg instanceof FileRegion) {

return 0;

}

return unknownSize;

}

}

Here we see that the size of ByteBuffer is the number of unread bytes in the Buffer writerIndex - readerIndex.

After we have verified the type of data msg to be written and calculated the size of msg, we can ChannelOutboundBuffer#addMessagewrite msg into ChannelOutboundBuffer (data buffer queue to be sent) through the method.

The final logic of the write event processing is to write the data to be sent into the ChannelOutboundBuffer. Next, let's see what the internal structure of the ChannelOutboundBuffer looks like.

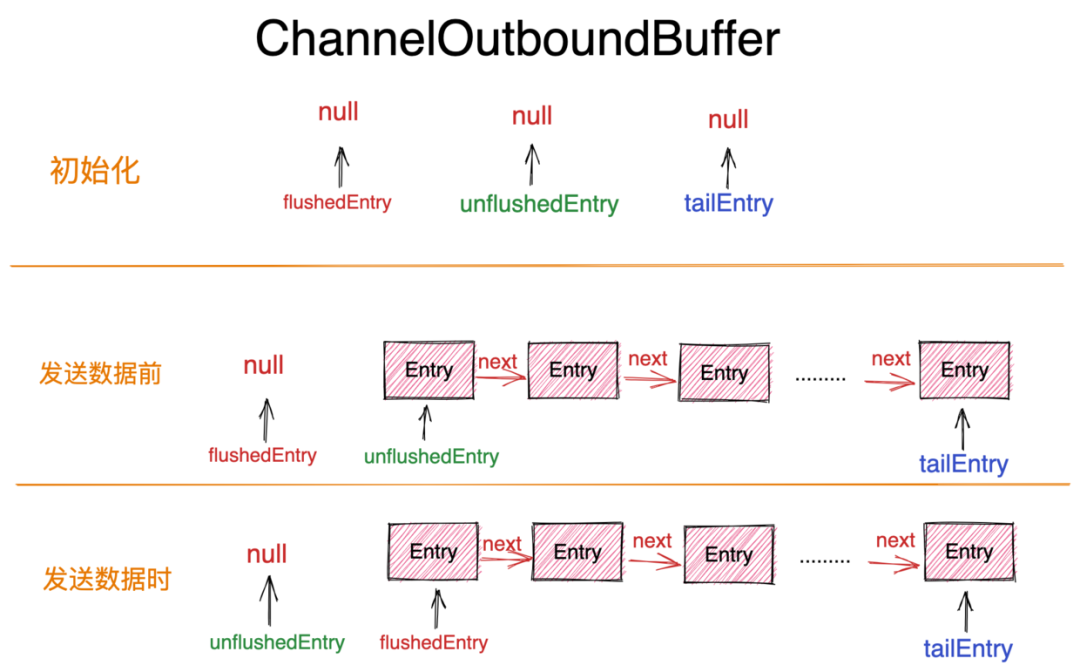

3.3 ChannelOutboundBuffer

ChannelOutboundBuffer is actually a buffer queue with a single linked list structure. The node type in the linked list is Entry. Since the function of ChannelOutboundBuffer in Netty is to cache the network data to be sent by the application, the Encapsulation in the Entry is the network transmission to be written into the Socket. Data-related information, and the ChannelPromise returned to the user in the ChannelHandlerContext#write method. This allows the application to be notified asynchronously after data has been written to the Socket.

In addition, three important pointers are encapsulated in ChannelOutboundBuffer:

-

unflushedEntry: This pointer points to the first Entry of data to be sent in ChannelOutboundBuffer. -

tailEntry: This pointer points to the last Entry of data to be sent in ChannelOutboundBuffer. Through the two pointers unflushedEntry and tailEntry, we can easily locate the Entry range of the data to be sent. -

flushedEntry: When we need to send the buffered data in ChannelOutboundBuffer to the Socket through the flush operation, the flushedEntry pointer will point to the position of the unflushedEntry, so the Entry between the flushedEntry pointer and the tailEntry pointer is the network data that we will send to the Socket.

These three pointers are all null when initialized.

ChannelOutboundBuffer structure.png

3.3.1 Entry

Entry is the node element type in the ChannelOutboundBuffer linked list structure, which encapsulates various information of the data to be sent. ChannelOutboundBuffer is actually the organization and operation of the Entry structure. Therefore, understanding the Entry structure is the basis for understanding the entire ChannelOutboundBuffer operation process.

Next, let's take a look at the information about the data to be sent that the Entry structure specifically encapsulates.

static final class Entry {

//Entry的对象池,用来创建和回收Entry对象

private static final ObjectPool<Entry> RECYCLER = ObjectPool.newPool(new ObjectCreator<Entry>() {

@Override

public Entry newObject(Handle<Entry> handle) {

return new Entry(handle);

}

});

//DefaultHandle用于回收对象

private final Handle<Entry> handle;

//ChannelOutboundBuffer下一个节点

Entry next;

//待发送数据

Object msg;

//msg 转换为 jdk nio 中的byteBuffer

ByteBuffer[] bufs;

ByteBuffer buf;

//异步write操作的future

ChannelPromise promise;

//已发送了多少

long progress;

//总共需要发送多少,不包含entry对象大小。

long total;

//pendingSize表示entry对象在堆中需要的内存总量 待发送数据大小 + entry对象本身在堆中占用内存大小(96)

int pendingSize;

//msg中包含了几个jdk nio bytebuffer

int count = -1;

//write操作是否被取消

boolean cancelled;

}

We see that there are 12 fields in the Entry structure, including 1 static field and 11 instance fields.

Below, the author will introduce the meaning and function of these 12 fields, some of which will be used in later scenarios, here you may have a vague understanding of some fields, but it doesn't matter, how much you can understand here is how much , It doesn’t matter if you don’t understand it. The introduction here is just to make everyone familiar. In the explanation of the following process, the author will refer to these fields again.

-

ObjectPool<Entry> RECYCLER:Entry 的对象池,负责创建管理 Entry 实例,由于 Netty 是一个网络框架,所以 IO 读写就成了它的核心操作,在一个支持高性能高吞吐的网络框架中,会有大量的 IO 读写操作,那么就会导致频繁的创建 Entry 对象。我们都知道,创建一个实例对象以及 GC 回收这些实例对象都是需要性能开销的,那么在大量频繁创建 Entry 对象的场景下,引入对象池来复用创建好的 Entry 对象实例可以抵消掉由频繁创建对象以及GC回收对象所带来的性能开销。

关于对象池的详细内容,感兴趣的同学可以回看下笔者的这篇文章?《详解Recycler对象池的精妙设计与实现》

-

Handle<Entry> handle:默认实现类型为 DefaultHandle ,用于数据发送完毕后,对象池回收 Entry 对象。由对象池 RECYCLER 在创建 Entry 对象的时候传递进来。 -

Entry next:ChannelOutboundBuffer 是一个单链表的结构,这里的 next 指针用于指向当前 Entry 节点的后继节点。 -

Object msg:应用程序待发送的网络数据,这里 msg 的类型为 DirectByteBuffer 或者 FileRegion(用于通过零拷贝的方式网络传输文件)。 -

ByteBuffer[] bufs:这里的 ByteBuffer 类型为 JDK NIO 原生的 ByteBuffer 类型,因为 Netty 最终发送数据是通过 JDK NIO 底层的 SocketChannel 进行发送,所以需要将 Netty 中实现的 ByteBuffer 类型转换为 JDK NIO ByteBuffer 类型。应用程序发送的 ByteBuffer 可能是一个也可能是多个,如果发送多个就用 ByteBuffer[] bufs 封装在 Entry 对象中,如果是一个就用 ByteBuffer buf 封装。 -

int count:表示待发送数据 msg 中一共包含了多少个 ByteBuffer 需要发送。 -

ChannelPromise promise: ChannelFuture returned by ChannelHandlerContext#write asynchronous write operation. When Netty writes the data to be sent into the Socket, it will notify the application to send the result through this ChannelPromise. -

long progress: Indicates the current sending progress, how much data has been sent. -

long total: How much data needs to be sent in total in Entry. Note: This field does not contain the memory footprint of the Entry object. It just indicates the size of the network data to be sent. -

boolean cancelled: Whether the write operation invoked by the application is cancelled. -

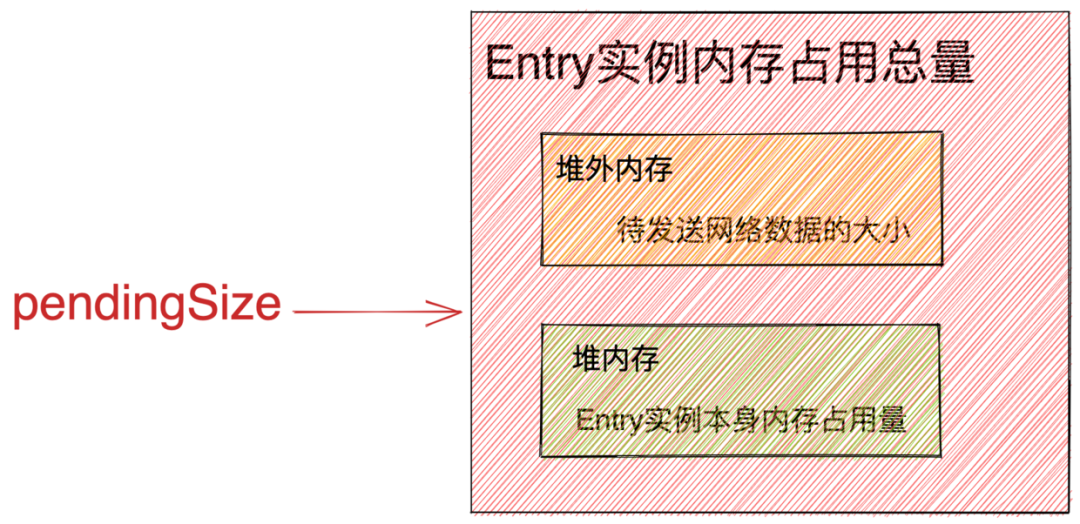

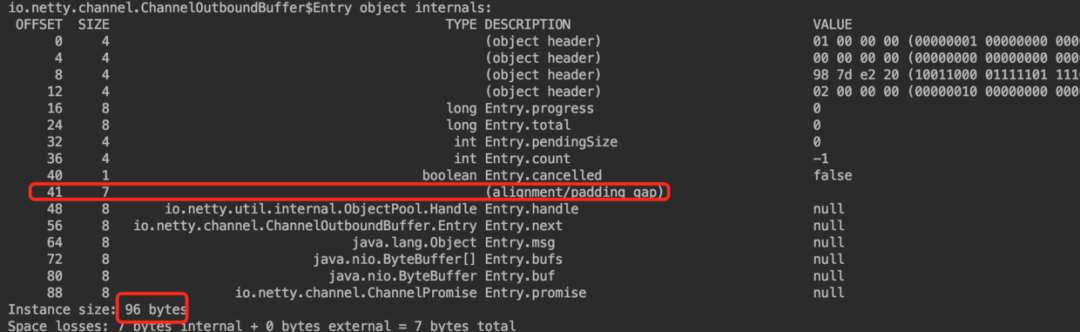

int pendingSize: Indicates the total memory usage of the data to be sent. The amount of data to be sent in memory is divided into two parts:-

The size of the network data to be sent encapsulated in the Entry object.

-

The memory usage of the Entry object itself.

-

Total memory usage of Entry.png

3.3.2 The role of pendingSize

Imagine such a scenario, when the receiving speed and processing speed of network data become slower and slower due to network congestion or high Netty client load, the sliding window of TCP keeps shrinking to reduce the sending of network data until it is 0, while Netty However, the server has a large number of frequent write operations, which are continuously written to ChannelOutboundBuffer.

This leads to the fact that the data cannot be sent out but the Netty server keeps writing data, which will slowly burst the ChannelOutboundBuffer and cause OOM. This mainly refers to the OOM of the off-heap memory, because all the data to be sent wrapped in the ChannelOutboundBuffer are stored in the off-heap memory.

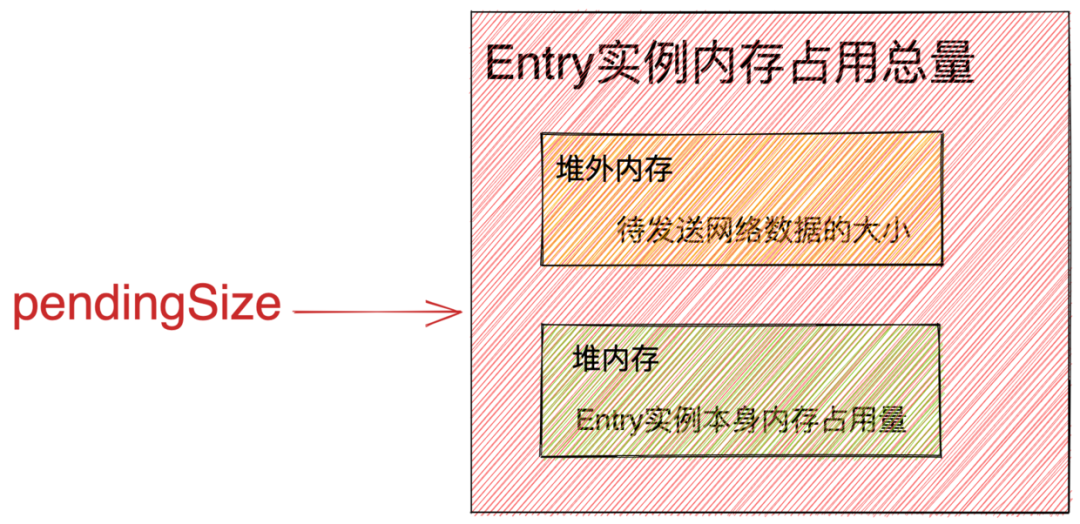

So Netty must limit the total memory usage of the data to be sent in ChannelOutboundBuffer, and it cannot grow infinitely. The high and low watermarks are defined in Netty to indicate the upper and lower limits of the memory usage of the data to be sent in the ChannelOutboundBuffer. Note: The memory here includes both JVM heap memory usage and off-heap memory usage.

-

当待发送数据的内存占用总量超过高水位线的时候,Netty 就会将 NioSocketChannel 的状态标记为不可写状态。否则就可能导致 OOM。

-

当待发送数据的内存占用总量低于低水位线的时候,Netty 会再次将 NioSocketChannel 的状态标记为可写状态。

那么我们用什么记录ChannelOutboundBuffer中的待发送数据的内存占用总量呢?

答案就是本小节要介绍的 pendingSize 字段。在谈到待发送数据的内存占用量时大部分同学普遍都会有一个误解就是只计算待发送数据的大小(msg中包含的字节数) 而忽略了 Entry 实例对象本身在内存中的占用量。

因为 Netty 会将待发送数据封装在 Entry 实例对象中,在大量频繁的写操作中会产生大量的 Entry 实例对象,所以 Entry 实例对象的内存占用是不可忽视的。

否则就会导致明明还没有到达高水位线,但是由于大量的 Entry 实例对象存在,从而发生OOM。

所以 pendingSize 的计算既要包含待发送数据的大小也要包含其 Entry 实例对象的内存占用大小,这样才能准确计算出 ChannelOutboundBuffer 中待发送数据的内存占用总量。

ChannelOutboundBuffer 中所有的 Entry 实例中的 pendingSize 之和就是待发送数据总的内存占用量。

public final class ChannelOutboundBuffer {

//ChannelOutboundBuffer中的待发送数据的内存占用总量

private volatile long totalPendingSize;

}

3.3.3 高低水位线

上小节提到 Netty 为了防止 ChannelOutboundBuffer 中的待发送数据内存占用无限制的增长从而导致 OOM ,所以引入了高低水位线,作为待发送数据内存占用的上限和下限。

那么高低水位线具体设置多大呢 ? 我们来看一下 DefaultChannelConfig 中的配置。

public class DefaultChannelConfig implements ChannelConfig {

//ChannelOutboundBuffer中的高低水位线

private volatile WriteBufferWaterMark writeBufferWaterMark = WriteBufferWaterMark.DEFAULT;

}

public final class WriteBufferWaterMark {

private static final int DEFAULT_LOW_WATER_MARK = 32 * 1024;

private static final int DEFAULT_HIGH_WATER_MARK = 64 * 1024;

public static final WriteBufferWaterMark DEFAULT =

new WriteBufferWaterMark(DEFAULT_LOW_WATER_MARK, DEFAULT_HIGH_WATER_MARK, false);

WriteBufferWaterMark(int low, int high, boolean validate) {

..........省略校验逻辑.........

this.low = low;

this.high = high;

}

}

我们看到 ChannelOutboundBuffer 中的高水位线设置的大小为 64 KB,低水位线设置的是 32 KB。

这也就意味着每个 Channel 中的待发送数据如果超过 64 KB。Channel 的状态就会变为不可写状态。当内存占用量低于 32 KB时,Channel 的状态会再次变为可写状态。

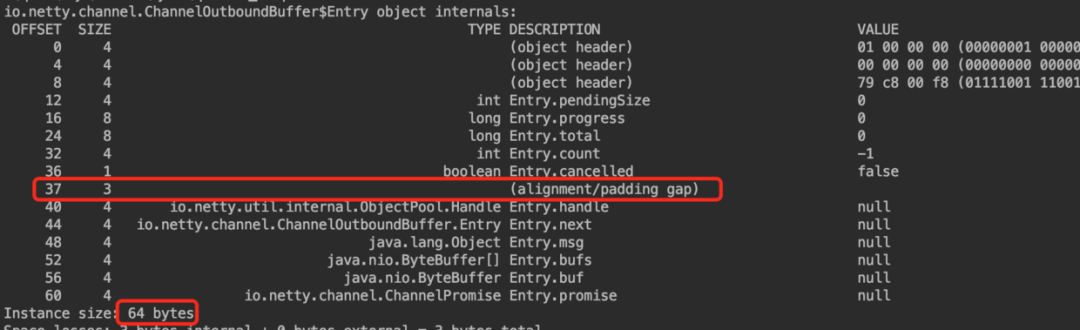

3.3.4 Entry实例对象在JVM中占用内存大小

前边提到 pendingSize 的作用主要是记录当前待发送数据的内存占用总量从而可以预警 OOM 的发生。

待发送数据的内存占用分为:待发送数据 msg 的内存占用大小以及 Entry 对象本身在JVM中的内存占用。

那么 Entry 对象本身的内存占用我们该如何计算呢?

要想搞清楚这个问题,大家需要先了解一下 Java 对象内存布局的相关知识。关于这部分背景知识,笔者已经在 ?《一文聊透对象在JVM中的内存布局,以及内存对齐和压缩指针的原理及应用》这篇文章中给出了详尽的阐述,想深入了解这块的同学可以看下这篇文章。

这里笔者只从这篇文章中提炼一些关于计算 Java 对象占用内存大小相关的内容。

在关于 Java 对象内存布局这篇文章中我们提到,对于Java普通对象来说内存中的布局由:对象头 + 实例数据区 + Padding,这三部分组成。

其中对象头由存储对象运行时信息的 MarkWord 以及指向对象类型元信息的类型指针组成。

MarkWord 用来存放:hashcode,GC 分代年龄,锁状态标志,线程持有的锁,偏向线程 Id,偏向时间戳等。在 32 位操作系统和 64 位操作系统中 MarkWord 分别占用 4B 和 8B 大小的内存。

Java 对象头中的类型指针还有实例数据区的对象引用,在64 位系统中开启压缩指针的情况下(-XX:+UseCompressedOops)占用 4B 大小。在关闭压缩指针的情况下(-XX:-UseCompressedOops)占用 8B 大小。

实例数据区用于存储 Java 类中定义的实例字段,包括所有父类中的实例字段以及对象引用。

在实例数据区中对象字段之间的排列以及内存对齐需要遵循三个字段重排列规则:

-

规则1:如果一个字段占用X个字节,那么这个字段的偏移量OFFSET需要对齐至NX。 -

规则2:在开启了压缩指针的 64 位 JVM 中,Java 类中的第一个字段的 OFFSET 需要对齐至4N,在关闭压缩指针的情况下类中第一个字段的OFFSET需要对齐至8N。 -

规则3:JVM 默认分配字段的顺序为:long / double,int / float,short / char,byte / boolean,oops(Ordianry Object Point 引用类型指针),并且父类中定义的实例变量会出现在子类实例变量之前。当设置JVM参数-XX +CompactFields时(默认),占用内存小于 long / double 的字段会允许被插入到对象中第一个 long / double 字段之前的间隙中,以避免不必要的内存填充。

还有一个重要规则就是 Java 虚拟机堆中对象的起始地址需要对齐至 8 的倍数(可由JVM参数 -XX:ObjectAlignmentInBytes 控制,默认为 8 )。

在了解上述字段排列以及对象之间的内存对齐规则后,我们分别以开启压缩指针和关闭压缩指针两种情况,来对 Entry 对象的内存布局进行分析并计算对象占用内存大小。

static final class Entry {

.............省略static字段RECYCLER.........

//DefaultHandle用于回收对象

private final Handle<Entry> handle;

//ChannelOutboundBuffer下一个节点

Entry next;

//待发送数据

Object msg;

//msg 转换为 jdk nio 中的byteBuffer

ByteBuffer[] bufs;

ByteBuffer buf;

//异步write操作的future

ChannelPromise promise;

//已发送了多少

long progress;

//总共需要发送多少,不包含entry对象大小。

long total;

//pendingSize表示entry对象在堆中需要的内存总量 待发送数据大小 + entry对象本身在堆中占用内存大小(96)

int pendingSize;

//msg中包含了几个jdk nio bytebuffer

int count = -1;

//write操作是否被取消

boolean cancelled;

}

我们看到 Entry 对象中一共有 11 个实例字段,其中 2 个 long 型字段,2 个 int 型字段,1 个 boolean 型字段,6 个对象引用。

默认情况下JVM参数 -XX +CompactFields 是开启的。

开启指针压缩 -XX:+UseCompressedOops

Entry 对象的内存布局中开头先是 8 个字节的 MarkWord,然后是 4 个字节的类型指针(开启压缩指针)。

在实例数据区中对象的排列规则需要符合规则3,也就是字段之间的排列顺序需要遵循 long > int > boolean > oop(对象引用)。

根据规则 3 Entry对象实例数据区第一个字段应该是 long progress,但根据规则1 long 型字段的 OFFSET 需要对齐至 8 的倍数,并且根据 规则2 在开启压缩指针的情况下,对象的第一个字段 OFFSET 需要对齐至 4 的倍数。所以字段long progress 的 OFFET = 16,这就必然导致了在对象头与字段 long progress 之间需要由 4 字节的字节填充(OFFET = 12处发生字节填充)。

但是 JVM 默认开启了 -XX +CompactFields,根据 规则3 占用内存小于 long / double 的字段会允许被插入到对象中第一个 long / double 字段之前的间隙中,以避免不必要的内存填充。

所以位于后边的字段 int pendingSize 插入到了 OFFET = 12 位置处,避免了不必要的字节填充。

在 Entry 对象的实例数据区中紧接着基础类型字段后面跟着的就是 6 个对象引用字段(开启压缩指针占用 4 个字节)。

大家一定注意到 OFFSET = 37 处本应该存放的是字段 private final Handle<Entry> handle 但是却被填充了 3 个字节。这是为什么呢?

According to the field rearrangement rule 1: the reference field private final Handle<Entry> handle occupies 4 bytes (when the compressed pointer is enabled), so it needs to be aligned to a multiple of 4. So 3 bytes need to be padded so that the reference field private final Handle<Entry> handle is at OFFSET = 40.

According to the above rules, it is finally calculated that the entry object occupies 64 bytes of memory in the heap when the compressed pointer is enabled.

Turn off pointer compression -XX:-UseCompressedOops

After analyzing the memory layout of the Entry object when the compressed pointer is turned on, I think everyone now has a clearer understanding of the three rules for field rearrangement introduced earlier, so let's analyze on this basis when the compressed pointer is turned off. The memory layout of the Entry object in the case.

First of all, the beginning of the Entry object in the memory layout is still an object header consisting of 8 bytes of MarkWord and 8 bytes of type pointer (closed compression pointer).

We see that byte stuffing occurs at OFFSET = 41. The reason is that when the compressed pointer is turned off, the memory size occupied by the object reference becomes 8 bytes. According to rule 1: OFFSET of the reference field needs to be aligned to a multiple of private final Handle<Entry> handle 8 , so 7 bytes need to be filled before the reference field, so that private final Handle<Entry> handle OFFET of the reference field = 48.

The three rules of comprehensive field rearrangement are finally calculated. When the compression pointer is turned off, the size of the Entry object in the heap is 96 bytes.

3.3.5 Buffer the data to be sent in ChannelOutboundBuffer

After introducing the basic structure of ChannelOutboundBuffer, the following is the last step of Netty's processing of write events. Let's see how the user's data to be sent is added to ChannelOutboundBuffer.

public void addMessage(Object msg, int size, ChannelPromise promise) {

Entry entry = Entry.newInstance(msg, size, total(msg), promise);

if (tailEntry == null) {

flushedEntry = null;

} else {

Entry tail = tailEntry;

tail.next = entry;

}

tailEntry = entry;

if (unflushedEntry == null) {

unflushedEntry = entry;

}

incrementPendingOutboundBytes(entry.pendingSize, false);

}

3.3.5.1 Create an Entry object to encapsulate the data information to be sent

Through the previous introduction, we learned that when the user calls ctx.write(msg), the write event starts to propagate forward in the pipeline from the current ChannelHandler, and finally writes the data to be sent to the write buffer corresponding to the channel in the HeadContext Zone ChannelOutboundBuffer.

And ChannelOutboundBuffer is a singly linked list composed of Entry structure, which encapsulates various information of the data to be sent by the user.

Here, first of all, we need to create an Entry object for the data to be sent, and when we introduced the object pool in the article "Explaining the Exquisite Design and Implementation of the Recycler Object Pool" , we mentioned that Netty, as a high-performance and high-throughput network framework, has to face massive IO processing operations. In this scenario, a large number of Entry objects will be created frequently, and the creation and recycling of objects will require performance overhead. Especially in the face of a large number of frequent object creation scenarios, this overhead will be further reduced. Zoom in, so Netty introduces an object pool to manage Entry object instances to avoid the frequent creation of Entry objects and the performance overhead caused by GC.

Since the Entry object has been taken over by the object pool, it cannot be created directly outside the object pool. Its constructor is a private type, and a static method newInstance is provided for external threads to obtain Entry objects from the object pool. This is also mentioned in the article "Explaining the Exquisite Design and Implementation of Recycler Object Pool" when introducing the design of pooled objects.

static final class Entry {

//静态变量引用类型地址 这个是在Klass Point(类型指针)中定义 8字节(开启指针压缩 为4字节)

private static final ObjectPool<Entry> RECYCLER = ObjectPool.newPool(new ObjectCreator<Entry>() {

@Override

public Entry newObject(Handle<Entry> handle) {

return new Entry(handle);

}

});

//Entry对象只能通过对象池获取,不可外部自行创建

private Entry(Handle<Entry> handle) {

this.handle = handle;

}

//不考虑指针压缩的大小 entry对象在堆中占用的内存大小为96

//如果开启指针压缩,entry对象在堆中占用的内存大小 会是64

static final int CHANNEL_OUTBOUND_BUFFER_ENTRY_OVERHEAD =

SystemPropertyUtil.getInt("io.netty.transport.outboundBufferEntrySizeOverhead", 96);

static Entry newInstance(Object msg, int size, long total, ChannelPromise promise) {

Entry entry = RECYCLER.get();

entry.msg = msg;

//待发数据数据大小 + entry对象大小

entry.pendingSize = size + CHANNEL_OUTBOUND_BUFFER_ENTRY_OVERHEAD;

entry.total = total;

entry.promise = promise;

return entry;

}

.......................省略................

}

-

Obtain the Entry object instance from the object pool through the object pool RECYCLER held in the pooled object Entry.

-

The data msg (DirectByteBuffer) to be sent by the user, the size of the data to be sent: total, the channelFuture of the data to be sent this time, and the pendingSize of the Entry object are all encapsulated in the corresponding fields of the Entry object instance.

这里需要特殊说明一点的是关于 pendingSize 的计算方式,之前我们提到 pendingSize 中所计算的内存占用一共包含两部分:

-

待发送网络数据大小

-

Entry 对象本身在内存中的占用量

Entry内存占用总量.png

而在《3.3.4 Entry实例对象在JVM中占用内存大小》小节中我们介绍到,Entry 对象在内存中的占用大小在开启压缩指针的情况下(-XX:+UseCompressedOops)占用 64 字节,在关闭压缩指针的情况下(-XX:-UseCompressedOops)占用 96 字节。

字段 CHANNEL_OUTBOUND_BUFFER_ENTRY_OVERHEAD 表示的就是 Entry 对象在内存中的占用大小,Netty这里默认是 96 字节,当然如果我们的应用程序开启了指针压缩,我们可以通过 JVM 启动参数 -D io.netty.transport.outboundBufferEntrySizeOverhead 指定为 64 字节。

3.3.5.2 将Entry对象添加进ChannelOutboundBuffer中

ChannelOutboundBuffer结构.png

if (tailEntry == null) {

flushedEntry = null;

} else {

Entry tail = tailEntry;

tail.next = entry;

}

tailEntry = entry;

if (unflushedEntry == null) {

unflushedEntry = entry;

}

在《3.3 ChannelOutboundBuffer》小节一开始,我们介绍了 ChannelOutboundBuffer 中最重要的三个指针,这里涉及到的两个指针分别是:

-

unflushedEntry:指向 ChannelOutboundBuffer 中第一个未被 flush 进 Socket 的待发送数据。用来指示 ChannelOutboundBuffer 的第一个节点。 -

tailEntry:指向 ChannelOutboundBuffer 中最后一个节点。

通过 unflushedEntry 和 tailEntry 可以定位出待发送数据的范围。Channel 中的每一次 write 事件,最终都会将待发送数据插入到 ChannelOutboundBuffer 的尾结点处。

3.3.5.3 incrementPendingOutboundBytes

在将 Entry 对象添加进 ChannelOutboundBuffer 之后,就需要更新用于记录当前 ChannelOutboundBuffer 中关于待发送数据所占内存总量的水位线指示。

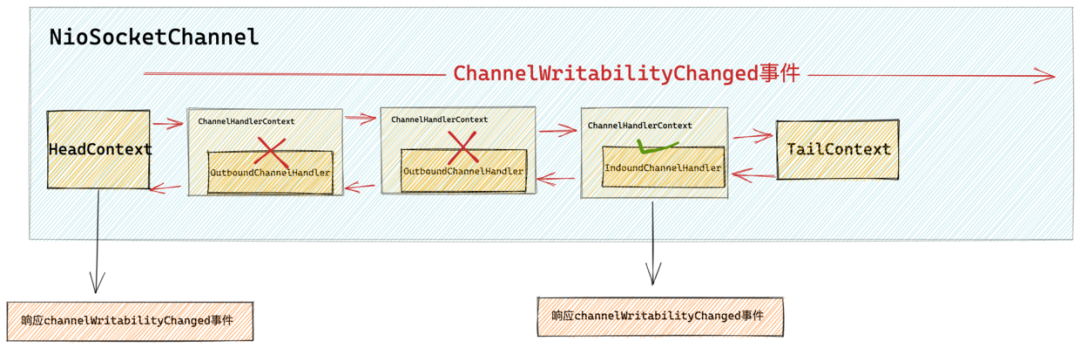

如果更新后的水位线超过了 Netty 指定的高水位线 DEFAULT_HIGH_WATER_MARK = 64 * 1024,则需要将当前 Channel 的状态设置为不可写,并在 pipeline 中传播 ChannelWritabilityChanged 事件,注意该事件是一个 inbound 事件。

响应channelWritabilityChanged事件.png

public final class ChannelOutboundBuffer {

//ChannelOutboundBuffer中的待发送数据的内存占用总量 : 所有Entry对象本身所占用内存大小 + 所有待发送数据的大小

private volatile long totalPendingSize;

//水位线指针

private static final AtomicLongFieldUpdater<ChannelOutboundBuffer> TOTAL_PENDING_SIZE_UPDATER =

AtomicLongFieldUpdater.newUpdater(ChannelOutboundBuffer.class, "totalPendingSize");

private void incrementPendingOutboundBytes(long size, boolean invokeLater) {

if (size == 0) {

return;

}

//更新总共待写入数据的大小

long newWriteBufferSize = TOTAL_PENDING_SIZE_UPDATER.addAndGet(this, size);

//如果待写入的数据 大于 高水位线 64 * 1024 则设置当前channel为不可写 由用户自己决定是否继续写入

if (newWriteBufferSize > channel.config().getWriteBufferHighWaterMark()) {

//设置当前channel状态为不可写,并触发fireChannelWritabilityChanged事件

setUnwritable(invokeLater);

}

}

}

volatile 关键字在 Java 内存模型中只能保证变量的可见性,以及禁止指令重排序。但无法保证多线程更新的原子性,这里我们可以通过AtomicLongFieldUpdater 来帮助 totalPendingSize 字段实现原子性的更新。

// 0表示channel可写,1表示channel不可写

private volatile int unwritable;

private static final AtomicIntegerFieldUpdater<ChannelOutboundBuffer> UNWRITABLE_UPDATER =

AtomicIntegerFieldUpdater.newUpdater(ChannelOutboundBuffer.class, "unwritable");

private void setUnwritable(boolean invokeLater) {

for (;;) {

final int oldValue = unwritable;

final int newValue = oldValue | 1;

if (UNWRITABLE_UPDATER.compareAndSet(this, oldValue, newValue)) {

if (oldValue == 0) {

//触发fireChannelWritabilityChanged事件 表示当前channel变为不可写

fireChannelWritabilityChanged(invokeLater);

}

break;

}

}

}

当 ChannelOutboundBuffer 中的内存占用水位线 totalPendingSize 已经超过高水位线时,调用该方法将当前 Channel 的状态设置为不可写状态。

unwritable == 0 表示当前channel可写,unwritable == 1 表示当前channel不可写。

channel 可以通过调用 isWritable 方法来判断自身当前状态是否可写。

public boolean isWritable() {

return unwritable == 0;

}

当 Channel 的状态是首次从可写状态变为不可写状态时,就会在 channel 对应的 pipeline 中传播 ChannelWritabilityChanged 事件。

private void fireChannelWritabilityChanged(boolean invokeLater) {

final ChannelPipeline pipeline = channel.pipeline();

if (invokeLater) {

Runnable task = fireChannelWritabilityChangedTask;

if (task == null) {

fireChannelWritabilityChangedTask = task = new Runnable() {

@Override

public void run() {

pipeline.fireChannelWritabilityChanged();

}

};

}

channel.eventLoop().execute(task);

} else {

pipeline.fireChannelWritabilityChanged();

}

}

用户可以在自定义的 ChannelHandler 中实现 channelWritabilityChanged 事件回调方法,来针对 Channel 的可写状态变化做出不同的处理。

public class EchoServerHandler extends ChannelInboundHandlerAdapter {

@Override

public void channelWritabilityChanged(ChannelHandlerContext ctx) throws Exception {

if (ctx.channel().isWritable()) {

...........当前channel可写.........

} else {

...........当前channel不可写.........

}

}

}

So far, the author has introduced the propagation of the write event in the pipeline. Next, let's look at the processing process of another important flush event.

4. flush

From Netty's processing of the write event, we can see that after the user calls the ctx.write(msg) method, Netty only temporarily writes the data to be sent by the user to the ChannelOutboundBuffer corresponding to the channel to be sent, but does not No data will be written to the Socket.

And when a read event is completed, we will call the ctx.flush() method to write the data to be sent in the ChannelOutboundBuffer into the send buffer in the Socket, so as to send the data out.

public class EchoServerHandler extends ChannelInboundHandlerAdapter {

@Override

public void channelReadComplete(ChannelHandlerContext ctx) {

//本次OP_READ事件处理完毕

ctx.flush();

}

}

4.1 Propagation of flush events

pipeline structure.png

The flush event and the write event are both oubound events, so their propagation directions are propagated in the pipeline from back to front.

There are also two methods to trigger flush event propagation:

-

channelHandlerContext.flush(): The flush event will propagate forward in the pipeline from the current channelHandler until the headContext. -

channelHandlerContext.channel().flush(): The flush event will propagate forward from the tailContext of the pipeline until the headContext.

abstract class AbstractChannelHandlerContext implements ChannelHandlerContext, ResourceLeakHint {

@Override

public ChannelHandlerContext flush() {

//向前查找覆盖flush方法的Outbound类型的ChannelHandler

final AbstractChannelHandlerContext next = findContextOutbound(MASK_FLUSH);

//获取执行ChannelHandler的executor,在初始化pipeline的时候设置,默认为Reactor线程

EventExecutor executor = next.executor();

if (executor.inEventLoop()) {

next.invokeFlush();

} else {

Tasks tasks = next.invokeTasks;

if (tasks == null) {

next.invokeTasks = tasks = new Tasks(next);

}

safeExecute(executor, tasks.invokeFlushTask, channel().voidPromise(), null, false);

}

return this;

}

}

The logic here is basically the same as that of the write event propagation. It is also the first to find the first ChannelOutboundHandler type and implement the flush event callback method from the pipeline forward through the findContextOutbound(MASK_FLUSH) method from the current ChannelHandler. Note that the execution qualification mask passed in here is MASK_FLUSH.

The thread that executes the event callback method in ChannelHandler must pass pipeline#addLast(EventExecutorGroup group, ChannelHandler... handlers)the executor specified for ChannelHandler. If not specified, the default executor is the reactor thread bound to the channel.

If the current thread is not the executor specified by ChannelHandler, you need to encapsulate the call of the invokeFlush() method into a Task and hand it over to the specified executor for execution.

4.1.1 Trigger the flush method callback of nextChannelHandler

private void invokeFlush() {

if (invokeHandler()) {

invokeFlush0();

} else {

//如果该ChannelHandler并没有加入到pipeline中则继续向前传递flush事件

flush();

}

}

This is the same as the related processing of the write event. First, the invokeHandler() method needs to be called to determine whether the nextChannelHandler is correctly initialized in the pipeline.

If the handlerAdded method in nextChannelHandler has not been called back, then the nextChannelHandler can only be skipped here, and the ChannelHandlerContext#flush method is called to continue propagating the flush event forward.

If the handlerAdded method in nextChannelHandler has been called back, it means that nextChannelHandler has been correctly initialized in the pipeline, then directly call the flush event callback method of nextChannelHandler.

private void invokeFlush0() {

try {

((ChannelOutboundHandler) handler()).flush(this);

} catch (Throwable t) {

invokeExceptionCaught(t);

}

}

The difference from the write event processing here is that when the flush callback of nextChannelHandler is invoked and an exception occurs, the exceptionCaught callback of nextChannelHandler will be triggered.

private void invokeExceptionCaught(final Throwable cause) {

if (invokeHandler()) {

try {

handler().exceptionCaught(this, cause);

} catch (Throwable error) {

if (logger.isDebugEnabled()) {

logger.debug(....相关日志打印......);

} else if (logger.isWarnEnabled()) {

logger.warn(...相关日志打印......));

}

}

} else {

fireExceptionCaught(cause);

}

}

While other outbound events such as write events are abnormal during the propagation process, they only call back to notify the related ChannelFuture. Does not trigger the propagation of the exceptionCaught event.

4.2 Processing of flush event

Client channel pipeline structure.png

Finally, the flush event will propagate forward in the pipeline to the HeadContext, and call the unsafe class of the channel in the HeadContext to complete the final processing logic of the flush event.

final class HeadContext extends AbstractChannelHandlerContext {

@Override

public void flush(ChannelHandlerContext ctx) {

unsafe.flush();

}

}

The following is where Netty actually handles the flush event.

protected abstract class AbstractUnsafe implements Unsafe {

@Override

public final void flush() {

assertEventLoop();

ChannelOutboundBuffer outboundBuffer = this.outboundBuffer;

//channel以关闭

if (outboundBuffer == null) {

return;

}

//将flushedEntry指针指向ChannelOutboundBuffer头结点,此时变为即将要flush进Socket的数据队列

outboundBuffer.addFlush();

//将待写数据写进Socket

flush0();

}

}

4.2.1 ChannelOutboundBuffer#addFlush

ChannelOutboundBuffer structure.png

Here is the time to actually send data. In the addFlush method, the flushedEntry pointer will point to the first unflushed Entry node indicated by the unflushedEntry pointer. And set the unflushedEntry pointer to NULL, ready to start the process of flushing and sending data.

At this time, the ChannelOutboundBuffer is changed from the buffer queue of the data to be sent to the data queue about to be flushed into the Socket

In this way, the Entry node between flushedEntry and tailEntry is the range of data to be sent in this flush operation.

public void addFlush() {

Entry entry = unflushedEntry;

if (entry != null) {

if (flushedEntry == null) {

flushedEntry = entry;

}

do {

flushed ++;

//如果当前entry对应的write操作被用户取消,则释放msg,并降低channelOutboundBuffer水位线

if (!entry.promise.setUncancellable()) {

int pending = entry.cancel();

decrementPendingOutboundBytes(pending, false, true);

}

entry = entry.next;

} while (entry != null);

// All flushed so reset unflushedEntry

unflushedEntry = null;

}

}

When the process of sending data by flush starts, the process of sending data cannot be canceled. Before that, we can cancel the process of sending data through ChannelPromise.

Therefore, it is necessary to set the ChannelPromise wrapped by all Entry nodes in the ChannelOutboundBuffer as non-cancellable.

public interface Promise<V> extends Future<V> {

/**

* 设置当前future为不可取消状态

*

* 返回true的情况:

* 1:成功的将future设置为uncancellable

* 2:当future已经成功完成

*

* 返回false的情况:

* 1:future已经被取消,则不能在设置 uncancellable 状态

*

*/

boolean setUncancellable();

}

If the setUncancellable() method here returns false, it means that the user has canceled the ChannelPromise before that, and then it is necessary to call the entry.cancel() method to release the off-heap memory allocated for the data msg to be sent.

static final class Entry {

//write操作是否被取消

boolean cancelled;

int cancel() {

if (!cancelled) {

cancelled = true;

int pSize = pendingSize;

// release message and replace with an empty buffer

ReferenceCountUtil.safeRelease(msg);

msg = Unpooled.EMPTY_BUFFER;

pendingSize = 0;

total = 0;

progress = 0;

bufs = null;

buf = null;

return pSize;

}

return 0;

}

}

当 Entry 对象被取消后,就需要减少 ChannelOutboundBuffer 的内存占用总量的水位线 totalPendingSize。

private static final AtomicLongFieldUpdater<ChannelOutboundBuffer> TOTAL_PENDING_SIZE_UPDATER =

AtomicLongFieldUpdater.newUpdater(ChannelOutboundBuffer.class, "totalPendingSize");

//水位线指针.ChannelOutboundBuffer中的待发送数据的内存占用总量 : 所有Entry对象本身所占用内存大小 + 所有待发送数据的大小

private volatile long totalPendingSize;

private void decrementPendingOutboundBytes(long size, boolean invokeLater, boolean notifyWritability) {

if (size == 0) {

return;

}

long newWriteBufferSize = TOTAL_PENDING_SIZE_UPDATER.addAndGet(this, -size);

if (notifyWritability && newWriteBufferSize < channel.config().getWriteBufferLowWaterMark()) {

setWritable(invokeLater);

}

}

当更新后的水位线低于低水位线 DEFAULT_LOW_WATER_MARK = 32 * 1024 时,就将当前 channel 设置为可写状态。

private void setWritable(boolean invokeLater) {

for (;;) {

final int oldValue = unwritable;

final int newValue = oldValue & ~1;

if (UNWRITABLE_UPDATER.compareAndSet(this, oldValue, newValue)) {

if (oldValue != 0 && newValue == 0) {

fireChannelWritabilityChanged(invokeLater);

}

break;

}

}

}

当 Channel 的状态是第一次从不可写状态变为可写状态时,Netty 会在 pipeline 中再次触发 ChannelWritabilityChanged 事件的传播。

响应channelWritabilityChanged事件.png

4.2.2 发送数据前的最后检查---flush0

flush0 方法这里主要做的事情就是检查当 channel 的状态是否正常,如果 channel 状态一切正常,则调用 doWrite 方法发送数据。

protected abstract class AbstractUnsafe implements Unsafe {

//是否正在进行flush操作

private boolean inFlush0;

protected void flush0() {

if (inFlush0) {

// Avoid re-entrance

return;

}

final ChannelOutboundBuffer outboundBuffer = this.outboundBuffer;

//channel已经关闭或者outboundBuffer为空

if (outboundBuffer == null || outboundBuffer.isEmpty()) {

return;

}

inFlush0 = true;

if (!isActive()) {

try {

if (!outboundBuffer.isEmpty()) {

if (isOpen()) {

//当前channel处于disConnected状态 通知promise 写入失败 并触发channelWritabilityChanged事件

outboundBuffer.failFlushed(new NotYetConnectedException(), true);

} else {

//当前channel处于关闭状态 通知promise 写入失败 但不触发channelWritabilityChanged事件

outboundBuffer.failFlushed(newClosedChannelException(initialCloseCause, "flush0()"), false);

}

}

} finally {

inFlush0 = false;

}

return;

}

try {

//写入Socket

doWrite(outboundBuffer);

} catch (Throwable t) {

handleWriteError(t);

} finally {

inFlush0 = false;

}

}

}

-

outboundBuffer == null || outboundBuffer.isEmpty():如果 channel 已经关闭了或者对应写缓冲区中没有任何数据,那么就停止发送流程,直接 return。 -

!isActive():如果当前channel处于非活跃状态,则需要调用outboundBuffer#failFlushed通知 ChannelOutboundBuffer 中所有待发送操作对应的 channelPromise 向用户线程报告发送失败。并将待发送数据 Entry 对象从 ChannelOutboundBuffer 中删除,并释放待发送数据空间,回收 Entry 对象实例。

还记得我们在?《Netty如何高效接收网络连接》一文中提到过的 NioSocketChannel 的 active 状态有哪些条件吗??

@Override

public boolean isActive() {

SocketChannel ch = javaChannel();

return ch.isOpen() && ch.isConnected();

}

NioSocketChannel 处于 active 状态的条件必须是当前 NioSocketChannel 是 open 的同时处于 connected 状态。

-

!isActive() && isOpen(): Indicates that the current channel is in the disConnected state. At this time, the exception type notified to the user channelPromise is NotYetConnectedException, and all the off-heap memory occupied by the data to be sent is released. If the memory usage is lower than the low water mark at this time, set the channel to writable state, and trigger the channelWritabilityChanged event.

When the channel is in the disConnected state, users can perform write operations but cannot perform flush operations.

-

!isActive() && !isOpen(): Indicates that the current channel is closed. At this time, the exception type notified to the user of the channelPromise is newClosedChannelException. Because the channel has been closed, the channelWritabilityChanged event will not be triggered here. -

When these abnormal state checks of the channel pass, the doWrite method is called to write the data to be sent in the ChannelOutboundBuffer into the underlying Socket.

4.2.2.1 ChannelOutboundBuffer#failFlushed

public final class ChannelOutboundBuffer {

private boolean inFail;

void failFlushed(Throwable cause, boolean notify) {

if (inFail) {

return;

}

try {

inFail = true;

for (;;) {

if (!remove0(cause, notify)) {

break;

}

}

} finally {

inFail = false;

}

}

}

This method is used to delete all the Entry object nodes between flushedEntry and tailEntry in the ChannelOutboundBuffer if the current channel is found to be inactive when Netty is sending data, release the memory space occupied by the sent data, and recycle the Entry object at the same time instance.

4.2.2.2 ChannelOutboundBuffer#remove0

private boolean remove0(Throwable cause, boolean notifyWritability) {

Entry e = flushedEntry;

if (e == null) {

//清空当前reactor线程缓存的所有待发送数据

clearNioBuffers();

return false;

}

Object msg = e.msg;

ChannelPromise promise = e.promise;

int size = e.pendingSize;

//从channelOutboundBuffer中删除该Entry节点

removeEntry(e);

if (!e.cancelled) {

// only release message, fail and decrement if it was not canceled before.

//释放msg所占用的内存空间

ReferenceCountUtil.safeRelease(msg);

//编辑promise发送失败,并通知相应的Lisener

safeFail(promise, cause);

//由于msg得到释放,所以需要降低channelOutboundBuffer中的内存占用水位线,并根据notifyWritability决定是否触发ChannelWritabilityChanged事件

decrementPendingOutboundBytes(size, false, notifyWritability);

}

// recycle the entry

//回收Entry实例对象

e.recycle();

return true;

}

When an Entry node needs to be cleared from the ChannelOutboundBuffer, Netty needs to release the memory space occupied by the sent data msg wrapped in the Entry node. Mark the corresponding promise as failure and notify the corresponding listener. Since the msg is released, it is necessary to reduce the memory usage watermark in the channelOutboundBuffer, and decide boolean notifyWritability whether to trigger the ChannelWritabilityChanged event. Finally, the Entry instance needs to be recycled to the Recycler object pool.

5. Finally start actually sending data!

When we come here, we have really entered the core processing logic of Netty sending data. In the article " How Netty Receives Network Data Efficiently" , the author introduces the core process of Netty reading data in detail. Netty will continue to circulate in a read loop Read the data in the Socket until the data is read or the number of reads is full 16 times. When the loop reads 16 times and the reading is not completed, Netty cannot continue to read, because Netty must ensure that the Reactor thread can be evenly read. It handles the IO events registered in all Channels above it. The remaining unread data waits until the next read loop begins to read.

In addition, before the start of each read loop, Netty will allocate a DirectByteBuffer with an initial size of 2048 to load the data read from the Socket. The amount is used to determine whether to expand or shrink the DirectByteBuffer. The purpose is to allocate a DirectByteBuffer with an appropriate capacity for the next read loop.

In fact, the core logic of Netty's processing of sending data is the same as that of reading data. Here you can compare these two articles.

但发送数据的细节会多一些,也会更复杂一些,由于这块逻辑整体稍微比较复杂,所以我们接下来还是分模块进行解析:

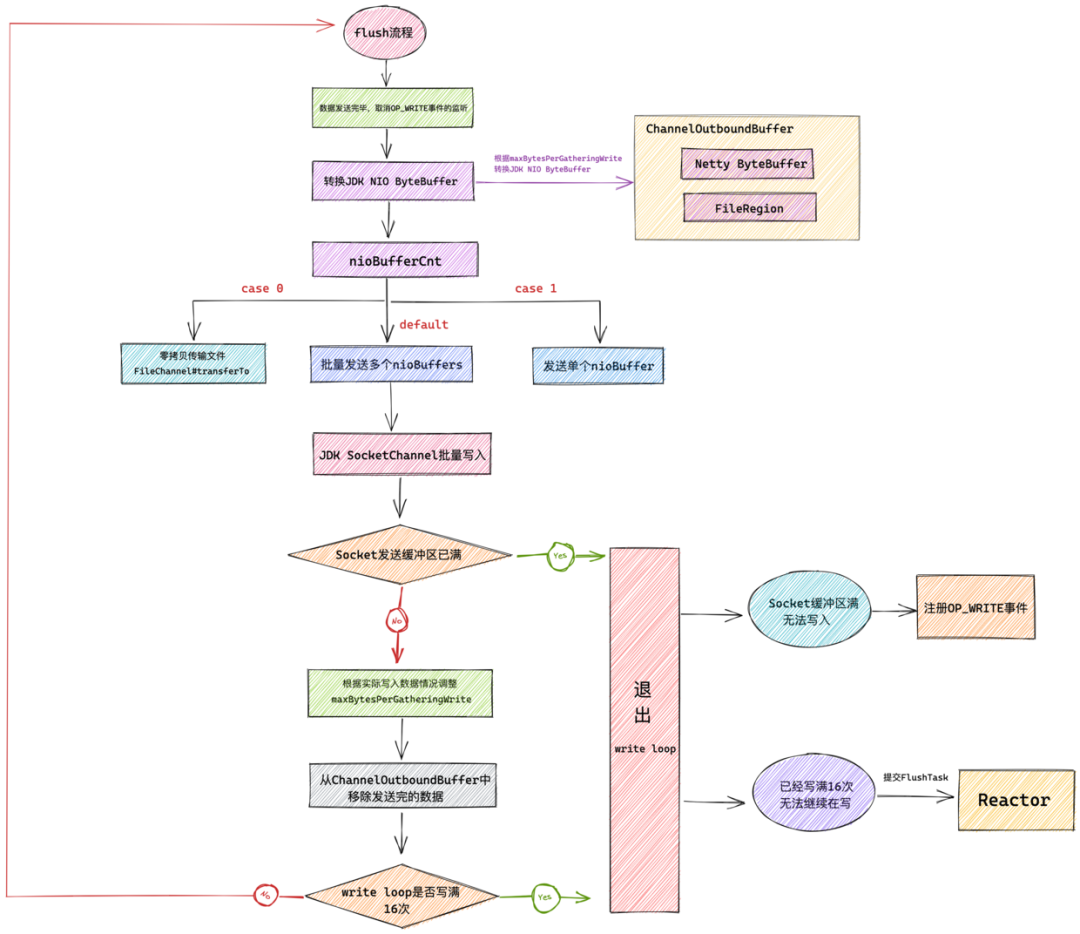

5.1 发送数据前的准备工作

@Override

protected void doWrite(ChannelOutboundBuffer in) throws Exception {

//获取NioSocketChannel中封装的jdk nio底层socketChannel

SocketChannel ch = javaChannel();

//最大写入次数 默认为16 目的是为了保证SubReactor可以平均的处理注册其上的所有Channel

int writeSpinCount = config().getWriteSpinCount();

do {

if (in.isEmpty()) {

// 如果全部数据已经写完 则移除OP_WRITE事件并直接退出writeLoop

clearOpWrite();

return;

}

// SO_SNDBUF设置的发送缓冲区大小 * 2 作为 最大写入字节数 293976 = 146988 << 1

int maxBytesPerGatheringWrite = ((NioSocketChannelConfig) config).getMaxBytesPerGatheringWrite();

// 将ChannelOutboundBuffer中缓存的DirectBuffer转换成JDK NIO 的 ByteBuffer

ByteBuffer[] nioBuffers = in.nioBuffers(1024, maxBytesPerGatheringWrite);

// ChannelOutboundBuffer中总共的DirectBuffer数

int nioBufferCnt = in.nioBufferCount();

switch (nioBufferCnt) {

.........向底层jdk nio socketChannel发送数据.........

}

} while (writeSpinCount > 0);

............处理本轮write loop未写完的情况.......

}

这部分内容为 Netty 开始发送数据之前的准备工作:

5.1.1 获取write loop最大发送循环次数

从当前 NioSocketChannel 的配置类 NioSocketChannelConfig 中获取 write loop 最大循环写入次数,默认为 16。但也可以通过下面的方式进行自定义设置。

ServerBootstrap b = new ServerBootstrap();

b.group(bossGroup, workerGroup)

.......

.childOption(ChannelOption.WRITE_SPIN_COUNT,自定义数值)

5.1.2 处理在一轮write loop中就发送完数据的情况

进入 write loop 之后首先需要判断当前 ChannelOutboundBuffer 中的数据是否已经写完了 in.isEmpty()) ,如果全部写完就需要清除当前 Channel 在 Reactor 上注册的 OP_WRITE 事件。

这里大家可能会有疑问,目前我们还没有注册 OP_WRITE 事件到 Reactor 上,为啥要清除呢?别着急,笔者会在后面为大家揭晓答案。

5.1.3 获取本次write loop 最大允许发送字节数

从 ChannelConfig 中获取本次 write loop 最大允许发送的字节数 maxBytesPerGatheringWrite 。初始值为 SO_SNDBUF大小 * 2 = 293976 = 146988 << 1,最小值为 2048。

private final class NioSocketChannelConfig extends DefaultSocketChannelConfig {

//293976 = 146988 << 1

//SO_SNDBUF设置的发送缓冲区大小 * 2 作为 最大写入字节数

//最小值为2048

private volatile int maxBytesPerGatheringWrite = Integer.MAX_VALUE;

private NioSocketChannelConfig(NioSocketChannel channel, Socket javaSocket) {

super(channel, javaSocket);

calculateMaxBytesPerGatheringWrite();

}

private void calculateMaxBytesPerGatheringWrite() {

// 293976 = 146988 << 1

// SO_SNDBUF设置的发送缓冲区大小 * 2 作为 最大写入字节数

int newSendBufferSize = getSendBufferSize() << 1;

if (newSendBufferSize > 0) {

setMaxBytesPerGatheringWrite(newSendBufferSize);

}

}

}

我们可以通过如下的方式自定义配置 Socket 发送缓冲区大小。

ServerBootstrap b = new ServerBootstrap();

b.group(bossGroup, workerGroup)

.......

.childOption(ChannelOption.SO_SNDBUF,自定义数值)

5.1.4 将待发送数据转换成 JDK NIO ByteBuffer

Since Netty will eventually call the SocketChannel of JDK NIO to send data, it is necessary to first convert the DirectByteBuffer (ByteBuffer implementation in Netty) stored in the write buffer queue ChannelOutboundBuffer in the current Channel to the ByteBuffer type of JDK NIO. Finally, the converted data to be sent is stored in the ByteBuffer[] nioBuffers array. Here, the conversion of the above ByteBuffer type is completed by calling ChannelOutboundBuffer#nioBuffers the method.

-

maxBytesPerGatheringWrite: Indicates that maxBytesPerGatheringWrite bytes are converted from ChannelOutboundBuffer at most in this write loop. That is, how many bytes can be sent by this write loop at most. -

1024: This write loop can convert up to 1024 ByteBuffers (JDK NIO implementation). That is to say, how many ByteBuffers can be sent in batches at most in this write loop.

By ChannelOutboundBuffer#nioBufferCount() obtaining the total number of ByteBuffers that need to be sent in this write loop nioBufferCnt. Note that this has become the ByteBuffer implemented by JDK NIO.

For the detailed ByteBuffer type conversion process, the author will give you a comprehensive and detailed explanation when explaining the Buffer design. Here we mainly focus on the main line of the sending data process.

After completing these preparations before sending, Netty then starts sending these converted JDK NIO ByteBuffers to the JDK NIO SocketChannel.

5.2 Send data to JDK NIO SocketChannel

flush process.png

@Override

protected void doWrite(ChannelOutboundBuffer in) throws Exception {

SocketChannel ch = javaChannel();

int writeSpinCount = config().getWriteSpinCount();

do {

.........将待发送数据转换到JDK NIO ByteBuffer中.........

//本次write loop中需要发送的 JDK ByteBuffer个数

int nioBufferCnt = in.nioBufferCount();

switch (nioBufferCnt) {

case 0:

//这里主要是针对 网络传输文件数据 的处理 FileRegion

writeSpinCount -= doWrite0(in);

break;

case 1: {

.........处理单个NioByteBuffer发送的情况......

break;

}

default: {

.........批量处理多个NioByteBuffers发送的情况......

break;

}

}

} while (writeSpinCount > 0);

............处理本轮write loop未写完的情况.......

}

这里大家可能对 nioBufferCnt == 0 的情况比较有疑惑,明明之前已经校验过ChannelOutboundBuffer 不为空了,为什么这里从 ChannelOutboundBuffer 中获取到的 nioBuffer 个数依然为 0 呢?

在前边我们介绍 Netty 对 write 事件的处理过程时提过, ChannelOutboundBuffer 中只支持 ByteBuf 类型和 FileRegion 类型,其中 ByteBuf 类型用于装载普通的发送数据,而 FileRegion 类型用于通过零拷贝的方式网络传输文件。

而这里 ChannelOutboundBuffer 虽然不为空,但是装载的 NioByteBuffer 个数却为 0 说明 ChannelOutboundBuffer 中装载的是 FileRegion 类型,当前正在进行网络文件的传输。

case 0 的分支主要就是用于处理网络文件传输的情况。

5.2.1 零拷贝发送网络文件

protected final int doWrite0(ChannelOutboundBuffer in) throws Exception {

Object msg = in.current();

if (msg == null) {

return 0;

}

return doWriteInternal(in, in.current());

}

这里需要特别注意的是用于文件传输的方法 doWriteInternal 中的返回值,理解这些返回值的具体情况有助于我们理解后面 write loop 的逻辑走向。

private int doWriteInternal(ChannelOutboundBuffer in, Object msg) throws Exception {

if (msg instanceof ByteBuf) {

..............忽略............

} else if (msg instanceof FileRegion) {

FileRegion region = (FileRegion) msg;

//文件已经传输完毕

if (region.transferred() >= region.count()) {

in.remove();

return 0;

}

//零拷贝的方式传输文件

long localFlushedAmount = doWriteFileRegion(region);

if (localFlushedAmount > 0) {

in.progress(localFlushedAmount);

if (region.transferred() >= region.count()) {

in.remove();

}

return 1;

}

} else {

// Should not reach here.

throw new Error();

}

//走到这里表示 此时Socket已经写不进去了 退出writeLoop,注册OP_WRITE事件

return WRITE_STATUS_SNDBUF_FULL;

}

最终会在 doWriteFileRegion 方法中通过 FileChannel#transferTo 方法底层用到的系统调用为 sendFile 实现零拷贝网络文件的传输。

public class NioSocketChannel extends AbstractNioByteChannel implements io.netty.channel.socket.SocketChannel {

@Override

protected long doWriteFileRegion(FileRegion region) throws Exception {

final long position = region.transferred();

return region.transferTo(javaChannel(), position);

}

}

关于 Netty 中涉及到的零拷贝,笔者会有一篇专门的文章为大家讲解,本文的主题我们还是先聚焦于把发送流程的主线打通。

我们继续回到发送数据流程主线上来~~

case 0:

//这里主要是针对 网络传输文件数据 的处理 FileRegion

writeSpinCount -= doWrite0(in);

break;

-

region.transferred() >= region.count():表示当前 FileRegion 中的文件数据已经传输完毕。那么在这种情况下本次 write loop 没有写入任何数据到 Socket ,所以返回 0 ,writeSpinCount - 0 意思就是本次 write loop 不算,继续循环。 -

localFlushedAmount > 0: Indicates that some data has been written into the Socket in this write loop, and 1 will be returned, and writeSpinCount - 1 will reduce the number of write loops once. -

localFlushedAmount <= 0: Indicates that the current Socket send buffer is full and data cannot be written, so it returnsWRITE_STATUS_SNDBUF_FULL = Integer.MAX_VALUE.writeSpinCount - Integer.MAX_VALUEMust be a negative number, exit the loop directly, register the OP_WRITE event with Reactor and exit the flush process. When the Socket send buffer is writable, Reactor will notify the channel to continue sending file data. Remember this, we will mention it later .

5.2.2 Send normal data

The remaining two cases, case 1 and default, mainly deal with the normal data sending logic loaded by ByteBuffer.

Among them, case 1 indicates that the ChannelOutboundBuffer of the current Channel only contains one NioByteBuffer.

default means that the ChannelOutboundBuffer of the current Channel contains multiple NioByteBuffers.

@Override

protected void doWrite(ChannelOutboundBuffer in) throws Exception {

SocketChannel ch = javaChannel();

int writeSpinCount = config().getWriteSpinCount();

do {

.........将待发送数据转换到JDK NIO ByteBuffer中.........

//本次write loop中需要发送的 JDK ByteBuffer个数

int nioBufferCnt = in.nioBufferCount();

switch (nioBufferCnt) {

case 0:

..........处理网络文件传输.........

case 1: {

ByteBuffer buffer = nioBuffers[0];

int attemptedBytes = buffer.remaining();

final int localWrittenBytes = ch.write(buffer);

if (localWrittenBytes <= 0) {

//如果当前Socket发送缓冲区满了写不进去了,则注册OP_WRITE事件,等待Socket发送缓冲区可写时 在写

// SubReactor在处理OP_WRITE事件时,直接调用flush方法

incompleteWrite(true);

return;

}

//根据当前实际写入情况调整 maxBytesPerGatheringWrite数值

adjustMaxBytesPerGatheringWrite(attemptedBytes, localWrittenBytes, maxBytesPerGatheringWrite);

//如果ChannelOutboundBuffer中的某个Entry被全部写入 则删除该Entry

// 如果Entry被写入了一部分 还有一部分未写入 则更新Entry中的readIndex 等待下次writeLoop继续写入

in.removeBytes(localWrittenBytes);

--writeSpinCount;

break;

}

default: {

// ChannelOutboundBuffer中总共待写入数据的字节数

long attemptedBytes = in.nioBufferSize();

//批量写入

final long localWrittenBytes = ch.write(nioBuffers, 0, nioBufferCnt);

if (localWrittenBytes <= 0) {

incompleteWrite(true);

return;

}

//根据实际写入情况调整一次写入数据大小的最大值

// maxBytesPerGatheringWrite决定每次可以从channelOutboundBuffer中获取多少发送数据

adjustMaxBytesPerGatheringWrite((int) attemptedBytes, (int) localWrittenBytes,

maxBytesPerGatheringWrite);

//移除全部写完的BUffer,如果只写了部分数据则更新buffer的readerIndex,下一个writeLoop写入

in.removeBytes(localWrittenBytes);

--writeSpinCount;

break;

}

}

} while (writeSpinCount > 0);

............处理本轮write loop未写完的情况.......

}

The case 1 and default branches have the same logic when processing the sent data, the only difference is that case 1 handles the sending of a single NioByteBuffer, while the default branch processes the sending of multiple NioByteBuffers in batches.

Next, the author will take the default branch that is often triggered as an example to describe the logical details of Netty when processing data transmission:

-

Firstly, get the total amount of bytes attemptedBytes to be sent by this write loop from the ChannelOutboundBuffer in the current NioSocketChannel.

ChannelOutboundBuffer#nioBuffersThis nioBufferSize is calculated when converting the JDK NIO ByteBuffer type in the method introduced earlier . -

Call the native SocketChannel of JDK NIO to batch send the data in nioBuffers. And get how many bytes localWrittenBytes have sent in batches in this write loop.

/**

* @throws NotYetConnectedException

* If this channel is not yet connected

*/

public abstract long write(ByteBuffer[] srcs, int offset, int length)

throws IOException;

-

localWrittenBytes <= 0Indicates that the write buffer area SEND_BUF of the current Socket is full and data cannot be written. Then you need to register the OP_WRITE event with the Reactor corresponding to the current NioSocketChannel, and stop the current flush process. When the write buffer of the Socket has capacity to write, epoll will notify the reactor thread to continue writing.

protected final void incompleteWrite(boolean setOpWrite) {

// Did not write completely.

if (setOpWrite) {

//这里处理还没写满16次 但是socket缓冲区已满写不进去的情况 注册write事件

//什么时候socket可写了, epoll会通知reactor线程继续写

setOpWrite();

} else {

...........目前还不需要关注这里.......

}

}

Register the OP_WRITE event with Reactor:

protected final void setOpWrite() {

final SelectionKey key = selectionKey();

if (!key.isValid()) {

return;

}

final int interestOps = key.interestOps();

if ((interestOps & SelectionKey.OP_WRITE) == 0) {

key.interestOps(interestOps | SelectionKey.OP_WRITE);

}

}

Regarding the usage of adding monitoring IO events to the IO event collection interestOps through bit operations, the author has introduced it many times in the previous article, so I won’t repeat it here.

-

According to the data written into the Socket write buffer by this write loop, adjust the maximum number of bytes written by the next write loop. maxBytesPerGatheringWrite determines how much sending data can be obtained from channelOutboundBuffer each write loop. The initial value

SO_SNDBUF大小 * 2 = 293976 = 146988 << 1, the minimum value is 2048.

public static final int MAX_BYTES_PER_GATHERING_WRITE_ATTEMPTED_LOW_THRESHOLD = 4096;

private void adjustMaxBytesPerGatheringWrite(int attempted, int written, int oldMaxBytesPerGatheringWrite) {

if (attempted == written) {

if (attempted << 1 > oldMaxBytesPerGatheringWrite) {

((NioSocketChannelConfig) config).setMaxBytesPerGatheringWrite(attempted << 1);

}

} else if (attempted > MAX_BYTES_PER_GATHERING_WRITE_ATTEMPTED_LOW_THRESHOLD && written < attempted >>> 1) {