Contents

- Create a new data table employees

- Load data into the table

- Reference elements in a collection type

- Create a new data table stocks

- Load data

- DML data manipulation

Create a new data table employees

create table employees(

name string,

salary float,

subordinates array<string>,

deductions map<string,float>,

address struct<street:string,city:string,state:string,zip:int>

)

partitioned by (country string,state string);

Load data into the table

load data local inpath '/opt/datafiles/employees.txt' overwrite into table employees partition (country='US',state='CA');

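Because employees is a partitioned table, the partition clause is required, and the user must specify a value for every partition key.

The data will be stored in this directory: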

/user/hive/warehouse/learnhive.db/employees/country=US/state=CA
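
To check that the partition was created, and to see how the partition keys can be used in a query, something like the following sketch may help. It assumes the employees table and the load statement shown above; the partition should be listed as country=US/state=CA.

```sql
-- List the partitions that currently exist for the table
show partitions employees;

-- Partition keys can be filtered like ordinary columns;
-- Hive then only needs to read the matching partition directory
select name, salary from employees where country = 'US' and state = 'CA';
```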

select name,salary from employees;

+-------------------+-----------+

| name | salary |

+-------------------+-----------+

| John Doe | 100000.0 |

| Mary Smith | 80000.0 |

| Todd Jones | 70000.0 |

| Bill King | 60000.0 |

| Boss Man | 200000.0 |

| Fred Finance | 150000.0 |

| Stacy Accountant | 60000.0 |

+-------------------+-----------+

When a selected column has a collection data type, Hive prints its value using JSON syntax. For example, subordinates is printed as an array. Note that string elements inside a collection are quoted, while values of a column of the primitive type string are not.

select name,subordinates from employees;

The deductions column is a MAP

select name,deductions from employees;

The address column is a struct

select name,address from employees;

Reference elements in a collection type

Reference an array element (array indexes start at 0, so subordinates[1] selects the second element)

select name,subordinates[1] from employees;

Referencing a non-existent element returns NULL. Also note that an extracted value of type string is no longer quoted.
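
A small sketch of how the NULL case can be made visible or avoided, assuming the employees table above. The aliases first_sub and n_subs are only illustrative names; size() is a built-in Hive function that returns the number of elements in an array or map.

```sql
-- Index 0 is the first element; size() returns the number of elements
select name, subordinates[0] as first_sub, size(subordinates) as n_subs from employees;

-- Keep only the rows that really have a second element
select name, subordinates[1] from employees where size(subordinates) > 1;
```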

Reference a map element

select name,deductions['Insurance'] from employees;

Reference a struct element

select name,address.city from employees;

Create a new data table stocks

create table stocks(

exchange_e string,

symbol string,

ymd string,

price_open float,

price_high float,

price_low float,

price_close float,

volume int,

price_adj_close float)

row format delimited fields terminated by ',';

Load data

load data local inpath '/opt/datafiles/stocks.csv' overwrite into table stocks;

Use regular expressions to specify columns

First run the following statement, so that Hive treats a backquoted name as a regular expression rather than as a literal column name:

set hive.support.quoted.identifiers=none;

select symbol,`price.*` from stocks limit 5;
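
The same mechanism can also be used to exclude columns. The sketch below uses the exclusion pattern described in the Hive language manual and assumes the stocks table above; the choice of ymd and volume as the columns to leave out is only an example.

```sql
set hive.support.quoted.identifiers=none;

-- Select every column except ymd and volume
select `(ymd|volume)?+.+` from stocks limit 5;
```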

Calculate using column values

select upper(name),salary,deductions["Federal Taxes"],round(salary*(1-deductions["Federal Taxes"])) from employees;

Arithmetic operators

+, addition

-, subtraction

*, multiplication

/, division

%, remainder (modulo)

&, bitwise AND

|, bitwise OR

^, bitwise XOR

~, bitwise NOT
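
As a quick illustration, the operators can be tried on literal values. This sketch assumes a Hive version that accepts a select without a from clause (0.13 or later); on older versions the same expressions can be selected from any existing table with limit 1.

```sql
-- Expected values, in column order: 9, 5, 14, 3.5, 1, 2, 7, 5, -7
-- (note that / returns a double, even for two integers)
select 7 + 2, 7 - 2, 7 * 2, 7 / 2, 7 % 2, 6 & 3, 6 | 3, 6 ^ 3, ~6;
```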

Limit statement

select name,salary from employees limit 2;

select name,salary from employees limit 1,2;

The query starts at offset 1 (that is, it skips the first row) and returns the next 2 rows.
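
Without an order by, the row order is not guaranteed, so an offset is usually combined with sorting. A minimal sketch, assuming the employees data shown earlier and a Hive version that supports the offset form of limit (2.0.0 or later, as far as I know): it should return the second and third highest salaries, Fred Finance and John Doe.

```sql
-- Sort by salary, skip the highest-paid row, then return the next two rows
select name, salary from employees order by salary desc limit 1,2;
```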

Column alias

select name as n,salary from employees;

DML data manipulation

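Data import

Load

load data [local] inpath 'path/to/data' [overwrite] into table student [partition (partcol1=val1,…)];

(1) load data: loads data into a table

(2) local: loads the data from the local file system into the Hive table; without it, the data is loaded from HDFS

(3) inpath: the path of the data to load

(4) overwrite: overwrites the data already in the table; without it, the data is appended

(5) into table: specifies which table to load into

(6) student: the name of the target table

(7) partition: loads the data into the specified partition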
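
The two most common variants can be sketched as follows. The student table is taken from the syntax line above; both file paths are hypothetical. Note that a load from HDFS (without local) moves the file into the table's directory instead of copying it.

```sql
-- Local file, appended to the existing data (no overwrite)
load data local inpath '/opt/datafiles/student.txt' into table student;

-- HDFS file (no local), replacing the existing data;
-- the source file is moved into the table's directory
load data inpath '/user/xwk/student.txt' overwrite into table student;
```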

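Insert

insert into table student_par values(1,'wangwu'),(2,'zhaoliu');

insert into: appends the rows to the table or partition; the existing data is not deleted

insert overwrite: overwrites the data that already exists in the table

insert overwrite table student_par select id, name from student;

(Insert based on the result of a query on a single table)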

Create tables based on query results

create table if not exists student3 as select id, name from student;

Specify the loading data path through Location when creating a table

create external table if not exists student5(

id int, name string

)

row format delimited fields terminated by '\t'

location '/student';

Data output

Insert export

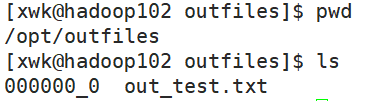

1) Export the query results to the local file system

insert overwrite local directory '/opt/outfiles' select * from employees;

2) Format the query results and export them to the local file system

insert overwrite local directory '/opt/outfiles'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

select * from employees;

3) Export the query results to HDFS (omit the local keyword)

insert overwrite directory '/opt/outfiles'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

select * from employees;

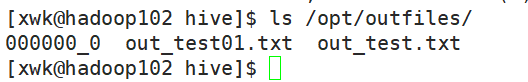

Export to the local file system with Hadoop commands

dfs -get /user/hive/warehouse/learnhive.db/test2/test.txt /opt/outfiles/out_test.txt;

Export with a Hive shell command

[xwk@hadoop102 outfiles]$ cd /opt/software/hive/

[xwk@hadoop102 hive]$ ./bin/hive -e 'select * from learnhive.test2;' > /opt/outfiles/out_test01.txt;

Export to HDFS

The export and import statements are mainly used to migrate Hive tables between two Hadoop clusters.

export table learnhive.test2 to '/opt/outfiles';

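Import data into the specified Hive table

Note: the data must first be exported with export before it can be imported.

Delete the existing data in the target table first; for a non-partitioned table, import expects the target to be empty or not to exist.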

import table test2 from '/opt/outfiles';
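
If the original table should stay untouched, the exported data can also be imported under a different table name. A minimal sketch: the table name test2_copy and the directory /opt/outfiles_export are hypothetical and chosen only for illustration.

```sql
-- Export the table (data and metadata) to an HDFS directory
export table learnhive.test2 to '/opt/outfiles_export';

-- Import it under a new name; Hive creates the table from the exported metadata
import table test2_copy from '/opt/outfiles_export';

select * from test2_copy;
```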

Clear the data in the table (Truncate)

Note: truncate only works on managed tables; it cannot delete the data of an external table.

truncate table test2;

select * from test2;

+-------------+----------------+-----------------+----------------+

| test2.name | test2.friends | test2.children | test2.address |

+-------------+----------------+-----------------+----------------+

+-------------+----------------+-----------------+----------------+
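
Since truncate is limited to managed tables, it can be useful to check a table's type first. The sketch below assumes the test2 and student5 tables from this article. Converting an external table to a managed one is only one possible workaround, and it means that truncating or dropping the table afterwards also deletes its files.

```sql
-- Look for the "Table Type" row: MANAGED_TABLE or EXTERNAL_TABLE
describe formatted test2;

-- Possible workaround for an external table: make it managed, then truncate.
-- Caution: this removes the data files under the table's location.
alter table student5 set tblproperties('EXTERNAL'='FALSE');
truncate table student5;
```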