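HashMap is one of the most commonly used data structures in Java, typically for storing key-value pairs. Plenty of articles analyzing the HashMap source already exist online, but as the old saying goes, "what you learn on paper always feels shallow; to truly understand something you must practice it yourself." So it is time to look at the source directly.

I. Overview

HashMap is a hash-table-based implementation of the Map interface. It provides all of the optional map operations and permits null values and the null key. (The HashMap class is roughly equivalent to Hashtable, except that it is unsynchronized and permits nulls.) The class makes no guarantees about the order of the map; in particular, it does not guarantee that the order will remain constant over time.

This implementation provides constant-time performance for the basic operations (get and put), assuming the hash function disperses the elements properly among the buckets. Iterating over the collection views takes time proportional to the "capacity" of the HashMap instance (the number of buckets) plus its size (the number of key-value mappings). So if iteration performance matters, do not set the initial capacity too high (or the load factor too low).

A HashMap instance has two parameters that affect its performance: initial capacity and load factor. The capacity is the number of buckets in the hash table, and the initial capacity is simply the capacity at the time the table is created. The load factor is a measure of how full the table is allowed to get before its capacity is automatically increased. When the number of entries exceeds the product of the load factor and the current capacity, the hash table is rehashed (that is, its internal data structures are rebuilt) so that it has approximately twice the number of buckets.

As a general rule, the default load factor (0.75) offers a good tradeoff between time and space costs. Higher values decrease the space overhead but increase the lookup cost (reflected in most operations of the HashMap class, including get and put). When setting the initial capacity, take into account the expected number of entries and the load factor so as to minimize the number of rehash operations. If the initial capacity is greater than the maximum number of entries divided by the load factor, no rehash operation will ever occur.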
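The sizing rule above can be sketched as follows. This is only an illustration: `capacityFor` and `CapacitySizing` are hypothetical names introduced here, not part of the HashMap API discussed in this article.

```java
import java.util.HashMap;
import java.util.Map;

final class CapacitySizing {
    // Hypothetical helper: the smallest initial capacity for which `expected`
    // entries do not exceed capacity * loadFactor.
    static int capacityFor(int expected, float loadFactor) {
        return (int) Math.ceil(expected / (double) loadFactor);
    }

    public static void main(String[] args) {
        int expected = 1000;
        int capacity = capacityFor(expected, 0.75f);
        // HashMap rounds the requested capacity up to a power of two internally.
        Map<Integer, String> map = new HashMap<>(capacity);
        for (int i = 0; i < expected; i++) {
            map.put(i, "v" + i);
        }
        System.out.println("requested capacity = " + capacity + ", size = " + map.size());
    }
}
```

With the default load factor, 12 expected entries give a requested capacity of 16, which matches the default table: 16 buckets with a threshold of 12.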

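If many mappings are to be stored in a HashMap instance, creating it with a sufficiently large capacity lets the mappings be stored more efficiently than having it rehash automatically as the table grows. Note that using many keys with the same hashCode() is a sure way to slow down any hash table. To lessen the impact, when keys are Comparable, this class may use the comparison order among keys to help break ties.

Note that this implementation is not synchronized. If multiple threads access a hash map concurrently, and at least one of them modifies the map structurally, it must be synchronized externally. (A structural modification is any operation that adds or deletes one or more mappings; merely changing the value associated with a key that the instance already contains is not a structural modification.) This is typically done by synchronizing on some object that naturally encapsulates the map.

If no such object exists, the map should be wrapped using the Collections.synchronizedMap method. This is best done at creation time, to prevent accidental unsynchronized access to the map: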

```java
Map m = Collections.synchronizedMap(new HashMap(...));
```
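As a small illustration of the wrapper, the sketch below has two threads insert disjoint keys into one wrapped map. The class and method names are made up for this example. Iterating over a view of the wrapped map still has to be guarded manually with `synchronized (m)`, as the `Collections.synchronizedMap` documentation requires.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

final class SynchronizedMapDemo {
    // Two threads each insert `perThread` distinct keys into the same wrapped map.
    static int concurrentPuts(int perThread) {
        Map<Integer, Integer> m = Collections.synchronizedMap(new HashMap<>());
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < perThread; i++) m.put(i, i);
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < perThread; i++) m.put(perThread + i, i);
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        // Individual calls are synchronized by the wrapper, but iteration
        // must be guarded by the caller.
        int count = 0;
        synchronized (m) {
            for (Integer key : m.keySet()) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println(concurrentPuts(1000));
    }
}
```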

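The iterators returned by all of this class's "collection view methods" are fail-fast: if the map is structurally modified at any time after the iterator is created, in any way except through the iterator's own remove method, the iterator throws a ConcurrentModificationException. Thus, in the face of concurrent modification, the iterator fails quickly and cleanly, rather than risking arbitrary, non-deterministic behavior at an undetermined time in the future.

Note that the fail-fast behavior of an iterator cannot be guaranteed, because, generally speaking, it is impossible to make any hard guarantees in the presence of unsynchronized concurrent modification. Fail-fast iterators throw ConcurrentModificationException on a best-effort basis. Therefore, it would be wrong to write a program that depends on this exception for its correctness: the fail-fast behavior of iterators should be used only to detect bugs.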
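The difference between modifying the map directly and going through the iterator can be sketched in a single thread. The class below is a made-up example; in line with the best-effort caveat above, it only demonstrates the typical behavior and is not something to rely on in program logic.

```java
import java.util.ConcurrentModificationException;
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;

final class FailFastDemo {
    static Map<String, Integer> sample() {
        Map<String, Integer> map = new HashMap<>();
        map.put("a", 1);
        map.put("b", 2);
        map.put("c", 3);
        return map;
    }

    // Structurally modifies the map directly while iterating over its key set.
    // Returns true if the iterator detected the modification.
    static boolean removeThroughMap() {
        Map<String, Integer> map = sample();
        try {
            for (String key : map.keySet()) {
                map.remove(key); // bypasses the iterator
            }
            return false;
        } catch (ConcurrentModificationException expected) {
            return true;
        }
    }

    // Removes odd-valued entries through the iterator's own remove method,
    // which is the permitted way to modify the map during iteration.
    static int removeThroughIterator() {
        Map<String, Integer> map = sample();
        Iterator<Map.Entry<String, Integer>> it = map.entrySet().iterator();
        while (it.hasNext()) {
            if (it.next().getValue() % 2 == 1) {
                it.remove();
            }
        }
        return map.size();
    }

    public static void main(String[] args) {
        System.out.println(removeThroughMap());
        System.out.println(removeThroughIterator());
    }
}
```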

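This class is a member of the Java Collections Framework.

II. Inheritance structure

```java
public class HashMap<K,V> extends AbstractMap<K,V>
    implements Map<K,V>, Cloneable, Serializable
```

HashMap extends the AbstractMap class. AbstractMap is an abstract class that implements the Map interface; it provides a skeletal implementation of Map to minimize the effort required to implement that interface.

```java
public abstract class AbstractMap<K,V> implements Map<K,V>
```

Map is the interface for objects that map keys to values. A map cannot contain duplicate keys; each key maps to at most one value. This interface takes the place of the Dictionary class, which was a totally abstract class rather than an interface.

```java
public interface Map<K,V>
```

HashMap also implements the Cloneable and Serializable interfaces, which enable cloning and serialization respectively.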

三、HashMap性能比较

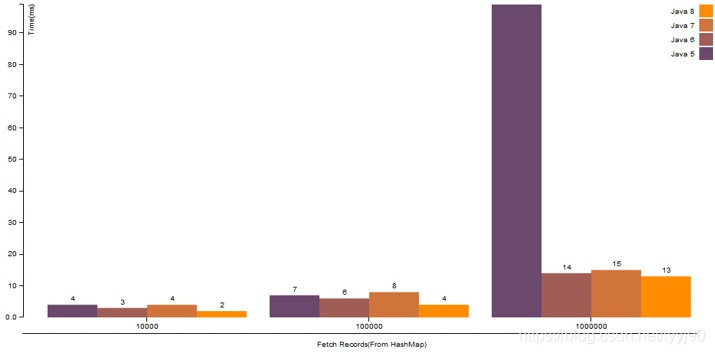

基于创建不同大小的HashMap并按键执行put和get操作的简单实验,记录了以下结果。

3.1 使用正确的hashCode()逻辑进行HashMap.get()操作

| 记录数量 | Java 5 | Java 6 | Java 7 | Java 8 |

|---|---|---|---|---|

| 10,000 | 4 ms | 3 ms | 4 ms | 2 ms |

| 100,000 | 7 ms | 6 ms | 8 ms | 4 ms |

| 1,000,000 | 99 ms | 15 ms | 14 ms | 13 ms |

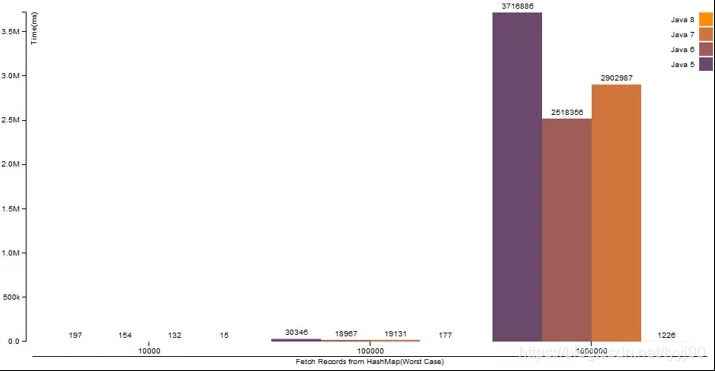

3.2 HashMap.get() with a broken hashCode() implementation (same hash code for all keys)

| Number of records | Java 5 | Java 6 | Java 7 | Java 8 |
|---|---|---|---|---|
| 10,000 | 197 ms | 154 ms | 132 ms | 15 ms |
| 100,000 | 30346 ms | 18967 ms | 19131 ms | 177 ms |
| 1,000,000 | 3716886 ms | 2518356 ms | 2902987 ms | 1226 ms |
| 10,000,000 | OOM | OOM | OOM | 5775 ms |

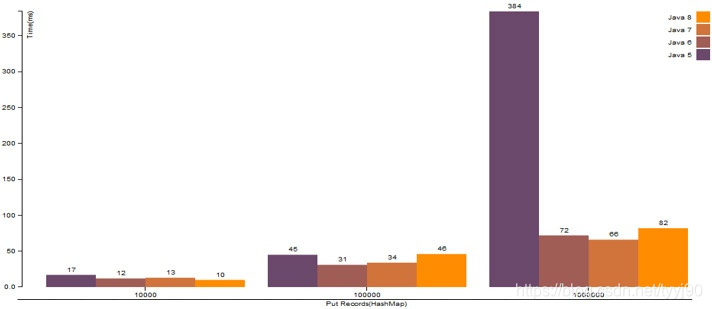

3.3 HashMap.put() with a proper hashCode() implementation

| Number of records | Java 5 | Java 6 | Java 7 | Java 8 |
|---|---|---|---|---|
| 10,000 | 17 ms | 12 ms | 13 ms | 10 ms |
| 100,000 | 45 ms | 31 ms | 34 ms | 46 ms |
| 1,000,000 | 384 ms | 72 ms | 66 ms | 82 ms |
| 10,000,000 | 4731 ms | 944 ms | 1024 ms | 99 ms |

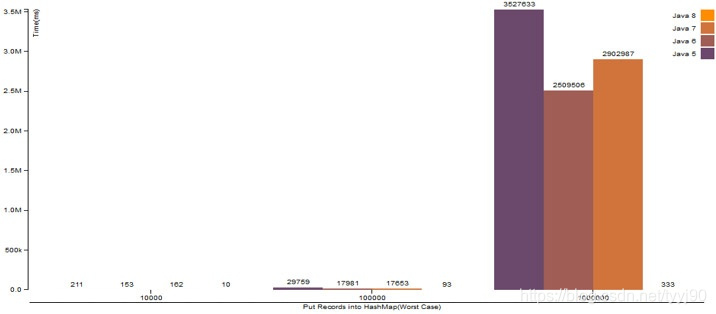

3.4 HashMap.put() with a broken hashCode() implementation (same hash code for all keys)

| Number of records | Java 5 | Java 6 | Java 7 | Java 8 |
|---|---|---|---|---|
| 10,000 | 211 ms | 153 ms | 162 ms | 10 ms |
| 100,000 | 29759 ms | 17981 ms | 17653 ms | 93 ms |
| 1,000,000 | 3527633 ms | 2509506 ms | 2902987 ms | 333 ms |
| 10,000,000 | OOM | OOM | OOM | 3970 ms |

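IV. Detailed design

4.1 Overview

HashMap resolves collisions by separate chaining, and JDK 1.8 introduced red-black trees to optimize chains that grow too long.

Hash collisions hurt HashMap lookup time. When several keys end up in the same bucket, their entries are placed in a linked list, and a lookup has to walk that list to find the entry. In the worst case, when every key maps to the same bucket, lookup time degrades from O(1) to O(n).

For the high-collision case, Java 8 made the following changes to HashMap:

- The alternative String hash function added in Java 7 was removed;

- Buckets containing a large number of colliding keys store their entries in a balanced tree instead of a linked list once a certain threshold is reached.

These changes ensure O(log n) performance in the worst case, and O(1) when hashCode() is implemented properly.

The map usually acts as a binned (bucketed) hash table, but when bins get too large they are transformed into bins of TreeNodes, each structured similarly to those in java.util.TreeMap. Most methods try to use normal bins but relay to TreeNode methods when applicable (simply by checking instanceof on a node). Bins of TreeNodes can be traversed and used like any others, but additionally support faster lookup when overpopulated. However, since the vast majority of bins in normal use are not overpopulated, checking for the existence of tree bins may be delayed in the course of table methods.

In Java 8, when the number of elements in a bucket reaches a certain threshold, HashMap replaces the linked list with a red-black tree. During the conversion, the hash code is used as the branching variable. If two hash codes in the same bucket differ, the larger one goes to the right of the tree and the smaller one to the left. When two hash codes are equal, HashMap checks whether the keys are Comparable and, if so, compares the keys to decide the direction, so that some order can be maintained; otherwise it falls back to a tie-breaking rule. Making HashMap keys Comparable is therefore good practice.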
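To make the "broken hashCode()" scenario concrete, here is a minimal sketch with a hypothetical key type (`BadKey` is invented for this example) whose instances all share one hash code. Because it also implements Comparable, a treeified bucket can order the keys by compareTo.

```java
import java.util.HashMap;
import java.util.Map;

final class CollidingKeys {
    // Hypothetical key: every instance reports the same hash code, so all
    // keys land in a single bucket.
    static final class BadKey implements Comparable<BadKey> {
        final int id;

        BadKey(int id) {
            this.id = id;
        }

        @Override
        public int hashCode() {
            return 42; // deliberately broken
        }

        @Override
        public boolean equals(Object o) {
            return o instanceof BadKey && ((BadKey) o).id == id;
        }

        @Override
        public int compareTo(BadKey other) {
            return Integer.compare(id, other.id);
        }
    }

    static Map<BadKey, Integer> build(int n) {
        Map<BadKey, Integer> map = new HashMap<>();
        for (int i = 0; i < n; i++) {
            map.put(new BadKey(i), i);
        }
        return map;
    }

    public static void main(String[] args) {
        Map<BadKey, Integer> map = build(10_000);
        System.out.println(map.get(new BadKey(9_999)));
    }
}
```

Lookups remain correct either way; the point of Comparable keys is only that the tree search can pick a direction instead of having to search both subtrees.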

4.2 Important constants

The snippet below lists them; the comments explain each one.

```java
/**
 * The default initial capacity - MUST be a power of two.
 */
static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

/**
 * The maximum capacity, used if a higher value is implicitly specified
 * by either of the constructors with arguments.
 * MUST be a power of two <= 1<<30.
 */
static final int MAXIMUM_CAPACITY = 1 << 30;

/**
 * The load factor used when none specified in constructor.
 */
static final float DEFAULT_LOAD_FACTOR = 0.75f;

/**
 * The bin count threshold for using a tree rather than list for a
 * bin.  Bins are converted to trees when adding an element to a
 * bin with at least this many nodes. The value must be greater
 * than 2 and should be at least 8 to mesh with assumptions in
 * tree removal about conversion back to plain bins upon
 * shrinkage.
 */
static final int TREEIFY_THRESHOLD = 8;

/**
 * The bin count threshold for untreeifying a (split) bin during a
 * resize operation. Should be less than TREEIFY_THRESHOLD, and at
 * most 6 to mesh with shrinkage detection under removal.
 */
static final int UNTREEIFY_THRESHOLD = 6;

/**
 * The smallest table capacity for which bins may be treeified.
 * (Otherwise the table is resized if too many nodes in a bin.)
 * Should be at least 4 * TREEIFY_THRESHOLD to avoid conflicts
 * between resizing and treeification thresholds.
 */
static final int MIN_TREEIFY_CAPACITY = 64;
```

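| Constant | Value | Description |
|---|---|---|
| DEFAULT_INITIAL_CAPACITY | 1 << 4 (i.e. 16) | Default initial capacity; must be a power of two |
| MAXIMUM_CAPACITY | 1 << 30 | Maximum capacity, used if a higher value is implicitly specified by either of the constructors with arguments. Must be a power of two <= (1 << 30) |
| DEFAULT_LOAD_FACTOR | 0.75f | Load factor used when none is specified in the constructor |
| TREEIFY_THRESHOLD | 8 | Bin count threshold at which a linked-list bin is converted to a tree |
| UNTREEIFY_THRESHOLD | 6 | Bin count threshold at which a (split) tree bin is converted back to a list during a resize |
| MIN_TREEIFY_CAPACITY | 64 | Smallest table capacity for which bins may be treeified; below it, the table is resized instead |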

4.3 Fields

The following are the most important fields of HashMap.

```java
/* ---------------- Fields -------------- */

/**
 * The table, initialized on first use, and resized as
 * necessary. When allocated, length is always a power of two.
 * (We also tolerate length zero in some operations to allow
 * bootstrapping mechanics that are currently not needed.)
 */
transient Node<K,V>[] table;

/**
 * Holds cached entrySet(). Note that AbstractMap fields are used
 * for keySet() and values().
 */
transient Set<Map.Entry<K,V>> entrySet;

/**
 * The number of key-value mappings contained in this map.
 */
transient int size;

/**
 * The number of times this HashMap has been structurally modified
 * Structural modifications are those that change the number of mappings in
 * the HashMap or otherwise modify its internal structure (e.g.,
 * rehash).  This field is used to make iterators on Collection-views of
 * the HashMap fail-fast.  (See ConcurrentModificationException).
 */
transient int modCount;

/**
 * The next size value at which to resize (capacity * load factor).
 *
 * @serial
 */
// (The javadoc description is true upon serialization.
// Additionally, if the table array has not been allocated, this
// field holds the initial array capacity, or zero signifying
// DEFAULT_INITIAL_CAPACITY.)
int threshold;

/**
 * The load factor for the hash table.
 *
 * @serial
 */
final float loadFactor;
```

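First, a word on the transient keyword: in Java, a member field marked transient is not serialized. HashMap implements the Serializable interface, which means it can be serialized; any field that does not need to be written out by default serialization can be marked transient.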
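The effect of transient can be checked with a small round trip through Java serialization. The sketch below uses a made-up `Session` class, not HashMap itself: the transient field comes back as null after deserialization, while the regular field survives.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

final class TransientDemo {
    // Hypothetical class for illustration only.
    static final class Session implements Serializable {
        private static final long serialVersionUID = 1L;
        String user = "alice";
        transient String token = "secret"; // skipped by default serialization
    }

    // Serializes the object to a byte array and reads it back.
    static Session roundTrip(Session s) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(s);
            }
            try (ObjectInputStream in = new ObjectInputStream(
                    new ByteArrayInputStream(bytes.toByteArray()))) {
                return (Session) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Session copy = roundTrip(new Session());
        System.out.println(copy.user + " / " + copy.token);
    }
}
```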

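| Field | Description |
|---|---|
| table | The node table, initialized on first use and resized as necessary. When allocated, its length is always a power of two. |
| entrySet | Holds the cached entrySet() |
| size | The number of key-value mappings in the HashMap |
| modCount | The number of times this HashMap has been structurally modified. Structural modifications are those that change the number of mappings or otherwise modify the internal structure (e.g. rehash). This field is used to make iterators on the collection views of the HashMap fail-fast. |
| threshold | The next size value at which to resize (capacity * load factor) |
| loadFactor | The load factor of the hash table |

4.4 Key inner classes

The HashMap source contains several inner classes, such as Node<K,V> and TreeNode<K,V>.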

4.4.1 Node<K,V>

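The basic hash-table node, used for most entries. Its constructor takes the hash value, key, value, and next node as parameters; next points to the following node in the bucket's chain. Its hashCode() is the XOR of the key's and the value's hash codes. Note that all of its methods are declared final, so subclasses cannot override them.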

```java
static class Node<K,V> implements Map.Entry<K,V> {
    final int hash;
    final K key;
    V value;
    Node<K,V> next;

    Node(int hash, K key, V value, Node<K,V> next) {
        this.hash = hash;
        this.key = key;
        this.value = value;
        this.next = next;
    }

    public final K getKey()        { return key; }
    public final V getValue()      { return value; }
    public final String toString() { return key + "=" + value; }

    public final int hashCode() {
        return Objects.hashCode(key) ^ Objects.hashCode(value);
    }

    public final V setValue(V newValue) {
        V oldValue = value;
        value = newValue;
        return oldValue;
    }

    public final boolean equals(Object o) {
        if (o == this)
            return true;
        if (o instanceof Map.Entry) {
            Map.Entry<?,?> e = (Map.Entry<?,?>)o;
            if (Objects.equals(key, e.getKey()) &&
                Objects.equals(value, e.getValue()))
                return true;
        }
        return false;
    }
}
```
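Node itself is not accessible from outside java.util, but its hashCode() formula can be observed through the public entrySet() view. The following sketch, with helper names invented for this example, compares an entry's reported hash with the XOR formula shown above.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

final class EntryHashDemo {
    // Mirrors the formula used by Node.hashCode(): key hash XOR value hash.
    static int expectedEntryHash(Object key, Object value) {
        return Objects.hashCode(key) ^ Objects.hashCode(value);
    }

    // Returns the hashCode reported by the single entry of a one-element map.
    static int actualEntryHash(String key, Integer value) {
        Map<String, Integer> map = new HashMap<>();
        map.put(key, value);
        return map.entrySet().iterator().next().hashCode();
    }

    public static void main(String[] args) {
        System.out.println(actualEntryHash("k", 7) == expectedEntryHash("k", 7));
    }
}
```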

4.4.2 TreeNode<K,V>

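The entry type for tree bins. It extends LinkedHashMap.Entry<K,V>, which in turn extends HashMap.Node<K,V>, so it can be used as an extension of either a regular node or a linked node. The balanced tree used in HashMap is a red-black tree.

Fields of the class: parent (parent node), left (left child), right (right child), prev (previous node), red (node color).

The root() method is easy to follow: it returns the root of the red-black tree by walking up the parent links until parent is null, at which point the root has been found.

The moveRootToFront method ensures that the given root is the first node of its bin, that is, the head of the chain stored in the table. The table slot at index is replaced with root, root's prev and next nodes are linked to each other, and the former first node now follows root. Finally root's prev is set to null, since the head node has no predecessor.

The find method searches for a node with the given hash and key, starting from p. The kc argument caches the result of comparableClassFor(key) the first time keys are compared.

(1) If the given hash is smaller than the current node's hash, continue in the left subtree;

(2) If the given hash is larger than the current node's hash, continue in the right subtree;

(3) Otherwise the two hashes are equal, so the keys are compared next; if they are equal, the current node is the result and is returned;

(4) If the left child is null, move to the right child; if the right child is null, move to the left child;

(5) If none of the above applies and the key is Comparable, the comparison result decides the direction;

(6) If the comparison cannot decide either, search the right subtree recursively and return the node if one is found;

(7) Otherwise, continue with the left child.

comparableClassFor(Object x) checks whether x is comparable: if the class C of x has the form "class C implements Comparable<C>", it returns C; otherwise it returns null.

compareComparables(Class<?> kc, Object k, Object x) returns the result of k.compareTo(x) if the class of x is kc; if x is null or its class is not kc, it returns 0.

getTreeNode simply calls find() to look up a node. It first checks whether parent is null: if so, the current node is the root; if not, it calls root() to get the root and starts the search from there.

tieBreakOrder decides an order between two objects; it is used to order insertions when the hashes are equal and the keys are not comparable. A total order is not required, only a consistent insertion rule that maintains equivalence across rebalancings. The method first compares the class names as strings; if that cannot decide a "winner" (or either argument is null), it compares the objects' System.identityHashCode values. The name comes from tennis: a tie-break is the special game played to decide a set when the score reaches 6-6, won by the first player to reach at least seven points with a lead of at least two. In other words, the decider.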
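The tie-breaking rule is package-private in the JDK, so the sketch below copies its logic into a standalone class (`TieBreakDemo` is a name made up here) purely to show how it behaves for a few inputs.

```java
final class TieBreakDemo {
    // Standalone copy of the rule described above: compare class names first,
    // then fall back to identity hash codes. The result is never zero.
    static int tieBreakOrder(Object a, Object b) {
        int d;
        if (a == null || b == null ||
            (d = a.getClass().getName().compareTo(b.getClass().getName())) == 0) {
            d = (System.identityHashCode(a) <= System.identityHashCode(b)) ? -1 : 1;
        }
        return d;
    }

    public static void main(String[] args) {
        // Different classes: decided by class name ("java.lang.String" vs "java.lang.Integer").
        System.out.println(tieBreakOrder("a", 1) > 0);
        // Same class: decided by identity hash code, so the sign varies between runs.
        System.out.println(tieBreakOrder(new Object(), new Object()) != 0);
    }
}
```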

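treeify converts a linked list into a tree. It walks the whole list: the first node becomes the root, and each following node x is inserted at the appropriate position, with the tree rebalanced after every insertion. At the end the root is moved to the front of the bin.

untreeify is the inverse of treeify: it turns a treeified bin back into a linked list by walking the tree nodes and replacing each one with a plain list node.

putTreeVal first checks whether the key to insert already exists in the tree. If a node with the given hash and key is found, that node is returned; otherwise a new tree node is created and inserted into the tree, and null is returned.

removeTreeNode, as the name suggests, removes a node from the tree. Its comment notes that this is messier than typical red-black deletion code.

(1) First the current node is unlinked from the next/prev chain; if the head node is null at this point, the bin is now empty and the method returns;

(2) Next it decides whether to convert back to a plain bin, which happens if any of four conditions holds: root is null, root's right child is null, root's left child is null, or the left child of root's left child is null. Because the structure is a red-black tree, any of these conditions implies that very few nodes are left (per the comment, the test triggers somewhere between 2 and 6 nodes), so the bin is untreeified;

(3) Otherwise the regular red-black deletion cases follow; they can be studied alongside standard red-black tree deletion code and are skipped here.

split, according to its comment, splits the nodes of a tree bin into a lower and an upper tree bin, and converts a resulting bin back into a linked list if it has become too small. It is called only from resize.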

```java
static final class TreeNode<K,V> extends LinkedHashMap.Entry<K,V> {
    TreeNode<K,V> parent;  // red-black tree links
    TreeNode<K,V> left;
    TreeNode<K,V> right;
    TreeNode<K,V> prev;    // needed to unlink next upon deletion
    boolean red;
    TreeNode(int hash, K key, V val, Node<K,V> next) {
        super(hash, key, val, next);
    }

    /**
     * Returns root of tree containing this node.
     */
    final TreeNode<K,V> root() {
        for (TreeNode<K,V> r = this, p;;) {
            if ((p = r.parent) == null)
                return r;
            r = p;
        }
    }

    /**
     * Ensures that the given root is the first node of its bin.
     */
    static <K,V> void moveRootToFront(Node<K,V>[] tab, TreeNode<K,V> root) {
        int n;
        if (root != null && tab != null && (n = tab.length) > 0) {
            int index = (n - 1) & root.hash;
            TreeNode<K,V> first = (TreeNode<K,V>)tab[index];
            if (root != first) {
                Node<K,V> rn;
                tab[index] = root;
                TreeNode<K,V> rp = root.prev;
                if ((rn = root.next) != null)
                    ((TreeNode<K,V>)rn).prev = rp;
                if (rp != null)
                    rp.next = rn;
                if (first != null)
                    first.prev = root;
                root.next = first;
                root.prev = null;
            }
            assert checkInvariants(root);
        }
    }
```

```java
    /**
     * Finds the node starting at root p with the given hash and key.
     * The kc argument caches comparableClassFor(key) upon first use
     * comparing keys.
     */
    final TreeNode<K,V> find(int h, Object k, Class<?> kc) {
        TreeNode<K,V> p = this;
        do {
            int ph, dir; K pk;
            TreeNode<K,V> pl = p.left, pr = p.right, q;
            if ((ph = p.hash) > h)
                p = pl;
            else if (ph < h)
                p = pr;
            else if ((pk = p.key) == k || (k != null && k.equals(pk)))
                return p;
            else if (pl == null)
                p = pr;
            else if (pr == null)
                p = pl;
            else if ((kc != null ||
                      (kc = comparableClassFor(k)) != null) &&
                     (dir = compareComparables(kc, k, pk)) != 0)
                p = (dir < 0) ? pl : pr;
            else if ((q = pr.find(h, k, kc)) != null)
                return q;
            else
                p = pl;
        } while (p != null);
        return null;
    }

    /**
     * Calls find for root node.
     */
    final TreeNode<K,V> getTreeNode(int h, Object k) {
        return ((parent != null) ? root() : this).find(h, k, null);
    }

    /**
     * Tie-breaking utility for ordering insertions when equal
     * hashCodes and non-comparable. We don't require a total
     * order, just a consistent insertion rule to maintain
     * equivalence across rebalancings. Tie-breaking further than
     * necessary simplifies testing a bit.
     */
    static int tieBreakOrder(Object a, Object b) {
        int d;
        if (a == null || b == null ||
            (d = a.getClass().getName().
             compareTo(b.getClass().getName())) == 0)
            d = (System.identityHashCode(a) <= System.identityHashCode(b) ?
                 -1 : 1);
        return d;
    }
```

```java
    /**
     * Forms tree of the nodes linked from this node.
     * @return root of tree
     */
    final void treeify(Node<K,V>[] tab) {
        TreeNode<K,V> root = null;
        for (TreeNode<K,V> x = this, next; x != null; x = next) {
            next = (TreeNode<K,V>)x.next;
            x.left = x.right = null;
            if (root == null) {
                x.parent = null;
                x.red = false;
                root = x;
            }
            else {
                K k = x.key;
                int h = x.hash;
                Class<?> kc = null;
                for (TreeNode<K,V> p = root;;) {
                    int dir, ph;
                    K pk = p.key;
                    if ((ph = p.hash) > h)
                        dir = -1;
                    else if (ph < h)
                        dir = 1;
                    else if ((kc == null &&
                              (kc = comparableClassFor(k)) == null) ||
                             (dir = compareComparables(kc, k, pk)) == 0)
                        dir = tieBreakOrder(k, pk);

                    TreeNode<K,V> xp = p;
                    if ((p = (dir <= 0) ? p.left : p.right) == null) {
                        x.parent = xp;
                        if (dir <= 0)
                            xp.left = x;
                        else
                            xp.right = x;
                        root = balanceInsertion(root, x);
                        break;
                    }
                }
            }
        }
        moveRootToFront(tab, root);
    }

    /**
     * Returns a list of non-TreeNodes replacing those linked from
     * this node.
     */
    final Node<K,V> untreeify(HashMap<K,V> map) {
        Node<K,V> hd = null, tl = null;
        for (Node<K,V> q = this; q != null; q = q.next) {
            Node<K,V> p = map.replacementNode(q, null);
            if (tl == null)
                hd = p;
            else
                tl.next = p;
            tl = p;
        }
        return hd;
    }
```

```java
    /**
     * Tree version of putVal.
     */
    final TreeNode<K,V> putTreeVal(HashMap<K,V> map, Node<K,V>[] tab,
                                   int h, K k, V v) {
        Class<?> kc = null;
        boolean searched = false;
        TreeNode<K,V> root = (parent != null) ? root() : this;
        for (TreeNode<K,V> p = root;;) {
            int dir, ph; K pk;
            if ((ph = p.hash) > h)
                dir = -1;
            else if (ph < h)
                dir = 1;
            else if ((pk = p.key) == k || (k != null && k.equals(pk)))
                return p;
            else if ((kc == null &&
                      (kc = comparableClassFor(k)) == null) ||
                     (dir = compareComparables(kc, k, pk)) == 0) {
                if (!searched) {
                    TreeNode<K,V> q, ch;
                    searched = true;
                    if (((ch = p.left) != null &&
                         (q = ch.find(h, k, kc)) != null) ||
                        ((ch = p.right) != null &&
                         (q = ch.find(h, k, kc)) != null))
                        return q;
                }
                dir = tieBreakOrder(k, pk);
            }

            TreeNode<K,V> xp = p;
            if ((p = (dir <= 0) ? p.left : p.right) == null) {
                Node<K,V> xpn = xp.next;
                TreeNode<K,V> x = map.newTreeNode(h, k, v, xpn);
                if (dir <= 0)
                    xp.left = x;
                else
                    xp.right = x;
                xp.next = x;
                x.parent = x.prev = xp;
                if (xpn != null)
                    ((TreeNode<K,V>)xpn).prev = x;
                moveRootToFront(tab, balanceInsertion(root, x));
                return null;
            }
        }
    }
```

```java
    /**
     * Removes the given node, that must be present before this call.
     * This is messier than typical red-black deletion code because we
     * cannot swap the contents of an interior node with a leaf
     * successor that is pinned by "next" pointers that are accessible
     * independently during traversal. So instead we swap the tree
     * linkages. If the current tree appears to have too few nodes,
     * the bin is converted back to a plain bin. (The test triggers
     * somewhere between 2 and 6 nodes, depending on tree structure).
     */
    final void removeTreeNode(HashMap<K,V> map, Node<K,V>[] tab,
                              boolean movable) {
        int n;
        if (tab == null || (n = tab.length) == 0)
            return;
        int index = (n - 1) & hash;
        TreeNode<K,V> first = (TreeNode<K,V>)tab[index], root = first, rl;
        TreeNode<K,V> succ = (TreeNode<K,V>)next, pred = prev;
        if (pred == null)
            tab[index] = first = succ;
        else
            pred.next = succ;
        if (succ != null)
            succ.prev = pred;
        if (first == null)
            return;
        if (root.parent != null)
            root = root.root();
        if (root == null || root.right == null ||
            (rl = root.left) == null || rl.left == null) {
            tab[index] = first.untreeify(map);  // too small
            return;
        }
        TreeNode<K,V> p = this, pl = left, pr = right, replacement;
        if (pl != null && pr != null) {
            TreeNode<K,V> s = pr, sl;
            while ((sl = s.left) != null) // find successor
                s = sl;
            boolean c = s.red; s.red = p.red; p.red = c; // swap colors
            TreeNode<K,V> sr = s.right;
            TreeNode<K,V> pp = p.parent;
            if (s == pr) { // p was s's direct parent
                p.parent = s;
                s.right = p;
            }
            else {
                TreeNode<K,V> sp = s.parent;
                if ((p.parent = sp) != null) {
                    if (s == sp.left)
                        sp.left = p;
                    else
                        sp.right = p;
                }
                if ((s.right = pr) != null)
                    pr.parent = s;
            }
            p.left = null;
            if ((p.right = sr) != null)
                sr.parent = p;
            if ((s.left = pl) != null)
                pl.parent = s;
            if ((s.parent = pp) == null)
                root = s;
            else if (p == pp.left)
                pp.left = s;
            else
                pp.right = s;
            if (sr != null)
                replacement = sr;
            else
                replacement = p;
        }
        else if (pl != null)
            replacement = pl;
        else if (pr != null)
            replacement = pr;
        else
            replacement = p;
        if (replacement != p) {
            TreeNode<K,V> pp = replacement.parent = p.parent;
            if (pp == null)
                root = replacement;
            else if (p == pp.left)
                pp.left = replacement;
            else
                pp.right = replacement;
            p.left = p.right = p.parent = null;
        }

        TreeNode<K,V> r = p.red ? root : balanceDeletion(root, replacement);

        if (replacement == p) {  // detach
            TreeNode<K,V> pp = p.parent;
            p.parent = null;
            if (pp != null) {
                if (p == pp.left)
                    pp.left = null;
                else if (p == pp.right)
                    pp.right = null;
            }
        }
        if (movable)
            moveRootToFront(tab, r);
    }
```

```java
    /**
     * Splits nodes in a tree bin into lower and upper tree bins,
     * or untreeifies if now too small. Called only from resize;
     * see above discussion about split bits and indices.
     *
     * @param map the map
     * @param tab the table for recording bin heads
     * @param index the index of the table being split
     * @param bit the bit of hash to split on
     */
    final void split(HashMap<K,V> map, Node<K,V>[] tab, int index, int bit) {
        TreeNode<K,V> b = this;
        // Relink into lo and hi lists, preserving order
        TreeNode<K,V> loHead = null, loTail = null;
        TreeNode<K,V> hiHead = null, hiTail = null;
        int lc = 0, hc = 0;
        for (TreeNode<K,V> e = b, next; e != null; e = next) {
            next = (TreeNode<K,V>)e.next;
            e.next = null;
            if ((e.hash & bit) == 0) {
                if ((e.prev = loTail) == null)
                    loHead = e;
                else
                    loTail.next = e;
                loTail = e;
                ++lc;
            }
            else {
                if ((e.prev = hiTail) == null)
                    hiHead = e;
                else
                    hiTail.next = e;
                hiTail = e;
                ++hc;
            }
        }

        if (loHead != null) {
            if (lc <= UNTREEIFY_THRESHOLD)
                tab[index] = loHead.untreeify(map);
            else {
                tab[index] = loHead;
                if (hiHead != null) // (else is already treeified)
                    loHead.treeify(tab);
            }
        }
        if (hiHead != null) {
            if (hc <= UNTREEIFY_THRESHOLD)
                tab[index + bit] = hiHead.untreeify(map);
            else {
                tab[index + bit] = hiHead;
                if (loHead != null)
                    hiHead.treeify(tab);
            }
        }
    }
```

```java
    /* ------------------------------------------------------------ */
    // Red-black tree methods, all adapted from CLR

    static <K,V> TreeNode<K,V> rotateLeft(TreeNode<K,V> root,
                                          TreeNode<K,V> p) {
        TreeNode<K,V> r, pp, rl;
        if (p != null && (r = p.right) != null) {
            if ((rl = p.right = r.left) != null)
                rl.parent = p;
            if ((pp = r.parent = p.parent) == null)
                (root = r).red = false;
            else if (pp.left == p)
                pp.left = r;
            else
                pp.right = r;
            r.left = p;
            p.parent = r;
        }
        return root;
    }

    static <K,V> TreeNode<K,V> rotateRight(TreeNode<K,V> root,
                                           TreeNode<K,V> p) {
        TreeNode<K,V> l, pp, lr;
        if (p != null && (l = p.left) != null) {
            if ((lr = p.left = l.right) != null)
                lr.parent = p;
            if ((pp = l.parent = p.parent) == null)
                (root = l).red = false;
            else if (pp.right == p)
                pp.right = l;
            else
                pp.left = l;
            l.right = p;
            p.parent = l;
        }
        return root;
    }
```

```java
    static <K,V> TreeNode<K,V> balanceInsertion(TreeNode<K,V> root,
                                                TreeNode<K,V> x) {
        x.red = true;
        for (TreeNode<K,V> xp, xpp, xppl, xppr;;) {
            if ((xp = x.parent) == null) {
                x.red = false;
                return x;
            }
            else if (!xp.red || (xpp = xp.parent) == null)
                return root;
            if (xp == (xppl = xpp.left)) {
                if ((xppr = xpp.right) != null && xppr.red) {
                    xppr.red = false;
                    xp.red = false;
                    xpp.red = true;
                    x = xpp;
                }
                else {
                    if (x == xp.right) {
                        root = rotateLeft(root, x = xp);
                        xpp = (xp = x.parent) == null ? null : xp.parent;
                    }
                    if (xp != null) {
                        xp.red = false;
                        if (xpp != null) {
                            xpp.red = true;
                            root = rotateRight(root, xpp);
                        }
                    }
                }
            }
            else {
                if (xppl != null && xppl.red) {
                    xppl.red = false;
                    xp.red = false;
                    xpp.red = true;
                    x = xpp;
                }
                else {
                    if (x == xp.left) {
                        root = rotateRight(root, x = xp);
                        xpp = (xp = x.parent) == null ? null : xp.parent;
                    }
                    if (xp != null) {
                        xp.red = false;
                        if (xpp != null) {
                            xpp.red = true;
                            root = rotateLeft(root, xpp);
                        }
                    }
                }
            }
        }
    }
```

```java
    static <K,V> TreeNode<K,V> balanceDeletion(TreeNode<K,V> root,
                                               TreeNode<K,V> x) {
        for (TreeNode<K,V> xp, xpl, xpr;;) {
            if (x == null || x == root)
                return root;
            else if ((xp = x.parent) == null) {
                x.red = false;
                return x;
            }
            else if (x.red) {
                x.red = false;
                return root;
            }
            else if ((xpl = xp.left) == x) {
                if ((xpr = xp.right) != null && xpr.red) {
                    xpr.red = false;
                    xp.red = true;
                    root = rotateLeft(root, xp);
                    xpr = (xp = x.parent) == null ? null : xp.right;
                }
                if (xpr == null)
                    x = xp;
                else {
                    TreeNode<K,V> sl = xpr.left, sr = xpr.right;
                    if ((sr == null || !sr.red) &&
                        (sl == null || !sl.red)) {
                        xpr.red = true;
                        x = xp;
                    }
                    else {
                        if (sr == null || !sr.red) {
                            if (sl != null)
                                sl.red = false;
                            xpr.red = true;
                            root = rotateRight(root, xpr);
                            xpr = (xp = x.parent) == null ?
                                null : xp.right;
                        }
                        if (xpr != null) {
                            xpr.red = (xp == null) ? false : xp.red;
                            if ((sr = xpr.right) != null)
                                sr.red = false;
                        }
                        if (xp != null) {
                            xp.red = false;
                            root = rotateLeft(root, xp);
                        }
                        x = root;
                    }
                }
            }
            else { // symmetric
                if (xpl != null && xpl.red) {
                    xpl.red = false;
                    xp.red = true;
                    root = rotateRight(root, xp);
                    xpl = (xp = x.parent) == null ? null : xp.left;
                }
                if (xpl == null)
                    x = xp;
                else {
                    TreeNode<K,V> sl = xpl.left, sr = xpl.right;
                    if ((sl == null || !sl.red) &&
                        (sr == null || !sr.red)) {
                        xpl.red = true;
                        x = xp;
                    }
                    else {
                        if (sl == null || !sl.red) {
                            if (sr != null)
                                sr.red = false;
                            xpl.red = true;
                            root = rotateLeft(root, xpl);
                            xpl = (xp = x.parent) == null ?
                                null : xp.left;
                        }
                        if (xpl != null) {
                            xpl.red = (xp == null) ? false : xp.red;
                            if ((sl = xpl.left) != null)
                                sl.red = false;
                        }
                        if (xp != null) {
                            xp.red = false;
                            root = rotateRight(root, xp);
                        }
                        x = root;
                    }
                }
            }
        }
    }
```

```java
    /**
     * Recursive invariant check
     */
    static <K,V> boolean checkInvariants(TreeNode<K,V> t) {
        TreeNode<K,V> tp = t.parent, tl = t.left, tr = t.right,
            tb = t.prev, tn = (TreeNode<K,V>)t.next;
        if (tb != null && tb.next != t)
            return false;
        if (tn != null && tn.prev != t)
            return false;
        if (tp != null && t != tp.left && t != tp.right)
            return false;
        if (tl != null && (tl.parent != t || tl.hash > t.hash))
            return false;
        if (tr != null && (tr.parent != t || tr.hash < t.hash))
            return false;
        if (t.red && tl != null && tl.red && tr != null && tr.red)
            return false;
        if (tl != null && !checkInvariants(tl))
            return false;
        if (tr != null && !checkInvariants(tr))
            return false;
        return true;
    }
}
```

4.5 关键方法

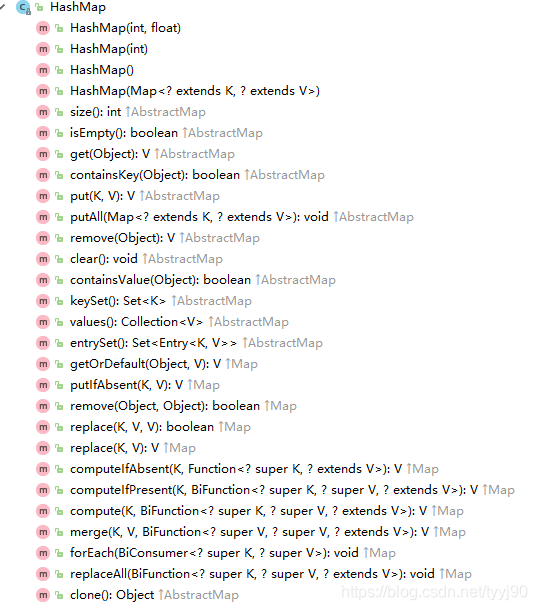

上图是HashMap的public方法,也就是我们使用HashMap的API。可以看到这些方法都是实现Map接口的,或者是实现抽象类AbstractMap的。

4.5.1 构造函数

从上图看到有四个构造函数。它们分别是:

```java
/**
 * Constructs an empty <tt>HashMap</tt> with the specified initial
 * capacity and load factor.
 *
 * @param  initialCapacity the initial capacity
 * @param  loadFactor      the load factor
 * @throws IllegalArgumentException if the initial capacity is negative
 *         or the load factor is nonpositive
 */
public HashMap(int initialCapacity, float loadFactor) {
    if (initialCapacity < 0)
        throw new IllegalArgumentException("Illegal initial capacity: " +
                                           initialCapacity);
    if (initialCapacity > MAXIMUM_CAPACITY)
        initialCapacity = MAXIMUM_CAPACITY;
    if (loadFactor <= 0 || Float.isNaN(loadFactor))
        throw new IllegalArgumentException("Illegal load factor: " +
                                           loadFactor);
    this.loadFactor = loadFactor;
    this.threshold = tableSizeFor(initialCapacity);
}

/**
 * Constructs an empty <tt>HashMap</tt> with the specified initial
 * capacity and the default load factor (0.75).
 *
 * @param  initialCapacity the initial capacity.
 * @throws IllegalArgumentException if the initial capacity is negative.
 */
public HashMap(int initialCapacity) {
    this(initialCapacity, DEFAULT_LOAD_FACTOR);
}

/**
 * Constructs an empty <tt>HashMap</tt> with the default initial capacity
 * (16) and the default load factor (0.75).
 */
public HashMap() {
    this.loadFactor = DEFAULT_LOAD_FACTOR; // all other fields defaulted
}

/**
 * Constructs a new <tt>HashMap</tt> with the same mappings as the
 * specified <tt>Map</tt>.  The <tt>HashMap</tt> is created with
 * default load factor (0.75) and an initial capacity sufficient to
 * hold the mappings in the specified <tt>Map</tt>.
 *
 * @param   m the map whose mappings are to be placed in this map
 * @throws  NullPointerException if the specified map is null
 */
public HashMap(Map<? extends K, ? extends V> m) {
    this.loadFactor = DEFAULT_LOAD_FACTOR;
    putMapEntries(m, false);
}
```

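Of these four constructors, the no-argument HashMap() is by far the most commonly used. It initializes the HashMap with the default parameters (initial capacity 16, load factor 0.75).

Next, consider HashMap(int initialCapacity, float loadFactor), which lets the caller specify both the initial capacity and the load factor. The constructor first validates its arguments, then assigns the load factor to the loadFactor field and assigns threshold by calling tableSizeFor(int cap). threshold normally holds the next size at which to resize, but while the table has not been allocated it temporarily holds the initial capacity (see the comment on the field). Since the capacity must be a power of two, tableSizeFor takes care of rounding the requested value up to one.

The single-argument constructor HashMap(int initialCapacity) simply delegates to HashMap(int initialCapacity, float loadFactor), passing 0.75 as the load factor.

Finally, HashMap(Map<? extends K, ? extends V> m) converts another Map into a HashMap, again with the load factor set to 0.75.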
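The four constructors can be exercised with a short sketch like the one below. The class and the `rejects` helper are made up for this example; the argument checks it probes are the ones visible in the constructor source above.

```java
import java.util.HashMap;
import java.util.Map;

final class ConstructorDemo {
    // Returns true if the two-argument constructor rejects the given arguments.
    static boolean rejects(int initialCapacity, float loadFactor) {
        try {
            new HashMap<String, Integer>(initialCapacity, loadFactor);
            return false;
        } catch (IllegalArgumentException e) {
            return true;
        }
    }

    // Copies a map using the Map-accepting constructor.
    static Map<String, Integer> copyOf(Map<String, Integer> source) {
        return new HashMap<>(source);
    }

    public static void main(String[] args) {
        Map<String, Integer> defaults = new HashMap<>();          // capacity 16, load factor 0.75
        Map<String, Integer> sized = new HashMap<>(64);           // capacity 64, load factor 0.75
        Map<String, Integer> tuned = new HashMap<>(64, 0.5f);     // capacity 64, load factor 0.5
        tuned.put("a", 1);
        Map<String, Integer> copy = copyOf(tuned);                // same mappings, load factor 0.75

        System.out.println(defaults.size() + " " + sized.size() + " " + copy);
        System.out.println(rejects(-1, 0.75f));     // negative capacity
        System.out.println(rejects(16, Float.NaN)); // invalid load factor
    }
}
```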

4.5.2 tableSizeFor(int cap)

As mentioned above, tableSizeFor rounds the requested initial capacity up to a power of two. Let us look at how it does that.

```java
/**
 * Returns a power of two size for the given target capacity.
 */
static final int tableSizeFor(int cap) {
    int n = cap - 1;
    n |= n >>> 1;
    n |= n >>> 2;
    n |= n >>> 4;
    n |= n >>> 8;
    n |= n >>> 16;
    return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
}
```

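With all the shifts and ORs, no pattern stands out at first glance, so this is a good moment for an example.

First, recall the >>> operator: it is the unsigned right shift. Whether the number is positive or negative, the high bits are always filled with 0.

Suppose cap = 11, so n = 10 (0b1010).

After n |= n >>> 1, n = 0b1111

After n |= n >>> 2, n = 0b1111

After n |= n >>> 4, n = 0b1111

After n |= n >>> 8, n = 0b1111

After n |= n >>> 16, n = 0b1111

Final step: n + 1 = 16 (2^4)

The example shows that the function does its job: it found a power of two that is greater than or equal to cap. The principle is still not obvious, though, so let us try another example and look for a pattern.

Suppose cap = 25, so n = 24 (0b0001 1000).

After n |= n >>> 1, n = 0b0001 1100

After n |= n >>> 2, n = 0b0001 1111

After n |= n >>> 4, n = 0b0001 1111

After n |= n >>> 8, n = 0b0001 1111

After n |= n >>> 16, n = 0b0001 1111

Final step: n + 1 = 32 (2^5)

A pattern is emerging: the algorithm sets every bit below the highest set bit of cap - 1 to 1, so adding 1 afterwards necessarily yields a power of two. 0b000…111… + 1 is always 2^n.

Setting the function aside for a moment: to find the smallest power of two greater than or equal to a number that is not itself a power of two, write the number in binary, move its highest set bit one position to the left, and clear all remaining bits. For 25 = 0b0001 1001 above, this gives 0b0010 0000 = 2^5.

Back to the algorithm: running the five shift-and-OR steps on 25 directly would also produce the correct result, so why subtract one first? The decrement handles inputs that are already a power of two. Without it, the shift-and-OR steps would not return the number itself but the next larger power of two.

After the first step, n |= n >>> 1, the highest set bit of n and the bit right below it are both 1;

After the second step, n |= n >>> 2, the two bits below those are also 1, so the top four bits are all 1;

After the third step, n |= n >>> 4, those four 1 bits are ORed onto the next four bits below them, so the top eight bits are all 1;

The remaining steps work the same way. In the end every bit below the highest set bit of cap - 1 is 1, and adding 1 gives the desired result.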
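The worked examples can be reproduced with a standalone copy of the method. The sketch below duplicates the logic into a made-up class (`TableSizeDemo`), and adds a hypothetical variant without the initial decrement to show why `cap - 1` matters for exact powers of two.

```java
final class TableSizeDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Smears the highest set bit of n into every lower bit position.
    static int smear(int n) {
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return n;
    }

    // Same logic as the tableSizeFor method shown above.
    static int tableSizeFor(int cap) {
        int n = smear(cap - 1);
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Hypothetical variant without the decrement, for comparison only.
    static int withoutDecrement(int cap) {
        return smear(cap) + 1;
    }

    public static void main(String[] args) {
        int[] caps = {11, 16, 17, 25};
        for (int cap : caps) {
            System.out.println(cap + " -> " + tableSizeFor(cap)
                    + " (without decrement: " + withoutDecrement(cap) + ")");
        }
    }
}
```

For cap = 16 the real method returns 16, while the variant without the decrement overshoots to 32.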

4.5.3 resize()

```java
/**
 * Initializes or doubles table size.  If null, allocates in
 * accord with initial capacity target held in field threshold.
 * Otherwise, because we are using power-of-two expansion, the
 * elements from each bin must either stay at same index, or move
 * with a power of two offset in the new table.
 *
 * @return the table
 */
final Node<K,V>[] resize() {
    Node<K,V>[] oldTab = table;
    int oldCap = (oldTab == null) ? 0 : oldTab.length;
    int oldThr = threshold;
    int newCap, newThr = 0;
    if (oldCap > 0) {
        if (oldCap >= MAXIMUM_CAPACITY) {
            threshold = Integer.MAX_VALUE;
            return oldTab;
        }
        else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&
                 oldCap >= DEFAULT_INITIAL_CAPACITY)
            newThr = oldThr << 1; // double threshold
    }
    else if (oldThr > 0) // initial capacity was placed in threshold
        newCap = oldThr;
    else {               // zero initial threshold signifies using defaults
        newCap = DEFAULT_INITIAL_CAPACITY;
        newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);
    }
    if (newThr == 0) {
        float ft = (float)newCap * loadFactor;
        newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?
                  (int)ft : Integer.MAX_VALUE);
    }
    threshold = newThr;
    @SuppressWarnings({"rawtypes","unchecked"})
    Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];
    table = newTab;
    if (oldTab != null) {
        for (int j = 0; j < oldCap; ++j) {
            Node<K,V> e;
            if ((e = oldTab[j]) != null) {
                oldTab[j] = null;
                if (e.next == null)
                    newTab[e.hash & (newCap - 1)] = e;
                else if (e instanceof TreeNode)
                    ((TreeNode<K,V>)e).split(this, newTab, j, oldCap);
                else { // preserve order
                    Node<K,V> loHead = null, loTail = null;
                    Node<K,V> hiHead = null, hiTail = null;
                    Node<K,V> next;
                    do {
                        next = e.next;
                        if ((e.hash & oldCap) == 0) {
                            if (loTail == null)
                                loHead = e;
                            else
                                loTail.next = e;
                            loTail = e;
                        }
                        else {
                            if (hiTail == null)
                                hiHead = e;
                            else
                                hiTail.next = e;
                            hiTail = e;
                        }
                    } while ((e = next) != null);
                    if (loTail != null) {
                        loTail.next = null;
                        newTab[j] = loHead;
                    }
                    if (hiTail != null) {
                        hiTail.next = null;
                        newTab[j + oldCap] = hiHead;
                    }
                }
            }
        }
    }
    return newTab;
}
```

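Above is the source of resize(). It first updates the capacity and threshold, then remaps the data in the old table into the enlarged table.

Normally the new capacity is twice the old capacity, and the threshold doubles accordingly. The new table is then created.

Once the new table exists, the contents of the old table have to be remapped into it. Each non-empty bucket falls into one of three cases:

1. The bucket holds a single node, with neither a linked list nor a tree;

2. The bucket holds a tree;

3. The bucket holds a linked list.

The first case is the simplest: e.hash & (newCap - 1) gives the position in the new table.

In the second case, the red-black tree is split into two bins according to e.hash & bit (where bit is oldCap); a resulting bin that has too few elements is converted from a tree back into a linked list.

In the third case, the linked list is split in two: e.hash & oldCap divides the old list into two new lists, which are assigned to newTab[j] and newTab[j + oldCap] respectively, completing the split.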
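Why testing a single bit is enough can be verified numerically. In the sketch below (class and method names are made up for illustration), the index derived from `hash & oldCap` is compared against the index obtained by masking with the doubled capacity directly.

```java
final class SplitIndexDemo {
    // Index in the doubled table, derived the way resize() does it: the node
    // either stays at its old index or moves up by exactly oldCap.
    static int newIndexViaSplit(int hash, int oldCap) {
        int oldIndex = hash & (oldCap - 1);
        return (hash & oldCap) == 0 ? oldIndex : oldIndex + oldCap;
    }

    // Index in the doubled table, computed directly with the new mask.
    static int newIndexDirect(int hash, int oldCap) {
        return hash & ((oldCap << 1) - 1);
    }

    // Checks that both computations agree for hashes 0..limit-1.
    static boolean agreeUpTo(int limit, int oldCap) {
        for (int hash = 0; hash < limit; hash++) {
            if (newIndexViaSplit(hash, oldCap) != newIndexDirect(hash, oldCap)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        int oldCap = 16;
        // Hashes 5, 21 and 37 all share old index 5 in a 16-bucket table.
        for (int hash : new int[] {5, 21, 37}) {
            System.out.println(hash + ": old index " + (hash & (oldCap - 1))
                    + ", new index " + newIndexViaSplit(hash, oldCap));
        }
        System.out.println(agreeUpTo(1 << 16, oldCap));
    }
}
```

Because the capacity is a power of two, doubling it adds exactly one bit to the index mask, and that bit is the one `hash & oldCap` tests.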

References

1. https://www.nagarro.com/en/blog/post/24/performance-improvement-for-hashmap-in-java-8