1.StatefulSet overview

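- Deploys stateful applications

- Gives each Pod an independent lifecycle while preserving Pod startup order and uniqueness

1. Stable, unique network identifiers and persistent storage

2. Ordered, graceful deployment and scaling, deletion and termination

3. Ordered rolling updates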

Use case: databases

How StatefulSet differs from Deployment:

Its Pods have an identity!

The three elements of identity:

- Domain name

- Hostname

- Storage (PVC)

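Stateless workloads suit: web, API, and microservice deployments; they can run on any node and do not depend on backend persistent storage.

Stateful workloads suit: applications that need a stable network identity (a fixed DNS name; the Pod IP itself can change when a Pod is rescheduled), where each Pod has its own storage and scaling up or down follows defined rules.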

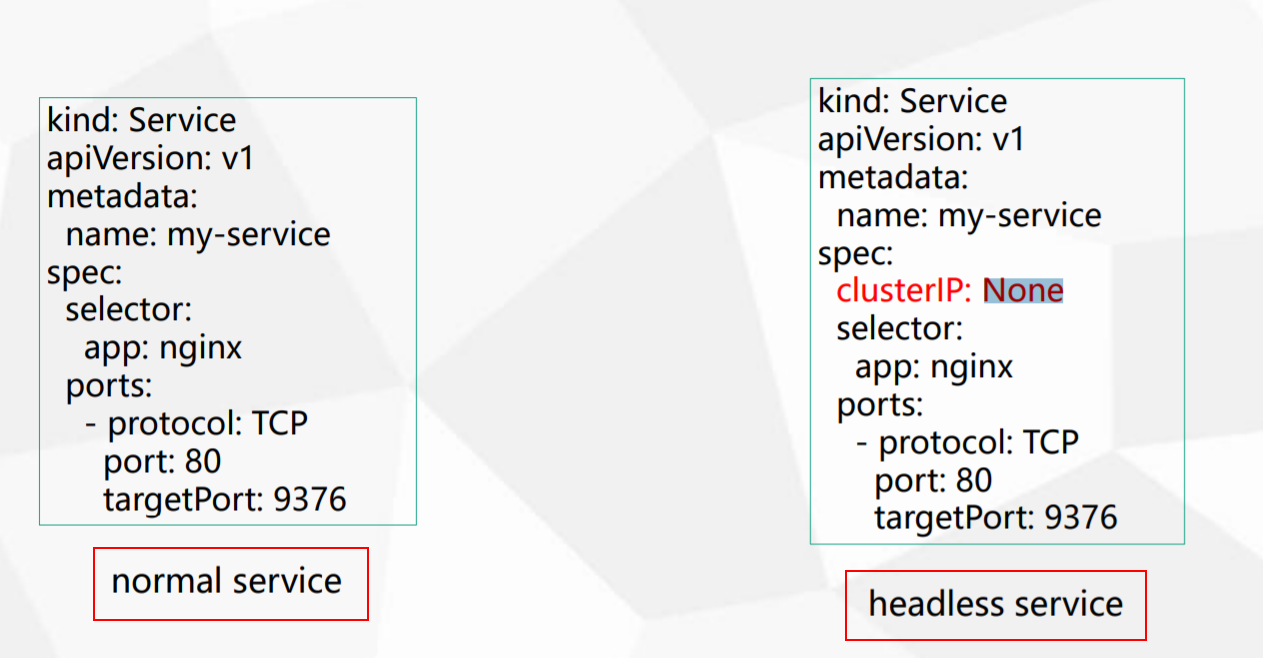

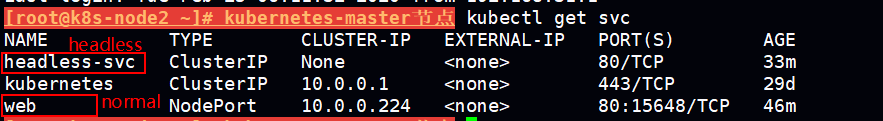

2.Normal Service vs. headless Service

Normal Service:

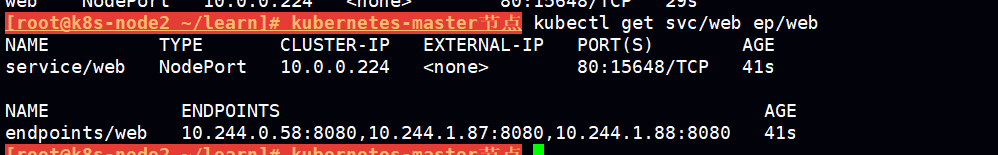

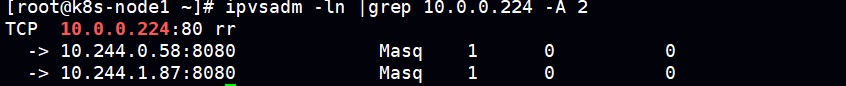

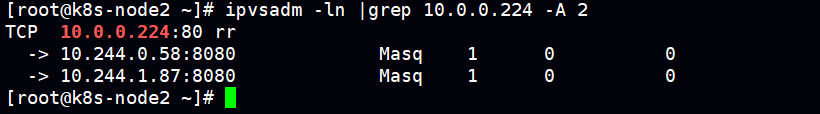

A single cluster IP, 10.0.0.224:80, acts as a reverse proxy in front of the endpoints:

10.244.0.58:8080 10.244.1.78:8080 10.244.1.88:8080
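To make the port mapping above concrete, here is a minimal sketch of a normal ClusterIP Service that would produce this kind of 80 to 8080 forwarding. The Service name and the `app: web` label are assumptions for illustration; they are not taken from the original manifests.

```yaml
# Hypothetical normal (ClusterIP) Service; name and labels are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: web              # assumed name
spec:
  type: ClusterIP        # the default; a cluster IP is allocated automatically
  selector:
    app: web             # assumed label carried by the backend Pods
  ports:
    - protocol: TCP
      port: 80           # port exposed on the cluster IP
      targetPort: 8080   # container port on each endpoint Pod
```

With three matching Pods, the endpoints list would contain three `<pod-ip>:8080` entries, as in the output above.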

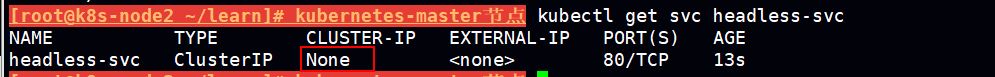

Headless Service:

A headless Service requires clusterIP: None, and nodePort cannot be set.
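As a rough sketch of the rule just stated, a headless Service manifest might look like the following. The name `headless-svc` comes from these notes; the `app: web` selector and the port are assumptions.

```yaml
# Minimal headless Service sketch; selector and port are assumed values.
apiVersion: v1
kind: Service
metadata:
  name: headless-svc
spec:
  clusterIP: None        # this is what makes the Service headless
  selector:
    app: web             # assumed label on the StatefulSet's Pods
  ports:
    - name: http
      port: 80           # assumed port; no nodePort may be specified here
```

Because no cluster IP is allocated, nothing is load-balanced at the Service level; DNS hands back the Pod addresses directly.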

web-headlessService.yaml

apiVersion

statefulSet.yaml: the serviceName field references the headless Service above (headless-svc)

apiVersion

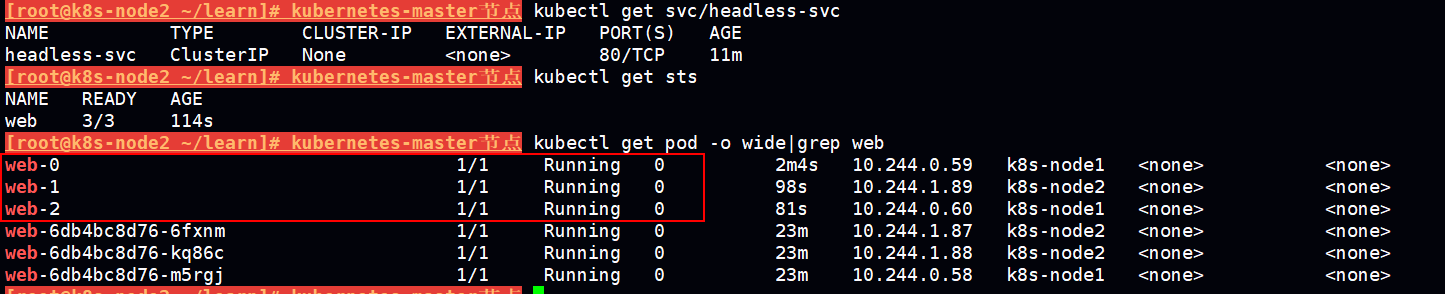

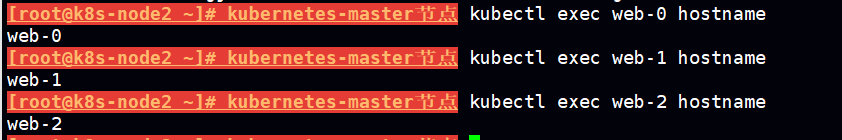

Every Pod created by a StatefulSet gets a stable, ordered identifier of the form statefulSetName-index, for example web-0.
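The naming rule can be seen in a minimal StatefulSet sketch like the one below. It assumes a StatefulSet named `web` governed by the `headless-svc` Service; the label, image, and replica count are illustrative choices, not the original manifest.

```yaml
# Minimal StatefulSet sketch; label, image, and replica count are assumptions.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web                    # Pods will be named web-0, web-1, web-2
spec:
  serviceName: headless-svc    # governing headless Service
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web               # must match spec.selector.matchLabels
    spec:
      containers:
        - name: nginx
          image: nginx:stable  # assumed image
          ports:
            - containerPort: 80
```

With the default ordered policy, web-0 must be Running and Ready before web-1 is created, and so on.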

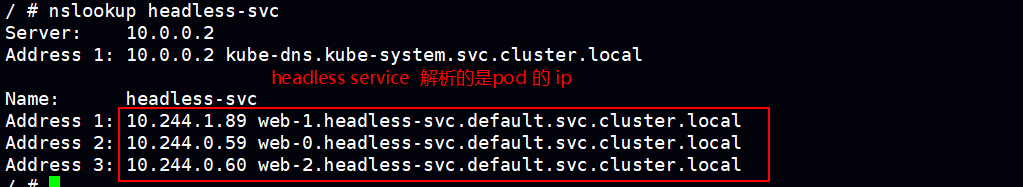

Differences in Service DNS resolution

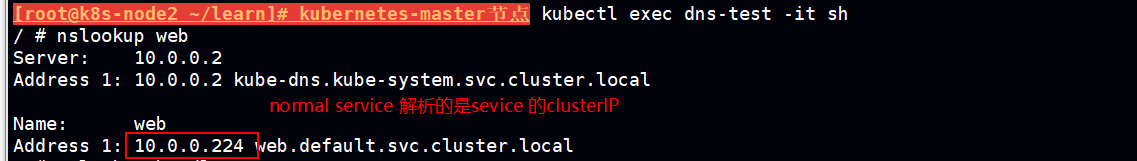

A normal Service name resolves to the Service's clusterIP.

Because a headless Service has clusterIP set to None, resolving the Service name returns the IPs of the Pods behind the Service.

A-record format with a ClusterIP (normal Service):

<service-name>.<namespace-name>.svc.cluster.local

Per-Pod A-record format with ClusterIP=None (headless Service):

<statefulsetName-index>.<service-name>.<namespace-name>.svc.cluster.local

Example: web-0.nginx.default.svc.cluster.local

Compared with the normal Service name, the per-Pod name behind a headless Service has one extra leading label, <statefulSetName-index>.

The hostname of a Pod managed by a StatefulSet is statefulSetName-index.

3.StatefulSet storage

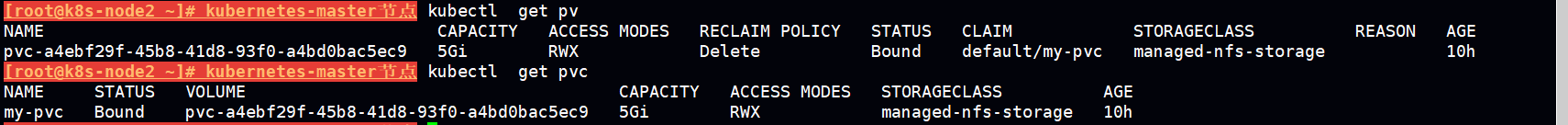

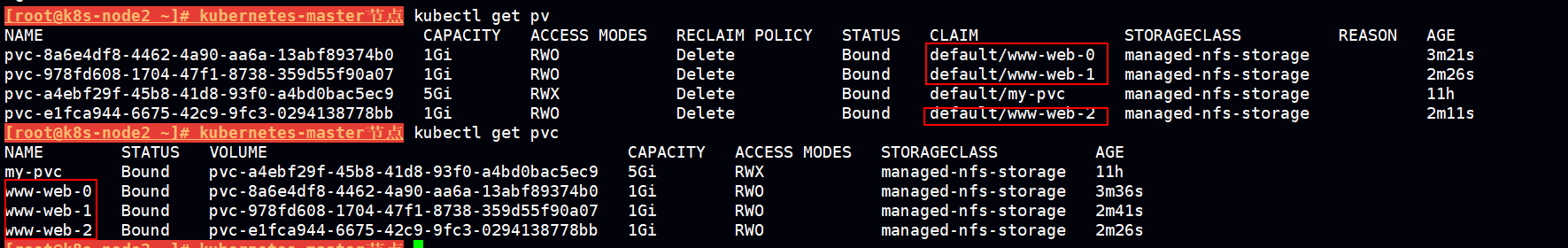

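A StatefulSet uses volumeClaimTemplates to create a PersistentVolume for each Pod, and at the same time creates and assigns each Pod its own numbered PVC.

PVs and PVCs that are dynamically provisioned for a stateless deployment carry no such number.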
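A sketch of how volumeClaimTemplates fits into a StatefulSet is shown below. The template name `www`, the mount path, the 1Gi size, and the reliance on a default StorageClass are all assumptions made for illustration.

```yaml
# StatefulSet sketch with per-Pod storage; names and sizes are assumptions.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: headless-svc
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: nginx
          image: nginx:stable                   # assumed image
          volumeMounts:
            - name: www                         # must match the template name below
              mountPath: /usr/share/nginx/html  # assumed mount path
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: ["ReadWriteOnce"]
        # storageClassName is omitted, so a default StorageClass is assumed to exist
        resources:
          requests:
            storage: 1Gi
```

Each PVC is named `<template-name>-<statefulset-name>-<index>`, so this sketch would yield `www-web-0` and `www-web-1`, each bound to its own PV.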

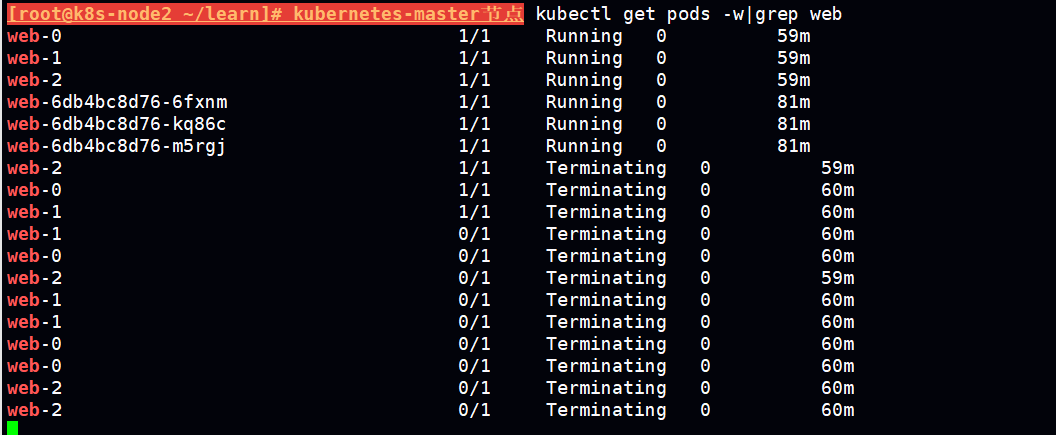

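StatefulSet deletion behavior

Run kubectl delete -f statefulSet.yaml

In this run the Pods were removed in creation order, with the earliest-created Pod deleted first. Kubernetes itself gives no ordering guarantee when a whole StatefulSet is deleted; for ordered, graceful termination, scale the StatefulSet down to 0 first, which removes Pods in reverse ordinal order.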

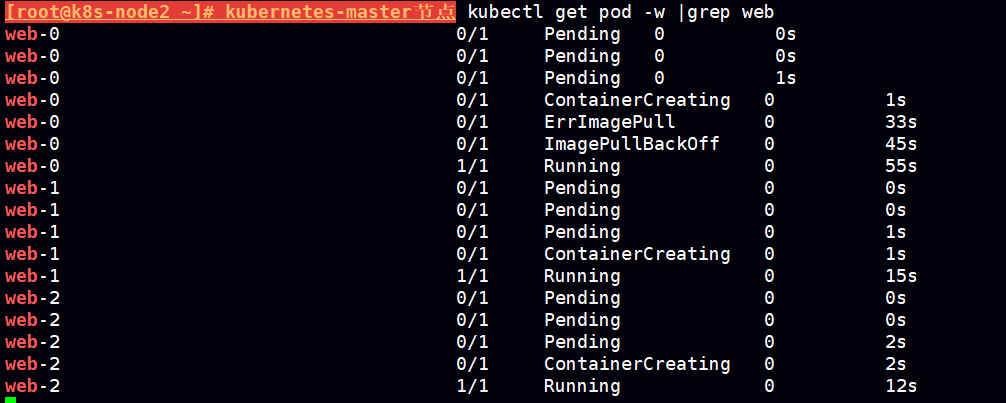

StatefulSet startup behavior

PVs and PVCs are created in order

so that each Pod is bound to the PV and PVC that correspond to it.

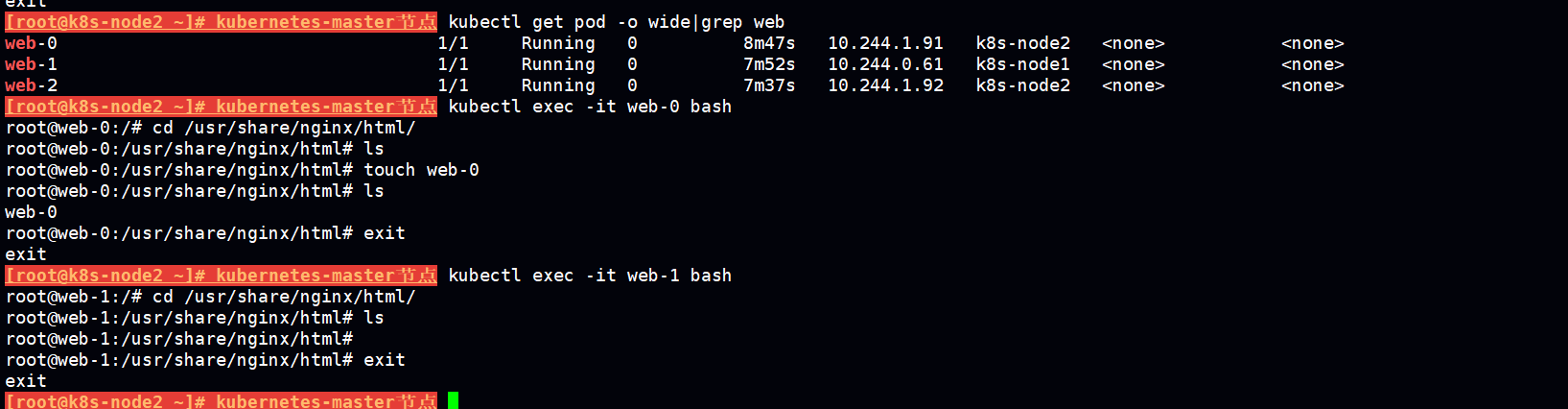

Storage is independent for each Pod and is not shared between Pods.

A file created in web-0 is not visible in web-1.

4.StatefulSet example (MySQL cluster)

Create a directory for the StatefulSet application

mkdir statefulset-mysqlCluster

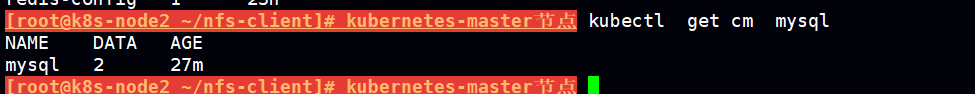

Create the ConfigMap

configmap.yaml mainly holds the master/replica configuration
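The original listing is not reproduced here, so the following is only a sketch of what such a ConfigMap could contain. The ConfigMap name and the keys `master.cnf` and `replica.cnf` are hypothetical; the two MySQL options shown are common choices for this split, not necessarily the ones used in these notes.

```yaml
# Hypothetical ConfigMap holding separate master and replica settings.
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql              # assumed name
  labels:
    app: mysql             # assumed label
data:
  master.cnf: |
    # Intended for the master only: enable the binary log used for replication.
    [mysqld]
    log-bin
  replica.cnf: |
    # Intended for replicas only: refuse writes from ordinary clients.
    [mysqld]
    super-read-only
```

In a setup like this, something (typically an init container) would still have to copy the right file into the MySQL config directory based on the Pod's ordinal, for example `master.cnf` for index 0.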

apiVersion

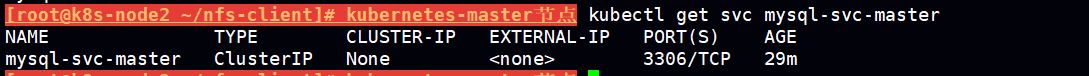

Create the Service

service.yaml

apiVersion

A headless Service must be created first so that the replicas can reach the master. A replica can address a specific Pod as <pod-name>.mysql-svc-master.
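A minimal sketch of such a headless Service follows. The name `mysql-svc-master` is taken from the text above; the `app: mysql` selector is an assumption, and 3306 is simply MySQL's default port.

```yaml
# Headless Service sketch for the MySQL Pods; the selector is an assumed label.
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc-master
spec:
  clusterIP: None          # headless: DNS returns per-Pod records
  selector:
    app: mysql             # assumed label on the MySQL Pods
  ports:
    - name: mysql
      port: 3306           # MySQL default port
```

If the StatefulSet were named `mysql` (an assumption), a replica in the same namespace could reach the first Pod at `mysql-0.mysql-svc-master`.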

Create the StatefulSet

apiVersion