Original version

```java
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStreamWriter;

public class StringGenerator {
    public static void main(String[] args) {
        try (OutputStreamWriter writer = new OutputStreamWriter(new FileOutputStream("text.text"))) {
            StringBuilder str = new StringBuilder();
            // 1000 copies of a 36-char chunk ending in '#', then one last token without '#'
            str.append("afiaongaoncanivnrfbnavoiadnvonvoron#".repeat(1000));
            str.append("aovnodnvds");
            writer.write(str.toString());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

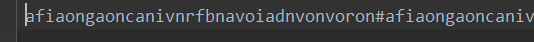

The file was written:

Let's see whether it can be read back:

It can, so on to the real test:

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.util.StringTokenizer;

public class SplitTest {
    public static void main(String[] args) {
        String str = "";
        // The generator writes no newline, so the whole file is one line.
        try (BufferedReader reader = new BufferedReader(new FileReader("text.text"))) {
            str = reader.readLine();
        } catch (IOException e) {
            e.printStackTrace();
        }

        long time0 = System.currentTimeMillis();
        // StringTokenizer: tokens are handed out one at a time.
        StringTokenizer st = new StringTokenizer(str, "#");
        while (st.hasMoreTokens()) {
            st.nextToken();
        }
        long time1 = System.currentTimeMillis();
        // split(): the full token array is built first, then walked.
        String[] array = str.split("#");
        for (String s : array) {}
        long time2 = System.currentTimeMillis();

        System.out.println("StringTokenizer run time (ms): " + (time1 - time0));
        System.out.println("split() run time (ms): " + (time2 - time1));
    }
}
```

Output:

StringTokenizer run time (ms): 9

split() run time (ms): 0
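Numbers this small are hard to interpret: each method runs once, timed with `System.currentTimeMillis()`, whose Javadoc warns that the granularity depends on the OS and may be tens of milliseconds, and whichever method is timed first also pays for class loading and JIT warm-up. Below is a minimal sketch of a fairer comparison, assuming an in-memory string built the same way as `text.text` (so no file I/O is involved). It warms both methods up, times several rounds with `System.nanoTime()`, and sums token lengths so the JIT cannot discard the loops as dead code. The class name `FairerSplitBenchmark`, the round counts and the repeat count are arbitrary illustrative choices. For publishable numbers, a harness such as JMH is the usual tool.

```java
import java.util.StringTokenizer;

public class FairerSplitBenchmark {

    // Walk all tokens with StringTokenizer and return the total token length,
    // so the work has an observable result.
    public static long tokenizerChecksum(String s) {
        long sum = 0;
        StringTokenizer st = new StringTokenizer(s, "#");
        while (st.hasMoreTokens()) {
            sum += st.nextToken().length();
        }
        return sum;
    }

    // Same checksum via split(): the whole array is materialized first.
    public static long splitChecksum(String s) {
        long sum = 0;
        for (String token : s.split("#")) {
            sum += token.length();
        }
        return sum;
    }

    public static void main(String[] args) {
        // Assumed input: same pattern as text.text, built in memory.
        String s = "afiaongaoncanivnrfbnavoiadnvonvoron#".repeat(100_000) + "aovnodnvds";

        // Warm-up so both methods are JIT-compiled before measurement.
        long sink = 0;
        for (int i = 0; i < 20; i++) {
            sink += tokenizerChecksum(s) + splitChecksum(s);
        }

        int rounds = 10;
        long t0 = System.nanoTime();
        for (int i = 0; i < rounds; i++) sink += tokenizerChecksum(s);
        long t1 = System.nanoTime();
        for (int i = 0; i < rounds; i++) sink += splitChecksum(s);
        long t2 = System.nanoTime();

        System.out.printf("StringTokenizer: %.2f ms/round%n", (t1 - t0) / 1e6 / rounds);
        System.out.printf("split(): %.2f ms/round%n", (t2 - t1) / 1e6 / rounds);
        System.out.println("checksum (ignore): " + sink);
    }
}
```

Both checksums are equal for this input because every token except the last is 35 chars. That also confirms the two methods see the same tokens here.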

Text content ×10 (repeat(10000))

```java
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStreamWriter;

public class StringGenerator {
    public static void main(String[] args) {
        try (OutputStreamWriter writer = new OutputStreamWriter(new FileOutputStream("text.text"))) {
            StringBuilder str = new StringBuilder();
            str.append("afiaongaoncanivnrfbnavoiadnvonvoron#".repeat(10000));
            str.append("aovnodnvds");
            writer.write(str.toString());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

Output:

StringTokenizer run time (ms): 10

split() run time (ms): 0

Text content ×100 (repeat(100000))

```java
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStreamWriter;

public class StringGenerator {
    public static void main(String[] args) {
        try (OutputStreamWriter writer = new OutputStreamWriter(new FileOutputStream("text.text"))) {
            StringBuilder str = new StringBuilder();
            str.append("afiaongaoncanivnrfbnavoiadnvonvoron#".repeat(100000));
            str.append("aovnodnvds");
            writer.write(str.toString());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

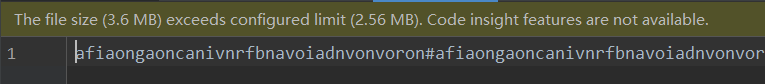

Take a look at this 3.6 MB file...

Output:

StringTokenizer run time (ms): 20

split() run time (ms): 10
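The generator versions differ only in their repeat count. Below is a hypothetical variant, with the illustrative name `StringGeneratorArg`, that takes the count as a command-line argument and checks the file size against the expected 36 bytes per repeat plus the 10-byte tail. It writes UTF-8 explicitly. For this ASCII-only text, that should give the same bytes as the original's platform-default charset on common systems.

```java
import java.io.IOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public class StringGeneratorArg {
    static final String UNIT = "afiaongaoncanivnrfbnavoiadnvonvoron#"; // 36 chars
    static final String TAIL = "aovnodnvds";                           // 10 chars

    // Expected size in bytes: all characters are ASCII, so 1 byte each in UTF-8.
    public static long expectedBytes(long repeats) {
        return UNIT.length() * repeats + TAIL.length();
    }

    public static void main(String[] args) throws IOException {
        int repeats = args.length > 0 ? Integer.parseInt(args[0]) : 1000;
        Path file = Path.of("text.text");
        // Default options create or truncate the file, like FileOutputStream.
        try (Writer writer = Files.newBufferedWriter(file, StandardCharsets.UTF_8)) {
            writer.write(UNIT.repeat(repeats));
            writer.write(TAIL);
        }
        System.out.printf("expected %,d bytes, actual %,d bytes%n",
                expectedBytes(repeats), Files.size(file));
    }
}
```

For example, `java StringGeneratorArg 100000` should report 3,600,010 bytes, which is the 3.6 MB file above.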

Text content ×1000 (repeat(1000000))

```java
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStreamWriter;

public class StringGenerator {
    public static void main(String[] args) {
        try (OutputStreamWriter writer = new OutputStreamWriter(new FileOutputStream("text.text"))) {
            StringBuilder str = new StringBuilder();
            str.append("afiaongaoncanivnrfbnavoiadnvonvoron#".repeat(1000000));
            str.append("aovnodnvds");
            writer.write(str.toString());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

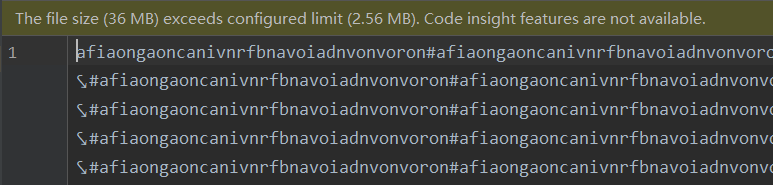

A brutal 36 MB:

Output:

StringTokenizer run time (ms): 90

split() run time (ms): 140

Text content ×10000 (10,000,000 repeats in total)

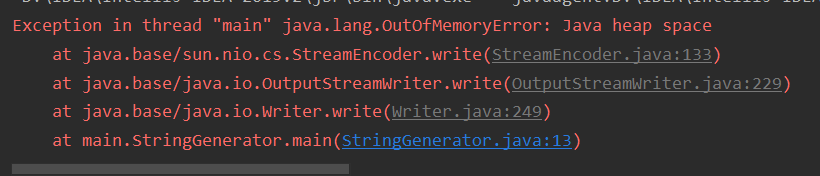

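At this point the JVM started to buckle. I forget whether it was at 10,000× or 100,000× the data, but once the data reached roughly the GB range, the JVM simply crashed...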
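A little arithmetic helps explain why heap tuning alone may not rescue the single-write approach. `String.length()` returns an `int`, so no Java `String` can hold more than `Integer.MAX_VALUE` (2,147,483,647) chars. The real limit in a given JVM may be slightly lower, and in OpenJDK `String.repeat()` throws `OutOfMemoryError` when its result would be too long. At ×10000 the generator needs repeat(10,000,000), or 360 million chars. That still fits, but the program holds several copies of it at once: the `repeat()` result, the `StringBuilder` buffer and the `toString()` copy, each hundreds of MB even with Java's compact Latin-1 strings. At ×100000 it would need 3.6 billion chars, which no heap size can accommodate in one `String`. The class name `StringSizeCheck` is illustrative.

```java
public class StringSizeCheck {
    static final int UNIT_LENGTH = "afiaongaoncanivnrfbnavoiadnvonvoron#".length(); // 36

    // Chars needed for the unit repeated `repeats` times, computed in long to avoid overflow.
    public static long requiredChars(long repeats) {
        return UNIT_LENGTH * repeats;
    }

    // A String's length is an int, so anything above Integer.MAX_VALUE cannot be one String.
    public static boolean fitsInOneString(long repeats) {
        return requiredChars(repeats) <= Integer.MAX_VALUE;
    }

    public static void main(String[] args) {
        long[] repeatCounts = {1_000L, 10_000L, 100_000L, 1_000_000L, 10_000_000L, 100_000_000L};
        for (long r : repeatCounts) {
            System.out.printf("repeat(%,d): %,d chars, fits in one String: %b%n",
                    r, requiredChars(r), fitsInOneString(r));
        }
    }
}
```

repeat(10,000,000) still fits, while repeat(100,000,000) does not. Past that point, the data has to be split into several strings, for example one per line.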

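I tried tuning the JVM, which didn't really help. So instead of writing everything in one go, I switched to writing in batches, each in append mode...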

```java
import java.io.FileWriter;
import java.io.IOException;

public class StringGenerator {
    public static void main(String[] args) {
        // Append mode: every run adds to text.text, so delete the file first
        // if it should contain only this run's lines.
        try (FileWriter writer = new FileWriter("text.text", true)) {
            // One 36 MB line, built once and reused for every batch.
            String line = "afiaongaoncanivnrfbnavoiadnvonvoron#".repeat(1000000);
            for (int i = 0; i < 10; i++) {
                // Write the line and its newline separately so all 10 lines are identical.
                writer.write(line);
                writer.write('\n');
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

That's 360 MB of data now. Scary...
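Before timing anything on a file this size, it is worth checking that the file has the intended shape: 10 lines of 36,000,000 chars each, with nothing left over from earlier runs, since the writer opens the file in append mode. Below is a minimal sketch, assuming the file is ASCII/UTF-8. It reads one line at a time and records each line's length. The class name `FileLayoutCheck` is illustrative.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

public class FileLayoutCheck {
    // Length of every line in the file; only one line is held in memory at a time.
    public static List<Integer> lineLengths(Path file) throws IOException {
        List<Integer> lengths = new ArrayList<>();
        try (BufferedReader reader = Files.newBufferedReader(file, StandardCharsets.UTF_8)) {
            String line;
            while ((line = reader.readLine()) != null) {
                lengths.add(line.length());
            }
        }
        return lengths;
    }

    public static void main(String[] args) throws IOException {
        Path file = Path.of(args.length > 0 ? args[0] : "text.text");
        List<Integer> lengths = lineLengths(file);
        System.out.println("lines: " + lengths.size() + ", file bytes: " + Files.size(file));
        System.out.println("line lengths: " + lengths);
    }
}
```

For the 10-batch file, the expected output is 10 lines, each 36,000,000 long. Any extra or shorter line points to leftover content or a generator problem.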

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.util.StringTokenizer;

public class SplitTest {
    public static void main(String[] args) {
        // Read all 10 lines (about 36 MB each) into memory before timing.
        String[] strings = new String[10];
        try (BufferedReader reader = new BufferedReader(new FileReader("text.text"))) {
            for (int i = 0; i < 10; i++) {
                strings[i] = reader.readLine();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }

        long time0 = System.currentTimeMillis();
        for (String s : strings) {
            StringTokenizer st = new StringTokenizer(s, "#");
            while (st.hasMoreTokens()) {
                st.nextToken();
            }
        }
        long time1 = System.currentTimeMillis();
        for (String s : strings) {
            String[] array = s.split("#");
            for (String str : array) {}
        }
        long time2 = System.currentTimeMillis();

        System.out.println("StringTokenizer run time (ms): " + (time1 - time0));
        System.out.println("split() run time (ms): " + (time2 - time1));
    }
}
```

Results:

StringTokenizer run time (ms): 316

split() run time (ms): 609

Text content ×100,000 (100 lines × 1,000,000 repeats)

```java
import java.io.FileWriter;
import java.io.IOException;

public class StringGenerator {
    public static void main(String[] args) {
        // Append mode: every run adds to text.text, so delete the file first
        // if it should contain only this run's lines.
        try (FileWriter writer = new FileWriter("text.text", true)) {
            // One 36 MB line, built once and reused for every batch.
            String line = "afiaongaoncanivnrfbnavoiadnvonvoron#".repeat(1000000);
            for (int i = 0; i < 100; i++) {
                // Write the line and its newline separately so all 100 lines are identical.
                writer.write(line);
                writer.write('\n');
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

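A single file with a hellish 3.96 GB of data, which is terrifying (although compared with real big-data volumes this is nothing). The 100 lines × 36 MB account for 3.6 GB. The remaining 0.36 GB matches the earlier 360 MB run, which presumably stayed at the start of the file because it was opened in append mode.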

I didn't dare read it all at once, so it is read back line by line, 100 lines:

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.util.StringTokenizer;

public class SplitTest {
    public static void main(String[] args) {
        // All 100 lines (about 36 MB each) stay in memory at once,
        // so this needs a heap of several GB (-Xmx).
        String[] strings = new String[100];
        try (BufferedReader reader = new BufferedReader(new FileReader("text.text"))) {
            for (int i = 0; i < 100; i++) {
                strings[i] = reader.readLine();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }

        long time0 = System.currentTimeMillis();
        for (String s : strings) {
            StringTokenizer st = new StringTokenizer(s, "#");
            while (st.hasMoreTokens()) {
                st.nextToken();
            }
        }
        long time1 = System.currentTimeMillis();
        for (String s : strings) {
            String[] array = s.split("#");
            for (String str : array) {}
        }
        long time2 = System.currentTimeMillis();

        System.out.println("StringTokenizer run time (ms): " + (time1 - time0));
        System.out.println("split() run time (ms): " + (time2 - time1));
    }
}
```

Results:

StringTokenizer run time (ms): 650

split() run time (ms): 1805

Comparison summary

java.util.StringTokenizer is an old API that has existed since Java 1.0. For small inputs it looks less concise than split() and, in these runs, slower.
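The timings don't show this, but the two are not drop-in replacements. `StringTokenizer` treats its argument as a set of delimiter characters, merges consecutive delimiters and never returns empty tokens. Its Javadoc also describes it as a legacy class kept for compatibility. `split()` takes a regular expression, keeps empty tokens in the middle and, with the default limit, drops only trailing empty strings. (In OpenJDK, a single ordinary character such as `"#"` takes a fast path that skips the regex engine.) The small sketch below shows the difference on one string. The class and method names are illustrative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringTokenizer;

public class TokenSemantics {
    // Tokens as StringTokenizer sees them: empty tokens are skipped.
    public static List<String> viaTokenizer(String s, String delims) {
        List<String> out = new ArrayList<>();
        StringTokenizer st = new StringTokenizer(s, delims);
        while (st.hasMoreTokens()) {
            out.add(st.nextToken());
        }
        return out;
    }

    // Tokens as split() sees them: inner empty tokens kept, trailing ones removed.
    public static List<String> viaSplit(String s, String regex) {
        return Arrays.asList(s.split(regex));
    }

    public static void main(String[] args) {
        String s = "a##b#";
        System.out.println(viaTokenizer(s, "#")); // [a, b]
        System.out.println(viaSplit(s, "#"));     // [a, , b]
    }
}
```

For the generated test file, both produce the same tokens because it never contains two `#` in a row. With real data that has empty fields, the choice changes the result, not just the speed.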

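As the data grows, however, split() collects every token into an array, and that array becomes huge and unwieldy. The array itself only holds references, since String is a reference type; otherwise allocating such a large block would be ruinous on its own. Even so, each token is still a separate String object, so split() keeps all of a line's tokens (about 1,000,000 per 36 MB line here) alive at the same time. StringTokenizer returns one token per nextToken() call, and each token can be garbage-collected as soon as it is no longer used.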

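So when the data volume is huge and still has to be read from a file (by that point it obviously ought to be in a database), you can use java.util.StringTokenizer and avoid having split() allocate yet another large array!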
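The same reasoning can be taken one step further. The SplitTest programs above still read every line into a `String[]` before tokenizing, so the 100-line run keeps roughly 100 × 36 MB of text in memory whichever tokenizer is used. Below is a minimal sketch, assuming the goal is only to process the tokens (here, count them). It reads and tokenizes one line at a time, so only a single line is held at once. `StreamingTokenCount` and `countTokens` are hypothetical names.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.StringTokenizer;

public class StreamingTokenCount {
    // Reads one line, tokenizes it, then moves on: earlier lines become garbage
    // instead of accumulating in an array. The reader is closed when done.
    public static long countTokens(Reader source, String delims) throws IOException {
        long count = 0;
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                StringTokenizer st = new StringTokenizer(line, delims);
                while (st.hasMoreTokens()) {
                    st.nextToken();
                    count++;
                }
            }
        }
        return count;
    }

    public static void main(String[] args) throws IOException {
        Path file = Path.of(args.length > 0 ? args[0] : "text.text");
        long start = System.nanoTime();
        long tokens = countTokens(Files.newBufferedReader(file, StandardCharsets.UTF_8), "#");
        long ms = (System.nanoTime() - start) / 1_000_000;
        System.out.println(tokens + " tokens in " + ms + " ms");
    }
}
```

Peak memory here is one line plus the reader's buffer, rather than the whole file. For the 100-line file, that means one 36 MB line at a time.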