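I have recently been looking to optimize our existing business framework, and I plan to build a data warehouse system that uses Hive as its storage layer.

I had no prior experience with Hive and this is my first time using it, so corrections are very welcome.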

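Tips:

Make sure Hadoop is already installed before you install Hive.

This setup has a limitation: Hive uses an embedded Derby database as its metastore, and embedded Derby accepts only one connection at a time, so opening a second connection to Hive fails with an error!

Solution: use an external metastore database instead. See my next article:

HIVE Part 2: Hive on MySQL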

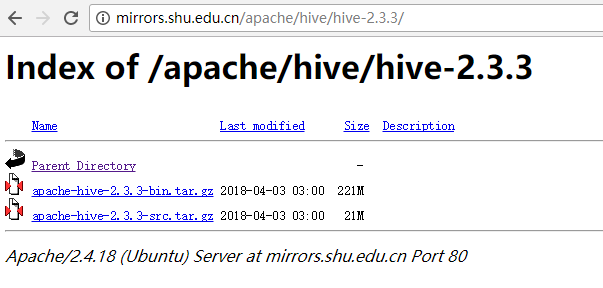

Step 1: Download the tarball

First, download the latest .tar.gz package from the Hive website.

The version I downloaded is apache-hive-2.3.3-bin:

http://mirrors.shu.edu.cn/apache/hive/hive-2.3.3/

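Step 2: Extract

Once the download finishes, extract the archive.

Extraction command (-z decompresses gzip, -x extracts, -v lists each file as it is extracted, -f names the archive, and -C sets the target directory):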

tar -zxvf xx.tar.gz -C outputDir
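If you script the installation instead of typing the command, the same extraction can be done with Python's standard library. This is a minimal sketch: the function name extract_tarball is hypothetical, and unlike tar -C it creates the output directory when it is missing.

```python
import tarfile
from pathlib import Path


def extract_tarball(archive, output_dir):
    """Extract a .tar.gz archive into output_dir, like `tar -zxvf archive -C output_dir`.

    Returns the member names, similar to the list tar's -v flag prints.
    """
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)  # tar -C would fail if the directory did not exist
    with tarfile.open(archive, mode="r:gz") as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons offer extraction filters; "data" rejects absolute or escaping paths.
            tar.extractall(path=out, filter="data")
        else:
            tar.extractall(path=out)
    return names
```

For example, extract_tarball("apache-hive-2.3.3-bin.tar.gz", "/usr/local/hive_home") would unpack the release into /usr/local/hive_home/apache-hive-2.3.3-bin.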

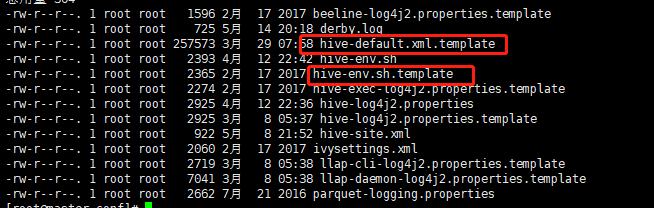

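Step 3: Edit the configuration files

First, take a look at the conf directory; we need to modify some of the configuration files in it.

Two of these files are explained here: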

hive-default.xml.template

hive-env.sh.template

hive-default.xml.template

It contains the default value and an explanation for every configurable Hive property. If you forget what a setting means, you can look it up here.
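To look up a property without scrolling through the whole template, a few lines of Python can search it. This is a minimal sketch, assuming the template uses the usual configuration/property/name/value/description layout; lookup_property and _drop_illegal_char_refs are hypothetical names. As a precaution, the helper replaces character references to control characters that XML 1.0 forbids, in case a template contains one and a strict parser would otherwise reject the file.

```python
import re
import xml.etree.ElementTree as ET


def _drop_illegal_char_refs(text):
    # XML 1.0 forbids control characters other than tab, LF and CR,
    # even when written as character references such as &#8;.
    def repl(match):
        ref = match.group(1)
        code = int(ref[1:], 16) if ref[0] in "xX" else int(ref)
        return match.group(0) if code in (9, 10, 13) or code >= 32 else " "

    return re.sub(r"&#([xX][0-9a-fA-F]+|[0-9]+);", repl, text)


def lookup_property(xml_text, name):
    """Return (value, description) of property `name`, or None if it is not defined."""
    root = ET.fromstring(_drop_illegal_char_refs(xml_text))
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            value = (prop.findtext("value") or "").strip()
            # Collapse the multi-line description into a single line of text.
            description = " ".join((prop.findtext("description") or "").split())
            return value, description
    return None
```

Usage, from the Hive home directory: lookup_property(Path("conf/hive-default.xml.template").read_text(encoding="utf-8"), "hive.metastore.warehouse.dir") (with from pathlib import Path).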

hive-env.sh.template

Describes the environment variables that Hive needs.

Copy it to hive-env.sh and fill in the following:

hive-env.sh

```sh
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Set Hive and Hadoop environment variables here. These variables can be used
# to control the execution of Hive. It should be used by admins to configure
# the Hive installation (so that users do not have to set environment variables
# or set command line parameters to get correct behavior).
#
# The hive service being invoked (CLI etc.) is available via the environment
# variable SERVICE

# Hive Client memory usage can be an issue if a large number of clients
# are running at the same time. The flags below have been useful in
# reducing memory usage:
#
# if [ "$SERVICE" = "cli" ]; then
#   if [ -z "$DEBUG" ]; then
#     export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:+UseParNewGC -XX:-UseGCOverheadLimit"
#   else
#     export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:-UseGCOverheadLimit"
#   fi
# fi

# The heap size of the jvm stared by hive shell script can be controlled via:
# export HADOOP_HEAPSIZE=1024
#
# Larger heap size may be required when running queries over large number of files or partitions.
# By default hive shell scripts use a heap size of 256 (MB). Larger heap size would also be
# appropriate for hive server.

# Set HADOOP_HOME to point to a specific hadoop install directory
HADOOP_HOME=/usr/local/hadoop

# Hive Configuration Directory can be controlled by:
export HIVE_CONF_DIR=/usr/local/hive/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:
export HIVE_AUX_JARS_PATH=/usr/local/hive/lib
```
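The copy-and-edit step can also be scripted. This is a minimal sketch, assuming it runs against Hive's conf directory; write_hive_env is a hypothetical helper that copies hive-env.sh.template to hive-env.sh and appends one export line per setting. Values go through shlex.quote so a path containing spaces stays a single shell word.

```python
import shlex
import shutil
from pathlib import Path


def write_hive_env(conf_dir, settings):
    """Copy hive-env.sh.template to hive-env.sh and append `export KEY=VALUE` lines."""
    conf = Path(conf_dir)
    target = conf / "hive-env.sh"
    shutil.copyfile(conf / "hive-env.sh.template", target)
    with target.open("a", encoding="utf-8") as f:
        f.write("\n# Settings appended by setup script\n")
        for key, value in settings.items():
            # shlex.quote leaves simple paths untouched and quotes anything unsafe.
            f.write(f"export {key}={shlex.quote(value)}\n")
    return target
```

Usage: write_hive_env("/usr/local/hive/conf", {"HADOOP_HOME": "/usr/local/hadoop", "HIVE_CONF_DIR": "/usr/local/hive/conf"}). Appending keeps the template's comments intact; because the template's own settings are commented out, the appended lines are the ones that take effect.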

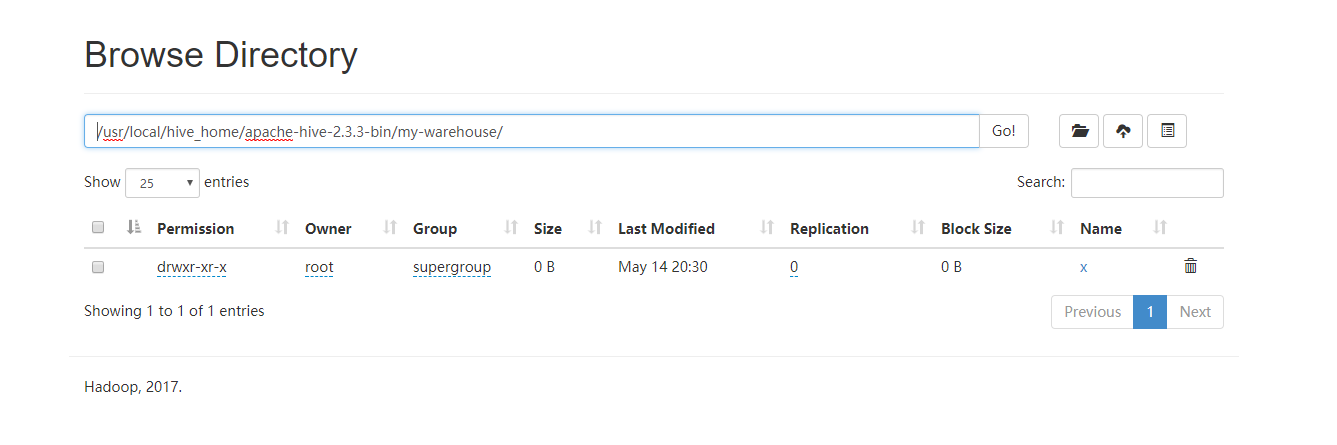

Next, create a new hive-site.xml containing our own custom settings:

hive-site.xml

```xml
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/usr/local/hive_home/apache-hive-2.3.3-bin/my-warehouse</value>
    <description>
      Local or HDFS directory where Hive keeps table contents.
    </description>
  </property>
  <property>
    <name>hive.metastore.local</name>
    <value>true</value>
    <description>
      Use false if a production metastore server is used.
    </description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:derby:;databaseName=/usr/local/hive_home/apache-hive-2.3.3-bin/my-metastore;create=true</value>
    <description>
      The JDBC connection URL.
    </description>
  </property>
</configuration>
```
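Rather than writing hive-site.xml by hand, it can be generated so that every property element is well-formed and special characters in values (such as & in a JDBC URL) are escaped automatically. A sketch using xml.etree.ElementTree; build_hive_site is a hypothetical helper, and it omits the optional xml-stylesheet line, which Hive does not need.

```python
import xml.etree.ElementTree as ET


def build_hive_site(props):
    """Render hive-site.xml text from (name, value, description) triples."""
    root = ET.Element("configuration")
    for name, value, description in props:
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value  # ElementTree escapes &, < and > here
        ET.SubElement(prop, "description").text = description
    if hasattr(ET, "indent"):  # ET.indent is only available on Python 3.9+
        ET.indent(root)
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8" standalone="no"?>\n' + body + "\n"


if __name__ == "__main__":
    home = "/usr/local/hive_home/apache-hive-2.3.3-bin"
    print(build_hive_site([
        ("hive.metastore.warehouse.dir", home + "/my-warehouse",
         "Local or HDFS directory where Hive keeps table contents."),
        ("javax.jdo.option.ConnectionURL",
         "jdbc:derby:;databaseName=" + home + "/my-metastore;create=true",
         "The JDBC connection URL."),
    ]))
```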

The key properties explained:

hive.metastore.warehouse.dir

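Specifies the default location where Hive stores managed tables. Tables in the default database are stored directly under this directory, while tables in any other database are stored in a subdirectory named after that database, i.e. database.db/tablename/. Note that a path without a scheme is resolved against Hadoop's default file system (fs.defaultFS), so with HDFS configured this directory is created in HDFS rather than on the local disk.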
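The layout rule above can be expressed as a small function. This sketch assumes the table and its database were created without an explicit LOCATION clause and that names are lowercased, as Hive stores them; managed_table_location is a hypothetical name.

```python
from pathlib import PurePosixPath


def managed_table_location(warehouse_dir, database, table):
    """Default directory of a managed table under hive.metastore.warehouse.dir."""
    base = PurePosixPath(warehouse_dir)
    db = database.lower()
    tbl = table.lower()
    if db == "default":
        # Tables in the default database sit directly under the warehouse root.
        return str(base / tbl)
    # Other databases get their own "<name>.db" subdirectory.
    return str(base / f"{db}.db" / tbl)
```

For instance, with the warehouse directory configured above, a table orders in database sales would land in .../my-warehouse/sales.db/orders.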

hive.metastore.local

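Indicates whether the metastore, i.e. the metadata describing Hive tables (their schemas, storage locations, and so on), runs locally inside the Hive process (true) or is served by a separate metastore server (false). Note that this property was removed in Hive 0.10: newer versions, including 2.3.3, ignore it and assume a local metastore whenever hive.metastore.uris is empty. The metadata itself lives in the Derby database named by javax.jdo.option.ConnectionURL; create=true tells Derby to create that database if it does not exist yet.

With hive-env.sh and hive-site.xml edited, the configuration is complete.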

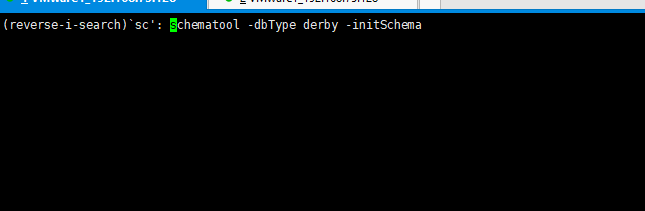

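Step 4: Initialize the Derby database

When Derby is configured for the first time, its database has not been initialized yet, so we need to initialize the metastore schema.

Run the schematool utility in the bin directory: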

schematool -dbType derby -initSchema
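If the setup is automated, the same command can be wrapped in Python. This is a sketch: schematool_cmd and run_schematool are hypothetical helpers, and hive_home is assumed to be the directory extracted in Step 2. schematool reads the JDBC URL from hive-site.xml, so with the absolute databaseName path configured above the Derby files end up in my-metastore regardless of the current directory. Running -initSchema again against an already initialized metastore typically fails, so check=True is used to surface the error instead of ignoring it.

```python
import subprocess
from pathlib import PurePosixPath


def schematool_cmd(hive_home, db_type="derby", action="-initSchema"):
    """Build the schematool command line, e.g. `bin/schematool -dbType derby -initSchema`."""
    return [str(PurePosixPath(hive_home) / "bin" / "schematool"), "-dbType", db_type, action]


def run_schematool(hive_home, db_type="derby", action="-initSchema"):
    """Run schematool; raises CalledProcessError if it exits with a non-zero status."""
    return subprocess.run(
        schematool_cmd(hive_home, db_type, action),
        check=True, capture_output=True, text=True,
    )
```

schematool also accepts -info, which prints the metastore schema version; run_schematool(home, action="-info") is a quick way to confirm that initialization succeeded.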

Step 5: Start the Hive service

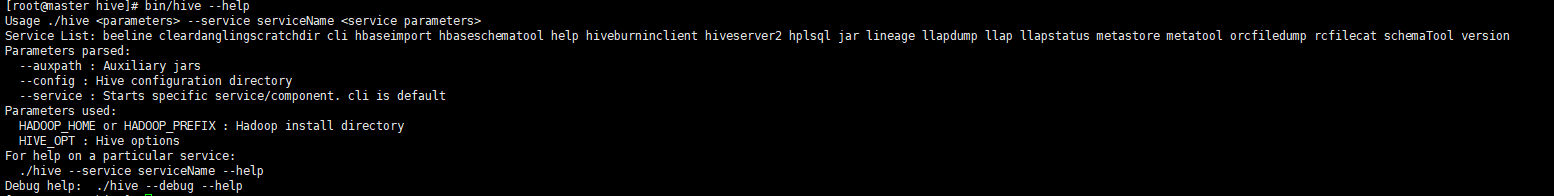

Start Hive by running bin/hive.

(The service started by default is the Hive CLI; run it with --help to see the available options.)

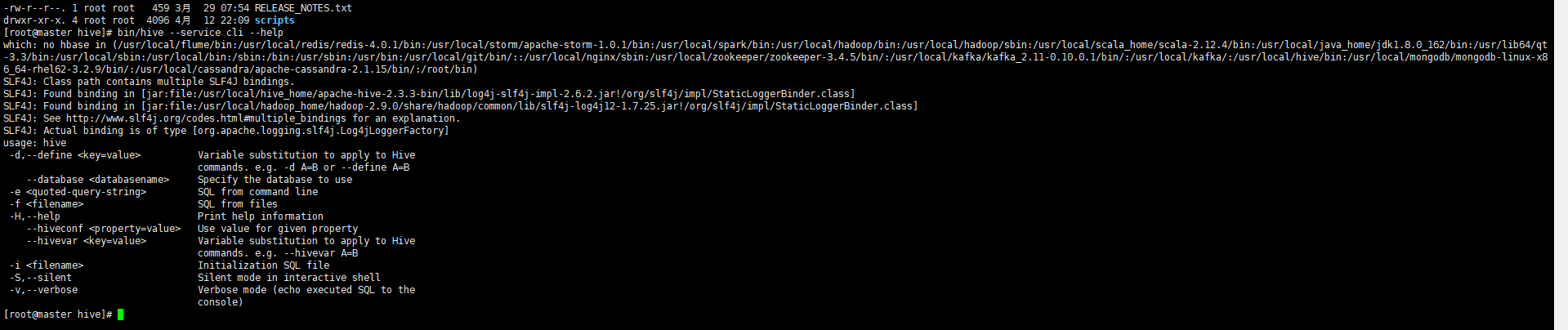

For more detailed help on the CLI service itself, run bin/hive --service cli --help.

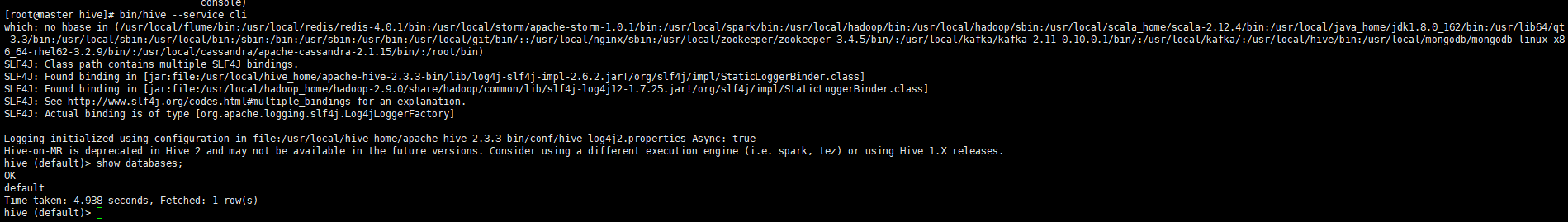

Let's try a command and list all databases with show databases:

```
[root@master hive]# bin/hive --service cli
which: no hbase in (/usr/local/flume/bin:/usr/local/redis/redis-4.0.1/bin:/usr/local/storm/apache-storm-1.0.1/bin:/usr/local/spark/bin:/usr/local/hadoop/bin:/usr/local/hadoop/sbin:/usr/local/scala_home/scala-2.12.4/bin:/usr/local/java_home/jdk1.8.0_162/bin:/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/local/git/bin/::/usr/local/nginx/sbin:/usr/local/zookeeper/zookeeper-3.4.5/bin/:/usr/local/kafka/kafka_2.11-0.10.0.1/bin/:/usr/local/kafka/:/usr/local/hive/bin:/usr/local/mongodb/mongodb-linux-x86_64-rhel62-3.2.9/bin/:/usr/local/cassandra/apache-cassandra-2.1.15/bin/:/root/bin)
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/local/hive_home/apache-hive-2.3.3-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/local/hadoop_home/hadoop-2.9.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Logging initialized using configuration in file:/usr/local/hive_home/apache-hive-2.3.3-bin/conf/hive-log4j2.properties Async: true
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
hive (default)> show databases;
OK
default
Time taken: 4.938 seconds, Fetched: 1 row(s)
```
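For scripted checks, the same query can be run non-interactively: the Hive CLI's -e option executes a quoted statement, and -S (silent mode) suppresses the OK and Time taken lines. This is a sketch, assuming bin/hive is invoked from the Hive home directory and that the log noise seen in the session output goes to stderr, which is captured separately; show_databases and parse_show_databases are hypothetical helpers.

```python
import subprocess


def parse_show_databases(stdout):
    """Turn the stdout of `hive -S -e 'show databases;'` into a list of names."""
    # In silent mode each database name is printed on its own line; skip blank lines.
    return [line.strip() for line in stdout.splitlines() if line.strip()]


def show_databases(hive_bin="bin/hive"):
    """Run SHOW DATABASES through the Hive CLI and return the database names."""
    result = subprocess.run(
        [hive_bin, "-S", "-e", "show databases;"],
        check=True, capture_output=True, text=True,
    )
    return parse_show_databases(result.stdout)
```

On a fresh installation like the one above, show_databases() should return a list containing only default.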

And that's it, all done!