I. This guide downloads and installs ClickHouse (ck) version 19.16.2.2

1. ClickHouse download URL: https://repo.yandex.ru/clickhouse/rpm/stable/x86_64/

2. Note: this version needs the following three packages; other versions may need additional ones.

clickhouse-client-19.16.2.2-2.noarch.rpm

clickhouse-common-static-19.16.2.2-2.x86_64.rpm

clickhouse-server-19.16.2.2-2.noarch.rpm

3. On CentOS 6.x, the installation may fail with a glibc version error. To fix it, upgrade glibc as follows:
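Before upgrading glibc, it may help to confirm that the installed version is really too old. The sketch below is only an illustration: the helper name `version_lt` is made up for this example, and the threshold of 2.17 is an assumption taken from the version this step installs, not a requirement stated by ClickHouse.

```shell
#!/usr/bin/env bash
# Return 0 (true) if version $1 is lower than version $2.
# Only the major.minor parts are compared, e.g. 2.12 vs 2.17.
version_lt() {
  local a_major="${1%%.*}" b_major="${2%%.*}"
  local a_minor="${1#*.}" b_minor="${2#*.}"
  a_minor="${a_minor%%.*}"
  b_minor="${b_minor%%.*}"
  if [ "$a_major" -ne "$b_major" ]; then
    [ "$a_major" -lt "$b_major" ]
  else
    [ "$a_minor" -lt "$b_minor" ]
  fi
}

# Assumption: 2.17, the version installed in this step, is sufficient.
required="2.17"
# On glibc systems getconf prints something like "glibc 2.12"; keep the second field.
installed="$(getconf GNU_LIBC_VERSION 2>/dev/null | awk '{print $2}')"

if [ -z "$installed" ]; then
  echo "could not detect the glibc version"
elif version_lt "$installed" "$required"; then
  echo "glibc $installed is older than $required; upgrade needed"
else
  echo "glibc $installed is new enough"
fi
```

If the script reports that the installed glibc is new enough, the glibc sub-steps can probably be skipped.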

1. Download glibc:

wget http://copr-be.cloud.fedoraproject.org/results/mosquito/myrepo-el6/epel-6-x86_64/glibc-2.17-55.fc20/glibc-2.17-55.el6.x86_64.rpm

wget http://copr-be.cloud.fedoraproject.org/results/mosquito/myrepo-el6/epel-6-x86_64/glibc-2.17-55.fc20/glibc-common-2.17-55.el6.x86_64.rpm

wget http://copr-be.cloud.fedoraproject.org/results/mosquito/myrepo-el6/epel-6-x86_64/glibc-2.17-55.fc20/glibc-devel-2.17-55.el6.x86_64.rpm

wget http://copr-be.cloud.fedoraproject.org/results/mosquito/myrepo-el6/epel-6-x86_64/glibc-2.17-55.fc20/glibc-headers-2.17-55.el6.x86_64.rpm

2. Run the command:

sudo rpm -Uvh glibc-2.17-55.el6.x86_64.rpm \
glibc-common-2.17-55.el6.x86_64.rpm \
glibc-devel-2.17-55.el6.x86_64.rpm \
glibc-headers-2.17-55.el6.x86_64.rpm

3. If the command above reports dependency errors, run:

sudo rpm -Uvh glibc-2.17-55.el6.x86_64.rpm \
glibc-common-2.17-55.el6.x86_64.rpm \
glibc-devel-2.17-55.el6.x86_64.rpm \
glibc-headers-2.17-55.el6.x86_64.rpm \
--force --nodeps

4. Install the ClickHouse packages: rpm -ivh *.rpm

5. In /etc/clickhouse-server/config.xml, add:

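<include_from>/etc/clickhouse-server/metrika.xml</include_from>

6. The contents of metrika.xml are roughly as follows. ZooKeeper is a single node here; for a ZooKeeper cluster, give each <node index="1"> entry its own index.

<yandex>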

<clickhouse_remote_servers>

<bip_ck_cluster>

<shard>

<internal_replication>true</internal_replication>

<replica>

<default_database>shard1</default_database>

<host>node04</host>

<port>9000</port>

<user>default</user>

<password>password</password>

</replica>

<replica>

<default_database>shard1</default_database>

<host>node05</host>

<port>9000</port>

<user>default</user>

<password>password</password>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<default_database>shard2</default_database>

<host>node04</host>

<port>9000</port>

<user>default</user>

<password>password</password>

</replica>

<replica>

<default_database>shard2</default_database>

<host>node05</host>

<port>9000</port>

<user>default</user>

<password>password</password>

</replica>

</shard>

</bip_ck_cluster>

</clickhouse_remote_servers>

<!-- ZooKeeper configuration -->

<zookeeper-servers>

<node index="1">

<host>192.168.231.135</host>

<port>2181</port>

</node>

</zookeeper-servers>

<networks>

<ip>::/0</ip>

</networks>

<!-- Compression configuration -->

<clickhouse_compression>

<case>

<min_part_size>10000000000</min_part_size>

<min_part_size_ratio>0.01</min_part_size_ratio>

<method>lz4</method> <!-- lz4 compresses faster than zstd but uses more disk space -->

</case>

</clickhouse_compression>

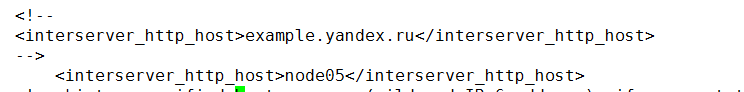

</yandex>7、users.xml中可以配置密码。config.xml中需要注意配置。第一个配置为本节点hostname即可。第二个配置为0.0.0.0即可


8) During setup, the server also failed to start because the /etc/localtime file was missing. Creating the symlink fixes it:


ln -s /usr/share/zoneinfo/US/Pacific /etc/localtime
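The same fix can be written so that it is safe to run more than once. This is a minimal sketch; the function name `ensure_localtime` is hypothetical, and the default time zone is simply the one used in this step.

```shell
#!/usr/bin/env bash
# Create the localtime symlink only if nothing exists at the link path.
#   $1: link path (default: /etc/localtime)
#   $2: zoneinfo file to point at (default: the zone used in this step)
ensure_localtime() {
  local link="${1:-/etc/localtime}"
  local zone="${2:-/usr/share/zoneinfo/US/Pacific}"
  # -L also catches a dangling symlink, which -e alone would miss.
  if [ -e "$link" ] || [ -L "$link" ]; then
    echo "exists: $link"
  else
    ln -s "$zone" "$link" && echo "created: $link -> $zone"
  fi
}
```

Calling `ensure_localtime` as root with no arguments acts on /etc/localtime; passing another zoneinfo path as the second argument selects a different time zone.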

9) Start each node with clickhouse-server --daemon (I configured two nodes here).

10) Connect with clickhouse-client -u default --password password

11) CREATE TABLE statements. The replica value ({replica}) used in each statement must be consistent with what select * from system.clusters reports.

node04

CREATE TABLE shard1.back_replica (cw_username String, cw_time DateTime, cw_age int) ENGINE = ReplicatedMergeTree('/clickhouse/tables/shard1/back_replica', '1') PARTITION BY toYYYYMM(cw_time) ORDER BY (cw_time) SETTINGS index_granularity = 8192;

CREATE TABLE shard2.back_replica (cw_username String, cw_time DateTime, cw_age int) ENGINE = ReplicatedMergeTree('/clickhouse/tables/shard2/back_replica', '2') PARTITION BY toYYYYMM(cw_time) ORDER BY (cw_time) SETTINGS index_granularity = 8192;

node05

CREATE TABLE shard1.back_replica(cw_username String, cw_time DateTime, cw_age int) ENGINE = ReplicatedMergeTree('/clickhouse/tables/shard1/back_replica', '2') PARTITION BY toYYYYMM(cw_time) ORDER BY cw_time SETTINGS index_granularity = 8192;

CREATE TABLE shard2.back_replica (`cw_username` String, `cw_time` DateTime, `cw_age` Int32) ENGINE = ReplicatedMergeTree('/clickhouse/tables/shard2/back_replica', '1') PARTITION BY toYYYYMM(cw_time) ORDER BY cw_time SETTINGS index_granularity = 8192;

12) Write to one shard on one node; a query on the other node still returns the data. This completes a highly available ClickHouse cluster with 2 shards whose nodes act as replicas of each other.
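The four CREATE TABLE statements in step 11 follow one pattern: the ZooKeeper path is the same for every replica of a shard, while the replica name differs between the two nodes. The sketch below generates the statements from that pattern. The function name `create_back_replica_sql` is hypothetical, and the column type is written as Int32 throughout, on the assumption that `int` in the node04 statements means the same type.

```shell
#!/usr/bin/env bash
# Print the CREATE TABLE statement for one shard database on one node.
#   $1: shard database (shard1 or shard2)
#   $2: replica name, unique among the replicas of that shard
create_back_replica_sql() {
  local shard="$1" replica="$2"
  printf "CREATE TABLE %s.back_replica (cw_username String, cw_time DateTime, cw_age Int32) ENGINE = ReplicatedMergeTree('/clickhouse/tables/%s/back_replica', '%s') PARTITION BY toYYYYMM(cw_time) ORDER BY cw_time SETTINGS index_granularity = 8192;\n" "$shard" "$shard" "$replica"
}

# node04 holds replica 1 of shard1 and replica 2 of shard2; node05 is the reverse.
echo "-- node04"
create_back_replica_sql shard1 1
create_back_replica_sql shard2 2
echo "-- node05"
create_back_replica_sql shard1 2
create_back_replica_sql shard2 1
```

Each generated statement can be pasted into clickhouse-client on the matching node. To repeat the check from step 12, one option is to insert a row into shard1.back_replica on node04 and then run a SELECT against the same table on node05.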