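Reading Datasets in PyTorch

Data in machine learning comes in many forms. Here we look at the most common ways of loading it.

PyTorch (through torchvision) ships with many built-in datasets, such as MNIST and CIFAR10. Obtaining and reading these is very simple: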

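    import torch
    import torch.nn as nn
    import torch.utils.data as Data
    import torchvision

    train_data = torchvision.datasets.MNIST(
        # Where the dataset is stored. This location is checked first, and the
        # data is downloaded only if it is missing. Without internet access,
        # download the files elsewhere and copy them into this directory.
        # For example, with root './mnist/' the downloaded files go under
        # ./mnist/raw/ (recent torchvision versions use ./mnist/MNIST/raw/).
        root='./mnist/',
        train=True,  # training set; pass False for the test set
        transform=torchvision.transforms.ToTensor(),  # how each sample is converted
        download=True,
    )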

That gives us the dataset. The next step is to read it:

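    BATCH_SIZE = 64  # assumed value; the original does not define it
    EPOCH = 1        # assumed value; the original does not define it

    # Wrap the dataset in the DataLoader that PyTorch provides
    train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

    for epoch in range(EPOCH):
        for step, (b_x, b_y) in enumerate(train_loader):
            # Iterate over the dataset here and run the training step
            ...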

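Getting a dataset this way is certainly convenient, but it has drawbacks:

1. Only a fixed set of datasets is available; your own data cannot be read this way.

2. There is little room to customize how the underlying data is processed.

So we need a way to read a local dataset.

First, let's see how to convert the files downloaded from the internet into image files: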

    import os

    from skimage import io
    import torchvision.datasets.mnist as mnist

    root = "data/MNIST/raw/"  # directory holding your raw MNIST files

    # Training images and labels
    train_set = (
        mnist.read_image_file(os.path.join(root, 'train-images-idx3-ubyte')),
        mnist.read_label_file(os.path.join(root, 'train-labels-idx1-ubyte')),
    )
    # Test images and labels
    test_set = (
        mnist.read_image_file(os.path.join(root, 't10k-images-idx3-ubyte')),
        mnist.read_label_file(os.path.join(root, 't10k-labels-idx1-ubyte')),
    )
    print("train set:", train_set[0].size())
    print("test set:", test_set[0].size())


    # Convert the data to image files
    def convert_to_img(train=True):
        split = 'train' if train else 'test'
        images, labels = train_set if train else test_set
        data_path = os.path.join(root, split)
        os.makedirs(data_path, exist_ok=True)
        # train.txt / test.txt holds one "<image path> <label>" pair per line
        with open(os.path.join(root, split + '.txt'), 'w') as f:
            for i, (img, label) in enumerate(zip(images, labels)):
                # Build the image path
                img_path = os.path.join(data_path, str(i) + '.jpg')
                # Convert the tensor to numpy; the .jpg extension selects the image format
                io.imsave(img_path, img.numpy())
                # int(label) turns the 0-dim tensor into a plain integer
                f.write(img_path + ' ' + str(int(label)) + '\n')


    convert_to_img(True)
    convert_to_img(False)

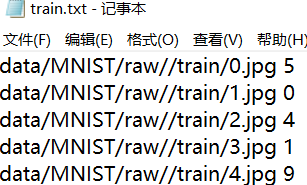

After running this, the result is:

train.txt stores each image's path and label.

The image directory, in turn, contains images like those in the figure.

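Now that the data has been converted to images, let's see how to read them back.

The key to reading data is to subclass `Dataset` from `torch.utils.data` and override three methods: `__init__`, `__getitem__`, and `__len__`. They initialize the dataset, return one sample by index during iteration, and return the number of samples, respectively.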
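To make the role of the three methods concrete, here is a minimal pure-Python sketch. It is not PyTorch's implementation: `ToyDataset` and `iterate_batches` are hypothetical names, and `iterate_batches` only imitates, very roughly, how a loader uses `__len__` to build an index list and `__getitem__` to fetch each sample.

```python
import random


class ToyDataset:
    """Hypothetical map-style dataset: indexable, with a known length."""

    def __init__(self, samples, labels):
        # __init__: store (or locate) the data
        assert len(samples) == len(labels)
        self.samples = samples
        self.labels = labels

    def __getitem__(self, index):
        # __getitem__: return one (sample, label) pair
        return self.samples[index], self.labels[index]

    def __len__(self):
        # __len__: number of samples
        return len(self.samples)


def iterate_batches(dataset, batch_size, shuffle=False, seed=None):
    """Rough imitation of a loader: yield (samples, labels) lists per batch."""
    indices = list(range(len(dataset)))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        batch = [dataset[i] for i in indices[start:start + batch_size]]
        xs, ys = zip(*batch)
        yield list(xs), list(ys)


toy = ToyDataset(samples=[[0.0], [0.1], [0.2], [0.3], [0.4]],
                 labels=[0, 1, 0, 1, 0])
batches = list(iterate_batches(toy, batch_size=2))
```

With five samples and a batch size of 2, the last batch holds a single sample, which mirrors the default behaviour of keeping the incomplete final batch.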

We will use the image dataset created above to train a CNN.

First, write our own dataset-loading class:

    import torch
    from PIL import Image
    from torch.utils import data

    import FileLoader  # the author's own helper module (not shown in this post)


    # How a single image file is loaded
    def default_loader(path):
        return Image.open(path)


    # Subclass data.Dataset and override its methods
    class MyImageFloder(data.Dataset):
        def __init__(self, FileName, transform=None, target_transform=None, loader=default_loader):
            # Get the image paths and their labels
            FilePlaces, LabelSet = FileLoader.filePlace_loader(FileName)
            # Labels are read in as str, so convert them to a long tensor
            LabelSet = torch.tensor([int(i) for i in LabelSet], dtype=torch.long)

            self.imgs_place = FilePlaces
            self.LabelSet = LabelSet
            self.transform = transform
            self.target_transform = target_transform
            self.loader = loader

        # Read one sample, using the loader defined above
        def __getitem__(self, item):
            img_place = self.imgs_place[item]
            label = self.LabelSet[item]
            img = self.loader(img_place)
            if self.transform is not None:
                img = self.transform(img)
            if self.target_transform is not None:
                label = self.target_transform(label)
            return img, label

        def __len__(self):
            return len(self.imgs_place)
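The `FileLoader` module is not shown in the post, so its real contents are unknown. As a sketch only, a `filePlace_loader` compatible with the class above could parse the `train.txt` format written earlier (one `<image path> <label>` pair per line) and return the labels as strings, which is what the conversion step in `__init__` expects. The function body and the demo paths below are assumptions, not the author's code.

```python
import os
import tempfile


def filePlace_loader(filename):
    """Hypothetical helper: parse lines of the form '<image path> <label>'.

    Returns (paths, labels), with labels left as str.
    """
    file_places, label_set = [], []
    with open(filename, 'r') as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            # Split on the last space so paths containing spaces still work
            path, label = line.rsplit(' ', 1)
            file_places.append(path)
            label_set.append(label)
    return file_places, label_set


# Demo on a throwaway index file with made-up entries
with tempfile.TemporaryDirectory() as tmp:
    index_file = os.path.join(tmp, 'train.txt')
    with open(index_file, 'w') as f:
        f.write('data/MNIST/raw/train/0.jpg 5\n')
        f.write('data/MNIST/raw/train/1.jpg 0\n')
    paths, labels = filePlace_loader(index_file)
```

Saved as `FileLoader.py` next to the dataset class, a helper of this shape would satisfy the `FileLoader.filePlace_loader(FileName)` call.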

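The key point here is to check carefully the format, dimensions, and dtype of what you read in. Errors are common in practice, and the code has to be adjusted according to the error messages.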

    import torch
    from torch import nn, optim
    import torch.nn.functional as F
    from torch.utils.data import DataLoader
    from torchvision import transforms
    import matplotlib.pyplot as plt

    import MyImageLoader  # the module containing the MyImageFloder class

    batch_size = 64
    mytransform = transforms.Compose([transforms.ToTensor()])

    train_loader = DataLoader(
        MyImageLoader.MyImageFloder(FileName='data/MNIST/raw/train.txt', transform=mytransform),
        batch_size=batch_size, shuffle=True)
    test_loader = DataLoader(
        MyImageLoader.MyImageFloder(FileName='data/MNIST/raw/test.txt', transform=mytransform),
        batch_size=batch_size, shuffle=True)


    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            # 1 input channel, 10 output channels, 5*5 kernel
            self.conv1 = nn.Conv2d(in_channels=1, out_channels=10, kernel_size=5)
            self.conv2 = nn.Conv2d(10, 20, 5)
            self.conv3 = nn.Conv2d(20, 40, 3)
            self.mp = nn.MaxPool2d(2)
            # fully connected layer: (in_features, out_features)
            self.fc = nn.Linear(40, 10)

        def forward(self, x):
            # x is a 4-D tensor (batch, channels, height, width); x.size(0) is the batch size
            in_size = x.size(0)
            # x: 64*1*28*28
            x = F.relu(self.mp(self.conv1(x)))  # x: 64*10*12*12, since (28-4)/2 = 12
            x = F.relu(self.mp(self.conv2(x)))  # x: 64*20*4*4
            x = F.relu(self.mp(self.conv3(x)))  # x: 64*40*1*1
            x = x.view(in_size, -1)  # flatten (reshape) the tensor, x: 64*40
            x = self.fc(x)  # x: 64*10
            return F.log_softmax(x, dim=1)  # 64*10


    model = Net()
    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)
    losses = []
    test_losses = []
    test_acces = []


    def train(epoch):
        loss = 0
        # batch_idx is the index supplied by enumerate(), starting at 0
        for batch_idx, (data, target) in enumerate(train_loader):
            # data.size(): [64, 1, 28, 28], target.size(): [64]
            output = model(data)  # output: 64*10
            loss = F.nll_loss(output, target)
            if batch_idx % 200 == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item()))
            optimizer.zero_grad()  # reset the gradients of all parameters
            loss.backward()        # backpropagate to compute gradients
            optimizer.step()       # update the parameters by gradient descent
        # Record the loss once per completed epoch
        losses.append(loss.item())


    def test():
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_loader:
                output = model(data)
                # sum up batch loss
                test_loss += F.nll_loss(output, target, reduction='sum').item()
                # get the index of the max log-probability
                pred = output.max(1, keepdim=True)[1]
                correct += pred.eq(target.view_as(pred)).sum().item()

        test_loss /= len(test_loader.dataset)
        print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_loader.dataset),
            100. * correct / len(test_loader.dataset)))
        test_losses.append(test_loss)
        print(correct)
        test_acces.append(correct / len(test_loader.dataset))


    for epoch in range(1, 5):
        train(epoch)
        test()

    plt.figure(22)

    x_loss = list(range(len(losses)))
    x_acc = list(range(len(test_acces)))

    plt.subplot(221)
    plt.title('train loss')
    plt.plot(x_loss, losses)

    plt.subplot(222)
    plt.title('test loss')
    plt.plot(x_loss, test_losses)

    plt.subplot(212)
    plt.plot(x_acc, test_acces)
    plt.title('test acc')
    plt.show()

From here on, standard CNN training code can be reused as-is.
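Since the text stresses checking dimensions, the feature-map sizes in the network can be verified by hand before running anything. The sketch below applies the standard output-size formula for convolution and pooling with no padding and no dilation, which is what the layers in the post use; `conv_out` and `pool_out` are illustrative helper names, not PyTorch functions.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Spatial output size of max pooling whose stride equals its kernel size."""
    return (size - kernel) // kernel + 1


size = 28      # MNIST images are 28*28
channels = 1
trace = []
# (out_channels, kernel_size) of conv1, conv2, conv3, each followed by MaxPool2d(2)
for out_channels, kernel in [(10, 5), (20, 5), (40, 3)]:
    size = pool_out(conv_out(size, kernel), 2)
    channels = out_channels
    trace.append((channels, size, size))

flat_features = channels * size * size
print(trace)          # per-layer (channels, height, width)
print(flat_features)  # must match in_features of the final nn.Linear
```

The trace comes out as 10*12*12, 20*4*4, and 40*1*1, so the flattened vector has 40 features, which is why the fully connected layer is `nn.Linear(40, 10)`.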

For a better approach to this problem, see: https://blog.csdn.net/qq_36852276/article/details/94588656