OpenCV Image Stitching: Multi-Image Stitching with Stitcher and Two-Image Stitching with SURF

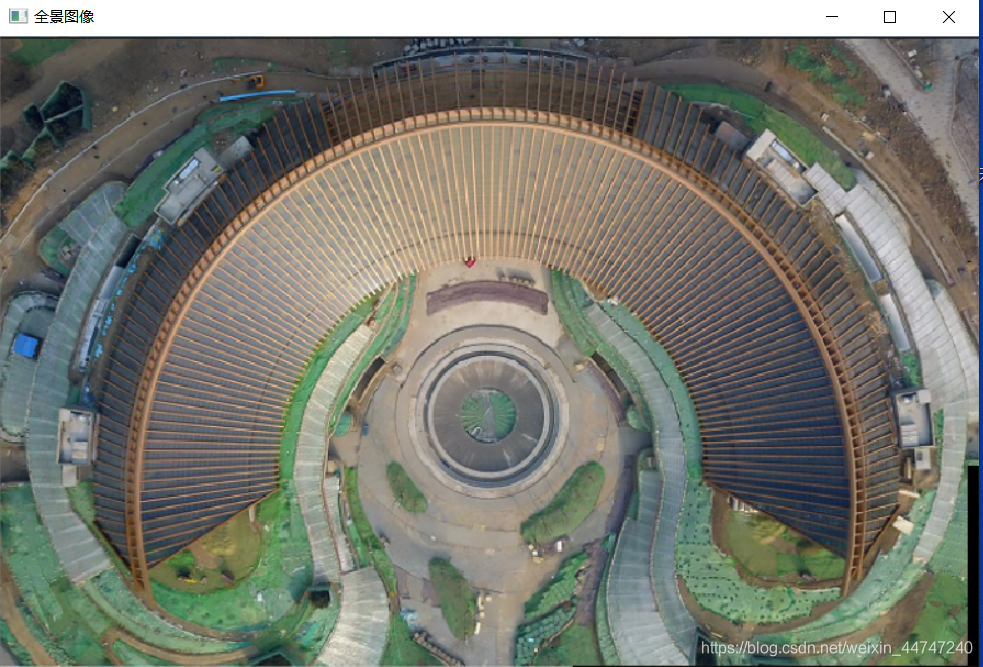

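One approach to image stitching is to call OpenCV's Stitcher class. The call itself is simple, but the algorithm behind it is complex, as its .cpp source shows: it repeatedly matches and re-runs until it produces a result. In my experiments it was not very robust: it often failed to stitch at all, it was slow, and its error rate was high. Here it is used to stitch four images, and the result is quite good.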

The code is as follows:

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/stitching.hpp>

using namespace std;

using namespace cv;

vector<Mat> imgs;

int main(int argc, char * argv[])

{

imgs.push_back(imread("C:/Users/14587/Desktop/图片素材测试/裁剪2.jpg"));

imgs.push_back(imread("C:/Users/14587/Desktop/图片素材测试/裁剪1.jpg"));

imgs.push_back(imread("C:/Users/14587/Desktop/图片素材测试/裁剪4.jpg"));

imgs.push_back(imread("C:/Users/14587/Desktop/图片素材测试/裁剪3.jpg"));

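// Stitcher::createDefault is the OpenCV 2.4/3.x API (true = try to use the GPU); OpenCV 4 replaces it with Stitcher::create()

Stitcher stitcher = Stitcher::createDefault(true);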

// Stitch the images with stitch()

Mat temp;

Stitcher::Status status = stitcher.stitch(imgs, temp);

if (status != Stitcher::OK)

{

cout << "Cannot stitch, status code: " << int(status) << endl;

waitKey(0);

return -1;

}

Mat pano2 = temp.clone();

// Display the resulting panorama

namedWindow("Panorama", WINDOW_NORMAL);

imshow("Panorama", temp);

waitKey(0); // wait for a single key press, then exit

return 0;

}
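When stitching fails, the listing above prints only a number. As a small sketch, the helper below turns that number into a readable message. It is plain C++ with no OpenCV dependency. The name `describeStitchStatus` is hypothetical, and the numeric values assume the order of the `Stitcher::Status` enum (`OK`, `ERR_NEED_MORE_IMGS`, `ERR_HOMOGRAPHY_EST_FAIL`, `ERR_CAMERA_PARAMS_ADJUST_FAIL`), so check them against the headers of your OpenCV version.

```cpp
#include <string>

// Hypothetical helper: translate the integer printed by the listing above
// into a short message. The values assume the Stitcher::Status enum order.
std::string describeStitchStatus(int status)
{
    switch (status) {
    case 0:  return "OK";
    case 1:  return "need more images";
    case 2:  return "homography estimation failed";
    case 3:  return "camera parameter adjustment failed";
    default: return "unknown status";
    }
}
```

In the failure branch it could be called as `cout << describeStitchStatus(int(status)) << endl;`. In my experience the "need more images" case usually means the inputs do not overlap enough for matching.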

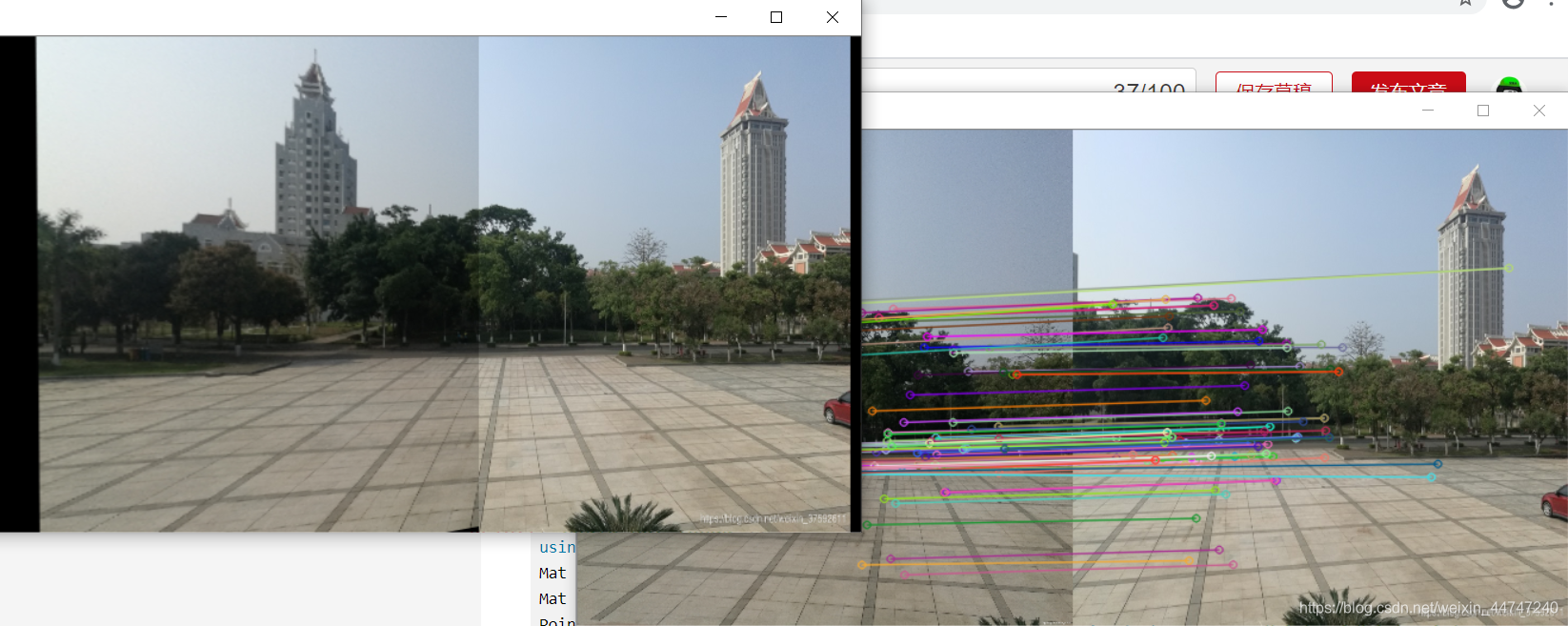

The code for SURF-based stitching is as follows:

#include<opencv2/opencv.hpp>

#include<opencv2/highgui/highgui.hpp>

#include<opencv2/xfeatures2d.hpp>

#include<iostream>

#include<math.h>

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

Mat srcimage_0, srcimage_1, dstimage;

Mat srcimage0, srcimage1;

Point2f getTransformPoint(const Point2f originalPoint, const Mat &transformMaxtri);

// SURF feature extraction and brute-force matching

int main() {

srcimage_0 = imread("C:\\Users\\14587\\Desktop\\1.jpg");

srcimage_1 = imread("C:\\Users\\14587\\Desktop\\2.jpg");

if (!srcimage_0.data) {

printf("could not load");

return -1;

}

if (!srcimage_1.data) {

printf("could not load");

return -1;

}

//cvtColor(srcimage0, srcimage_0, CV_RGB2GRAY);

//cvtColor(srcimage1, srcimage_1, CV_RGB2GRAY);

//resize(srcimage_0, srcimage_0, Size(srcimage_0.cols /4, srcimage_0.rows / 4));

//resize(srcimage_1, srcimage_1, Size(srcimage_1.cols /4, srcimage_1.rows / 4));

resize(srcimage_0, srcimage_0, Size(416, 416));

resize(srcimage_1, srcimage_1, Size(416, 416));

vector<KeyPoint> keypoint0, keypoint1;

// Detect feature points

Mat result0, result1,result;

BFMatcher matcher;

int minhessian = 800;

Ptr<SURF> detector = SURF::create(minhessian);

detector->detect(srcimage_0, keypoint0);

detector->detect(srcimage_1, keypoint1);

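// Note: detectAndCompute below detects keypoints again with SURF's default threshold, overwriting the keypoints found above

Ptr<Feature2D> f2d = xfeatures2d::SURF::create();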

f2d->detectAndCompute(srcimage_0,Mat(), keypoint0,result0);

f2d->detectAndCompute(srcimage_1, Mat(), keypoint1, result1);

vector<DMatch> matches;

vector<DMatch> GoodMatchePoints;

matcher.match(result0, result1, matches,Mat());

sort(matches.begin(), matches.end()); // sort by ascending distance (best matches first)

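// Keep the 100 best matches

// (adjust this count as needed; it is clamped so the loop below never reads past the end of matches)

int goodmatch = std::min(100, (int)matches.size());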

vector< DMatch > good_matches;

vector<Point2f> imagePoints0, imagePoints1;

for (int i = 0; i < goodmatch; i++) {

good_matches.push_back(matches[i]);

imagePoints0.push_back(keypoint0[good_matches[i].queryIdx].pt);

imagePoints1.push_back(keypoint1[good_matches[i].trainIdx].pt);

}

// Draw feature points

//drawKeypoints(srcimage_0, keypoint0, srcimage_0, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

//drawKeypoints(srcimage_1, keypoint1, srcimage_1, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

drawMatches(srcimage_0, keypoint0, srcimage_1, keypoint1, good_matches, result, Scalar::all(-1), Scalar::all(-1),

vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS); // draw the matched points

imshow("Matches", result);

Mat H = findHomography(imagePoints0, imagePoints1, RANSAC, 3, noArray()); // homography estimated with RANSAC

Mat adjustMat = (Mat_<double>(3, 3) << 1.0, 0, srcimage_0.cols, 0, 1.0, 0, 0, 0, 1.0);

Mat adjustHomo = adjustMat * H;

Point2f originalLinkPoint, targetLinkPoint, basedImagePoint;

originalLinkPoint = keypoint0[good_matches[0].queryIdx].pt;

targetLinkPoint = getTransformPoint(originalLinkPoint, adjustHomo);

basedImagePoint = keypoint1[good_matches[0].trainIdx].pt;

Mat imageTransform1;

// Perspective transform

warpPerspective(srcimage_0, imageTransform1, adjustMat*H,Size(srcimage_1.cols + srcimage_0.cols + 10, srcimage_1.rows));

Mat ROIMat = srcimage_1(Rect(Point(basedImagePoint.x, 0),Point(srcimage_1.cols, srcimage_1.rows)));

ROIMat.copyTo(Mat(imageTransform1, Rect(targetLinkPoint.x, 0, srcimage_1.cols - basedImagePoint.x + 1, srcimage_1.rows)));

imshow("Stitching result", imageTransform1);

//putText(result, "No Entry Straight Ahead", scene_corners[0] + Point2f((float)srcimage_0.cols, 0),FONT_HERSHEY_COMPLEX,1.0,Scalar(0,0,255));

waitKey(0);

return 0;

}

Point2f getTransformPoint(const Point2f originalPoint, const Mat &transformMaxtri)

{

Mat originelP, targetP;

originelP = (Mat_<double>(3, 1) << originalPoint.x, originalPoint.y, 1.0);

targetP = transformMaxtri * originelP;

float x = targetP.at<double>(0, 0) / targetP.at<double>(2, 0);

float y = targetP.at<double>(1, 0) / targetP.at<double>(2, 0);

return Point2f(x, y);

}
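To make the role of `getTransformPoint` and of `adjustMat * H` easier to see, here is a minimal sketch of the same arithmetic without OpenCV. The names `Mat3`, `Pt`, `multiply`, and `transformPoint` are hypothetical and are not part of the listing above. A point (x, y) is lifted to (x, y, 1), multiplied by the 3x3 matrix, and the result is divided by its third component.

```cpp
#include <array>

// 3x3 matrix of doubles, row-major (stand-in for a 3x3 cv::Mat).
using Mat3 = std::array<std::array<double, 3>, 3>;

struct Pt { double x; double y; };

// Matrix product a * b, the same composition as adjustMat * H.
Mat3 multiply(const Mat3& a, const Mat3& b)
{
    Mat3 c{};  // value-initialized to all zeros
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                c[i][j] += a[i][k] * b[k][j];
    return c;
}

// Apply a homography to a point: multiply (x, y, 1) by h, then divide
// by the third (homogeneous) coordinate w.
Pt transformPoint(const Mat3& h, Pt p)
{
    double x = h[0][0] * p.x + h[0][1] * p.y + h[0][2];
    double y = h[1][0] * p.x + h[1][1] * p.y + h[1][2];
    double w = h[2][0] * p.x + h[2][1] * p.y + h[2][2];
    return { x / w, y / w };
}
```

For example, with an identity homography and a translation matrix that shifts x by 416 (the resized image width used above), the point (10, 20) maps to (426, 20). That is the shift `adjustMat` applies to move the warped image one image width to the right on the output canvas.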

The test images are attached below: