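A highly available MFS (MooseFS) deployment is built from four parts: pacemaker, corosync, vmfence, and mfsmaster.

Why make MFS highly available?

Because there is only one master. If it fails, the whole distributed cluster is affected.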

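1. What is Pacemaker?

Pacemaker is a cluster resource manager.

It uses the messaging and membership capabilities provided by the cluster infrastructure (OpenAIS, heartbeat, or corosync) to detect and recover from failures at the node or resource level, maximizing the availability of cluster services (also called resources).

It supports clusters of practically any size and comes with a powerful dependency model that lets administrators express the relationships between cluster resources accurately (including ordering and location).

Practically anything that can be scripted can be managed as part of a Pacemaker cluster.

Importantly, Pacemaker is not a fork of heartbeat, although many people seem to have that misconception.

Pacemaker is the continuation of the CRM project (also known as the V2 resource manager), which was originally developed for heartbeat but has since become an independent project.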

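2. What is Corosync?

Corosync is part of the cluster management suite and is usually combined with a resource manager. A simple configuration file defines how messages are passed, including the transport method and protocol. It is relatively new software, released in 2008, but it is not new in the strict sense: the OpenAIS project, started in 2002, grew too large and split into two sub-projects, and the one that carries HA heartbeat messages is Corosync. About 60% of its code comes from OpenAIS. Corosync can provide complete HA functionality, but more complex features still require OpenAIS. Corosync is the direction things are heading, and new projects generally adopt it. hb_gui provides good HA management with a graphical interface. Other graphical tools include luci+ricci from the RHCS suite and the Java-based LCMC cluster management tool.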
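As a rough illustration of the "simple configuration file" mentioned above, the sketch below prints a minimal two-node `corosync.conf` in Corosync 2.x syntax. The cluster name `mycluster` and the node names `server1` and `server4` are the ones used later in this post; the function name `print_corosync_conf` and the choice of the `udpu` transport are assumptions made for illustration. In this walkthrough the file is generated by `pcs cluster setup`, so it does not need to be written by hand.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal two-node corosync.conf to stdout.
# Usage: print_corosync_conf CLUSTER_NAME NODE1 NODE2
print_corosync_conf() {
    cluster_name=$1
    node1=$2
    node2=$3
    cat <<EOF
totem {
    version: 2
    cluster_name: ${cluster_name}
    transport: udpu
}

nodelist {
    node {
        ring0_addr: ${node1}
        nodeid: 1
    }
    node {
        ring0_addr: ${node2}
        nodeid: 2
    }
}

quorum {
    provider: corosync_votequorum
    two_node: 1
}

logging {
    to_syslog: yes
}
EOF
}

# Print to the terminal for review; nothing is written under /etc.
print_corosync_conf mycluster server1 server4
```

The `totem` section selects the transport, `nodelist` names the members, and `two_node: 1` tells the quorum provider that a two-node cluster may keep running when one node is lost.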

I. Setting up the lab environment

| Host (role) | IP address |
| --- | --- |
| server1 (mfsmaster + corosync + pacemaker) | 172.25.58.1 |
| server2 (mfschunkserver) | 172.25.58.2 |
| server3 (mfschunkserver) | 172.25.58.3 |
| server4 (mfsmaster + corosync + pacemaker) | 172.25.58.4 |
| Physical host (mfsclient) | 172.25.58.250 |

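server1, server2, and server3 were already set up as the master and two chunkservers in the previous post, and the physical host was already set up as the client.

1. Configure the local software repository on server1 and server4, then install the following four packages: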

moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

2. Start moosefs-master on server1 and server4

[root@server1 ~]# systemctl start moosefs-master

[root@server4 ~]# vim /usr/lib/systemd/system/moosefs-master.service

ExecStart=/usr/sbin/mfsmaster -a ## -a: automatically restore metadata from the changelogs on startup

[root@server4 ~]# systemctl daemon-reload

[root@server4 ~]# systemctl start moosefs-master

3. Add the pacemaker and corosync packages to the local software repository, then install pacemaker and corosync on server1 and server4

[root@foundation58 mfs]# cp -r /home/kiosk/Desktop/pack-install/mfs/pacemaker/* .

[root@foundation58 mfs]# createrepo .

Spawning worker 0 with 22 pkgs

Spawning worker 1 with 22 pkgs

Spawning worker 2 with 21 pkgs

Spawning worker 3 with 21 pkgs

Workers Finished

Saving Primary metadata

Saving file lists metadata

Saving other metadata

Generating sqlite DBs

Sqlite DBs complete

[root@server1 mfs]# yum repolist

Loaded plugins: product-id, search-disabled-repos, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

mfs | 2.9 kB 00:00

rhel7.3 | 4.1 kB 00:00

(1/3): mfs/primary_db | 103 kB 00:00

(2/3): rhel7.3/group_gz | 136 kB 00:00

(3/3): rhel7.3/primary_db | 3.9 MB 00:00

repo id repo name status

mfs mfs 86

rhel7.3 rhel7.3 4,751

repolist: 4,837

[root@server1 mfs]# yum install pacemaker corosync -y

[root@server4 mfs]# yum install pacemaker corosync -y

5. Set up passwordless SSH between server1 and server4

[root@server1 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

d7:92:3e:97:0a:d9:30:62:d5:00:52:b1:e6:23:ef:75 root@server1

The key's randomart image is:

+--[ RSA 2048]----+

| ..+o. |

| . . o |

| o . . |

| o . o |

| . = S + . |

| + o B . . |

| . + E o |

| . . o + |

| . . |

+-----------------+

[root@server1 ~]# ssh-copy-id server4

[root@server1 ~]# ssh-copy-id server1

6. Install the resource management tool pcs

[root@server1 ~]# yum install -y pcs

[root@server4 ~]# yum install -y pcs

[root@server1 ~]# systemctl start pcsd

[root@server4 ~]# systemctl start pcsd

[root@server1 ~]# systemctl enable pcsd

[root@server4 ~]# systemctl enable pcsd

[root@server1 ~]# passwd hacluster

[root@server4 ~]# passwd hacluster

7. Create the cluster and start it

[root@server1 ~]# pcs cluster auth server1 server4

[root@server1 ~]# pcs cluster setup --name mycluster server1 server4

[root@server1 ~]# pcs cluster start --all

8. Check the status

[root@server1 ~]# corosync-cfgtool -s

Printing ring status.

Local node ID 1

RING ID 0

id = 172.25.58.1

status = ring 0 active with no faults

[root@server1 ~]# pcs status corosync

Membership information

----------------------

Nodeid Votes Name

1 1 server1 (local)

2 1 server4
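The membership table above can also be checked from a script. The sketch below counts the member rows in `pcs status corosync`-style output read from standard input. The function name `count_members` is hypothetical, and the parsing assumes the three-column `Nodeid Votes Name` layout shown above.

```shell
#!/bin/sh
# Hypothetical helper: count member rows (numeric Nodeid and Votes columns)
# in "pcs status corosync" output supplied on stdin.
count_members() {
    awk '$1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ { n++ } END { print n + 0 }'
}

# Sample input based on the output above. On a cluster node one would run:
#   pcs status corosync | count_members
sample='Membership information
----------------------
    Nodeid      Votes Name
         1          1 server1 (local)
         2          1 server4'

printf '%s\n' "$sample" | count_members
```

For the sample input this prints 2, one row per node. A monitoring script could compare that number with the expected cluster size.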

9. Resolve the error

[root@server1 ~]# crm_verify -L -V

error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

[root@server1 ~]# pcs property set stonith-enabled=false

[root@server1 ~]# crm_verify -L -V

10. Create resources

[root@server1 ~]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.58.100 cidr_netmask=32 op monitor interval=30s

[root@server1 ~]# pcs resource show

vip (ocf::heartbeat:IPaddr2): Started server1

[root@server1 ~]# pcs status

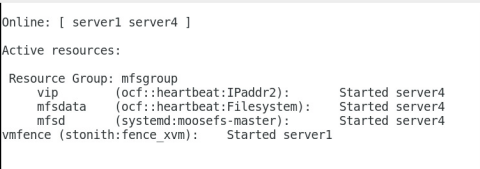

[root@server4 ~]# crm_mon

11. Test high availability

- Check that the virtual IP is currently on server1

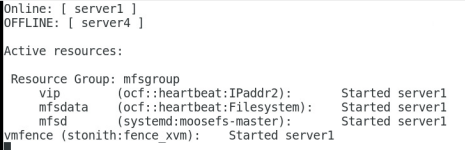

[root@server1 ~]# ip addr

[root@server1 ~]# pcs cluster stop server1

[root@server4 ~]# ip addr

Running crm_mon shows in the monitoring output that only server4 is online.

- Start server1 again; the VIP does not move back

[root@server1 ~]# pcs cluster start server1
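To confirm from a script which node currently holds the virtual IP, one can search the output of `ip -o addr` for the address. The following is a minimal sketch: the function name `has_vip` is hypothetical, the address and interface name in the sample are placeholders, and input is read from standard input so the function can be tried on captured output.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given IPv4 address appears as an
# "inet ADDR/PREFIX" entry in "ip -o addr" output read from stdin.
has_vip() {
    vip=$1
    awk -v vip="$vip" '
        {
            for (i = 1; i < NF; i++) {
                if ($i == "inet") {
                    split($(i + 1), parts, "/")
                    if (parts[1] == vip) { found = 1 }
                }
            }
        }
        END { exit(found ? 0 : 1) }
    '
}

# Sample line in the format printed by "ip -o addr" (placeholder values).
# On a node one would run:  ip -o addr | has_vip 172.25.58.100
sample='2: eth0    inet 172.25.58.100/32 scope global eth0\       valid_lft forever preferred_lft forever'

if printf '%s\n' "$sample" | has_vip 172.25.58.100; then
    echo "VIP is on this node"
else
    echo "VIP is not on this node"
fi
```

The address is compared as a whole field rather than as a substring, so a query for `172.25.58.10` does not match `172.25.58.100`.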

Sharing data

1. Clean up the previous environment

[root@foundation14 ~]# umount /mnt/mfsmeta

[root@server1 ~]# systemctl stop moosefs-master

[root@server2 chunk1]# systemctl stop moosefs-chunkserver

[root@server3 3.0.103]# systemctl stop moosefs-chunkserver

2. Add the following name resolution entries on all nodes (the physical host and server1 through server4)

[root@foundation14 ~]# vim /etc/hosts

[root@foundation14 ~]# cat /etc/hosts

172.25.58.100 mfsmaster

172.25.58.1 server1

3. Add a virtual disk to server2

[root@server2 chunk1]# fdisk -l

Disk /dev/vda: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

4. Install targetcli and configure it

[root@server2 ~]# yum install -y targetcli

[root@server2 ~]# targetcli

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.fb41

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls

o- / ...................................................................................... [...]

o- backstores ........................................................................... [...]

| o- block ............................................................... [Storage Objects: 0]

| o- fileio .............................................................. [Storage Objects: 0]

| o- pscsi ............................................................... [Storage Objects: 0]

| o- ramdisk ............................................................. [Storage Objects: 0]

o- iscsi ......................................................................... [Targets: 0]

o- loopback ...................................................................... [Targets: 0]

/> cd backstores/block

/backstores/block> create my_disk1 /dev/vda

Created block storage object my_disk1 using /dev/vda.

/iscsi> create iqn.2019-04.com.example:server2

/iscsi/iqn.20...er2/tpg1/luns> create /backstores/block/my_disk1

/iscsi/iqn.20...er2/tpg1/acls> create iqn.2019-04.com.example:client

5. Install the iSCSI initiator on server1

[root@server1 ~]# yum install -y iscsi-*

[root@server1 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-04.com.example:client

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.58.2

172.25.58.2:3260,1 iqn.2019-04.com.example:server2

[root@server1 ~]# iscsiadm -m node -l

## The disk is now shared successfully

[root@server1 ~]# fdisk -l

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

## Create a partition and format it

[root@server1 ~]# fdisk /dev/sdb

[root@server1 ~]# mkfs.xfs /dev/sdb1

## Mount it

[root@server1 ~]# mount /dev/sdb1 /mnt/

[root@server1 ~]# cd /var/lib/mfs/

[root@server1 mfs]# ls

changelog.10.mfs changelog.6.mfs changelog.9.mfs metadata.mfs.back.1

changelog.1.mfs changelog.7.mfs metadata.crc metadata.mfs.empty

changelog.3.mfs changelog.8.mfs metadata.mfs stats.mfs

[root@server1 mfs]# cp -p * /mnt

[root@server1 mfs]# cd /mnt

[root@server1 mnt]# ls

changelog.10.mfs changelog.6.mfs changelog.9.mfs metadata.mfs.back.1

changelog.1.mfs changelog.7.mfs metadata.crc metadata.mfs.empty

changelog.3.mfs changelog.8.mfs metadata.mfs stats.mfs

[root@server1 mnt]# chown mfs.mfs /mnt/

[root@server1 mnt]# cd

[root@server1 ~]# umount /mnt

[root@server1 ~]# mount /dev/sdb1 /var/lib/mfs

[root@server1 ~]# systemctl start moosefs-master

[root@server1 ~]# systemctl stop moosefs-master

6. Do the same on server4

[root@server4 ~]# yum install -y iscsi-*

[root@server4 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-04.com.example:client

[root@server4 ~]# iscsiadm -m discovery -t st -p 172.25.58.2

172.25.58.2:3260,1 iqn.2019-04.com.example:server2

[root@server4 ~]# iscsiadm -m node -l

[root@server4 ~]# mount /dev/sdb1 /var/lib/mfs

[root@server4 ~]# systemctl start moosefs-master

[root@server4 ~]# systemctl stop moosefs-master

7. Create the resources for the shared filesystem and the master service, and group them with the VIP

[root@server1 ~]# pcs resource create mfsdata ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/var/lib/mfs fstype=xfs op monitor interval=30s

[root@server1 ~]# pcs resource create mfsd systemd:moosefs-master op monitor interval=1min

[root@server1 ~]# pcs resource group add mfsgroup vip mfsdata mfsd

8. Test: stop server4, and server1 takes over

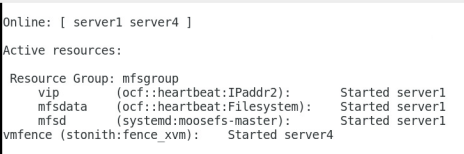

[root@server1 ~]# pcs cluster stop server4

[root@server1 ~]# pcs cluster start server4

At this point, even after server4 recovers, the VIP does not move back.

Configuring fencing

1. Install fence-virt on server1 and server4

[root@server1 mfs]# yum install -y fence-virt

[root@server4 mfs]# yum install -y fence-virt

[root@server1 mfs]# pcs stonith list

fence_virt - Fence agent for virtual machines

fence_xvm - Fence agent for virtual machines

2. Install fence-virtd on the client (the physical host) and configure it

[root@foundation14 ~]# yum install -y fence-virtd

[root@foundation14 ~]# mkdir /etc/cluster

[root@foundation14 ~]# cd /etc/cluster

[root@foundation14 cluster]# fence_virtd -c

Interface [virbr0]: br0 ## Change the interface to br0; press Enter to accept the default for every other prompt

[root@foundation14 cluster]# dd if=/dev/urandom of=fence_xvm.key bs=128 count=1

1+0 records in

1+0 records out

128 bytes (128 B) copied, 0.000205145 s, 624 kB/s

[root@foundation14 cluster]# ls

fence_xvm.key

[root@foundation14 cluster]# scp fence_xvm.key [email protected]:

[root@foundation14 cluster]# scp fence_xvm.key [email protected]:

[root@server1 mfs]# mkdir /etc/cluster

[root@server4 ~]# mkdir /etc/cluster

[root@foundation14 cluster]# systemctl start fence_virtd

[root@foundation14 images]# netstat -anulp | grep :1229 ## The default port 1229 is open

udp 0 0 0.0.0.0:1229 0.0.0.0:* 14972/fence_virtd

3. Continue the configuration on server1

[root@server1 mfs]# cd /etc/cluster

[root@server1 cluster]# pcs stonith create vmfence fence_xvm pcmk_host_map="server1:server1;server4:server4" op monitor interval=1min

[root@server1 cluster]# pcs property set stonith-enabled=true

[root@server1 cluster]# crm_verify -L -V ## No errors reported

[root@server1 cluster]# fence_xvm -H server4

[root@server4 ~]# pcs cluster start server4
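The `pcmk_host_map` value passed to `pcs stonith create` above pairs each cluster node name with the name the fence device uses for that machine (here, the virtual machine name known to the physical host), in the form `node:domain;node:domain`. In this setup the two names happen to be identical. The sketch below assembles such a string from individual pairs, which may help when the names differ; the function name `build_host_map` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: join "node:domain" pairs with ";" to form a
# pcmk_host_map value. Rejects arguments that are not of that form.
build_host_map() {
    map=""
    for pair in "$@"; do
        case $pair in
            ?*:?*) ;;                                  # looks like node:domain
            *) echo "bad pair: $pair" >&2; return 1 ;;
        esac
        map="${map:+$map;}$pair"
    done
    printf '%s\n' "$map"
}

# The mapping used in this post:
build_host_map server1:server1 server4:server4
```

The result can then be quoted into the command, for example `pcmk_host_map="$(build_host_map server1:server1 server4:server4)"`.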

- Crash server1: server1 is fenced and reboots automatically, and server4 takes over

[root@server1 ~]# echo c > /proc/sysrq-trigger