The problem:

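One blogger wrote about an interview in which the interviewer asked him to write, on a whiteboard, a simple TensorFlow network that implements XOR. This is not hard in itself. Anyone who has studied neural networks knows that a single-layer perceptron cannot solve XOR, while a simple two-layer network handles it easily. The difficulty is that we usually reach for TensorFlow to build CNNs or other deep-learning networks, and almost never implement a network this simple by hand. On top of that, writing it bug-free on a whiteboard is no easy task.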
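To make the XOR claim concrete, here is a framework-free sketch in plain Python (not TensorFlow). It uses hand-picked weights rather than trained ones. Two hidden threshold units compute OR and AND, and the output unit fires when OR holds but AND does not, which is exactly XOR. A brute-force search over a small weight grid then shows that no single threshold unit on that grid reproduces XOR. The step activation, the grid, and all function names are illustrative assumptions.

```python
from itertools import product


def step(z):
    """Heaviside step activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def single_layer(x1, x2, w1, w2, b):
    """A single threshold unit (single-layer perceptron)."""
    return step(w1 * x1 + w2 * x2 + b)


def two_layer_xor(x1, x2):
    """Two layers with hand-picked weights: the hidden units compute OR and AND,
    and the output fires when OR is true but AND is not."""
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
XOR = [a ^ b for a, b in INPUTS]

# The two-layer network reproduces the XOR truth table.
two_layer_outputs = [two_layer_xor(a, b) for a, b in INPUTS]

# Search a small grid (-4.0 to 4.0 in steps of 0.5) for a single unit that
# computes XOR. This is only an illustration: the impossibility holds for all
# real weights, not just these.
grid = [k / 2 for k in range(-8, 9)]
single_layer_found = any(
    [single_layer(a, b, w1, w2, bias) for a, b in INPUTS] == XOR
    for w1, w2, bias in product(grid, repeat=3)
)

print("XOR target:      ", XOR)
print("two-layer output:", two_layer_outputs)
print("single unit on grid solves XOR:", single_layer_found)
```

The grid search can never succeed, and the reason is simple algebra. A single unit would need b ≤ 0, w1 + b > 0, w2 + b > 0, and w1 + w2 + b ≤ 0. Adding the middle two inequalities gives w1 + w2 + b > -b ≥ 0, which contradicts the last one.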

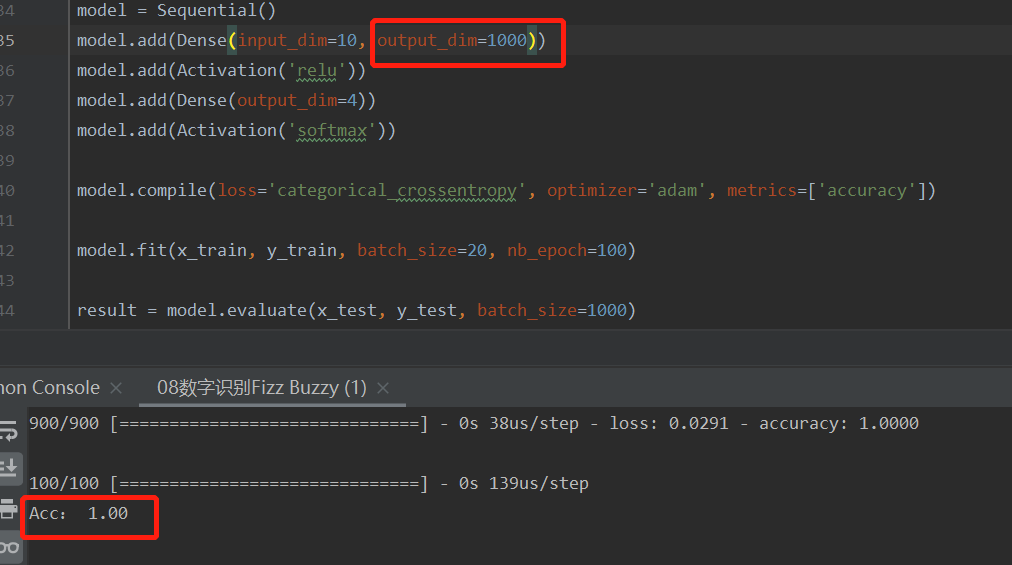

```python
import numpy as np
from keras.models import Sequential
from keras.layers import Input, Dense, Activation


def fizzbuzz(start, end):
    """Build (features, one-hot labels) for the integers start..end inclusive.

    Features: the number as 10 binary digits, least significant bit first
    (10 bits cover values up to 1023).
    Labels: [1,0,0,0] plain number, [0,1,0,0] Fizz (divisible by 3),
            [0,0,1,0] Buzz (divisible by 5), [0,0,0,1] FizzBuzz (by both).
    """
    x_train, y_train = [], []
    for i in range(start, end + 1):
        num = i
        tmp = [0] * 10
        j = 0
        while num:
            tmp[j] = num & 1  # take the lowest bit
            num = num >> 1    # shift the next bit down
            j += 1
        x_train.append(tmp)
        if i % 3 == 0 and i % 5 == 0:
            y_train.append([0, 0, 0, 1])
        elif i % 3 == 0:
            y_train.append([0, 1, 0, 0])
        elif i % 5 == 0:
            y_train.append([0, 0, 1, 0])
        else:
            y_train.append([1, 0, 0, 0])
    return np.array(x_train), np.array(y_train)


# Train on 101..1000 and hold out 1..100 as the test set.
x_train, y_train = fizzbuzz(101, 1000)
x_test, y_test = fizzbuzz(1, 100)

# One hidden layer of 1000 ReLU units and a 4-way softmax output.
model = Sequential()
model.add(Input(shape=(10,)))
model.add(Dense(1000))
model.add(Activation('relu'))
model.add(Dense(4))
model.add(Activation('softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

model.fit(x_train, y_train, batch_size=20, epochs=100)

# evaluate() returns [loss, accuracy] because one metric was requested.
result = model.evaluate(x_test, y_test, batch_size=1000)

print('Acc:', format(result[1], '0.2f'))
```
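The `fizzbuzz()` function packs two encodings into one loop. The minimal pure-Python restatement below makes them easy to check by hand. The helper names `binary_encode`, `fizzbuzz_label` and `decode` are hypothetical and are not part of the original code. `decode` shows how a predicted class index, for example one row of `np.argmax(model.predict(x_test), axis=1)`, maps back to FizzBuzz output.

```python
def binary_encode(i, num_digits=10):
    """Bits of i, least significant first, matching the while loop in fizzbuzz()."""
    return [(i >> d) & 1 for d in range(num_digits)]


def fizzbuzz_label(i):
    """Class index of the one-hot label: 0 number, 1 Fizz, 2 Buzz, 3 FizzBuzz."""
    if i % 15 == 0:
        return 3
    if i % 3 == 0:
        return 1
    if i % 5 == 0:
        return 2
    return 0


def decode(i, class_index):
    """Map a class index (e.g. the argmax of the softmax output) back to text."""
    return [str(i), "Fizz", "Buzz", "FizzBuzz"][class_index]


print(binary_encode(6))  # → [0, 1, 1, 0, 0, 0, 0, 0, 0, 0]
print([decode(i, fizzbuzz_label(i)) for i in range(1, 16)])
```

Storing the bits least significant first is arbitrary. The network only needs the same fixed order for training and test data.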

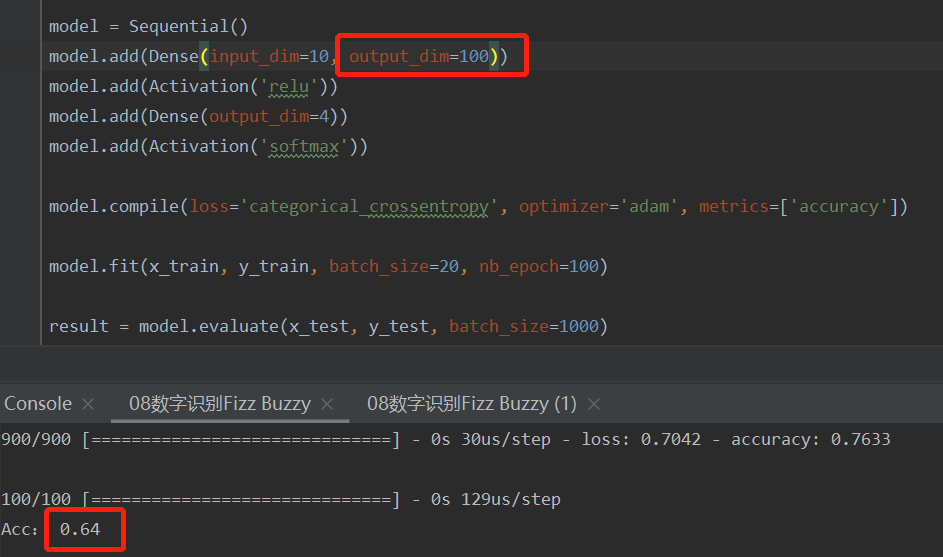

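The results vary with the parameter settings, such as the hidden-layer size, the batch size and the number of epochs.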