While configuring and starting Spark, the following error appears: py4j.protocol.Py4JError: An error occurred while calling None.None. Trace:

Authentication error: unexpected command.

Command and console output that produced the error:

ssh://root@192.168.141.130:22/root/miniconda2/envs/ai/bin/python3.6 -u /root/Desktop/kafka_test.py

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/servers/spark-2.3.4-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/export/servers/hadoop-2.7.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2019-10-17 14:56:39 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Traceback (most recent call last):

File "/root/Desktop/kafka_test.py", line 15, in <module>

spark = SparkSession.builder.appName("wordcount").getOrCreate()

File "/root/miniconda2/envs/ai/lib/python3.6/site-packages/pyspark/sql/session.py", line 173, in getOrCreate

sc = SparkContext.getOrCreate(sparkConf)

File "/root/miniconda2/envs/ai/lib/python3.6/site-packages/pyspark/context.py", line 363, in getOrCreate

SparkContext(conf=conf or SparkConf())

File "/root/miniconda2/envs/ai/lib/python3.6/site-packages/pyspark/context.py", line 129, in __init__

SparkContext._ensure_initialized(self, gateway=gateway, conf=conf)

File "/root/miniconda2/envs/ai/lib/python3.6/site-packages/pyspark/context.py", line 312, in _ensure_initialized

SparkContext._gateway = gateway or launch_gateway(conf)

File "/root/miniconda2/envs/ai/lib/python3.6/site-packages/pyspark/java_gateway.py", line 46, in launch_gateway

return _launch_gateway(conf)

File "/root/miniconda2/envs/ai/lib/python3.6/site-packages/pyspark/java_gateway.py", line 139, in _launch_gateway

java_import(gateway.jvm, "org.apache.spark.SparkConf")

File "/root/miniconda2/envs/ai/lib/python3.6/site-packages/py4j/java_gateway.py", line 176, in java_import

return_value = get_return_value(answer, gateway_client, None, None)

File "/root/miniconda2/envs/ai/lib/python3.6/site-packages/py4j/protocol.py", line 324, in get_return_value

format(target_id, ".", name, value))

py4j.protocol.Py4JError: An error occurred while calling None.None. Trace:

Authentication error: unexpected command.

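Cause: the py4j version installed in the virtual environment does not match the py4j version bundled in the python/lib directory of the Spark installation.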

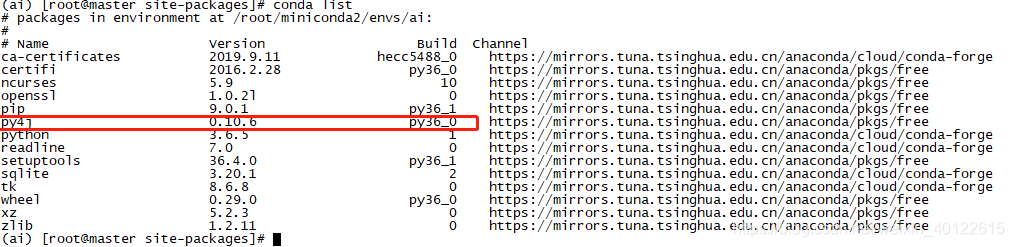

The py4j version in the virtual environment is 0.10.6.

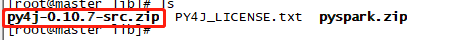

The py4j version under python/lib in the Spark installation is 0.10.7:

Location to check: /export/servers/spark-2.3.4-bin-hadoop2.7/python/lib
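The two versions can also be compared from Python instead of by eye. The following is a minimal sketch, not part of the original setup: the helper names `bundled_py4j_version` and `installed_py4j_version` are hypothetical, and it assumes Spark ships py4j as a file named `py4j-<version>-src.zip` under python/lib and that the installed package exposes `py4j.version.__version__`.

```python
import glob
import os
import re


def bundled_py4j_version(spark_home):
    """Return the py4j version bundled under <spark_home>/python/lib, or None."""
    # Assumption: the archive is named like py4j-0.10.7-src.zip
    pattern = os.path.join(spark_home, "python", "lib", "py4j-*-src.zip")
    for path in sorted(glob.glob(pattern)):
        match = re.match(r"py4j-(.+)-src\.zip$", os.path.basename(path))
        if match:
            return match.group(1)
    return None


def installed_py4j_version():
    """Return the py4j version importable in the current environment, or None."""
    try:
        from py4j.version import __version__
    except ImportError:
        return None
    return __version__


if __name__ == "__main__":
    # The fallback path is the one used in this post; adjust it for your machine.
    spark_home = os.environ.get(
        "SPARK_HOME", "/export/servers/spark-2.3.4-bin-hadoop2.7"
    )
    print("bundled with Spark:", bundled_py4j_version(spark_home))
    print("installed in env:  ", installed_py4j_version())
```

Run it with the same interpreter that runs the Spark job (here, the `ai` environment's python3.6). If the two printed versions differ, the mismatch described above is the likely cause.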

Solution:

Uninstall py4j from the virtual environment, then install the same version that ships with Spark.

# Uninstall the package from the virtual environment

conda uninstall py4j

# Install the version that matches Spark

conda install py4j=0.10.7