scala – Elasticsearch-Hadoop library cannot connect to Docker

org.elasticsearch.hadoop.rest.EsHadoopNoNodesLeftException: Connection error (check network and/or proxy settings)- all nodes failed; tried [[172.17.0.2:9200]]

at org.elasticsearch.hadoop.rest.NetworkClient.execute(NetworkClient.java:142)

at org.elasticsearch.hadoop.rest.RestClient.execute(RestClient.java:434)

at org.elasticsearch.hadoop.rest.RestClient.executeNotFoundAllowed(RestClient.java:442)

at org.elasticsearch.hadoop.rest.RestClient.exists(RestClient.java:518)

at org.elasticsearch.hadoop.rest.RestClient.touch(RestClient.java:524)

at org.elasticsearch.hadoop.rest.RestRepository.touch(RestRepository.java:491)

at org.elasticsearch.hadoop.rest.RestService.initSingleIndex(RestService.java:412)

at org.elasticsearch.hadoop.rest.RestService.createWriter(RestService.java:400)

at org.elasticsearch.spark.rdd.EsRDDWriter.write(EsRDDWriter.scala:40)

at org.elasticsearch.spark.rdd.EsSpark$$anonfun$saveToEs$1.apply(EsSpark.scala:67)

at org.elasticsearch.spark.rdd.EsSpark$$anonfun$saveToEs$1.apply(EsSpark.scala:67)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:66)

at org.apache.spark.scheduler.Task.run(Task.scala:89)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:214)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

16/08/08 12:30:46 WARN TaskSetManager: Lost task 0.0 in stage 2.0 (TID 2, localhost): org.elasticsearch.hadoop.rest.EsHadoopNoNodesLeftException: Connection error (check network and/or proxy settings)- all nodes failed; tried [[172.17.0.2:9200]]

at org.elasticsearch.hadoop.rest.NetworkClient.execute(NetworkClient.java:142)

at org.elasticsearch.hadoop.rest.RestClient.execute(RestClient.java:434)

at org.elasticsearch.hadoop.rest.RestClient.executeNotFoundAllowed(RestClient.java:442)

at org.elasticsearch.hadoop.rest.RestClient.exists(RestClient.java:518)

at org.elasticsearch.hadoop.rest.RestClient.touch(RestClient.java:524)

at org.elasticsearch.hadoop.rest.RestRepository.touch(RestRepository.java:491)

at org.elasticsearch.hadoop.rest.RestService.initSingleIndex(RestService.java:412)

at org.elasticsearch.hadoop.rest.RestService.createWriter(RestService.java:400)

at org.elasticsearch.spark.rdd.EsRDDWriter.write(EsRDDWriter.scala:40)

at org.elasticsearch.spark.rdd.EsSpark$$anonfun$saveToEs$1.apply(EsSpark.scala:67)

at org.elasticsearch.spark.rdd.EsSpark$$anonfun$saveToEs$1.apply(EsSpark.scala:67)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:66)

at org.apache.spark.scheduler.Task.run(Task.scala:89)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:214)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

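at java.lang.Thread.run(Thread.java:745)

The cause of the error above: our Elasticsearch runs in a container, and the address it reports back to the connector is the container's internal IP (172.17.0.2) rather than the host's externally reachable IP. Naturally, that address cannot be reached from outside Docker.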

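    movieDF.write
      .option("es.nodes", eSConfig.httpHosts)
      .option("es.http.timeout", "100m")
      .option("es.mapping.id", "mid")
      .option("es.nodes.wan.only", "true")
      .mode("overwrite")
      .format("org.elasticsearch.spark.sql")
      .save(eSConfig.index + "/" + ES_MOVIEW_INDEX)

As shown above, adding .option("es.nodes.wan.only","true") fixes it: the connector then skips node discovery and connects only through the addresses declared in es.nodes, so it no longer tries the container's internal IP.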
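The same setting can also be applied once for the whole job instead of per write. The following is only a minimal sketch: the object name `EsWanOnlyConf`, the helper `build`, and the host/port values are hypothetical, while `es.nodes`, `es.port` and `es.nodes.wan.only` are the connector's own setting names.

```scala
import org.apache.spark.SparkConf

object EsWanOnlyConf {
  // Hypothetical helper: builds a SparkConf carrying the Elasticsearch settings.
  // esHost should be an address reachable from the Spark executors,
  // e.g. the Docker host on which port 9200 is published (assumption).
  def build(esHost: String, esPort: Int): SparkConf =
    new SparkConf()
      .setAppName("es-wan-only-sketch")
      .set("es.nodes", esHost)
      .set("es.port", esPort.toString)
      // Disable node discovery; only the declared es.nodes are contacted.
      .set("es.nodes.wan.only", "true")
}
```

Passing the result to `SparkSession.builder().config(EsWanOnlyConf.build("localhost", 9200))` would make every read and write through the connector pick up these values, assuming the container port is published on that host.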

Then a new problem appeared:

Bailing out...

at org.elasticsearch.hadoop.rest.bulk.BulkProcessor.flush(BulkProcessor.java:519)

at org.elasticsearch.hadoop.rest.bulk.BulkProcessor.close(BulkProcessor.java:541)

at org.elasticsearch.hadoop.rest.RestRepository.close(RestRepository.java:219)

at org.elasticsearch.hadoop.rest.RestService$PartitionWriter.close(RestService.java:122)

at org.elasticsearch.spark.rdd.EsRDDWriter$$anonfun$write$1.apply(EsRDDWriter.scala:67)

at org.elasticsearch.spark.rdd.EsRDDWriter$$anonfun$write$1.apply(EsRDDWriter.scala:67)

at org.apache.spark.TaskContext$$anon$1.onTaskCompletion(TaskContext.scala:131)

at org.apache.spark.TaskContextImpl$$anonfun$markTaskCompleted$1.apply(TaskContextImpl.scala:117)

at org.apache.spark.TaskContextImpl$$anonfun$markTaskCompleted$1.apply(TaskContextImpl.scala:117)

at org.apache.spark.TaskContextImpl$$anonfun$invokeListeners$1.apply(TaskContextImpl.scala:130)

at org.apache.spark.TaskContextImpl$$anonfun$invokeListeners$1.apply(TaskContextImpl.scala:128)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.TaskContextImpl.invokeListeners(TaskContextImpl.scala:128)

at org.apache.spark.TaskContextImpl.markTaskCompleted(TaskContextImpl.scala:116)

at org.apache.spark.scheduler.Task.run(Task.scala:139)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2020-02-06 22:23:13,913 ERROR --- [ Executor task launch worker for task 10] org.apache.spark.executor.Executor (line: 91) : Exception in task 6.0 in stage 2.0 (TID 10)

org.apache.spark.util.TaskCompletionListenerException: Could not write all entries for bulk operation [33/33]. Error sample (first [5] error messages):

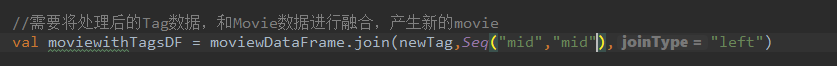

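Looking at the code again more carefully:

It turned out that joining the two DataFrames produced a duplicate field, and that caused indexing to fail when writing to Elasticsearch.

In Spark 2.4.4, when the join column has the same name in both DataFrames, specifying it once is enough.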
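To illustrate the difference, here is a small sketch under assumed data. The DataFrames `movies` and `ratings`, their contents, and the object name are hypothetical; only the column name `mid` comes from the `es.mapping.id` option used earlier. Joining on an equality expression keeps both copies of `mid`, while passing the column name as a `Seq` keeps a single copy.

```scala
import org.apache.spark.sql.SparkSession

object JoinColumnsSketch {
  // Returns the column names of (expression join, name-based join).
  def run(spark: SparkSession): (Array[String], Array[String]) = {
    import spark.implicits._

    // Hypothetical sample data sharing the join column "mid".
    val movies  = Seq((1, "Toy Story"), (2, "Jumanji")).toDF("mid", "name")
    val ratings = Seq((1, 4.5), (2, 3.0)).toDF("mid", "score")

    // Equality expression: the result contains "mid" twice.
    val duplicated = movies.join(ratings, movies("mid") === ratings("mid"))

    // Column name given once: the result contains a single "mid".
    val deduped = movies.join(ratings, Seq("mid"))

    (duplicated.columns, deduped.columns)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("join-columns-sketch")
      .master("local[*]")
      .getOrCreate()
    val (dup, dedup) = run(spark)
    println(dup.mkString(", "))   // mid, name, mid, score
    println(dedup.mkString(", ")) // mid, name, score
    spark.stop()
  }
}
```

If an expression join is needed for other reasons, dropping one side's copy afterwards, for example with `.drop(ratings("mid"))`, should give the same single-column result before writing to Elasticsearch.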
