Lab environment:

| Hostname | Role |
|---|---|
| server1 | elasticsearch + kibana |
| server2 | logstash + elasticsearch + apache |
| server3 | logstash + elasticsearch + redis |

Configure and deploy elasticsearch

Use Elasticsearch-Head (a graphical tool for operating and managing clusters) to manage the elasticsearch cluster

| Hostname | Role in the cluster |
|---|---|
| server1 | master |
| server2 | data |
| server3 | data |

For the Elasticsearch cluster configuration steps, see my earlier blog post:

https://blog.csdn.net/chaos_oper/article/details/94395633

Configure the apache service (server2)

1. Install apache

[root@server2 conf.d]# yum install -y httpd

2. Edit the test page

[root@server2 conf.d]# cd /var/www/html/

[root@server2 html]# vim index.html

www.redhat.org

3. Start the apache service

[root@server2 html]# systemctl start httpd

4. Change the permissions on the log directory so that other users can read the logs

[root@server2 conf.d]# chmod 755 /var/log/httpd/

[root@server2 conf.d]# ll -d /var/log/httpd/

drwxr-xr-x 2 root root 41 Jul 3 11:34 /var/log/httpd/
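
The permission change above can also be checked from a script rather than by reading the `ll` output. The following is a minimal sketch, assuming GNU `stat` (as shipped on RHEL/CentOS); the function name `others_can_read_dir` is a hypothetical helper, not part of the original setup.

```shell
# Sketch: succeed only if "other" users have both read (4) and
# execute (1) permission on a directory, i.e. they can list and enter it.
# Assumes GNU stat; others_can_read_dir is a hypothetical helper name.
others_can_read_dir() {
    local dir="$1" mode other
    # %a prints the octal mode, e.g. 755
    mode=$(stat -c '%a' "$dir") || return 2
    # The last digit holds the permission bits for "other"
    other=$((mode % 10))
    [ $((other & 5)) -eq 5 ]
}

# Example call (path taken from the step above):
#   others_can_read_dir /var/log/httpd/ && echo "readable by other users"
```

Checking both bits matters because read permission alone on a directory does not allow opening the files inside it.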

Install and configure redis (server3)

1. Extract redis-5.0.3.tar.gz

[root@server3 ~]# tar zxf redis-5.0.3.tar.gz

2. Install the gcc dependency

[root@server3 ~]# yum install -y gcc

3. Compile

[root@server3 ~]# cd redis-5.0.3/

[root@server3 redis-5.0.3]# make

[root@server3 redis-5.0.3]# make install

4. Install redis as a service

[root@server3 redis-5.0.3]# cd utils/

[root@server3 utils]# ./install_server.sh

5. Edit the 6379.conf file

[root@server3 utils]# vim /etc/redis/6379.conf

Change the bind directive (around line 70 of the file) so that redis listens on all interfaces:

bind 0.0.0.0

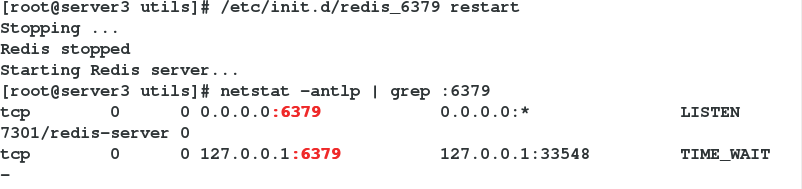

6. Start redis
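
The post does not show the command for this step. As a rough sketch, the active `bind` setting can be read back from the config file before redis is restarted. The helper name `redis_bind_addresses` is hypothetical, and the init script path in the comments assumes the defaults that `install_server.sh` offers for port 6379; adjust it if different answers were given during installation.

```shell
# Sketch: print the addresses listed in the active (uncommented) bind
# directive of a redis config file, one per line.
# redis_bind_addresses is a hypothetical helper name.
redis_bind_addresses() {
    local conf="$1"
    # Commented lines start with "#", so their first field is never "bind"
    awk '$1 == "bind" { for (i = 2; i <= NF; i++) print $i }' "$conf"
}

# Possible usage on server3 (paths assume the install_server.sh defaults):
#   redis_bind_addresses /etc/redis/6379.conf
#   /etc/init.d/redis_6379 restart
#   redis-cli -h 172.25.13.3 -p 6379 ping
```

Pinging through the external address rather than 127.0.0.1 is a quick way to see whether server2 will be able to reach redis.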

Configure and deploy logstash (server2 and server3)

1. Install ruby

[root@server3 ~]# yum install -y ruby

2. Install logstash

[root@server3 ~]# rpm -ivh logstash-6.6.1.rpm

Collect the logs on server2 and store them in the redis database deployed on server3

[root@server2 ~]# cd /etc/logstash/conf.d/

[root@server2 conf.d]# vim apache.conf

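    input {
      # Tail the Apache access log, reading from the start of the file on the first run
      file {
        path => "/var/log/httpd/access_log"
        start_position => "beginning"
      }
    }

    filter {
      # Parse each line as an Apache combined-format log entry
      grok {
        match => { "message" => "%{HTTPD_COMBINEDLOG}" }
      }
    }

    output {
      # Push the parsed events onto a redis list on server3
      redis {
        host => ["172.25.13.3:6379"]
        data_type => "list"
        key => "logstashtoredis"
      }
    }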
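
Before starting the pipeline, the config file can be syntax-checked so that a typo is reported without any events being read. This is a minimal sketch using Logstash's `--config.test_and_exit` flag; the function name `check_pipeline` and the `LOGSTASH_BIN` override are hypothetical conveniences, and the default binary path is the one used elsewhere in this post.

```shell
# Sketch: validate a Logstash pipeline file without running the pipeline.
# check_pipeline and LOGSTASH_BIN are hypothetical names; the default
# binary path matches the rpm install used in this post.
check_pipeline() {
    local conf="$1"
    if [ ! -r "$conf" ]; then
        echo "cannot read $conf" >&2
        return 2
    fi
    # --config.test_and_exit parses the config, reports problems, and exits
    "${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}" -f "$conf" --config.test_and_exit
}

# Possible usage on server2:
#   check_pipeline /etc/logstash/conf.d/apache.conf
```

The same check applies to any other file under /etc/logstash/conf.d/.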

[root@server2 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/apache.conf
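
Once the pipeline on server2 is running and apache has served a few requests, one way to confirm that events are reaching redis is to look at the length of the list. This is a minimal sketch, assuming `redis-cli` is available on the machine running the check; `REDIS_CLI`, `queue_length`, and `queue_has_events` are hypothetical names, while the host, port, and key come from apache.conf above.

```shell
# Sketch: check whether the redis list fed by server2 holds any events.
# REDIS_CLI and both function names are hypothetical; host, port, and
# key are the values used in apache.conf.
REDIS_HOST="172.25.13.3"
REDIS_PORT="6379"
REDIS_KEY="logstashtoredis"

# Print the number of entries currently in the list
queue_length() {
    "${REDIS_CLI:-redis-cli}" -h "$REDIS_HOST" -p "$REDIS_PORT" llen "$REDIS_KEY"
}

# Succeed if at least one event is waiting in the list
queue_has_events() {
    local n
    n=$(queue_length) || return 2
    [ "$n" -gt 0 ]
}

# Possible usage:
#   queue_has_events && echo "events are queued in redis"
```

Note that the list drains as soon as a consumer starts reading from it, so a length of zero is expected once the server3 pipeline is running.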

Ship the data in redis to elasticsearch

[root@server3 conf.d]# vim redis.conf

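    input {
      # Pop events from the redis list that server2 writes to
      # (the redis input takes host and port as separate options)
      redis {
        host => "172.25.13.3"
        port => 6379
        data_type => "list"
        key => "logstashtoredis"
      }
    }

    output {
      # Echo each event to the console for debugging
      stdout {}
      # Index the events into elasticsearch on server1, one index per day
      elasticsearch {
        hosts => ["172.25.13.1:9200"]
        index => "apachelog-%{+YYYY.MM.dd}"
      }
    }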

[root@server3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf
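
With both pipelines running, the daily indices should show up in elasticsearch. As a rough sketch, they can be listed through the `_cat/indices` API. The `CURL` override and the function name `list_apache_indices` are hypothetical; the address and index prefix come from redis.conf above.

```shell
# Sketch: list the apachelog-* indices created by the server3 pipeline.
# CURL and list_apache_indices are hypothetical names; the URL uses the
# elasticsearch address and index prefix from redis.conf.
ES_URL="http://172.25.13.1:9200"

list_apache_indices() {
    # ?v adds a header row to the _cat output
    "${CURL:-curl}" -s "${ES_URL}/_cat/indices/apachelog-*?v"
}

# Possible usage:
#   list_apache_indices
```

The same indices should also be visible in Elasticsearch-Head, and an index pattern of `apachelog-*` can then be created in kibana on server1.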