
Preface

This article is based on the Pseudo-Distributed mode of a SingleCluster setup. I have not yet looked into a full cluster.

Document list

Hadoop

ssh: Could not resolve hostname

When starting DFS in Pseudo-Distributed mode:

[work@hostname123 ~/hadoop]sbin/start-dfs.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [hostname123]

hostname123: ssh: Could not resolve hostname hostname123: Name or service not known

This happens because the hostname hostname123 cannot be resolved. Adding an entry for it to /etc/hosts fixes the problem:

sudo vim /etc/hosts

...

127.0.0.1 hostname123

...
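Before and after editing /etc/hosts, it can help to check whether the hostname is actually listed there. The following is a minimal sketch, not part of the original setup: the function name `host_in_hosts_file` is made up for illustration, and it only inspects the hosts file, not DNS or other resolvers.

```shell
# Return 0 if NAME appears as a hostname or alias in a hosts file.
# The function name and its arguments are illustrative assumptions;
# the file defaults to /etc/hosts.
host_in_hosts_file() {
  local name="$1"
  local file="${2:-/etc/hosts}"
  awk -v name="$name" '
    { sub(/#.*/, "") }                      # strip comments
    { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
    END { exit !found }                     # 0 when found, 1 otherwise
  ' "$file"
}

# Usage: report whether this machine's hostname has an entry.
if host_in_hosts_file "$(hostname)"; then
  echo "hostname is listed in /etc/hosts"
else
  echo "hostname is missing from /etc/hosts"
fi
```

If the second message appears, the `Could not resolve hostname` error from `sbin/start-dfs.sh` is to be expected.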

For hostname issues, also read the next section.

Failed on local exception: java.io.IOException: Connection reset by peer

This concerns one of the configurations in the Pseudo-Distributed Operation section of the official documentation.

etc/hadoop/core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

With this configuration, after DFS is started, logs/hadoop-work-datanode-localhost.log reports:

2019-05-21 10:40:47,218 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: localhost/127.0.0.1:9000

Running bin/hdfs dfs -mkdir /user from the example also fails:

DestHost:destPort localhost:9000 , LocalHost:localPort localhost/127.0.0.1:0. Failed on local exception: java.io.IOException: Connection reset by peer

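The NameNode web interface from the official example, http://localhost:9870/, is unreachable as well.

The likely cause is a conflict from the DataNode using localhost. (I did not investigate in detail; there was a bind error, and the logs contained many other errors.)

The fix is to replace localhost with the internal network address. To avoid unwanted side effects from changing localhost itself, add a new host entry instead: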

sudo vim /etc/hosts

...

# internal network interface address

192.168.23.12 hadoophost

...

Then change etc/hadoop/core-site.xml to:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoophost:9000</value>

</property>

</configuration>
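To double-check which address ended up in the file, the value can be read back from the shell. This is a rough sketch: `get_hadoop_property` is a hypothetical helper, and it assumes the simple layout shown above (each `<name>` followed by its `<value>`), so it is not a real XML parser.

```shell
# Print the <value> of property NAME from a Hadoop-style XML config file.
# Hypothetical helper; assumes <name> and <value> appear in that order,
# as in the core-site.xml snippets in this article.
get_hadoop_property() {
  local name="$1"
  local file="$2"
  awk -v name="$name" '
    index($0, "<name>" name "</name>") { hit = 1 }
    hit && match($0, /<value>[^<]*<\/value>/) {
      # "<value>" is 7 characters and "</value>" is 8, hence 7 and 15
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$file"
}

# Usage: run from the Hadoop installation directory.
conf="etc/hadoop/core-site.xml"
if [ -f "$conf" ]; then
  echo "fs.defaultFS = $(get_hadoop_property fs.defaultFS "$conf")"
fi
```

Hadoop also ships `hdfs getconf -confKey fs.defaultFS`, which should report the value the tools actually load; the sketch above only reads the file on disk.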

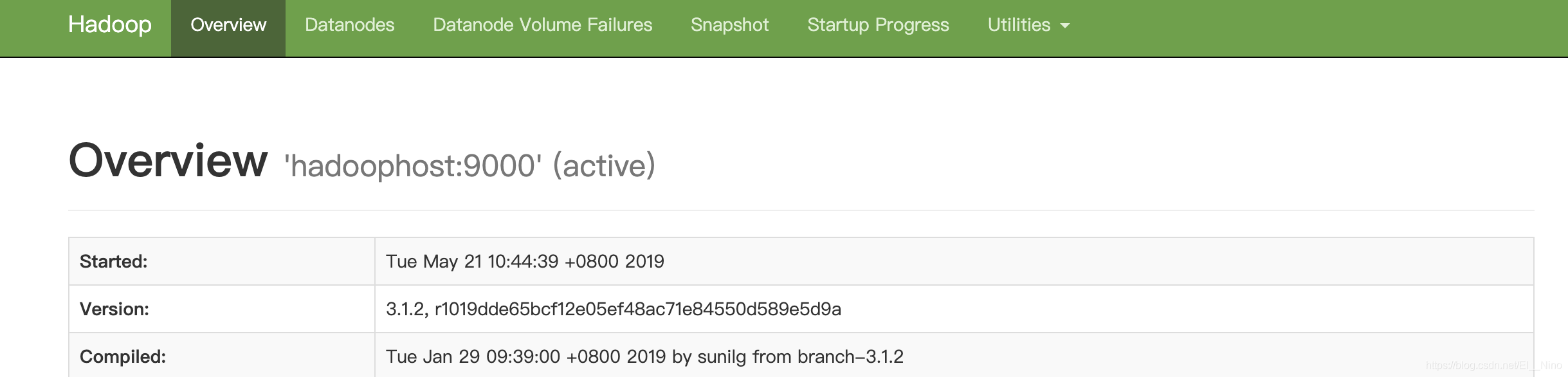

Then restart DFS. The log now looks normal:

2019-05-21 10:44:44,089 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Successfully sent block report 0xa93edda077e08d43, containing 1 storage report(s), of which we sent 1. The reports had 0 total blocks and used 1 RPC(s). This took 5 msec to generate and 77 msecs for RPC and NN processing. Got back one command: FinalizeCommand/5.

2019-05-21 10:44:44,089 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Got finalize command for block pool BP-1814454983-127.0.0.1-1558406194589

localhost:9870 is now reachable as well.

mkdir: `input': No such file or directory

Step 5 of the Execution part of Pseudo-Distributed Operation in the official documentation says to run:

bin/hdfs dfs -mkdir input

Running it fails with:

mkdir: `input': No such file or directory

Run this instead, so that missing parent directories are created as well:

bin/hdfs dfs -mkdir -p input
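A plausible explanation for why `-p` helps: HDFS resolves a relative path such as `input` against the user's home directory, which is `/user/<username>` by default, and a plain `-mkdir` fails when that parent does not exist yet. The sketch below only models this resolution rule in the shell; `resolve_hdfs_path` is an illustrative helper and does not talk to HDFS.

```shell
# Model how a path argument maps to an absolute HDFS path for a user,
# assuming the default home directory prefix /user.
# Illustrative helper only; it does not contact HDFS.
resolve_hdfs_path() {
  local user="$1"
  local path="$2"
  case "$path" in
    /*) printf '%s\n' "$path" ;;                    # absolute: unchanged
    *)  printf '/user/%s/%s\n' "$user" "$path" ;;   # relative: under home
  esac
}

# Usage: "work" is the user from the shell prompt earlier in the article.
resolve_hdfs_path work input    # prints /user/work/input
```

So `bin/hdfs dfs -mkdir input` needs `/user/work` to exist first, either from an explicit `-mkdir /user/<username>` or from `-p`.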

Hive

schematool -dbType mysql -initSchema

To store the metastore in MySQL instead of Derby, install MariaDB (MySQL) and download the mysql-connector-java-8.0.16.jar library.

MySQL Connector/Java download

Note: choose Platform Independent.

Place mysql-connector-java-8.0.16.jar in $HIVE_HOME/lib/.

Edit $HIVE_HOME/conf/hive-site.xml (hive-default.xml.template is the default sample configuration).

Database: hivedb

Driver: com.mysql.cj.jdbc.Driver

Database user: root

Database password: root

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hivedb?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

<description>password to use against metastore database</description>

</property>

</configuration>

Then run the initialization:

schematool -dbType mysql -initSchema

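PS: By default the MySQL account must have a password; without one, a message says a password is required. It may be possible to work without a password, but I did not look into it.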

beeline Connection refused

Running

beeline -u jdbc:hive2://localhost:10000

prints:

Connecting to jdbc:hive2://localhost:10000

19/05/21 19:05:20 [main]: WARN jdbc.HiveConnection: Failed to connect to localhost:10000

Could not open connection to the HS2 server. Please check the server URI and if the URI is correct, then ask the administrator to check the server status.

Error: Could not open client transport with JDBC Uri: jdbc:hive2://localhost:10000: java.net.ConnectException: Connection refused (Connection refused) (state=08S01,code=0)

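Most likely hiveserver2 has not finished starting. Check whether anything is listening on port 10000. If not, check again a few times, and connect once the port is open.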

netstat -anop|grep 10000

...

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

tcp 0 0 127.0.0.1:58990 127.0.0.1:10000 ESTABLISHED 19496/java keepalive (6832.63/0/0)

...
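The "check a few times" step can be scripted. The sketch below is an assumption-laden helper, not something from Hive itself: `port_is_listening` and `wait_for_listener` are made-up names, and the field positions assume the Linux `netstat -an` column layout. It looks specifically for the LISTEN state, since an ESTABLISHED line only shows an existing client connection.

```shell
# Read `netstat -an`-style lines on stdin; succeed if a TCP socket is in
# the LISTEN state on the given local port. Assumes the Linux column
# layout: proto, recv-q, send-q, local address, foreign address, state.
port_is_listening() {
  local port="$1"
  awk -v port="$port" '
    $1 ~ /^tcp/ && $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
    END { exit !found }
  '
}

# Poll until the port is listening, up to TRIES times, DELAY seconds apart.
wait_for_listener() {
  local port="$1"
  local tries="${2:-10}"
  local delay="${3:-3}"
  local i=0
  while [ "$i" -lt "$tries" ]; do
    if netstat -an 2>/dev/null | port_is_listening "$port"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage (commented out so the sketch has no side effects):
# wait_for_listener 10000 && beeline -u jdbc:hive2://localhost:10000
```

The default of 10 tries at 3-second intervals is arbitrary; hiveserver2 startup time varies by machine.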

Expression not in GROUP BY

When using GROUP BY in Hive, a query such as

select col_1,col_2 from table_name group by col_1;

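reports:

FAILED: SemanticException [Error 10025]: Line 1:12 Expression not in GROUP BY key 'col_2'

There are two ways to fix it:

- If the value of col_2 does not matter and a group contains several col_2 values, change the statement to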

select col_1, collect_set( col_2 )[0] from table_name group by col_1;

- If the col_2 values differ and each one matters, change it to

select col_1,col_2 from table_name group by col_1,col_2;

http://one-line-it.blogspot.com/2012/11/hive-expression-not-in-group-by-key.html