一、损失函数概念

1.1 损失函数是什么?

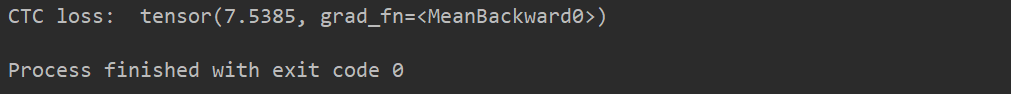

图中绿色方块为真实数据点,蓝色直线为线性回归模型的结果,可以看模型输出点与真实数据点存在一定的差距,而这个差距常用损失函数来进行描述

损失函数:衡量模型输出与真实标签的差异

损失函数(Loss Function): 计算一个样本的损失值

代价函数(Cost Function): 计算整个训练集样本的损失的平均值

目标函数(Objective Function):

1.2 Loss in PyTorch

class _Loss(Module):
    def __init__(self, size_average=None, reduce=None, reduction='mean'):
        super(_Loss, self).__init__()
        if size_average is not None or reduce is not None:
            self.reduction = _Reduction.legacy_get_string(size_average, reduce)
        else:
            self.reduction = reduction

Loss classes in PyTorch also inherit from nn.Module, so a loss behaves like a network layer. The size_average and reduce parameters are deprecated and no longer need to be used; the reduction parameter replaces them.
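To make the role of reduction concrete, here is a minimal sketch using nn.L1Loss on three made-up values (the tensors `pred` and `label` are illustrative assumptions, not data from this article). It shows that a loss object is an nn.Module and how the three modes relate to each other.

```python
import torch
import torch.nn as nn

# Illustrative values only
pred = torch.tensor([1.0, 2.0, 4.0])
label = torch.tensor([1.0, 0.0, 1.0])

loss_module = nn.L1Loss(reduction='none')
is_module = isinstance(loss_module, nn.Module)  # losses are ordinary modules

per_sample = loss_module(pred, label)                 # one value per element
total = nn.L1Loss(reduction='sum')(pred, label)       # sum of per_sample
average = nn.L1Loss(reduction='mean')(pred, label)    # mean of per_sample

print(is_module, per_sample, total, average)
```

With reduction='none' the result keeps the input shape, while 'sum' and 'mean' collapse it to a scalar that can be passed directly to backward().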

二、交叉熵的概念

交叉熵 = 信息熵 + 相对熵

信息熵:描述信息的不确定程度,熵越大,不确定性越大,数学描述为自信息的期望

自信息:衡量单个输出单个事件的不确定性

交叉熵:衡量两个分布之间的相似度

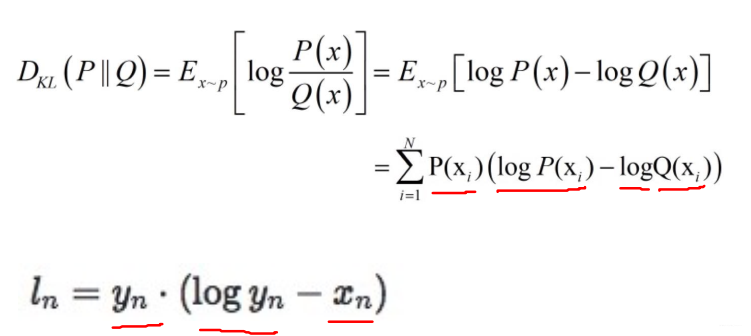

相对熵:又称为KL散度,用来衡量两个分布之间的差异(距离)
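The relationship between these four quantities can be written out explicitly. The following is the standard textbook formulation, with P the true distribution and Q the model distribution; the symbols are chosen here for illustration and may differ from those in the figure above.

```latex
\begin{aligned}
I(x) &= -\log P(x) \\
H(P) &= \mathbb{E}_{x \sim P}\,[I(x)] = -\sum_i P(x_i)\,\log P(x_i) \\
D_{KL}(P \,\|\, Q) &= \sum_i P(x_i)\,\log \frac{P(x_i)}{Q(x_i)} \\
H(P, Q) &= -\sum_i P(x_i)\,\log Q(x_i) = H(P) + D_{KL}(P \,\|\, Q)
\end{aligned}
```

Since H(P) depends only on the fixed training labels, minimizing the cross entropy H(P, Q) is equivalent to minimizing the relative entropy.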

III. Common Loss Functions

3.1 nn.CrossEntropyLoss

nn.CrossEntropyLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean')

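Function: combines nn.LogSoftmax() and nn.NLLLoss() to compute the cross entropy

Main parameters:

- weight: per-class weights applied to the loss

- ignore_index: a class to ignore; samples with this label contribute no loss

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element or per sample

  - sum: sum over all elements, returns a scalar

  - mean: weighted average, returns a scalar

Notes:

- nn.LogSoftmax() normalizes the outputs into probabilities between 0 and 1 with softmax and takes their logarithm; nn.NLLLoss() then computes the cross entropy from these log-probabilities

- Early versions used size_average and reduce to choose the reduction mode; now only the reduction parameter is used

Cross entropy:

H(P, Q) = -sum_i P(x_i) * log(Q(x_i))

Cross-entropy loss function:

loss(x, class) = -log(exp(x[class]) / sum_j exp(x[j])) = -x[class] + log(sum_j exp(x[j]))

- Compared with the cross-entropy formula, Q(x) is the softmax output

- Because the sample has already been drawn and its label is known, P(x) = 1 for the true class, so no P(x_i) term appears

- When the weight parameter is set, the loss of each class is scaled by its weight: loss(x, class) = weight[class] * (-x[class] + log(sum_j exp(x[j])))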
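As a quick check of the points above, the sketch below compares nn.CrossEntropyLoss with the two-step LogSoftmax + NLLLoss pipeline and with the closed-form expression, then reproduces the weighted mean by hand. The variable names are illustrative; the data matches the example used in this section.

```python
import torch
import torch.nn as nn

logits = torch.tensor([[1.0, 2.0], [1.0, 3.0], [1.0, 3.0]])
labels = torch.tensor([0, 1, 1])

# One-step cross entropy
ce = nn.CrossEntropyLoss(reduction='none')(logits, labels)

# Two-step equivalent: LogSoftmax followed by NLLLoss
log_probs = nn.LogSoftmax(dim=1)(logits)
nll = nn.NLLLoss(reduction='none')(log_probs, labels)

# Closed form: -x[class] + log(sum_j exp(x[j]))
by_hand = -logits[torch.arange(3), labels] + torch.logsumexp(logits, dim=1)

# With class weights, 'mean' divides by the sum of the weights of the targets
w = torch.tensor([1.0, 2.0])
weighted_mean = nn.CrossEntropyLoss(weight=w, reduction='mean')(logits, labels)
by_hand_mean = (w[labels] * ce).sum() / w[labels].sum()

print(ce, nll, by_hand, weighted_mean, by_hand_mean)
```

Note that with weights the mean is normalized by the total weight of the targets rather than by the number of samples.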

Code:

import torch
import torch.nn as nn
import torch.nn.functional as F
import numpy as np

# fake data
inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)
target = torch.tensor([0, 1, 1], dtype=torch.long)

# ----------------------------------- CrossEntropy loss: reduction -----------------------------------
# flag = 0
flag = 1
if flag:
    # define the loss functions
    loss_f_none = nn.CrossEntropyLoss(weight=None, reduction='none')
    loss_f_sum = nn.CrossEntropyLoss(weight=None, reduction='sum')
    loss_f_mean = nn.CrossEntropyLoss(weight=None, reduction='mean')

    # forward
    loss_none = loss_f_none(inputs, target)
    loss_sum = loss_f_sum(inputs, target)
    loss_mean = loss_f_mean(inputs, target)

    # view
    print("Cross Entropy Loss:\n ", loss_none, loss_sum, loss_mean)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    idx = 0
    input_1 = inputs.detach().numpy()[idx]  # [1, 2]
    target_1 = target.numpy()[idx]          # 0

    # first term: the logit of the target class
    x_class = input_1[target_1]

    # second term: log of the summed exponentials
    sigma_exp_x = np.sum(np.exp(input_1))
    log_sigma_exp_x = np.log(sigma_exp_x)

    # print the loss
    loss_1 = -x_class + log_sigma_exp_x
    print("Loss of the first sample: ", loss_1)

# ----------------------------------- weight -----------------------------------
# flag = 0
flag = 1
if flag:
    # define the loss functions
    weights = torch.tensor([1, 2], dtype=torch.float)
    # weights = torch.tensor([0.7, 0.3], dtype=torch.float)
    loss_f_none_w = nn.CrossEntropyLoss(weight=weights, reduction='none')
    loss_f_sum = nn.CrossEntropyLoss(weight=weights, reduction='sum')
    loss_f_mean = nn.CrossEntropyLoss(weight=weights, reduction='mean')

    # forward
    loss_none_w = loss_f_none_w(inputs, target)
    loss_sum = loss_f_sum(inputs, target)
    loss_mean = loss_f_mean(inputs, target)

    # view
    print("\nweights: ", weights)
    print(loss_none_w, loss_sum, loss_mean)

# --------------------------------- compute by hand
flag = 0
# flag = 1
if flag:
    weights = torch.tensor([1, 2], dtype=torch.float)
    # targets [0, 1, 1] select weights [1, 2, 2]; their sum normalizes the weighted mean
    weights_all = np.sum(list(map(lambda x: weights.numpy()[x], target.numpy())))
    mean = 0
    loss_sep = loss_none.detach().numpy()  # unweighted per-sample losses from the first block
    for i in range(target.shape[0]):
        x_class = target.numpy()[i]
        tmp = loss_sep[i] * (weights.numpy()[x_class] / weights_all)
        mean += tmp
    print(mean)

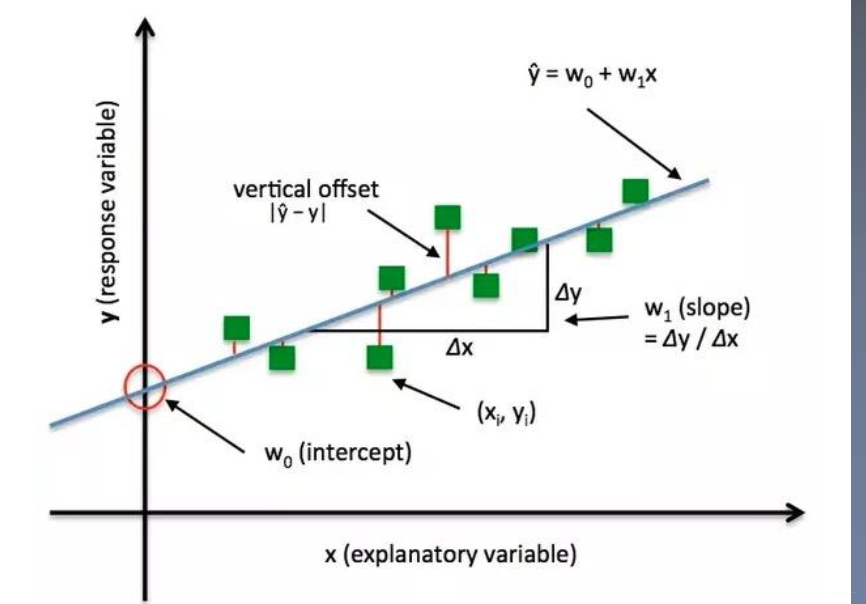

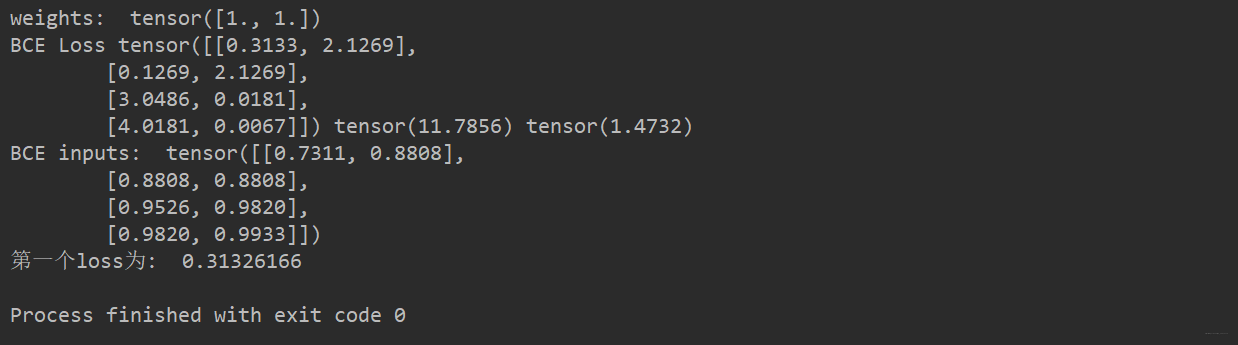

运行结果:

3.2 nn.NLLLoss

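nn.NLLLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean')

Function: implements the negation step of the negative log-likelihood; it picks the input value at the target class and negates it, so the input is expected to already contain log-probabilities

Main parameters:

- weight: per-class weights applied to the loss

- ignore_index: a class to ignore

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element

  - sum: sum over all elements, returns a scalar

  - mean: weighted average, returns a scalar

Loss function:

l_n = -weight[y_n] * x[n, y_n]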

import torch
import torch.nn as nn
import torch.nn.functional as F
import numpy as np

# fake data
inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)
target = torch.tensor([0, 1, 1], dtype=torch.long)

# ----------------------------------- 2 NLLLoss -----------------------------------
# flag = 0
flag = 1
if flag:
    weights = torch.tensor([1, 1], dtype=torch.float)
    loss_f_none_w = nn.NLLLoss(weight=weights, reduction='none')
    loss_f_sum = nn.NLLLoss(weight=weights, reduction='sum')
    loss_f_mean = nn.NLLLoss(weight=weights, reduction='mean')

    # forward
    loss_none_w = loss_f_none_w(inputs, target)
    loss_sum = loss_f_sum(inputs, target)
    loss_mean = loss_f_mean(inputs, target)

    # view
    print("\nweights: ", weights)
    print("NLL Loss", loss_none_w, loss_sum, loss_mean)

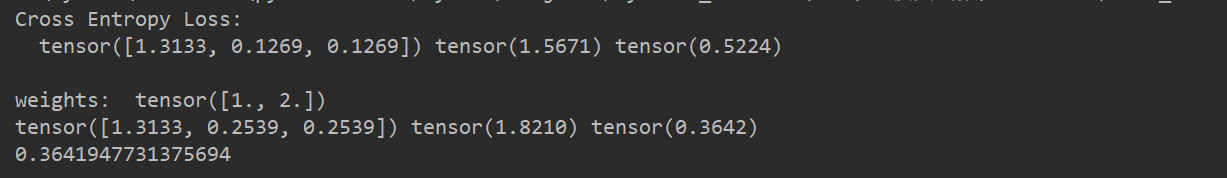

As the output shows, NLLLoss simply negates the input value at the target class

3.3 nn.BCELoss

nn.BCELoss(weight=None, size_average=None, reduce=None, reduction='mean')

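Function: binary cross entropy

Note: input values must lie in [0, 1]

Main parameters:

- weight: a rescaling weight for the loss of each element; it is broadcast against the input, so a vector of length C weights each class column

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

l_n = -w_n * [y_n * log(x_n) + (1 - y_n) * log(1 - x_n)]

- x_n is the probability output by the model

- y_n is the label, with value 0 or 1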

import torch
import torch.nn as nn
import torch.nn.functional as F
import numpy as np

# fake data
inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)
target = torch.tensor([0, 1, 1], dtype=torch.long)

# ----------------------------------- 3 BCE Loss -----------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)
    target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)
    target_bce = target

    # BCELoss expects probabilities, so squash the raw scores into [0, 1] first
    inputs = torch.sigmoid(inputs)

    weights = torch.tensor([1, 1], dtype=torch.float)
    loss_f_none_w = nn.BCELoss(weight=weights, reduction='none')
    loss_f_sum = nn.BCELoss(weight=weights, reduction='sum')
    loss_f_mean = nn.BCELoss(weight=weights, reduction='mean')

    # forward
    loss_none_w = loss_f_none_w(inputs, target_bce)
    loss_sum = loss_f_sum(inputs, target_bce)
    loss_mean = loss_f_mean(inputs, target_bce)

    # view
    print("\nweights: ", weights)
    print("BCE Loss", loss_none_w, loss_sum, loss_mean)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    idx = 0
    x_i = inputs.detach().numpy()[idx, idx]
    y_i = target.numpy()[idx, idx]

    # loss
    # l_i = -[ y_i * np.log(x_i) + (1-y_i) * np.log(1-x_i) ]  # np.log(0) gives -inf, and 0 * -inf is nan
    l_i = -y_i * np.log(x_i) if y_i else -(1-y_i) * np.log(1-x_i)

    # print the loss
    print("BCE inputs: ", inputs)
    print("Loss of the first element: ", l_i)

3.4 nn.BCEWithLogitsLoss

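nn.BCEWithLogitsLoss(weight=None, size_average=None, reduce=None, reduction='mean', pos_weight=None)

Function: combines a Sigmoid with binary cross entropy

Note: do not add a sigmoid function at the end of the network

Main parameters:

- pos_weight: weight of the positive samples, used to balance positive and negative samples

- weight: a rescaling weight for the loss of each element, broadcast against the input

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

l_n = -w_n * [y_n * log(σ(x_n)) + (1 - y_n) * log(1 - σ(x_n))]

- σ is the sigmoid function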
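The following sketch checks two claims from the description above: that nn.BCEWithLogitsLoss on raw scores matches nn.BCELoss applied after a sigmoid, and that pos_weight scales only the loss of positive (y = 1) elements. The pos_weight value of 3 per class is an arbitrary choice for illustration.

```python
import torch
import torch.nn as nn

logits = torch.tensor([[1.0, 2.0], [2.0, 2.0], [3.0, 4.0], [4.0, 5.0]])
labels = torch.tensor([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])

# Fused version works on raw scores
fused = nn.BCEWithLogitsLoss(reduction='none')(logits, labels)

# Two-step version: explicit sigmoid, then plain BCE
two_step = nn.BCELoss(reduction='none')(torch.sigmoid(logits), labels)

# pos_weight (one entry per class) multiplies only the y = 1 term
pos_w = torch.tensor([3.0, 3.0])
weighted = nn.BCEWithLogitsLoss(reduction='none', pos_weight=pos_w)(logits, labels)
expected = torch.where(labels == 1, 3.0 * fused, fused)

print(fused, two_step, weighted)
```

The fused version is generally preferred because it computes the logarithm of the sigmoid in a numerically stable way.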

import torch
import torch.nn as nn
import torch.nn.functional as F
import numpy as np

# fake data
inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)
target = torch.tensor([0, 1, 1], dtype=torch.long)

# ----------------------------------- 4 BCE with Logits Loss -----------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)
    target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)
    target_bce = target

    # no explicit sigmoid here: the loss applies it internally
    # inputs = torch.sigmoid(inputs)

    weights = torch.tensor([1, 1], dtype=torch.float)
    loss_f_none_w = nn.BCEWithLogitsLoss(weight=weights, reduction='none')
    loss_f_sum = nn.BCEWithLogitsLoss(weight=weights, reduction='sum')
    loss_f_mean = nn.BCEWithLogitsLoss(weight=weights, reduction='mean')

    # forward
    loss_none_w = loss_f_none_w(inputs, target_bce)
    loss_sum = loss_f_sum(inputs, target_bce)
    loss_mean = loss_f_mean(inputs, target_bce)

    # view
    print("\nweights: ", weights)
    print(loss_none_w, loss_sum, loss_mean)

# --------------------------------- pos weight
flag = 0
# flag = 1
if flag:
    inputs = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)
    target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)
    target_bce = target

    # inputs = torch.sigmoid(inputs)

    weights = torch.tensor([1], dtype=torch.float)
    pos_w = torch.tensor([3], dtype=torch.float)  # positive elements count 3 times

    loss_f_none_w = nn.BCEWithLogitsLoss(weight=weights, reduction='none', pos_weight=pos_w)
    loss_f_sum = nn.BCEWithLogitsLoss(weight=weights, reduction='sum', pos_weight=pos_w)
    loss_f_mean = nn.BCEWithLogitsLoss(weight=weights, reduction='mean', pos_weight=pos_w)

    # forward
    loss_none_w = loss_f_none_w(inputs, target_bce)
    loss_sum = loss_f_sum(inputs, target_bce)
    loss_mean = loss_f_mean(inputs, target_bce)

    # view
    print("\npos_weights: ", pos_w)
    print(loss_none_w, loss_sum, loss_mean)

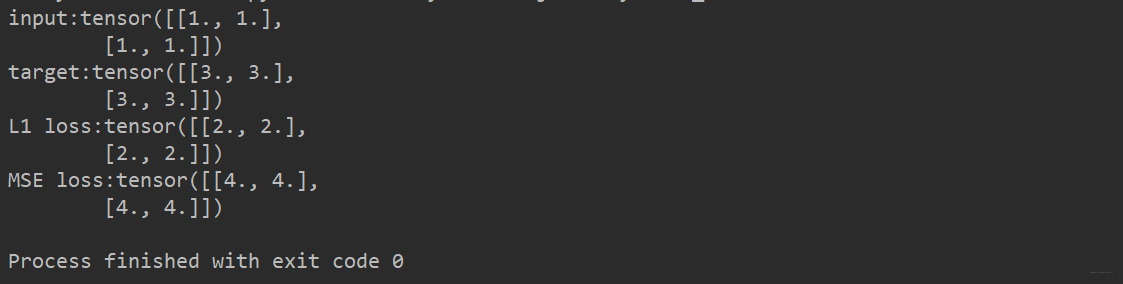

3.5 nn.L1Loss

nn.L1Loss(size_average=None, reduce=None, reduction='mean')

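Function: computes the absolute value of the difference between inputs and target

Main parameters:

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element or per sample

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

l_n = |x_n - y_n|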

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

set_seed(1)  # set the random seed

# ------------------------------------------------- 5 L1 loss ----------------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.ones((2, 2))
    target = torch.ones((2, 2)) * 3

    loss_f = nn.L1Loss(reduction='none')
    loss = loss_f(inputs, target)

    print("input:{}\ntarget:{}\nL1 loss:{}".format(inputs, target, loss))

3.6 nn.MSELoss

nn.MSELoss(size_average=None, reduce=None, reduction='mean')

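Function: computes the squared difference between inputs and target, l_n = (x_n - y_n)^2

Main parameters:

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element or per sample

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar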

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

set_seed(1)  # set the random seed

# ------------------------------------------------- 6 MSE loss ----------------------------------------------
# same data as in the L1 loss example, repeated so that this snippet runs on its own
inputs = torch.ones((2, 2))
target = torch.ones((2, 2)) * 3

loss_f_mse = nn.MSELoss(reduction='none')
loss_mse = loss_f_mse(inputs, target)

print("MSE loss:{}".format(loss_mse))

Output:

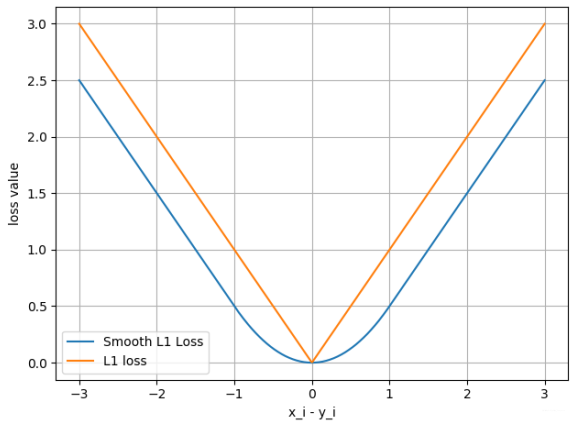

3.7 nn.SmoothL1Loss

nn.SmoothL1Loss(size_average=None, reduce=None, reduction='mean')

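Function: a smoothed L1Loss

Main parameters:

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element or per sample

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

loss(x, y) = (1/n) * sum_i z_i

- where z_i = 0.5 * (x_i - y_i)^2 if |x_i - y_i| < 1, and z_i = |x_i - y_i| - 0.5 otherwise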
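The piecewise definition of z_i can be reproduced directly with torch.where. This is a minimal sketch that assumes the default threshold of 1 between the quadratic and linear regions; the sample points are arbitrary.

```python
import torch
import torch.nn as nn

x = torch.linspace(-3, 3, steps=13)
y = torch.zeros_like(x)

diff = torch.abs(x - y)
# Quadratic near zero, linear (shifted by 0.5) further out
by_hand = torch.where(diff < 1, 0.5 * diff ** 2, diff - 0.5)

builtin = nn.SmoothL1Loss(reduction='none')(x, y)
print(by_hand, builtin)
```

The quadratic region makes the gradient shrink smoothly near zero, while the linear region keeps large errors from dominating the way they do under MSE.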

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

set_seed(1)  # set the random seed

# ------------------------------------------------- 7 Smooth L1 loss ----------------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.linspace(-3, 3, steps=500)
    target = torch.zeros_like(inputs)

    loss_f = nn.SmoothL1Loss(reduction='none')
    loss_smooth = loss_f(inputs, target)
    loss_l1 = np.abs(inputs.numpy())

    # plot both losses against the residual x_i - y_i
    plt.plot(inputs.numpy(), loss_smooth.numpy(), label='Smooth L1 Loss')
    plt.plot(inputs.numpy(), loss_l1, label='L1 loss')
    plt.xlabel('x_i - y_i')
    plt.ylabel('loss value')
    plt.legend()
    plt.grid()
    plt.show()

Output:

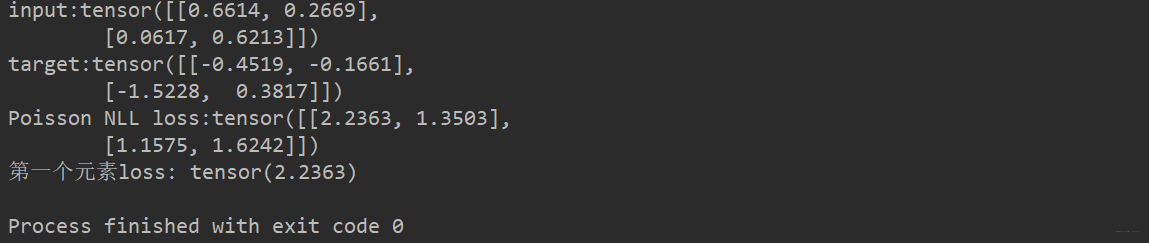

3.8 nn.PoissonNLLLoss

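nn.PoissonNLLLoss(log_input=True, full=False, size_average=None, eps=1e-08, reduce=None, reduction='mean')

Function: negative log-likelihood loss for a Poisson distribution

Main parameters:

- log_input: whether the input is in log form; this determines which formula is used

  - log_input = True: loss(input, target) = exp(input) - target * input

  - log_input = False: loss(input, target) = input - target * log(input + eps)

- full: whether to compute the full loss, i.e. to add the Stirling approximation term; defaults to False

- eps: a small correction term that keeps log(input) from evaluating log(0) when log_input = False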
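The two formulas describe the same quantity seen from different parameterizations. The sketch below, using made-up positive rates and non-negative counts, evaluates both modes and checks them against the expressions listed above.

```python
import torch
import torch.nn as nn

# Illustrative data: predicted Poisson rates (positive) and observed counts
rate = torch.tensor([[0.5, 2.0], [1.5, 4.0]])
count = torch.tensor([[1.0, 2.0], [0.0, 5.0]])
eps = 1e-8

# log_input=True: the network is assumed to output log(rate)
log_rate = torch.log(rate)
loss_log = nn.PoissonNLLLoss(log_input=True, full=False, reduction='none')(log_rate, count)
hand_log = torch.exp(log_rate) - count * log_rate

# log_input=False: the network is assumed to output the rate itself
loss_raw = nn.PoissonNLLLoss(log_input=False, full=False, eps=eps, reduction='none')(rate, count)
hand_raw = rate - count * torch.log(rate + eps)

print(loss_log, loss_raw)
```

Both modes give the same loss values here, since exp(log(rate)) = rate; the log form is convenient when the network output is unconstrained.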

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

set_seed(1)  # set the random seed

# ------------------------------------------------- 8 Poisson NLL Loss ----------------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.randn((2, 2))
    target = torch.randn((2, 2))

    loss_f = nn.PoissonNLLLoss(log_input=True, full=False, reduction='none')
    loss = loss_f(inputs, target)

    print("input:{}\ntarget:{}\nPoisson NLL loss:{}".format(inputs, target, loss))

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    idx = 0
    # log_input=True formula: exp(input) - target * input
    loss_1 = torch.exp(inputs[idx, idx]) - target[idx, idx]*inputs[idx, idx]
    print("Loss of the first element:", loss_1)

3.9 nn.KLDivLoss

nn.KLDivLoss(size_average=None, reduce=None, reduction='mean')

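Function: computes the KL divergence (KLD), i.e. the relative entropy

Note: the input must be log-probabilities; convert the network output to probabilities and take the logarithm beforehand, which nn.LogSoftmax() does in one step

Main parameters:

- reduction: none/sum/mean/batchmean

  - batchmean: sum of the loss divided by the batch size

  - none: compute the loss per element

  - sum: sum over all elements, returns a scalar

  - mean: average over all elements, returns a scalar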
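A minimal sketch of the intended usage, assuming made-up logits: the model output goes through log_softmax before being passed to nn.KLDivLoss, and the element-wise result matches target * (log(target) - input). The names `log_q` and `p` are chosen here for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Illustrative model scores and target distributions (each row of p sums to 1)
logits = torch.tensor([[2.0, 1.0, 0.5], [0.5, 1.0, 2.0]])
p = torch.tensor([[0.9, 0.05, 0.05], [0.1, 0.7, 0.2]])

# KLDivLoss expects log-probabilities as its first argument
log_q = F.log_softmax(logits, dim=1)

elementwise = nn.KLDivLoss(reduction='none')(log_q, p)
by_hand = p * (torch.log(p) - log_q)

# batchmean: total divided by the batch size (2 here)
batchmean = nn.KLDivLoss(reduction='batchmean')(log_q, p)
print(elementwise, batchmean)
```

Summing each row of the element-wise result gives the KL divergence of that sample, which is why batchmean rather than mean corresponds to the mathematical definition averaged over the batch.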

import torch

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

from tools.common_tools import set_seed

set_seed(1) # 设置随机种子

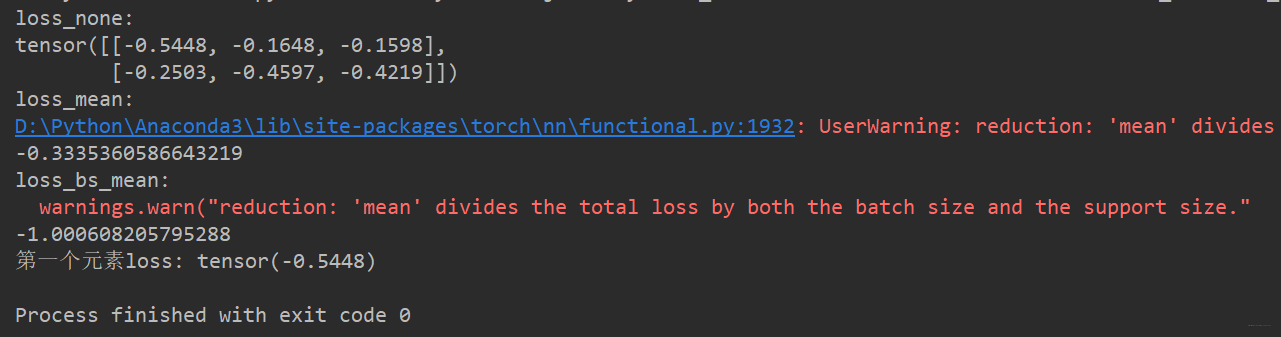

# ------------------------------------------------- 9 KL Divergence Loss ----------------------------------------------

# flag = 0

flag = 1

if flag:

inputs = torch.tensor([[0.5, 0.3, 0.2], [0.2, 0.3, 0.5]])

inputs_log = torch.log(inputs)

target = torch.tensor([[0.9, 0.05, 0.05], [0.1, 0.7, 0.2]], dtype=torch.float)

loss_f_none = nn.KLDivLoss(reduction='none')

loss_f_mean = nn.KLDivLoss(reduction='mean')

loss_f_bs_mean = nn.KLDivLoss(reduction='batchmean')

loss_none = loss_f_none(inputs, target)

loss_mean = loss_f_mean(inputs, target)

loss_bs_mean = loss_f_bs_mean(inputs, target)

print("loss_none:\n{}\nloss_mean:\n{}\nloss_bs_mean:\n{}".format(loss_none, loss_mean, loss_bs_mean))

# --------------------------------- compute by hand

# flag = 0

flag = 1

if flag:

idx = 0

loss_1 = target[idx, idx] * (torch.log(target[idx, idx]) - inputs[idx, idx])

print("第一个元素loss:", loss_1)

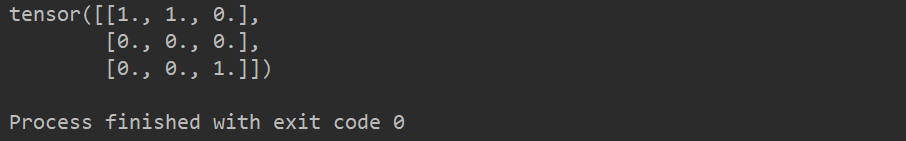

3.10 nn.MarginRankingLoss

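nn.MarginRankingLoss(margin=0.0, size_average=None, reduce=None, reduction='mean')

Function: computes a ranking loss between two inputs; used for ranking tasks

Special note: this method compares two groups of data; when x1 and x2 have shape (n, 1) and the target is broadcast across them, it returns an n*n loss matrix

Main parameters:

- margin: boundary value, the required gap between x1 and x2

- reduction: reduction mode, one of none/sum/mean

Loss function:

loss(x1, x2, y) = max(0, -y * (x1 - x2) + margin)

- y = 1: x1 is expected to be larger than x2; with margin = 0, no loss is produced when x1 > x2

- y = -1: x2 is expected to be larger than x1; with margin = 0, no loss is produced when x2 > x1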
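The formula can be checked element-wise when x1, x2 and y all share the same 1-D shape, so that no broadcasting is involved. This sketch reuses the values of this section's example, flattened to vectors; the flattening is a choice made here for clarity.

```python
import torch
import torch.nn as nn

x1 = torch.tensor([1.0, 2.0, 3.0])
x2 = torch.tensor([2.0, 2.0, 2.0])
y = torch.tensor([1.0, 1.0, -1.0])
margin = 0.0

builtin = nn.MarginRankingLoss(margin=margin, reduction='none')(x1, x2, y)

# max(0, -y * (x1 - x2) + margin), computed pair by pair
by_hand = torch.clamp(-y * (x1 - x2) + margin, min=0)
print(builtin, by_hand)
```

The first pair is penalized because y = 1 asks for x1 > x2 but 1 < 2; the second produces no loss at equality with zero margin; the third is penalized because y = -1 asks for x2 > x1 but 3 > 2.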

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 10 Margin Ranking Loss --------------------------------------------
# flag = 0
flag = 1
if flag:
    x1 = torch.tensor([[1], [2], [3]], dtype=torch.float)
    x2 = torch.tensor([[2], [2], [2]], dtype=torch.float)

    # shape (1, 3): broadcasts against the (3, 1) inputs to give the 3*3 loss matrix;
    # a 2-D target is used because recent PyTorch versions require all three tensors
    # to have the same number of dimensions
    target = torch.tensor([[1, 1, -1]], dtype=torch.float)

    loss_f_none = nn.MarginRankingLoss(margin=0, reduction='none')
    loss = loss_f_none(x1, x2, target)

    print(loss)

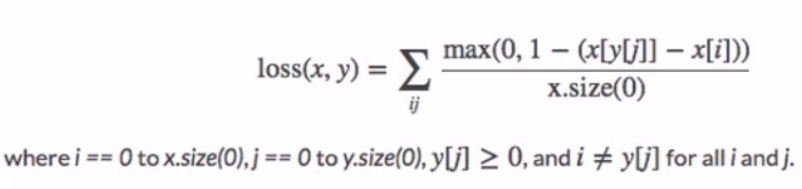

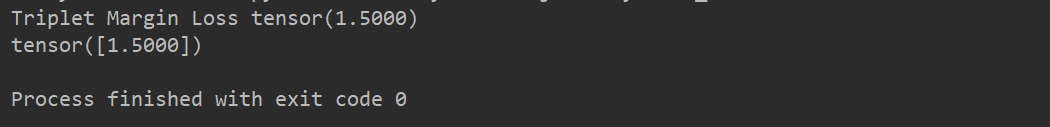

3.11 nn.MultiLabelMarginLoss

nn.MultiLabelMarginLoss(size_average=None, reduce=None, reduction='mean')

功能: 多标签边界损失函数

举例: 四分类任务,样本x属于0类和3类,

标签: [0, 3,-1,-1] ,不是[1,0,0,1]

主要参数:

- reduction:计算模式,可为none/sum/mean

- none-逐个元素计算

- sum-所有元素求和,返回标量

- mean-加权平均,返回标量

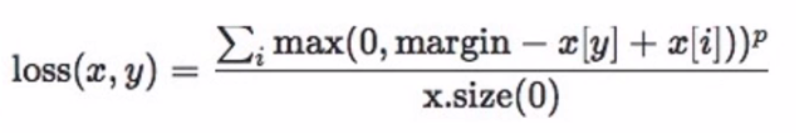

损失函数:

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 11 Multi Label Margin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    x = torch.tensor([[0.1, 0.2, 0.4, 0.8]])
    y = torch.tensor([[0, 3, -1, -1]], dtype=torch.long)

    loss_f = nn.MultiLabelMarginLoss(reduction='none')
    loss = loss_f(x, y)

    print(loss)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    x = x[0]
    # each target class (0 and 3) is compared with each non-target class (1 and 2)
    item_1 = (1-(x[0] - x[1])) + (1 - (x[0] - x[2]))  # [0]
    item_2 = (1-(x[3] - x[1])) + (1 - (x[3] - x[2]))  # [3]

    loss_h = (item_1 + item_2) / x.shape[0]
    print(loss_h)

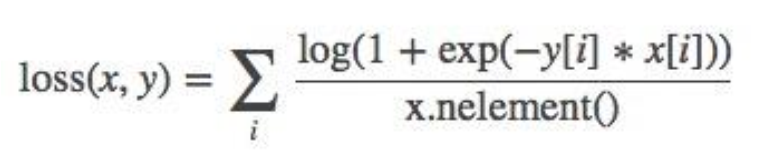

3.12 nn.SoftMarginLoss

nn.SoftMarginLoss(size_average=None, reduce=None, reduction='mean')

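Function: computes the logistic loss for binary classification

Main parameters:

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

loss(x, y) = sum_i log(1 + exp(-y[i] * x[i])) / x.nelement()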

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 12 SoftMargin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[0.3, 0.7], [0.5, 0.5]])
    target = torch.tensor([[-1, 1], [1, -1]], dtype=torch.float)

    loss_f = nn.SoftMarginLoss(reduction='none')
    loss = loss_f(inputs, target)

    print("SoftMargin: ", loss)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    idx = 0
    inputs_i = inputs[idx, idx]
    target_i = target[idx, idx]

    # log(1 + exp(-y * x)), computed with torch ops since the operands are tensors
    loss_h = torch.log(1 + torch.exp(-target_i * inputs_i))
    print(loss_h)

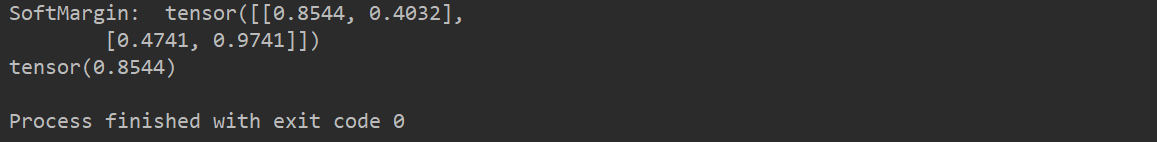

3.13 nn.MultiLabelSoftMarginLoss

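nn.MultiLabelSoftMarginLoss(weight=None, size_average=None, reduce=None, reduction='mean')

Function: the multi-label version of SoftMarginLoss

Main parameters:

- weight: per-class weights applied to the loss

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per sample

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

loss(x, y) = -(1/C) * sum_i [ y[i] * log(1 / (1 + exp(-x[i]))) + (1 - y[i]) * log(exp(-x[i]) / (1 + exp(-x[i]))) ]

where C is the number of classes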

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 13 MultiLabel SoftMargin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[0.3, 0.7, 0.8]])
    target = torch.tensor([[0, 1, 1]], dtype=torch.float)

    loss_f = nn.MultiLabelSoftMarginLoss(reduction='none')
    loss = loss_f(inputs, target)

    print("MultiLabel SoftMargin: ", loss)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    # label 0 uses the (1 - y) term; labels 1 use the y term
    i_0 = torch.log(torch.exp(-inputs[0, 0]) / (1 + torch.exp(-inputs[0, 0])))
    i_1 = torch.log(1 / (1 + torch.exp(-inputs[0, 1])))
    i_2 = torch.log(1 / (1 + torch.exp(-inputs[0, 2])))

    loss_h = (i_0 + i_1 + i_2) / -3
    print(loss_h)

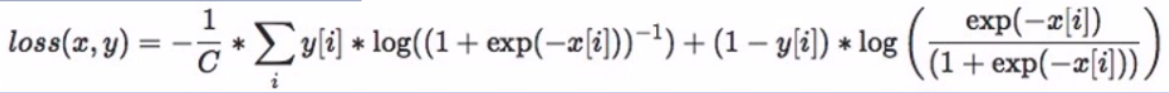

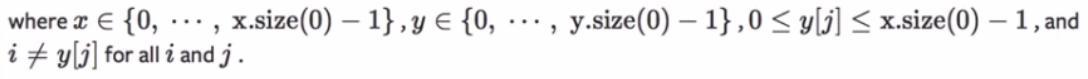

3.14 nn.MultiMarginLoss

nn.MultiMarginLoss(p=1, margin=1.0, weight=None, size_average=None, reduce=None, reduction='mean')

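Function: computes the hinge loss for multi-class classification

Main parameters:

- p: may be 1 or 2

- weight: per-class weights applied to the loss

- margin: boundary value

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per sample

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

loss(x, y) = sum_{i != y} max(0, margin - x[y] + x[i])^p / x.size(0)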

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 14 Multi Margin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    x = torch.tensor([[0.1, 0.2, 0.7], [0.2, 0.5, 0.3]])
    y = torch.tensor([1, 2], dtype=torch.long)

    loss_f = nn.MultiMarginLoss(reduction='none')
    loss = loss_f(x, y)

    print("Multi Margin Loss: ", loss)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    x = x[0]  # first sample, whose target class is 1
    margin = 1

    i_0 = margin - (x[1] - x[0])
    # i_1 = margin - (x[1] - x[1])  # the target class itself is skipped
    i_2 = margin - (x[1] - x[2])

    loss_h = (i_0 + i_2) / x.shape[0]
    print(loss_h)

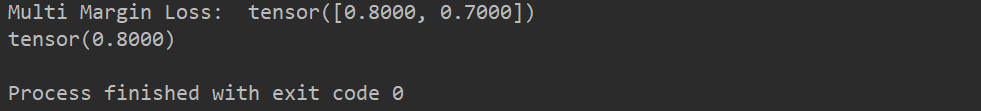

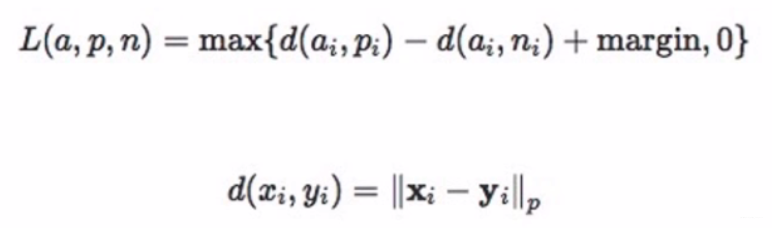

3.15 nn.TripletMarginLoss

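nn.TripletMarginLoss(margin=1.0, p=2.0, eps=1e-06, swap=False, size_average=None, reduce=None, reduction='mean')

Function: computes the triplet loss, commonly used in face verification

Main parameters:

- p: the order of the norm, 2 by default

- margin: boundary value

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per sample

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

L(a, p, n) = max(d(a, p) - d(a, n) + margin, 0), where d(x, y) = ||x - y||_p

During training, the anchor is expected to be close to the positive and far from the negative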

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 15 Triplet Margin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    anchor = torch.tensor([[1.]])
    pos = torch.tensor([[2.]])
    neg = torch.tensor([[0.5]])

    loss_f = nn.TripletMarginLoss(margin=1.0, p=1)
    loss = loss_f(anchor, pos, neg)

    print("Triplet Margin Loss", loss)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    margin = 1
    a, p, n = anchor[0], pos[0], neg[0]

    d_ap = torch.abs(a-p)  # distance anchor-positive (p=1 norm)
    d_an = torch.abs(a-n)  # distance anchor-negative

    # clamp at zero to match max(d_ap - d_an + margin, 0)
    loss = torch.clamp(d_ap - d_an + margin, min=0)
    print(loss)

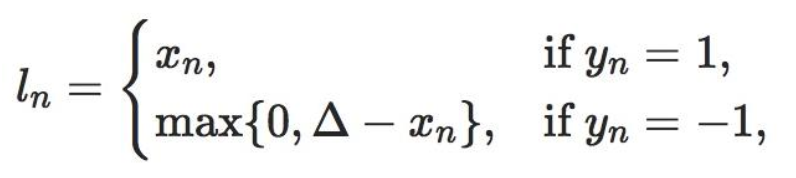

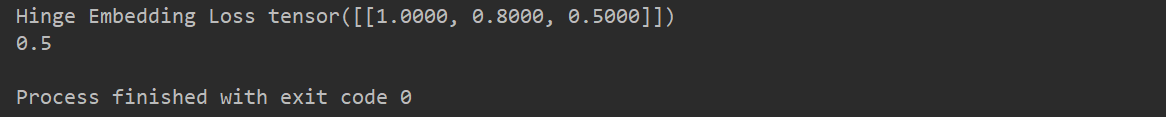

3.16 nn.HingeEmbeddingLoss

nn.HingeEmbeddingLoss(margin=1.0, size_average=None, reduce=None, reduction='mean')

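Function: computes the similarity of two inputs; commonly used for nonlinear embedding and semi-supervised learning

Important: the input x should be the absolute value of the difference between the two inputs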
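To illustrate what "the input is a distance" means in practice, the sketch below first reduces two made-up batches of embeddings to one L1 distance per pair and only then applies the loss. The embeddings `a` and `b` and the use of the L1 distance are assumptions made for this example.

```python
import torch
import torch.nn as nn

# Two hypothetical batches of 2-D embeddings, compared pair by pair
a = torch.tensor([[0.0, 0.0], [1.0, 1.0]])
b = torch.tensor([[0.3, 0.4], [1.0, 3.0]])

# Sum of absolute differences: one non-negative distance per pair
dist = torch.abs(a - b).sum(dim=1)

# y = 1 marks a similar pair, y = -1 a dissimilar pair
y = torch.tensor([1.0, -1.0])
margin = 1.0

loss = nn.HingeEmbeddingLoss(margin=margin, reduction='none')(dist, y)

# Similar pairs pay their distance; dissimilar pairs pay only if closer than margin
by_hand = torch.where(y == 1, dist, torch.clamp(margin - dist, min=0))
print(dist, loss)
```

The loss module itself never sees the two embeddings, only their distance, which is why the subtraction has to happen before the call.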

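Main parameters:

- margin: boundary value

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per element

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

l_n = x_n if y_n = 1, and l_n = max(0, margin - x_n) if y_n = -1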

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 16 Hinge Embedding Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[1., 0.8, 0.5]])
    target = torch.tensor([[1, 1, -1]])

    loss_f = nn.HingeEmbeddingLoss(margin=1, reduction='none')
    loss = loss_f(inputs, target)

    print("Hinge Embedding Loss", loss)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    margin = 1.
    # third element has y = -1, so its loss is max(0, margin - x)
    loss = max(0, margin - inputs.numpy()[0, 2])
    print(loss)

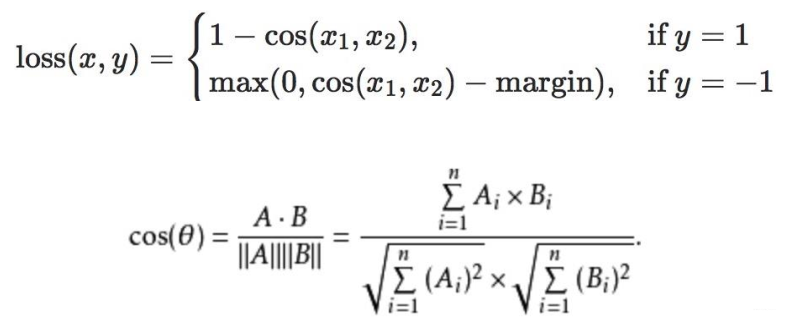

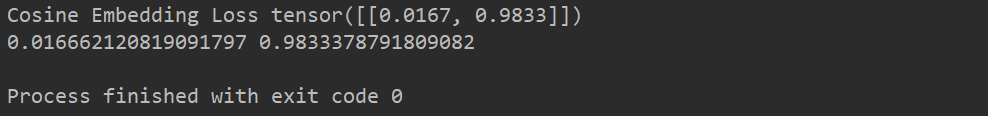

3.17 nn.CosineEmbeddingLoss

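nn.CosineEmbeddingLoss(margin=0.0, size_average=None, reduce=None, reduction='mean')

Function: computes the similarity of two inputs using cosine similarity

Main parameters:

- margin: may take values in [-1, 1]; [0, 0.5] is recommended

- reduction: reduction mode, one of none/sum/mean

  - none: compute the loss per sample

  - sum: sum over all elements, returns a scalar

  - mean: average, returns a scalar

Loss function:

loss(x1, x2, y) = 1 - cos(x1, x2) if y = 1, and max(0, cos(x1, x2) - margin) if y = -1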

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 17 Cosine Embedding Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    x1 = torch.tensor([[0.3, 0.5, 0.7], [0.3, 0.5, 0.7]])
    x2 = torch.tensor([[0.1, 0.3, 0.5], [0.1, 0.3, 0.5]])

    # one label per pair of rows; the target is 1-D with shape (N,)
    target = torch.tensor([1, -1], dtype=torch.float)

    loss_f = nn.CosineEmbeddingLoss(margin=0., reduction='none')
    loss = loss_f(x1, x2, target)

    print("Cosine Embedding Loss", loss)

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    margin = 0.

    def cosine(a, b):
        numerator = torch.dot(a, b)
        denominator = torch.norm(a, 2) * torch.norm(b, 2)
        return float(numerator/denominator)

    l_1 = 1 - (cosine(x1[0], x2[0]))                # y = 1
    l_2 = max(0, cosine(x1[0], x2[0]) - margin)     # y = -1

    print(l_1, l_2)

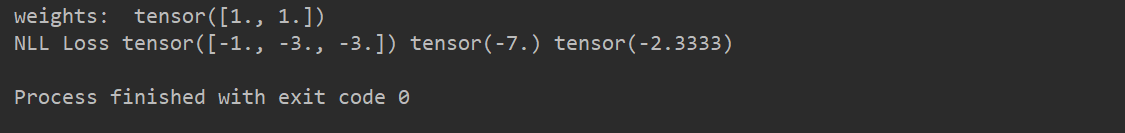

3.18 nn.CTCLoss

torch.nn.CTCLoss(blank=0, reduction='mean', zero_infinity=False)

Function: computes the CTC (Connectionist Temporal Classification) loss, used for classifying sequential data

Main parameters:

- blank: the blank label

- zero_infinity: whether to set infinite losses and their gradients to 0

- reduction: reduction mode, one of none/sum/mean

For the loss function, see:

A. Graves et al.: Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from tools.common_tools import set_seed

# ---------------------------------------------- 18 CTC Loss -----------------------------------------
flag = 0
# flag = 1
if flag:
    T = 50      # Input sequence length
    C = 20      # Number of classes (including blank)
    N = 16      # Batch size
    S = 30      # Target sequence length of longest target in batch
    S_min = 10  # Minimum target length, for demonstration purposes

    # Initialize random batch of input vectors, for *size = (T,N,C)
    inputs = torch.randn(T, N, C).log_softmax(2).detach().requires_grad_()

    # Initialize random batch of targets (0 = blank, 1:C = classes)
    target = torch.randint(low=1, high=C, size=(N, S), dtype=torch.long)

    input_lengths = torch.full(size=(N,), fill_value=T, dtype=torch.long)
    target_lengths = torch.randint(low=S_min, high=S, size=(N,), dtype=torch.long)

    ctc_loss = nn.CTCLoss()
    loss = ctc_loss(inputs, target, input_lengths, target_lengths)

    print("CTC loss: ", loss)