Installing and Testing PyTorch on Windows 7

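On April 25, PyTorch officially added support for installation on Windows. I installed it right away; below I share the installation process and a test example.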


Install Anaconda first. For instructions, see: https://blog.csdn.net/zyb228/article/details/77995520

Version: Anaconda3 with Python 3.5 is sufficient.

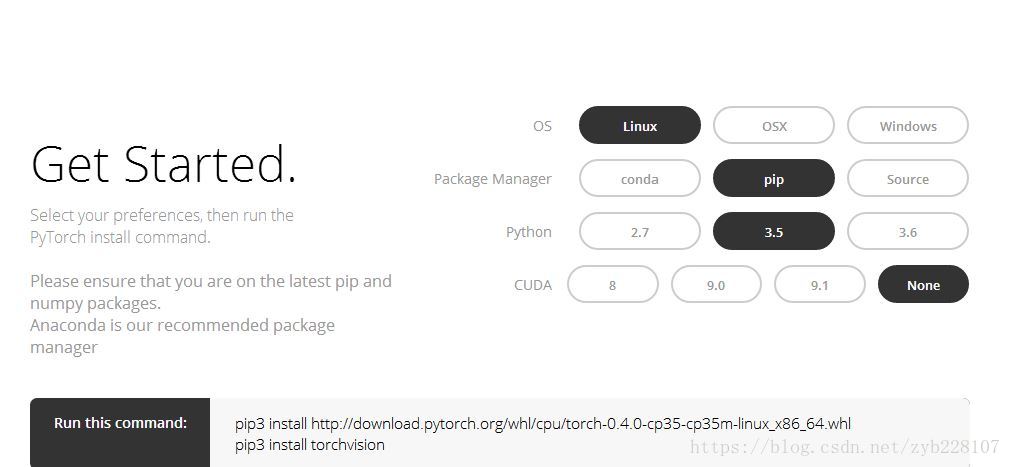

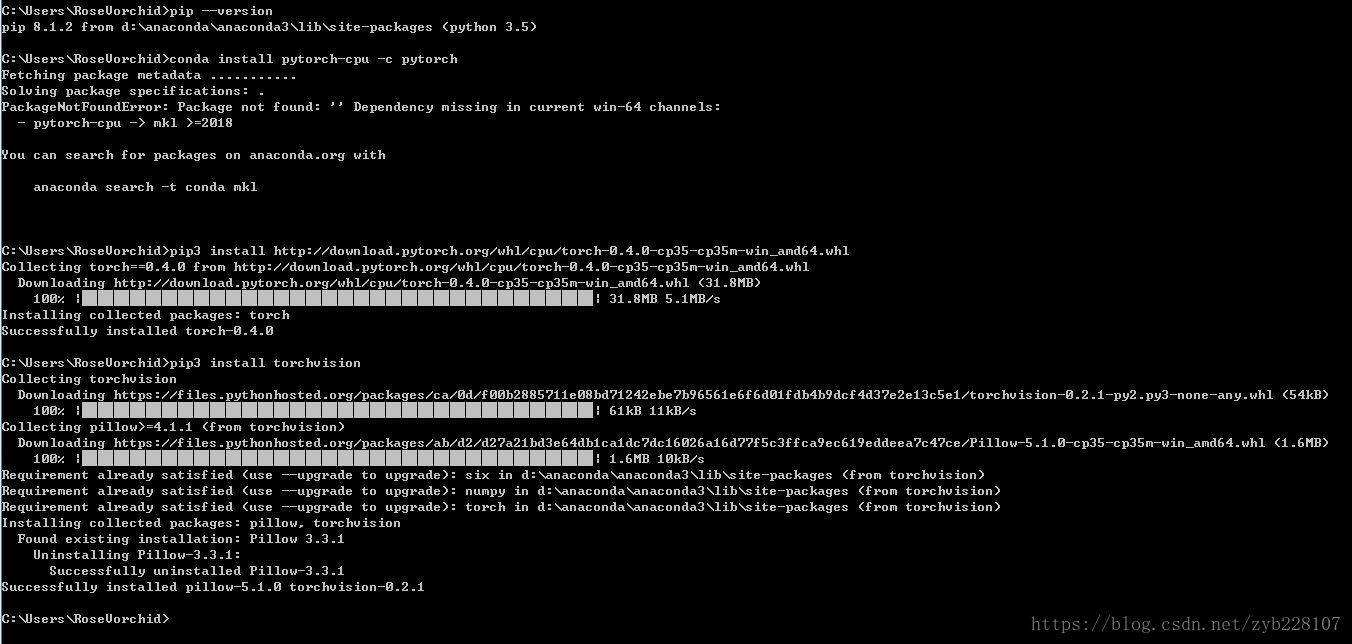

Installing PyTorch:

Installing with conda was very slow and kept failing, so this post installs with pip3 instead (see the screenshot).
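Once pip3 finishes, it can help to confirm that the packages the test script imports are actually visible to the interpreter before running it. The following is only a minimal sketch: the helper name `installed_version` is hypothetical (it is not part of PyTorch or pip), and it simply looks each package up by name and reads its `__version__` attribute if there is one.

```python
import importlib
import importlib.util


def installed_version(package_name):
    """Return the package's __version__, "unknown" if it has none,
    or None if the package cannot be found at all."""
    if importlib.util.find_spec(package_name) is None:
        return None
    module = importlib.import_module(package_name)
    return getattr(module, "__version__", "unknown")


# The three third-party packages used by the test script in this post.
for name in ("torch", "numpy", "matplotlib"):
    version = installed_version(name)
    print(name, "->", version if version is not None else "not installed")
```

If `torch` is reported as not installed, the pip3 step most likely went into a different Python environment than the one running the script.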

Test:

    import torch
    import torch.nn as nn
    import numpy as np
    import matplotlib.pyplot as plt
    from torch.autograd import Variable

    # Hyperparameters
    input_size = 1
    output_size = 1
    num = 60
    learning_rate = 0.002

    # Build the training data
    x_train = np.array([[6.93], [4.168], [4.2], [5.5], [3.3], [6.71],
                        [9.79], [6.3], [7.2], [5.13], [7.997],
                        [10.71], [7.59], [2.167], [3.1]], dtype=np.float32)
    y_train = np.array([[1.7], [2.76], [2.596], [2.53], [1.573],
                        [3.366], [1.221], [1.65], [2.904], [1.694],
                        [3.465], [2.827], [2.09], [3.19], [1.3]], dtype=np.float32)

    # Linear regression model
    class LRegression(nn.Module):
        def __init__(self, input_size, output_size):
            super(LRegression, self).__init__()
            self.linear = nn.Linear(input_size, output_size)

        def forward(self, x):
            out = self.linear(x)
            return out

    model = LRegression(input_size, output_size)
    loss_criterion = nn.MSELoss()

    # Optimizer
    optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

    # Train the model
    for i in range(num):
        # Convert the ndarrays to Variables
        inputs = Variable(torch.from_numpy(x_train))
        targets = Variable(torch.from_numpy(y_train))

        # Clear old gradients, then run the forward pass
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = loss_criterion(outputs, targets)

        # Backward pass
        loss.backward()

        # Update the parameters
        optimizer.step()

        if (i + 1) % 5 == 0:
            # loss.data[0] is the older idiom; newer PyTorch releases use
            # loss.item() instead (see the PyTorch 1.3 version later in this post).
            print('%d/%d, Loss: %.4f' % (i + 1, num, loss.data[0]))

    # Plot the result
    inputs = Variable(torch.from_numpy(x_train))
    pred = model(inputs).data.numpy()
    plt.plot(x_train, y_train, 'ro', label='Original data')
    plt.plot(x_train, pred, label='Fitted line')
    plt.legend()
    plt.show()

Note: the code was taken from the internet.
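The training loop in the script repeats the same steps on every iteration: forward pass, loss, backward pass, parameter update. As a rough illustration of what those steps compute for a one-feature linear model, here is a framework-free sketch in plain Python. It reuses the data and hyperparameters from the script; the function names `mse` and `gradients` are hypothetical, and starting from `w = b = 0` is an assumption made for reproducibility (`nn.Linear` starts from random values, so the printed losses will not match the PyTorch run).

```python
# Same 15 (x, y) pairs as x_train / y_train in the script.
xs = [6.93, 4.168, 4.2, 5.5, 3.3, 6.71, 9.79, 6.3,
      7.2, 5.13, 7.997, 10.71, 7.59, 2.167, 3.1]
ys = [1.7, 2.76, 2.596, 2.53, 1.573, 3.366, 1.221, 1.65,
      2.904, 1.694, 3.465, 2.827, 2.09, 3.19, 1.3]


def mse(w, b):
    """Mean squared error of the line y = w*x + b (nn.MSELoss averages by default)."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradients(w, b):
    """Derivatives of the MSE with respect to w and b (the role of loss.backward())."""
    n = len(xs)
    grad_w = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return grad_w, grad_b


num = 60
learning_rate = 0.002
w, b = 0.0, 0.0  # assumption: zero start instead of nn.Linear's random init
losses = []

for i in range(num):
    loss = mse(w, b)                  # forward pass and loss
    grad_w, grad_b = gradients(w, b)  # backward pass
    w -= learning_rate * grad_w       # plain SGD update (the role of optimizer.step())
    b -= learning_rate * grad_b
    losses.append(loss)
    if (i + 1) % 5 == 0:
        print('%d/%d, Loss: %.4f' % (i + 1, num, loss))
```

Because the whole data set is used on every step, this is full-batch gradient descent, which is also what the PyTorch loop does here since it feeds all 15 points at once.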

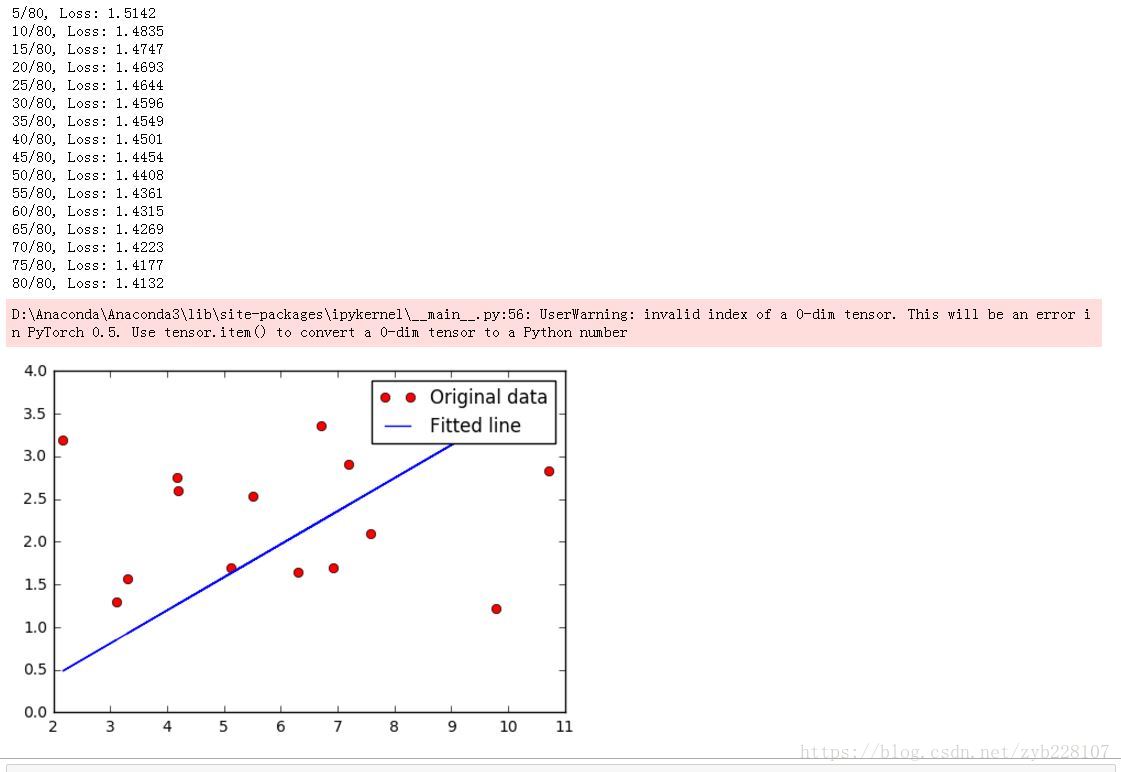

Result:

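Installation test example for the latest version, PyTorch 1.3:

The training code was updated by blogger lyjiang1, based on testing against a fresh installation of the latest version.

Thanks to lyjiang1!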

    import torch
    import torch.nn as nn
    import numpy as np
    import matplotlib.pyplot as plt
    from torch.autograd import Variable

    # Hyperparameters
    input_size = 1
    output_size = 1
    num = 60
    learning_rate = 0.002

    # Build the training data
    x_train = np.array([[6.93], [4.168], [4.2], [5.5], [3.3], [6.71],
                        [9.79], [6.3], [7.2], [5.13], [7.997],
                        [10.71], [7.59], [2.167], [3.1]], dtype=np.float32)
    y_train = np.array([[1.7], [2.76], [2.596], [2.53], [1.573],
                        [3.366], [1.221], [1.65], [2.904], [1.694],
                        [3.465], [2.827], [2.09], [3.19], [1.3]], dtype=np.float32)

    # Linear regression model
    class LRegression(nn.Module):
        def __init__(self, input_size, output_size):
            super(LRegression, self).__init__()
            self.linear = nn.Linear(input_size, output_size)

        def forward(self, x):
            out = self.linear(x)
            return out

    model = LRegression(input_size, output_size)
    loss_criterion = nn.MSELoss()

    # Optimizer
    optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

    # Train the model
    # This training code was updated by lyjiang1 for the latest PyTorch version.
    # Special thanks again to lyjiang1!
    for i in range(num):
        # Convert the ndarrays to Variables
        inputs = Variable(torch.from_numpy(x_train))
        targets = Variable(torch.from_numpy(y_train))

        # Clear old gradients, then run the forward pass
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = loss_criterion(outputs, targets)

        # Backward pass
        loss.backward()

        # Update the parameters
        optimizer.step()

        # a = loss.data[0]  # old versions
        a = loss.item()  # new versions
        if (i + 1) % 5 == 0:
            print('%d/%d, Loss: %.4f' % (i + 1, num, a))

    # Plot the result
    inputs = Variable(torch.from_numpy(x_train))
    pred = model(inputs).data.numpy()
    plt.plot(x_train, y_train, 'ro', label='Original data')
    plt.plot(x_train, pred, label='Fitted line')
    plt.legend()
    plt.show()
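For a one-feature model like this, the line that training is trying to approach also has a closed-form least-squares solution, which can serve as a rough reference for the "Fitted line" in the plot. The sketch below is an addition to the original post and uses NumPy's `np.polyfit` on the same data, flattened to 1-D arrays. After only 60 SGD steps at this learning rate, the trained weights need not match it closely.

```python
import numpy as np

# Same data as x_train / y_train, flattened to 1-D for np.polyfit.
x = np.array([6.93, 4.168, 4.2, 5.5, 3.3, 6.71, 9.79, 6.3,
              7.2, 5.13, 7.997, 10.71, 7.59, 2.167, 3.1])
y = np.array([1.7, 2.76, 2.596, 2.53, 1.573, 3.366, 1.221, 1.65,
              2.904, 1.694, 3.465, 2.827, 2.09, 3.19, 1.3])

# A degree-1 fit returns the coefficients highest power first: [slope, intercept].
slope, intercept = np.polyfit(x, y, 1)

# Residuals of the least-squares line; their mean square is the lowest
# MSE any straight line can reach on this data.
residuals = slope * x + intercept - y

print('slope: %.4f, intercept: %.4f' % (slope, intercept))
print('MSE at the least-squares line: %.4f' % np.mean(residuals ** 2))
```

Comparing this MSE with the last loss printed by the training loop gives a quick sense of how far the 60 iterations have converged.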
