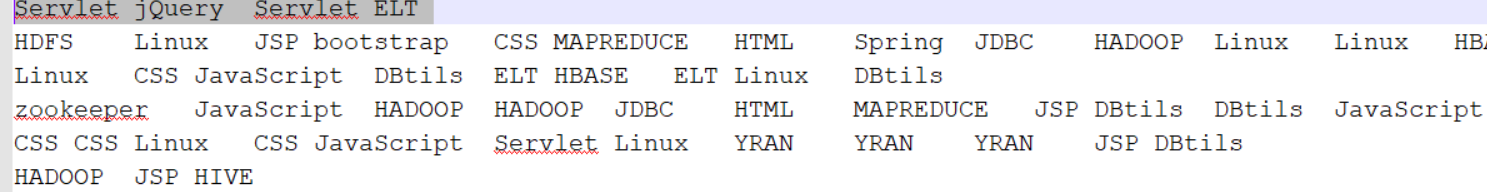

As usual, let's start with what the data looks like.

The data is tab-separated (\t) and encoded as UTF-8.

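1. Programming idea

MapReduce groups records by key during the shuffle, so identical keys are merged automatically. To deduplicate, emit each item as the key and set the value to null (NullWritable). It is very simple: think of it as wordcount without the count value.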
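To make the idea concrete, here is a minimal plain-Java sketch that imitates the three phases without any Hadoop dependency. The class name `DedupSketch`, its method names, and the sample lines are assumptions made up for illustration; a `TreeMap` stands in for the shuffle, which groups and sorts keys before they reach the reducer.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class DedupSketch {

    // "Map" phase: split each tab-separated line and emit every field as a key.
    static List<String> mapPhase(List<String> lines) {
        List<String> keys = new ArrayList<>();
        for (String line : lines) {
            keys.addAll(Arrays.asList(line.split("\t")));
        }
        return keys;
    }

    // "Shuffle" phase: group identical keys together, sorted by key.
    // The values carry no information, so only a count per key is kept here.
    static Map<String, Integer> shufflePhase(List<String> keys) {
        Map<String, Integer> groups = new TreeMap<>();
        for (String k : keys) {
            groups.merge(k, 1, Integer::sum);
        }
        return groups;
    }

    // "Reduce" phase: invoked once per distinct key; emit the key, ignore the values.
    static List<String> reducePhase(Map<String, Integer> groups) {
        return new ArrayList<>(groups.keySet());
    }

    static List<String> distinct(List<String> lines) {
        return reducePhase(shufflePhase(mapPhase(lines)));
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hello\tworld", "hello\thadoop", "world");
        System.out.println(distinct(lines)); // prints [hadoop, hello, world]
    }
}
```

Because the reducer sees each key exactly once, no explicit "have I seen this before" check is needed; the grouping step does the deduplication.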

2. Code:

package com.hnxy.mr.distinct;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class DistinctWord2 extends Configured implements Tool {

public static class DistinctWord2Mapper extends Mapper<LongWritable, Text, Text, NullWritable> {

private Text outkey = new Text();

private NullWritable outval = NullWritable.get();

String[] star = null;

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, NullWritable>.Context context)

throws IOException, InterruptedException {

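// Deduplication logic: split each line on tabs and emit every field as a key with a null value; the shuffle then groups identical keys

star = value.toString().split("\t");

for (String s : star) {

outkey.set(s);

// Write inside the loop so every field is emitted, not only the last one

context.write(outkey, outval);

}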

}

}

public static class DistinctWord2Reducer extends Reducer<Text, NullWritable, Text, NullWritable> {

private Text outkey = new Text();

private NullWritable outval = NullWritable.get();

@Override

protected void reduce(Text key, Iterable<NullWritable> values,

Reducer<Text, NullWritable, Text, NullWritable>.Context context)

throws IOException, InterruptedException {

// Each distinct key arrives here exactly once, so write it straight out and ignore the values

context.write(key, outval);

}

}

@Override

public int run(String[] args) throws Exception {

// Get the Configuration object

Configuration conf = this.getConf();

// Create the Job object

Job job = Job.getInstance(conf, "job");

// Class used to locate the jar that contains this job

job.setJarByClass(DistinctWord2.class);

// Mapper and Reducer classes

job.setMapperClass(DistinctWord2Mapper.class);

job.setReducerClass(DistinctWord2Reducer.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(NullWritable.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

// Set the input and output paths

Path in = new Path(args[0]);

Path out = new Path(args[1]);

FileInputFormat.addInputPath(job, in);

FileOutputFormat.setOutputPath(job, out);

// Get a FileSystem handle and delete the output path if it already exists

FileSystem fs = FileSystem.get(conf);

if (fs.exists(out)) {

fs.delete(out, true);

System.out.println(job.getJobName() + "'s Path output is deleted");

}

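// Run the job and wait for it to finish

boolean con = job.waitForCompletion(true);

if (con) {

System.out.println("ok");

} else {

System.out.println("fail");

}

// Report failure to the caller through a non-zero exit code

return con ? 0 : 1;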

}

public static void main(String[] args) throws Exception {

// Launch through ToolRunner so that generic Hadoop options (such as -D and -conf) are parsed

System.exit(ToolRunner.run(new DistinctWord2(), args));

}

}
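One detail of the mapper worth checking is how `String.split("\t")` treats empty fields, since an empty field becomes an empty key in the output. The sketch below is plain Java; the class `SplitSketch` and the helper `nonEmptyFields` are hypothetical names used only for illustration, and skipping empty fields is an optional choice, not something the original job does.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class SplitSketch {

    // Same split the mapper uses.
    static String[] fields(String line) {
        return line.split("\t");
    }

    // Hypothetical helper: keep only fields that are not empty.
    static List<String> nonEmptyFields(String line) {
        List<String> result = new ArrayList<>();
        for (String f : line.split("\t")) {
            if (!f.isEmpty()) {
                result.add(f);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Trailing empty fields are dropped by split.
        System.out.println(Arrays.toString(fields("a\tb\t\t"))); // prints [a, b]
        // Empty fields in the middle are kept as empty strings.
        System.out.println(fields("a\t\tb").length);             // prints 3
        // An empty line yields a single empty string.
        System.out.println(fields("").length);                   // prints 1
        // Filtering removes the empty strings.
        System.out.println(nonEmptyFields("a\t\tb"));            // prints [a, b]
    }
}
```

If blank lines or empty columns can occur in the input, a check like `isEmpty()` before `context.write` would keep an empty line out of the deduplicated result.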