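Table of contents

- 1. Preface

- 2. Official documentation

- 3. Concepts

- 4. Architecture

- 5. Discovering services [Service publishing types]

- 5.1. ClusterIP type

- 5.1.1. Case study 1: accessing the Pods from inside the cluster

- 5.1.1.1. Create the configuration file whoami-deployment.yaml

- 5.1.1.2. kubectl apply -f whoami-deployment.yaml [start]

- 5.1.1.3. kubectl get pods -o wide [inspect]

- 5.1.1.4. kubectl expose deployment whoami-deployment [expose the Deployment whoami-deployment as a Service named whoami-deployment]

- 5.1.1.5. Accessing the Service through its cluster IP

- 5.1.1.6. kubectl describe svc {service_name} [show Service details]

- 5.1.1.7. kubectl scale deployment whoami-deployment --replicas=5 [scale out the Pods]

- 5.1.1.8. Check the Service's endpoints again: two more have been added

- 5.1.1.9. List all Pods and test access through the Service

- 5.1.1.10. Summary

- 5.1.2. Case study 2: creating a Service from a YAML file

- 5.1.3. Case study 3: kubectl delete svc {service_name} [delete a Service]

- 5.2. NodePort type [external access to the Pods]

- 5.3. Ingress type [external access to the Pods]

- Appendix: installing lsof, a tool for inspecting ports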

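1. Preface

1. Everything below is demonstrated on a working Kubernetes cluster built in an earlier article, so you need a clustered Kubernetes environment first.

2. If you do not have a cluster yet, see the following guide: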

https://blog.csdn.net/u014636209/article/details/103752870

2. Official documentation

https://kubernetes.io/docs/concepts/services-networking/service/

3. Concepts

4. Architecture

5. Discovering services [Service publishing types]

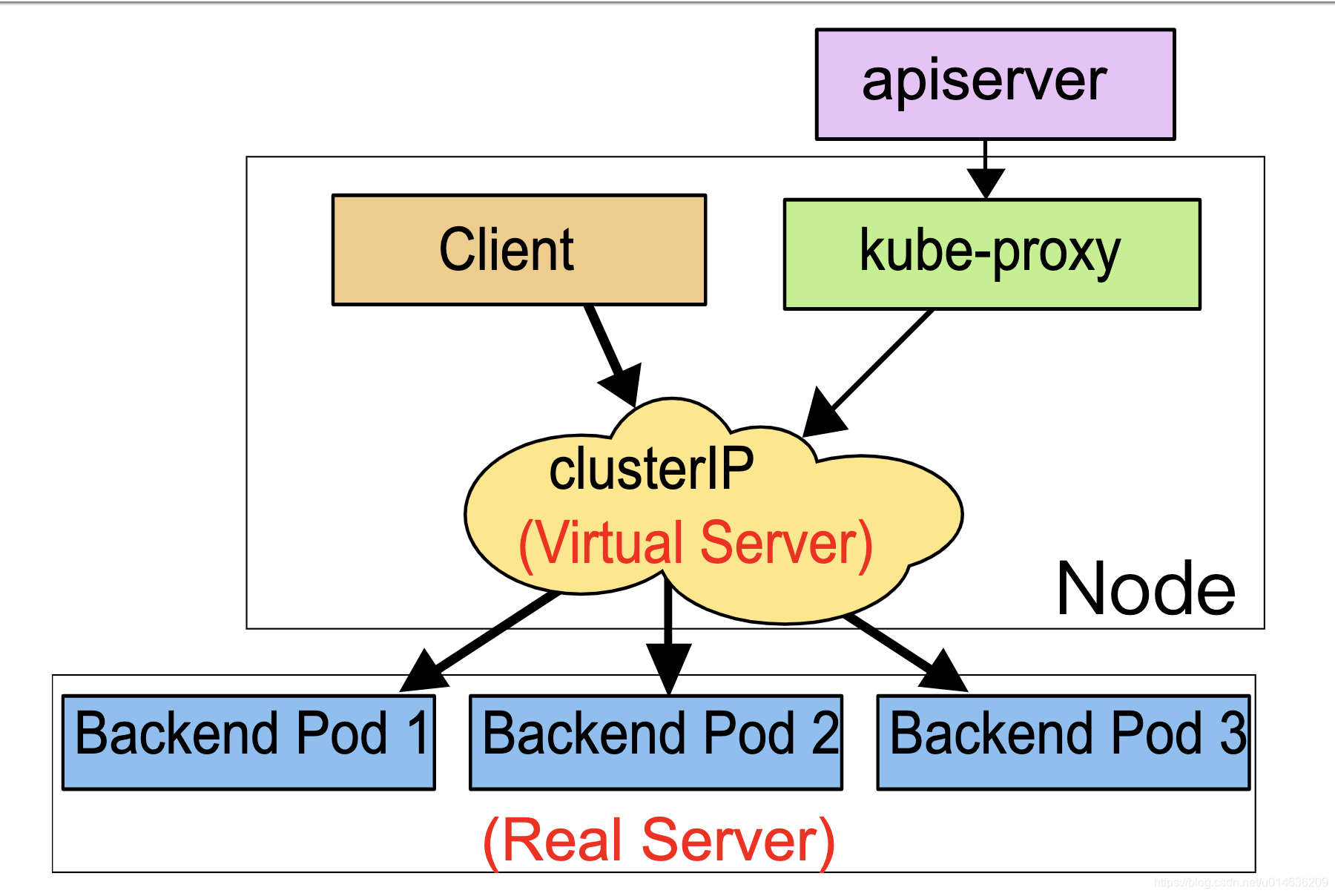

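1. A Service is a way to expose a set of running Pods as a network service.

2. When Pods are created through a Deployment, the replicas field defines how many Pods run in the cluster.

When a Pod dies or stops, a replacement Pod is created.

Each Pod has its own IP, so the set of Pod IPs, and with it the set of load-balancing targets, keeps changing.

As the workload is scaled out, Pods are added and new IPs appear.

Can we put one fixed IP and port in front of this whole class of Pods and load-balance across them?

That is exactly what a Service does.

5.1. ClusterIP type

This is the default Service type. It mainly solves the problem of reaching the Pods from inside the cluster.

5.1.1. Case study 1: accessing the Pods from inside the cluster

5.1.1.1. Create the configuration file whoami-deployment.yaml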

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whoami-deployment
      labels:
        app: whoami
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: whoami
      template:
        metadata:
          labels:
            app: whoami
        spec:
          containers:
          - name: whoami
            image: jwilder/whoami
            ports:
            - containerPort: 8000

5.1.1.2. kubectl apply -f whoami-deployment.yaml [start]

[root@manager-node demo]# vi whoami-deployment.yaml

[root@manager-node demo]# kubectl apply -f whoami-deployment.yaml

deployment.apps/whoami-deployment created

5.1.1.3. kubectl get pods -o wide [inspect]

[root@manager-node demo]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

whoami-deployment-678b64444d-5m44j 0/1 ContainerCreating 0 10s <none> worker02-node <none> <none>

whoami-deployment-678b64444d-6bgrm 0/1 ContainerCreating 0 10s <none> worker01-node <none> <none>

whoami-deployment-678b64444d-sxwfl 0/1 ContainerCreating 0 10s <none> worker01-node <none> <none>

[root@manager-node demo]#

5.1.1.4. kubectl expose deployment whoami-deployment [expose the Deployment whoami-deployment as a Service named whoami-deployment]

[root@manager-node demo]# kubectl expose deployment whoami-deployment

service/whoami-deployment exposed

[root@manager-node demo]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6d10h

whoami-deployment ClusterIP 10.107.193.103 <none> 8000/TCP 14s

[root@manager-node demo]#

**Notes**

1. By default, kubectl expose deployment whoami-deployment creates a Service for the Deployment. Because no Service name is given, the new Service gets the same name as the Deployment.

2. The kubectl get svc output above confirms that the Service has been created.

3. So this is one of the ways to create a Service.
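The kubectl expose call above can also be written declaratively. The manifest below is a sketch of a roughly equivalent Service: the selector and ports are taken from the Deployment in 5.1.1.1, while the file name whoami-service.yaml is only an assumed name for illustration.

```yaml
# whoami-service.yaml (assumed file name, not part of the original walkthrough)
apiVersion: v1
kind: Service
metadata:
  name: whoami-deployment   # kubectl expose reuses the Deployment name by default
  labels:
    app: whoami
spec:
  type: ClusterIP           # the default type, written out only for clarity
  selector:
    app: whoami             # must match the labels in the Pod template
  ports:
    - protocol: TCP
      port: 8000            # port exposed on the cluster IP
      targetPort: 8000      # containerPort of the whoami container
```

Applying it with kubectl apply -f whoami-service.yaml should give a Service comparable to the one listed by kubectl get svc, although the cluster IP assigned to it will differ.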

5.1.1.5. Accessing the Service through its cluster IP

[root@manager-node demo]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6d10h

whoami-deployment ClusterIP 10.107.193.103 <none> 8000/TCP 14s

[root@manager-node demo]#

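1. The output above shows that the Service whoami-deployment has been created and has its own address, 10.107.193.103:8000. We can now reach the Pods behind whoami-deployment through this address from inside the cluster, that is, from any cluster node or Pod. Even if the IPs of the Pods behind the Service change, callers are not affected, because the Service load-balances across whichever Pods currently match its selector.

2. Note that a cluster IP is a virtual IP. It normally works only on the nodes and Pods of this cluster, where kube-proxy has set up the forwarding rules. A machine outside the cluster cannot use it just because it sits on the same internal network and can ping the nodes.

This step only provides load balancing inside the cluster.

3. Demonstration: requests sent from the master node are distributed across the three Pods.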

[root@manager-node demo]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

whoami-deployment-678b64444d-5m44j 1/1 Running 0 19m 192.168.38.78 worker02-node <none> <none>

whoami-deployment-678b64444d-6bgrm 1/1 Running 0 19m 192.168.101.31 worker01-node <none> <none>

whoami-deployment-678b64444d-sxwfl 1/1 Running 0 19m 192.168.101.30 worker01-node <none> <none>

[root@manager-node demo]#

[root@manager-node demo]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-sxwfl

[root@manager-node demo]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-6bgrm

[root@manager-node demo]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-5m44j

[root@manager-node demo]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-6bgrm

[root@manager-node demo]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-6bgrm

[root@manager-node demo]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-sxwfl

[root@manager-node demo]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-5m44j

[root@manager-node demo]#

5.1.1.6. kubectl describe svc {service_name} [show Service details]

[root@manager-node ~]# kubectl describe svc whoami-deployment

Name: whoami-deployment

Namespace: default

Labels: app=whoami

Annotations: <none>

Selector: app=whoami

Type: ClusterIP

IP: 10.107.193.103

Port: <unset> 8000/TCP

TargetPort: 8000/TCP

Endpoints: 192.168.101.36:8000,192.168.101.37:8000,192.168.38.81:8000

Session Affinity: None

Events: <none>

[root@manager-node ~]#

Notes

1. The Service's IP fronts these Pods (Endpoints): 192.168.101.36:8000, 192.168.101.37:8000, 192.168.38.81:8000

5.1.1.7. kubectl scale deployment whoami-deployment --replicas=5 [scale out the Pods]

[root@manager-node ~]# kubectl scale deployment whoami-deployment --replicas=5

deployment.extensions/whoami-deployment scaled

[root@manager-node ~]#
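The same scale-out can also be expressed declaratively, by raising replicas in the Deployment manifest and applying it again. A sketch, reusing the manifest from 5.1.1.1 with only the replica count changed:

```yaml
# whoami-deployment.yaml with the replica count raised from 3 to 5
apiVersion: apps/v1
kind: Deployment
metadata:
  name: whoami-deployment
  labels:
    app: whoami
spec:
  replicas: 5               # was 3; everything else is unchanged
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
        - name: whoami
          image: jwilder/whoami
          ports:
            - containerPort: 8000
```

Running kubectl apply -f whoami-deployment.yaml again should then add two Pods, with the advantage that the file on disk keeps matching what runs in the cluster.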

5.1.1.8. Check the Service's endpoints again: two more have been added

[root@manager-node ~]# kubectl describe svc whoami-deployment

Name: whoami-deployment

Namespace: default

Labels: app=whoami

Annotations: <none>

Selector: app=whoami

Type: ClusterIP

IP: 10.107.193.103

Port: <unset> 8000/TCP

TargetPort: 8000/TCP

Endpoints: 192.168.101.36:8000,192.168.101.37:8000,192.168.38.81:8000 + 2 more...

Session Affinity: None

Events: <none>

[root@manager-node ~]#

5.1.1.9. List all Pods and test access through the Service

[root@manager-node ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

whoami-deployment-678b64444d-5m44j 1/1 Running 3 10h

whoami-deployment-678b64444d-6bgrm 1/1 Running 3 10h

whoami-deployment-678b64444d-jls6m 1/1 Running 0 5m18s

whoami-deployment-678b64444d-m9dsj 1/1 Running 0 5m18s

whoami-deployment-678b64444d-sxwfl 1/1 Running 3 10h

Clearly, whoami-deployment-678b64444d-jls6m and whoami-deployment-678b64444d-m9dsj above were created successfully.

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-sxwfl

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-sxwfl

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-jls6m

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-6bgrm

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-6bgrm

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-sxwfl

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-m9dsj

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-m9dsj

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-6bgrm

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-jls6m

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-6bgrm

[root@manager-node ~]# curl http://10.107.193.103:8000

I'm whoami-deployment-678b64444d-5m44j

[root@manager-node ~]#

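5.1.1.10. Summary

1. The ClusterIP type mainly solves access from inside the cluster. It is not suitable when clients outside the cluster need to reach the service.

2. For external access, see the NodePort and Ingress types below.

5.1.2. Case study 2: creating a Service from a YAML file

my-sevice.yaml [create the configuration file]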

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      selector:
        app: MyApp
      ports:
      - protocol: TCP
        port: 80
        targetPort: 9376

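Notes

1. The configuration above creates a Service object named "my-service". It forwards requests to TCP port 9376 on any Pod carrying the label "app=MyApp". Kubernetes assigns the Service an IP address (sometimes called the "cluster IP"), which is used by the service proxies.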
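For this Service to have any endpoints, some Pods must carry the label app=MyApp and listen on port 9376; none of the workloads in this article do. The following is only a sketch of such a workload. The Deployment name my-app and the image registry.example.com/my-app:1.0 are hypothetical placeholders, not part of the original setup.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                      # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: MyApp
  template:
    metadata:
      labels:
        app: MyApp                  # matched by the selector of my-service
    spec:
      containers:
        - name: my-app
          # placeholder image, assumed to serve on port 9376
          image: registry.example.com/my-app:1.0
          ports:
            - containerPort: 9376   # matches targetPort in my-service
```

With Pods like these running, kubectl describe svc my-service would be expected to list their IPs on port 9376 under Endpoints.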

kubectl apply -f my-sevice.yaml [start and inspect]

[root@manager-node demo]# kubectl apply -f my-sevice.yaml

service/my-service created

[root@manager-node demo]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6d20h

my-service ClusterIP 10.98.189.250 <none> 80/TCP 10s

whoami-deployment ClusterIP 10.107.193.103 <none> 8000/TCP 10h

[root@manager-node demo]#

The Service has been created, as this row shows:

my-service ClusterIP 10.98.189.250 <none> 80/TCP 10s

5.1.3. Case study 3: kubectl delete svc {service_name} [delete a Service]

[root@manager-node demo]# kubectl delete svc whoami-deployment

service "whoami-deployment" deleted

Note that deleting a Service does not delete its Pods.

[root@manager-node demo]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

whoami-deployment-678b64444d-5m44j 1/1 Running 3 10h 192.168.38.81 worker02-node <none> <none>

whoami-deployment-678b64444d-6bgrm 1/1 Running 3 10h 192.168.101.37 worker01-node <none> <none>

whoami-deployment-678b64444d-jls6m 1/1 Running 0 24m 192.168.38.83 worker02-node <none> <none>

whoami-deployment-678b64444d-m9dsj 1/1 Running 0 24m 192.168.38.82 worker02-node <none> <none>

whoami-deployment-678b64444d-sxwfl 1/1 Running 3 10h 192.168.101.36 worker01-node <none> <none>

[root@manager-node demo]#

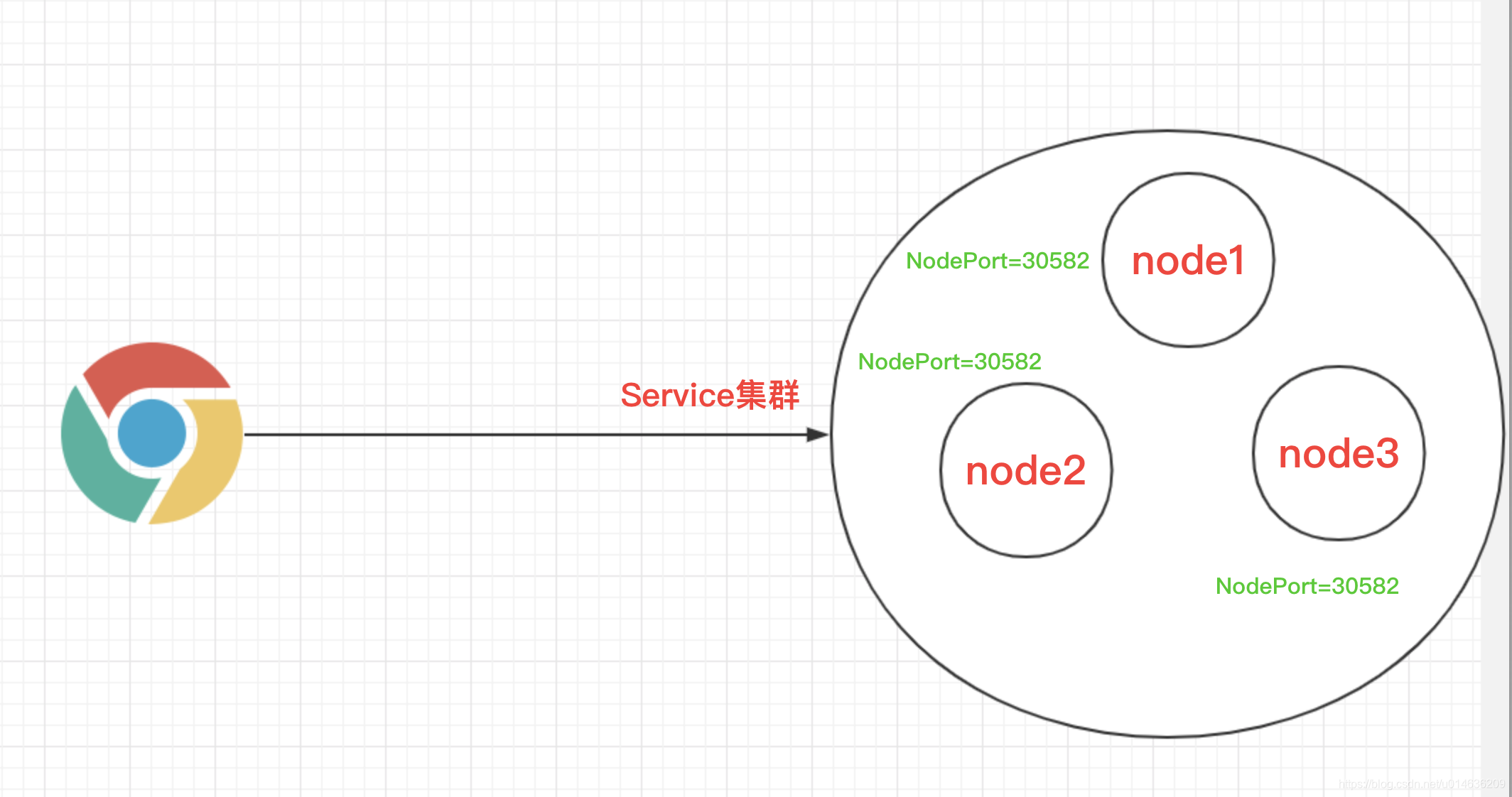

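5.2. NodePort type [external access to the Pods]

5.2.1. Overview

1. For some parts of an application, you may want to expose a Service on an external IP address, reachable from outside the Kubernetes cluster.

2. Put simply, an external client usually cannot reach the IPs and ports inside the Pod network, but it can reach the IP of the physical machine.

In that case we can open a port on the physical machine to let requests in, and map that host IP and port to the Service's port inside the cluster,

which gives access to the Pods.

3. To solve this, we look at the Service publishing types other than ClusterIP.

5.2.2. Example 1

Create the configuration file whoami-nodeport-deployment.yaml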

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whoami-nodeport-deployment
      labels:
        app: whoami-nodeport
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: whoami-nodeport
      template:
        metadata:
          labels:
            app: whoami-nodeport
        spec:
          containers:
          - name: whoami
            image: jwilder/whoami
            ports:
            - containerPort: 8000

kubectl apply -f whoami-nodeport-deployment.yaml [start]

[root@manager-node demo]# vi whoami-nodeport-deployment.yaml

[root@manager-node demo]# kubectl apply -f whoami-nodeport-deployment.yaml

deployment.apps/whoami-nodeport-deployment created

[root@manager-node demo]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

whoami-nodeport-deployment-79f9f8947d-h9cmm 1/1 Running 0 8s 192.168.101.43 worker01-node <none> <none>

whoami-nodeport-deployment-79f9f8947d-mgw8k 1/1 Running 0 8s 192.168.38.85 worker02-node <none> <none>

whoami-nodeport-deployment-79f9f8947d-qddpd 1/1 Running 0 8s 192.168.38.86 worker02-node <none> <none>

[root@manager-node demo]#

kubectl expose deployment whoami-nodeport-deployment --type=NodePort [expose the Deployment as a Service of type NodePort]

[root@manager-node demo]# kubectl expose deployment whoami-nodeport-deployment --type=NodePort

service/whoami-nodeport-deployment exposed

[root@manager-node demo]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8d

whoami-nodeport-deployment NodePort 10.105.66.198 <none> 8000:30582/TCP 7s

[root@manager-node demo]#

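Note: port 30582 was allocated automatically (by default from the range 30000-32767); a specific port can also be requested.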
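To pin the port instead of letting Kubernetes pick one, the Service can be written as a manifest with an explicit nodePort. The following is a sketch rather than the author's method; it reuses the names and labels of the Deployment above and, for illustration, the port number 30582 that was allocated in this run.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: whoami-nodeport-deployment
spec:
  type: NodePort
  selector:
    app: whoami-nodeport    # labels of the Pods created by the Deployment
  ports:
    - protocol: TCP
      port: 8000            # port on the cluster IP
      targetPort: 8000      # containerPort of the whoami container
      nodePort: 30582       # must lie in the node port range (30000-32767 by default)
```

If the requested port is already taken by another Service, the API server rejects the manifest, so a fixed port trades convenience for the need to track which ports are in use.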

lsof -i tcp:30582 [show the process using the port]

[root@manager-node demo]# lsof -i tcp:30582

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

kube-prox 4423 root 11u IPv6 316107 0t0 TCP *:30582 (LISTEN)

[root@worker01-node ~]# lsof -i tcp:30582

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

kube-prox 4182 root 11u IPv6 39819 0t0 TCP *:30582 (LISTEN)

[root@worker01-node ~]#

[root@worker02-node ~]# lsof -i tcp:30582

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

kube-prox 3533 root 11u IPv6 34812 0t0 TCP *:30582 (LISTEN)

[root@worker02-node ~]#

All three machines open port 30582 on the host.

Connection test

Note: my computer was struggling, so I shut down worker02-node; all running Pods are now on worker01-node.

[root@manager-node demo]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

whoami-nodeport-deployment-79f9f8947d-6j2gv 1/1 Running 0 32m 192.168.101.45 worker01-node <none> <none>

whoami-nodeport-deployment-79f9f8947d-h9cmm 1/1 Running 0 55m 192.168.101.43 worker01-node <none> <none>

whoami-nodeport-deployment-79f9f8947d-hp6rg 1/1 Running 0 32m 192.168.101.44 worker01-node <none> <none>

whoami-nodeport-deployment-79f9f8947d-mgw8k 1/1 Terminating 0 55m 192.168.38.85 worker02-node <none> <none>

whoami-nodeport-deployment-79f9f8947d-qddpd 1/1 Terminating 0 55m 192.168.38.86 worker02-node <none> <none>

[root@manager-node demo]#

From the master node I send requests to port 30582 and see them spread across different Pods behind the Service, so load balancing works.

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-6j2gv

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-6j2gv

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-6j2gv

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-6j2gv

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-h9cmm

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-6j2gv

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-h9cmm

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-hp6rg

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-hp6rg

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-6j2gv

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-hp6rg

[root@manager-node demo]# curl 192.168.1.111:30582

I'm whoami-nodeport-deployment-79f9f8947d-6j2gv

[root@manager-node demo]# curl 192.168.1.112:3058

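Summary

Advantages

1. With the master node's IP and the port above (that is, a host IP and host port), we can reach the Pods that the Service load-balances across.

2. This gives load-balanced access for requests coming from outside.

Disadvantages

1. Every node has to open an additional port, which may later collide with ports needed by other applications.

2. For that reason this approach is not recommended.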

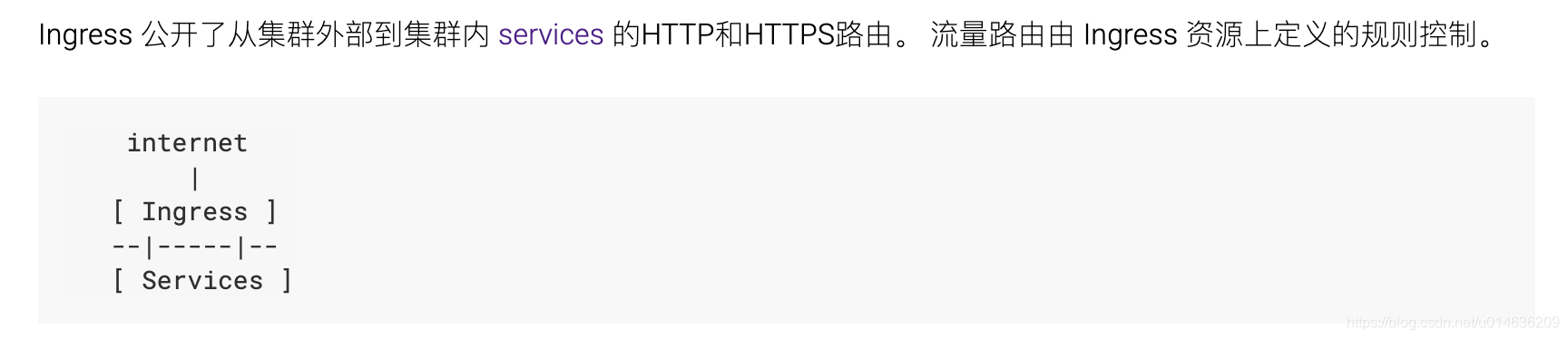

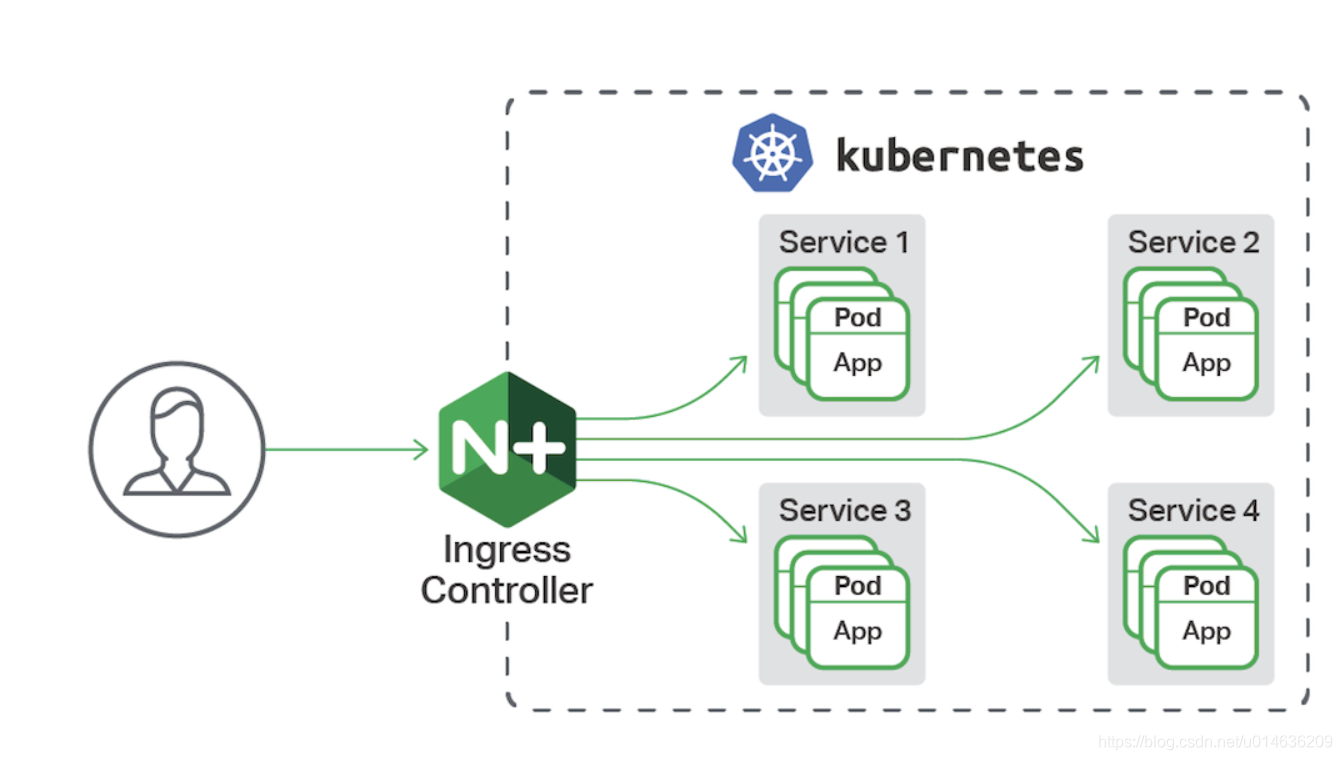

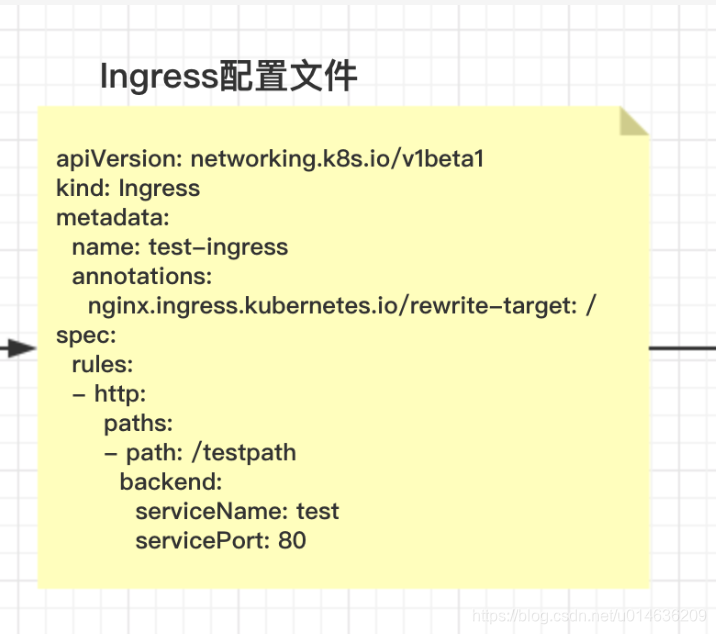

5.3. Ingress type [external access to the Pods]

5.3.1. Official documentation

https://kubernetes.io/docs/concepts/services-networking/ingress/

5.3.2. Overview

5.3.3. Prerequisite: an ingress controller

You must have an ingress controller to satisfy an Ingress. Only creating an Ingress resource has no effect.

You may need to deploy an Ingress controller such as ingress-nginx. You can choose from a number of Ingress controllers.

Ideally, all Ingress controllers should fit the reference specification. In reality, the various Ingress controllers operate slightly differently.


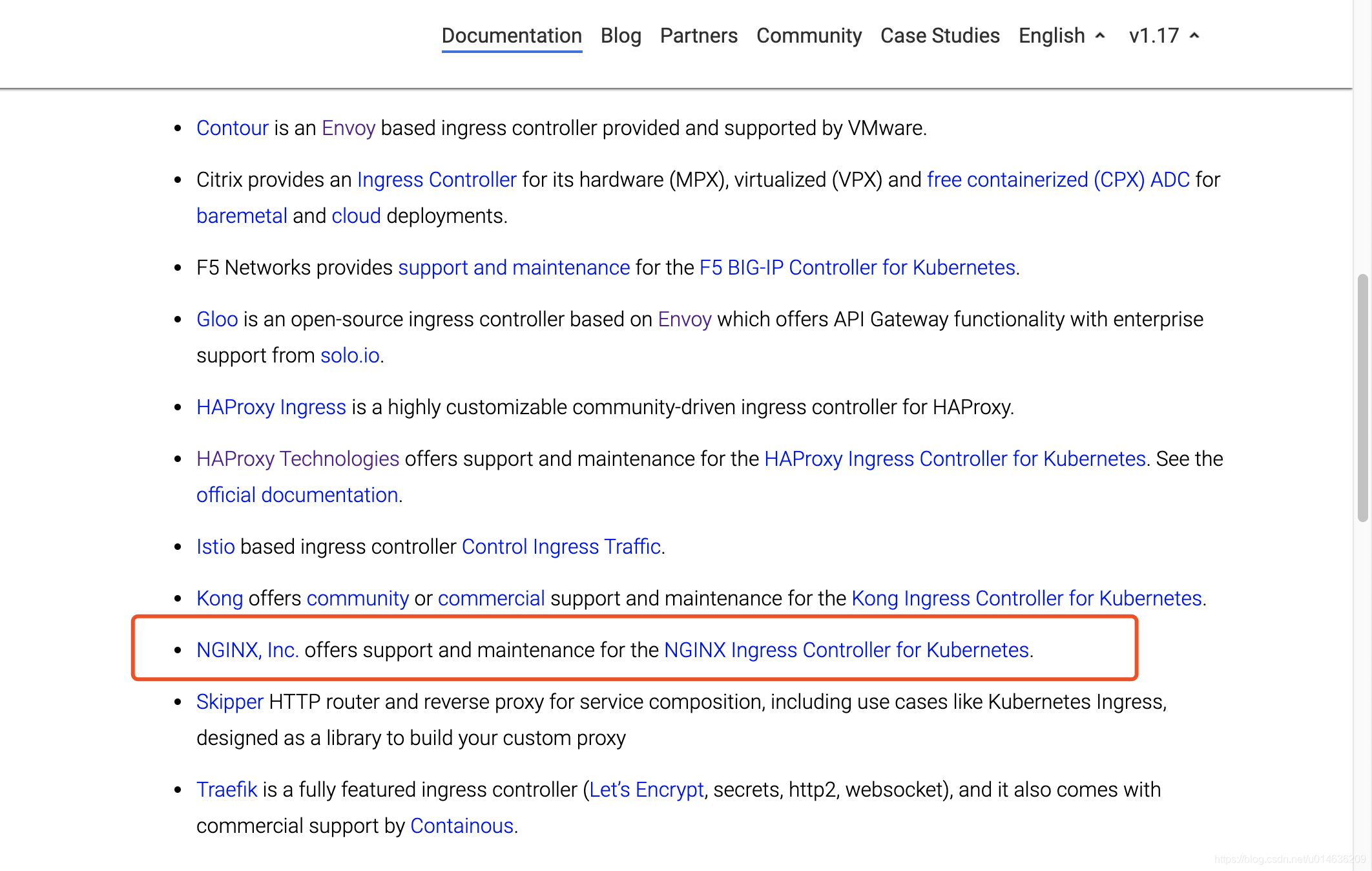

**There are many ingress controller implementations (see the link below); we use the NGINX Ingress controller.**

https://kubernetes.io/docs/concepts/services-networking/ingress-controllers/

5.3.4. ingress-nginx source code on GitHub

https://github.com/kubernetes/ingress-nginx

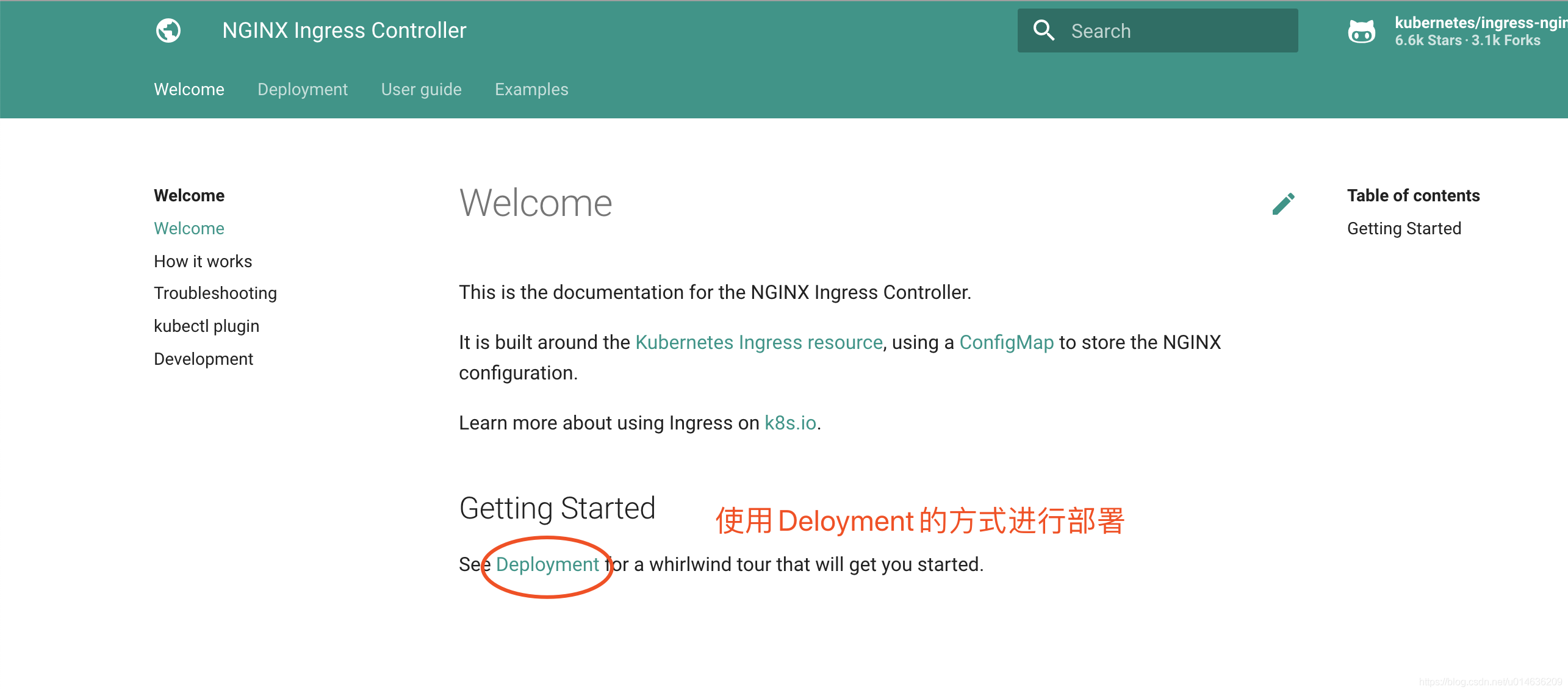

5.3.5 ingress-nginx controller在线文档

官网

https://kubernetes.github.io/ingress-nginx/

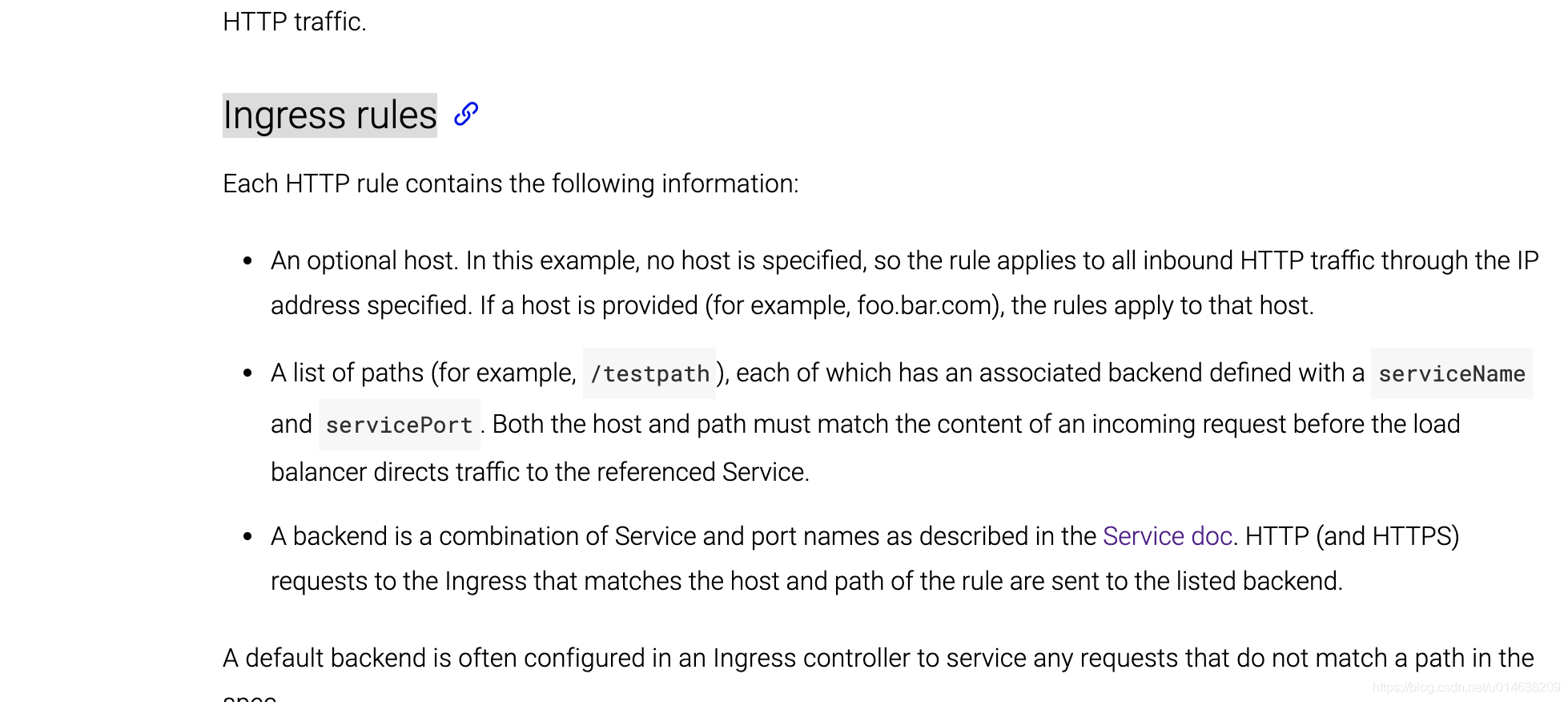

ingress配置规则概述

https://kubernetes.io/docs/concepts/services-networking/ingress/

安装步骤

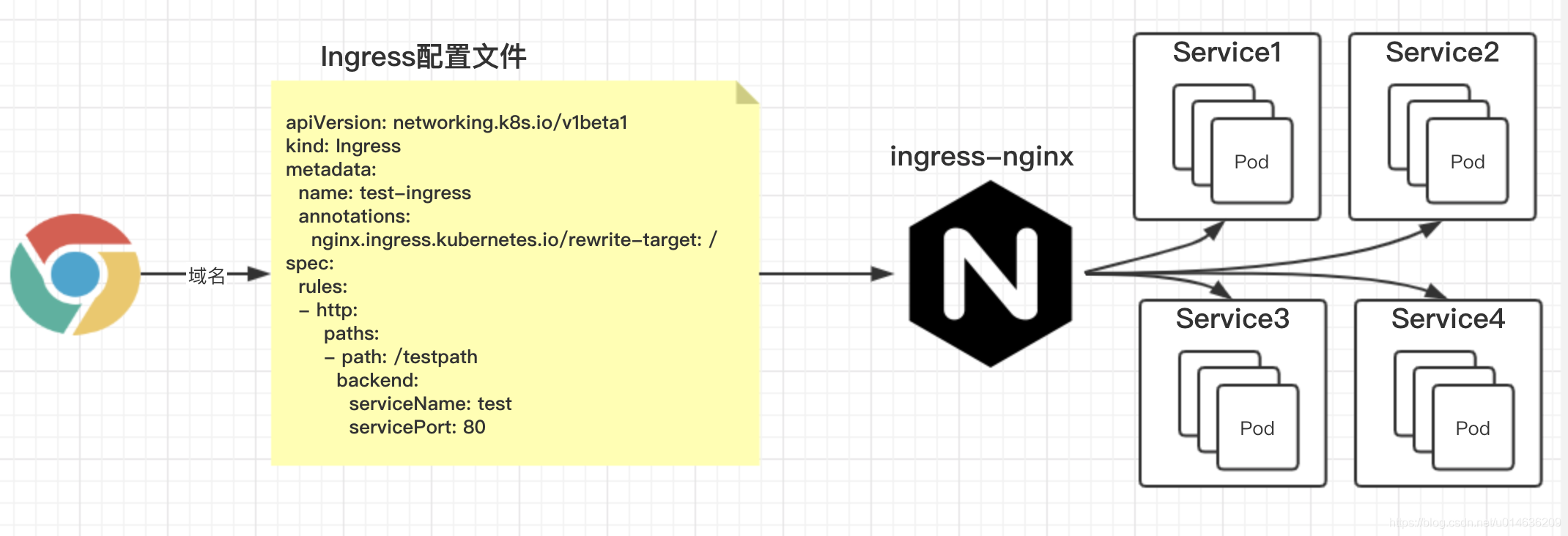

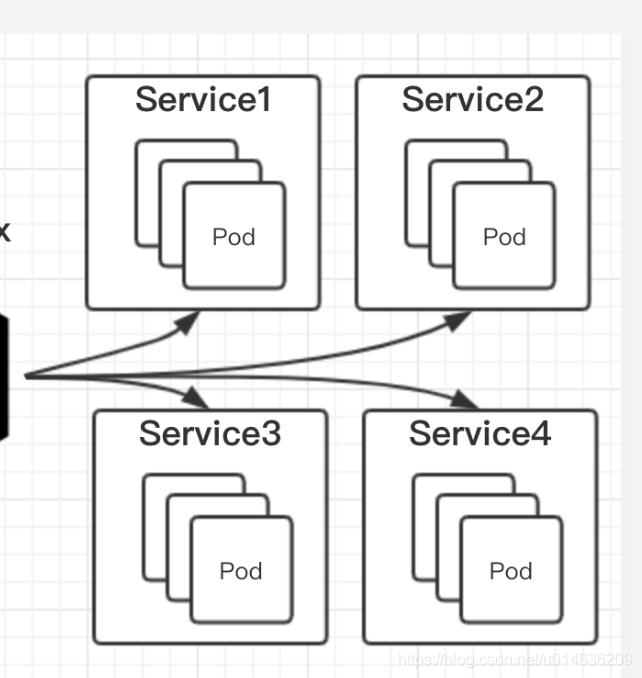

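5.3.6. Architecture and request flow

1. As noted above, ingress-nginx can simply be installed and configured.

2. An Ingress resource plays a role similar to nginx's nginx.conf: it declares the domain name (host) and which Service a request for that domain is routed to,

and it can also declare request paths (path) and so on.

The rules in the Ingress resource are carried out by the ingress-nginx controller we install, which does the actual routing.

3. From then on we only need to write Ingress resources. The controller is deployed on one chosen host, and clients reach the services through the domain name.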
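To make the host and path rules concrete, here is a minimal sketch of an Ingress that routes two paths of one domain to two different Services. The host example.local and the Service names app-a-service and app-b-service are hypothetical, chosen only for illustration. The sketch uses the networking.k8s.io/v1 API, which is available from Kubernetes 1.19 on and needs a controller release that understands it.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-routing             # hypothetical name
spec:
  rules:
    - host: example.local           # hypothetical domain
      http:
        paths:
          - path: /app-a
            pathType: Prefix        # match /app-a and everything below it
            backend:
              service:
                name: app-a-service # hypothetical Service
                port:
                  number: 80
          - path: /app-b
            pathType: Prefix
            backend:
              service:
                name: app-b-service # hypothetical Service
                port:
                  number: 80
```

The controller turns each rule into proxy configuration, which is why the comparison with nginx.conf is a reasonable mental model.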

5.3.7. Example 1

5.3.7.1. Create the configuration file my-tomcat.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: tomcat-deployment
      labels:
        app: tomcat
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: tomcat
      template:
        metadata:
          labels:
            app: tomcat
        spec:
          containers:
          - name: tomcat
            image: tomcat
            ports:
            - containerPort: 8080
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: tomcat-service
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 8080
      selector:
        app: tomcat

The second document in the file defines the Service.

5.3.7.2. Start

[root@manager-node ingress-nginx]# kubectl apply -f my-tomcat.yaml

deployment.apps/tomcat-deployment created

service/tomcat-service created

[root@manager-node ingress-nginx]#

[root@manager-node ingress-nginx]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

tomcat-deployment-6b9d6f8547-jfqw9 0/1 ContainerCreating 0 91s <none> worker01-node <none> <none>

[root@manager-node ingress-nginx]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

tomcat-deployment 0/1 1 0 98s

[root@manager-node ingress-nginx]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11d

tomcat-service ClusterIP 10.109.202.14 <none> 80/TCP 4m

whoami-nodeport-deployment NodePort 10.105.66.198 <none> 8000:30582/TCP 3d21h

[root@manager-node ingress-nginx]# kubectl describe svc tomcat-service

Name: tomcat-service

Namespace: default

Labels: <none>

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"tomcat-service","namespace":"default"},"spec":{"ports":[{"port":8...

Selector: app=tomcat

Type: ClusterIP

IP: 10.109.202.14

Port: <unset> 80/TCP

TargetPort: 8080/TCP

Endpoints:

Session Affinity: None

Events: <none>

[root@manager-node ingress-nginx]#

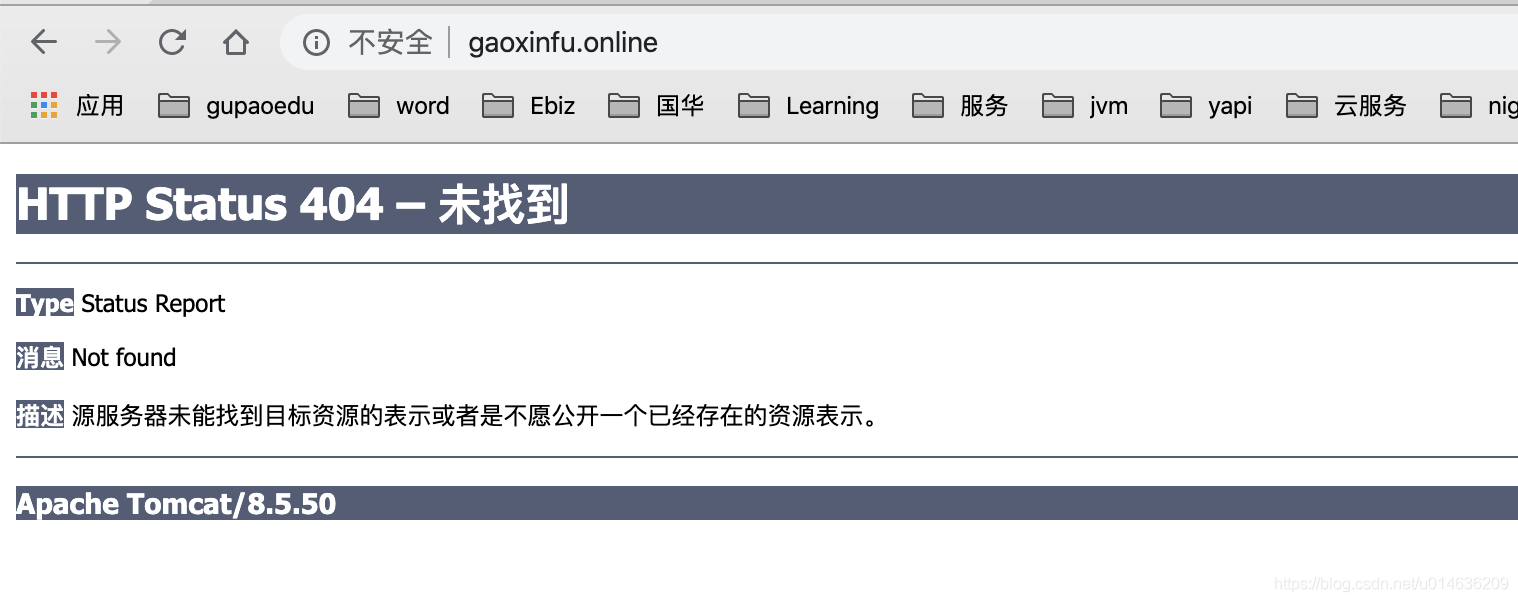

5.3.7.3. Verifying the page from a node

Verify via the Pod IP

[root@manager-node ingress-nginx]# curl 192.168.101.51:8080

<!doctype html><html lang="en"><head><title>HTTP Status 404 – Not Found</title><style type="text/css">body {font-family:Tahoma,Arial,sans-serif;} h1, h2, h3, b {color:white;background-color:#525D76;} h1 {font-size:22px;} h2 {font-size:16px;} h3 {font-size:14px;} p {font-size:12px;} a {color:black;} .line {height:1px;background-color:#525D76;border:none;}</style></head><body><h1>HTTP Status 404 – Not Found</h1><hr class="line" /><p><b>Type</b> Status Report</p><p><b>Message</b> Not found</p><p><b>Description</b> The origin server did not find a current representation for the target resource or is not willing to disclose that one exists.</p><hr class="line" /><h3>Apache Tomcat/8.5.50</h3></body></html>[root@manager-node ingress-nginx]#

Verify via the Service IP

[root@manager-node ingress-nginx]# curl 10.109.202.14

<!doctype html><html lang="en"><head><title>HTTP Status 404 – Not Found</title><style type="text/css">body {font-family:Tahoma,Arial,sans-serif;} h1, h2, h3, b {color:white;background-color:#525D76;} h1 {font-size:22px;} h2 {font-size:16px;} h3 {font-size:14px;} p {font-size:12px;} a {color:black;} .line {height:1px;background-color:#525D76;border:none;}</style></head><body><h1>HTTP Status 404 – Not Found</h1><hr class="line" /><p><b>Type</b> Status Report</p><p><b>Message</b> Not found</p><p><b>Description</b> The origin server did not find a current representation for the target resource or is not willing to disclose that one exists.</p><hr class="line" /><h3>Apache Tomcat/8.5.50</h3></body></html>[root@manager-node ingress-nginx]#

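Note: I am not sure why this returns 404. A likely cause is that the tomcat image ships with an empty webapps directory, so there is nothing to serve at /. The response does come from Apache Tomcat/8.5.50, so for now we treat it as success: the request reached the container.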

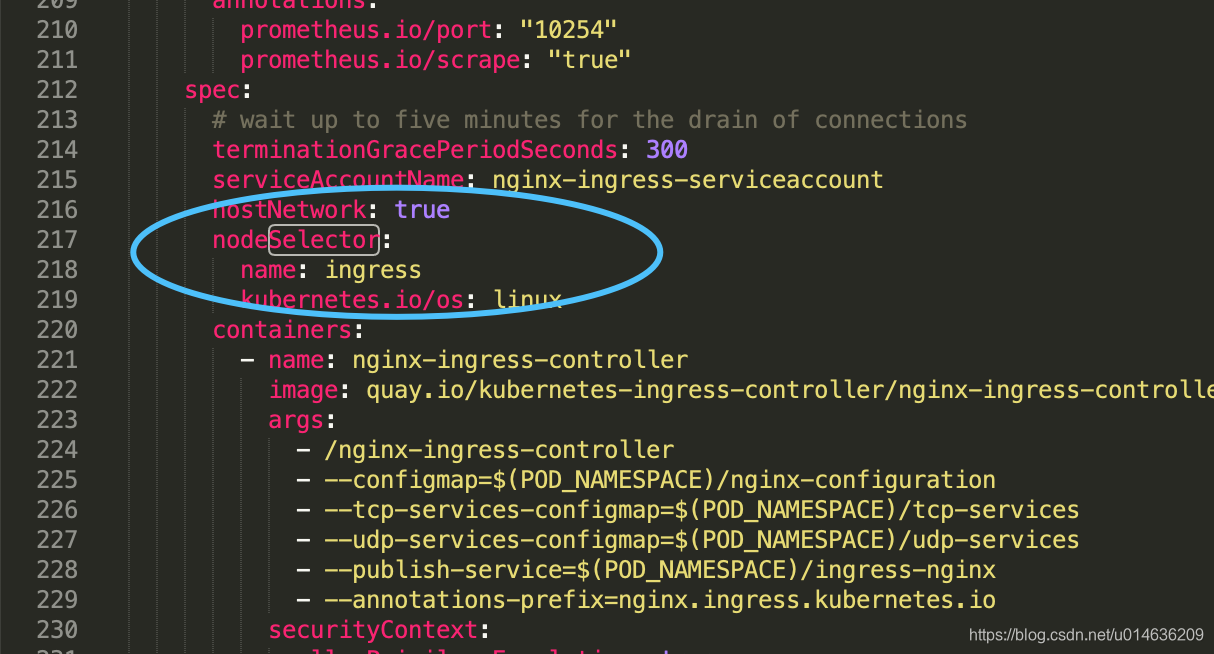

5.3.7.4. Label the node that will run the controller: kubectl label node worker01-node name=ingress

[root@manager-node ingress-nginx]# kubectl label node worker01-node name=ingress

node/worker01-node labeled

[root@manager-node ingress-nginx]#

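1. This gives the node worker01-node the label name=ingress (key name, value ingress), which is used to place the ingress controller on that node.

5.3.7.5. Install the ingress-nginx service

5.3.7.5.1. Create the configuration file mandatory.yaml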

    apiVersion: v1
    kind: Namespace
    metadata:
      name: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-configuration
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: tcp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: udp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - endpoints
          - nodes
          - pods
          - secrets
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - services
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - events
        verbs:
          - create
          - patch
      - apiGroups:
          - "extensions"
          - "networking.k8s.io"
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - "extensions"
          - "networking.k8s.io"
        resources:
          - ingresses/status
        verbs:
          - update

    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - pods
          - secrets
          - namespaces
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - configmaps
        resourceNames:
          # Defaults to "<election-id>-<ingress-class>"
          # Here: "<ingress-controller-leader>-<nginx>"
          # This has to be adapted if you change either parameter
          # when launching the nginx-ingress-controller.
          - "ingress-controller-leader-nginx"
        verbs:
          - get
          - update
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - create
      - apiGroups:
          - ""
        resources:
          - endpoints
        verbs:
          - get

    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx

    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx

    ---

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/part-of: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
          annotations:
            prometheus.io/port: "10254"
            prometheus.io/scrape: "true"
        spec:
          # wait up to five minutes for the drain of connections
          terminationGracePeriodSeconds: 300
          serviceAccountName: nginx-ingress-serviceaccount
          hostNetwork: true
          nodeSelector:
            name: ingress
            kubernetes.io/os: linux
          containers:
            - name: nginx-ingress-controller
              image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1
              args:
                - /nginx-ingress-controller
                - --configmap=$(POD_NAMESPACE)/nginx-configuration
                - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
                - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
                - --publish-service=$(POD_NAMESPACE)/ingress-nginx
                - --annotations-prefix=nginx.ingress.kubernetes.io
              securityContext:
                allowPrivilegeEscalation: true
                capabilities:
                  drop:
                    - ALL
                  add:
                    - NET_BIND_SERVICE
                # www-data -> 33
                runAsUser: 33
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              ports:
                - name: http
                  containerPort: 80
                - name: https
                  containerPort: 443
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              lifecycle:
                preStop:
                  exec:
                    command:
                      - /wait-shutdown

    ---

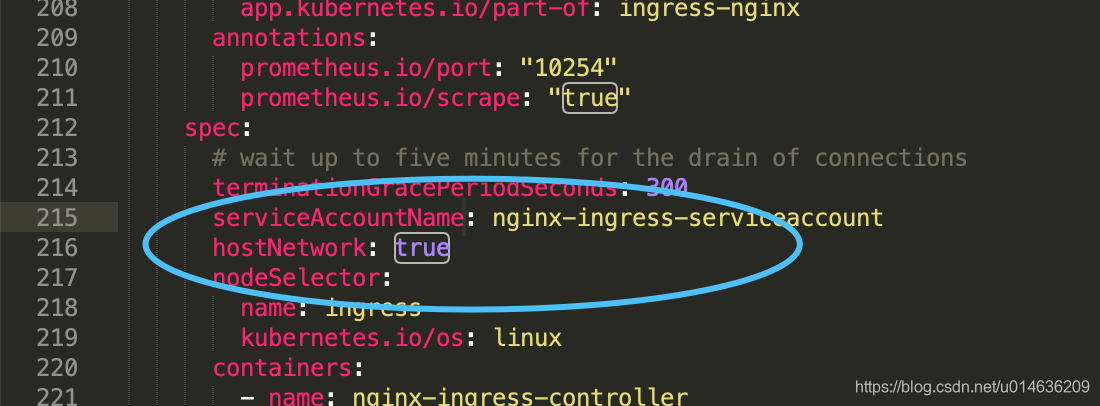

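Note the node selector in the manifest: it places the controller on the node labeled name=ingress.

The network mode is set to hostNetwork: true, so the controller Pod uses the host's IP.

5.3.7.5.2. kubectl apply -f mandatory.yaml [start]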

[root@manager-node ingress-nginx]# pwd

/root/demo/ingress-nginx

[root@manager-node ingress-nginx]# ls -la

total 8

drwxr-xr-x. 2 root root 28 Jan 13 10:36 .

drwxr-xr-x. 3 root root 4096 Jan 13 10:19 ..

-rw-r--r--. 1 root root 506 Jan 13 10:36 my-tomcat.yaml

[root@manager-node ingress-nginx]# vi mandatory.yaml

[root@manager-node ingress-nginx]# kubectl apply -f mandatory.yaml

namespace/ingress-nginx created

configmap/nginx-configuration created

configmap/tcp-services created

configmap/udp-services created

serviceaccount/nginx-ingress-serviceaccount created

clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created

role.rbac.authorization.k8s.io/nginx-ingress-role created

rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created

clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created

deployment.apps/nginx-ingress-controller created

[root@manager-node ingress-nginx]#

The image is being pulled:

[root@manager-node ingress-nginx]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ingress-controller-7c66dcdd6c-nstjc 0/1 ContainerCreating 0 114s 10.0.2.15 worker01-node <none> <none>

[root@manager-node ingress-nginx]#

5.3.7.5.3. Check the progress of Pod creation: kubectl describe pod nginx-ingress-controller-7c66dcdd6c-nstjc -n ingress-nginx

[root@manager-node ingress-nginx]# kubectl describe pod nginx-ingress-controller-7c66dcdd6c-nstjc -n ingress-nginx

Name: nginx-ingress-controller-7c66dcdd6c-nstjc

Namespace: ingress-nginx

Priority: 0

PriorityClassName: <none>

Node: worker01-node/10.0.2.15

Start Time: Mon, 13 Jan 2020 10:54:58 +0000

Labels: app.kubernetes.io/name=ingress-nginx

app.kubernetes.io/part-of=ingress-nginx

pod-template-hash=7c66dcdd6c

Annotations: prometheus.io/port: 10254

prometheus.io/scrape: true

Status: Pending

IP: 10.0.2.15

Controlled By: ReplicaSet/nginx-ingress-controller-7c66dcdd6c

Containers:

nginx-ingress-controller:

Container ID:

Image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1

Image ID:

Ports: 80/TCP, 443/TCP

Host Ports: 80/TCP, 443/TCP

Args:

/nginx-ingress-controller

--configmap=$(POD_NAMESPACE)/nginx-configuration

--tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

--udp-services-configmap=$(POD_NAMESPACE)/udp-services

--publish-service=$(POD_NAMESPACE)/ingress-nginx

--annotations-prefix=nginx.ingress.kubernetes.io

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Liveness: http-get http://:10254/healthz delay=10s timeout=10s period=10s #success=1 #failure=3

Readiness: http-get http://:10254/healthz delay=0s timeout=10s period=10s #success=1 #failure=3

Environment:

POD_NAME: nginx-ingress-controller-7c66dcdd6c-nstjc (v1:metadata.name)

POD_NAMESPACE: ingress-nginx (v1:metadata.namespace)

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from nginx-ingress-serviceaccount-token-dgcxr (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

nginx-ingress-serviceaccount-token-dgcxr:

Type: Secret (a volume populated by a Secret)

SecretName: nginx-ingress-serviceaccount-token-dgcxr

Optional: false

QoS Class: BestEffort

Node-Selectors: kubernetes.io/os=linux

name=ingress

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m20s default-scheduler Successfully assigned ingress-nginx/nginx-ingress-controller-7c66dcdd6c-nstjc to worker01-node

Normal Pulling 3m20s kubelet, worker01-node Pulling image "quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1"

[root@manager-node ingress-nginx]#

Eventually:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 5m50s default-scheduler Successfully assigned ingress-nginx/nginx-ingress-controller-7c66dcdd6c-nstjc to worker01-node

Normal Pulling 5m50s kubelet, worker01-node Pulling image "quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1"

Normal Pulled 111s kubelet, worker01-node Successfully pulled image "quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1"

Normal Created 111s kubelet, worker01-node Created container nginx-ingress-controller

Normal Started 110s kubelet, worker01-node Started container nginx-ingress-controller

[root@manager-node ingress-nginx]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ingress-controller-7c66dcdd6c-nstjc 1/1 Running 0 5m58s 10.0.2.15 worker01-node <none> <none>

[root@manager-node ingress-nginx]#

5.3.7.5.4. Watching progress in real time

[root@manager-node ingress-nginx]# kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx --watch

NAMESPACE NAME READY STATUS RESTARTS AGE

ingress-nginx nginx-ingress-controller-7c66dcdd6c-nstjc 1/1 Running 0 7m13s

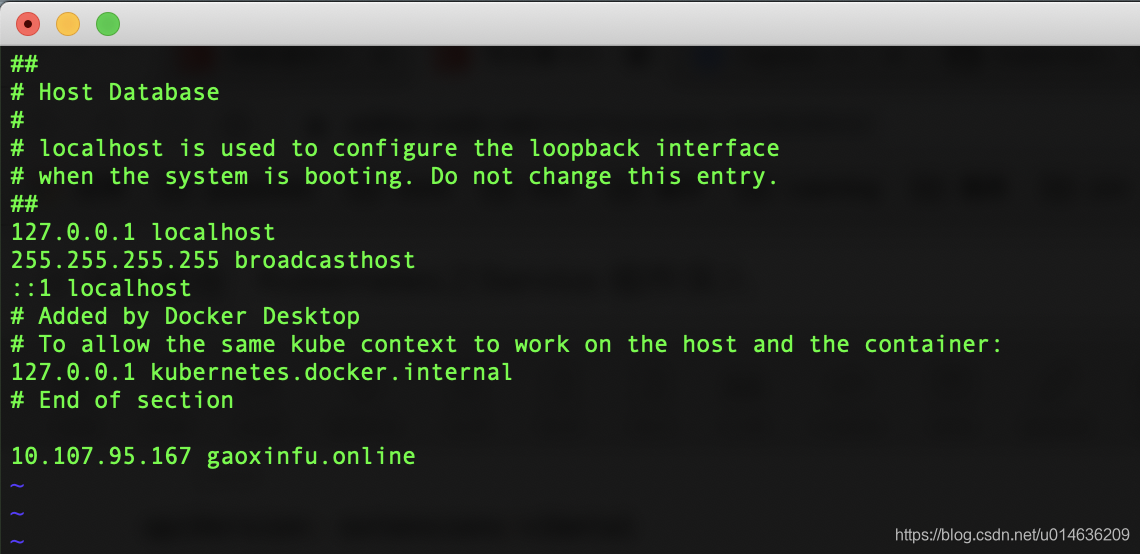

5.3.7.6. Ingress configuration

Configuration file (nginx-ingress.yaml)

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-ingress
    spec:
      rules:
      - host: gaoxinfu.online
        http:
          paths:
          - path: /
            backend:
              serviceName: tomcat-service
              servicePort: 80
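The extensions/v1beta1 Ingress API used above works on the cluster in this article, but it was removed in Kubernetes 1.22. On newer clusters the same rule would have to be written against networking.k8s.io/v1. The following is a sketch of that equivalent, not something tested in the original setup; it also assumes a newer ingress-nginx release than 0.26.1, since that version predates the v1 API.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  rules:
    - host: gaoxinfu.online
      http:
        paths:
          - path: /
            pathType: Prefix          # required in v1; Prefix on / matches every path
            backend:
              service:
                name: tomcat-service  # was serviceName in v1beta1
                port:
                  number: 80          # was servicePort in v1beta1
```

The routing intent is unchanged: every request for gaoxinfu.online goes to port 80 of tomcat-service.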

Start

[root@manager-node ingress-nginx]# kubectl apply -f nginx-ingress.yaml

ingress.extensions/nginx-ingress created

[root@manager-node ingress-nginx]# kubectl get ingress

NAME HOSTS ADDRESS PORTS AGE

nginx-ingress gaoxinfu.online 80 22s

[root@manager-node ingress-nginx]#

Editing the hosts file on the client machine

192.168.1.122 gaoxinfu.online

192.168.1.122 is the host IP of my worker01-node.

Appendix: installing lsof, a tool for inspecting ports

[root@manager-node ~]# yum install -y lsof

Loaded plugins: fastestmirror

Determining fastest mirrors

Could not retrieve mirrorlist http://mirrorlist.centos.org/?release=7&arch=x86_64&repo=extras&infra=vag error was

12: Timeout on http://mirrorlist.centos.org/?release=7&arch=x86_64&repo=extras&infra=vag: (28, 'Operation too slow. Less than 1000 bytes/sec transferred the last 30 seconds')

* base: mirrors.aliyun.com

* extras: mirrors.cn99.com

* updates: mirrors.aliyun.com

base | 3.6 kB 00:00:00

docker-ce-stable | 3.5 kB 00:00:00

extras | 2.9 kB 00:00:00

kubernetes | 1.4 kB 00:00:00

updates | 2.9 kB 00:00:00

Resolving Dependencies

--> Running transaction check

---> Package lsof.x86_64 0:4.87-6.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===================================================================================================================================================================================================================

Package Arch Version Repository Size

===================================================================================================================================================================================================================

Installing:

lsof x86_64 4.87-6.el7 base 331 k

Transaction Summary

===================================================================================================================================================================================================================

Install 1 Package

Total download size: 331 k

Installed size: 927 k

Downloading packages:

lsof-4.87-6.el7.x86_64.rpm | 331 kB 00:00:00

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : lsof-4.87-6.el7.x86_64 1/1

Verifying : lsof-4.87-6.el7.x86_64 1/1

Installed:

lsof.x86_64 0:4.87-6.el7

Complete!

[root@manager-node ~]#