1. Environment setup

1.1 Flume component development environment

The flume component depends on the following jars:

<!-- provided -->

<dependency>

<groupId>commons-lang</groupId>

<artifactId>commons-lang</artifactId>

<version>2.5</version>

</dependency>

<!-- HBase components -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<exclusions>

<exclusion>

<groupId>io.netty</groupId>

<artifactId>netty</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- Flume plugins -->

<dependency>

<groupId>org.apache.flume.flume-ng-sinks</groupId>

<artifactId>flume-ng-hbase-sink</artifactId>

<version>${version.flume}</version>

</dependency>

<!-- JDK dependency -->

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>${version.java}</version>

<scope>system</scope>

<systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>

</dependency>

<!-- log -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

</dependency>

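1.2 Custom hbase serialization

The constants below are defined in an interface. That is something of an anti-pattern, but it is easy to follow. Note: Java classes generated by the thrift framework carry an __isset_bitfield field, which has to be dropped before the data is stored.

import com.google.gson.Gson;

import com.google.gson.GsonBuilder;

import java.nio.charset.Charset;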

public interface DcmFlumeBaseConstant {

String SEPARATE = "$";

Gson GSON = new GsonBuilder().create();

Charset UTF_8 = Charset.forName("UTF-8");

Charset ISO_8859_1 = Charset.forName("ISO-8859-1");

String __isset_bitfield = "__isset_bitfield";

interface Fields {

String ID = "id";

}

}

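The book 《flume构建高可用、可扩展的海量日志采集系统》 notes that when Flume interacts with HBase, only Put and Increment matter. These correspond to PutRequest and AtomicIncrementRequest in the code below.

Flume has two kinds of HBase sink: the Hbase sink and the Async Hbase sink. The Hbase sink sends events to the hbase cluster one by one, whereas the Async Hbase sink writes to Hbase with non-blocking, multi-threaded calls. Of the two, the Async Hbase sink is the less safe option but the faster one.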

import org.apache.commons.lang.StringUtils;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.conf.ComponentConfiguration;

import org.apache.flume.sink.hbase.AsyncHbaseEventSerializer;

import org.apache.hadoop.hbase.util.Bytes;

import org.hbase.async.AtomicIncrementRequest;

import org.hbase.async.PutRequest;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

/**

* Serializes JSON data for hbase; the row_key defaults to ID

*

* @author dzm

*/

public class HbaseSerializer implements AsyncHbaseEventSerializer {

private static final Logger logger = LoggerFactory.getLogger(HbaseSerializer.class);

public static final ArrayList<PutRequest> EMPTY_ARRAYLIST = new ArrayList<>();

// table in hbase

private byte[] table;

// column family

private byte[] cf;

private Event currentEvent;

@Override

public void initialize(byte[] table, byte[] cf) {

this.table = table;

this.cf = cf;

}

@Override

public void configure(Context context) {

}

@Override

public void configure(ComponentConfiguration conf) {

}

@SuppressWarnings("unchecked")

@Override

public List<PutRequest> getActions() {

String eventStr = new String(currentEvent.getBody(), DcmFlumeBaseConstant.UTF_8);

if (logger.isDebugEnabled()) {

logger.debug("event body: " + eventStr);

}

if (StringUtils.isNotEmpty(eventStr)) {

// the data is parsed from eventStr

Map<String, Object> dataMap = DcmFlumeBaseConstant.GSON.fromJson(eventStr, HashMap.class);

try {

filter(dataMap);

} catch (Exception e) {

return EMPTY_ARRAYLIST;

}

if (dataMap == null || dataMap.size() == 0) {

return EMPTY_ARRAYLIST;

}

List<PutRequest> rows = new ArrayList<PutRequest>();

// build the row to be written to hbase

List<byte[]> qualifiers = new ArrayList<byte[]>();

List<byte[]> values = new ArrayList<byte[]>();

for (Map.Entry<String, Object> entry : dataMap.entrySet()) {

// __isset_bitfield is a thrift field and does not need to be stored

if (DcmFlumeBaseConstant.__isset_bitfield.equals(entry.getKey())) {

continue;

}

if (entry.getValue() != null) {

// empty values are not written to hbase

if (entry.getValue() instanceof java.util.List) {

// list values are handled in the detail sink

} else if (StringUtils.isNotEmpty(entry.getValue().toString())) {

qualifiers.add(Bytes.toBytes(entry.getKey()));

values.add(Bytes.toBytes(entry.getValue().toString()));

}

}

}

byte[][] qualifiersB = new byte[qualifiers.size()][];

qualifiers.toArray(qualifiersB);

byte[][] valuesB = new byte[values.size()][];

values.toArray(valuesB);

// getRowKey(dataMap) returns the row key

PutRequest put = new PutRequest(table, getRowKey(dataMap), cf, qualifiersB, valuesB);

rows.add(put);

return rows;

}

return EMPTY_ARRAYLIST;

}

/**

* The hbase row key is the id field

*

* @param dataMap

* @return

*/

protected byte[] getRowKey(Map<String, Object> dataMap) {

String rowKey = dataMap.get(DcmFlumeBaseConstant.Fields.ID).toString();

dataMap.remove(DcmFlumeBaseConstant.Fields.ID);

return Bytes.toBytes(rowKey);

}

@Override

public void setEvent(Event event) {

this.currentEvent = event;

}

@Override

public void cleanUp() {

table = null;

cf = null;

currentEvent = null;

}

/**

* hbase counters; not needed here

*/

@Override

public List<AtomicIncrementRequest> getIncrements() {

return new ArrayList<AtomicIncrementRequest>();

}

/**

* Decides whether the data should be saved; subclasses may override this

*

* @param dataMap

*/

public void filter(Map<String, Object> dataMap) {

}

}
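The column-selection rule inside getActions() can be hard to see among the byte-array handling, so here is a minimal stand-alone sketch of just that rule, using only the JDK. The class name ColumnSelectionSketch and the sample field names (amount, remark, items, buyer) are hypothetical; the rule itself (skip __isset_bitfield, nulls, lists and empty strings) is taken from the serializer above.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Illustrative only: mirrors the filtering done in HbaseSerializer.getActions(). */
final class ColumnSelectionSketch {

    static final String ISSET_BITFIELD = "__isset_bitfield";

    /** Returns the qualifier -> value pairs that would become hbase columns. */
    static Map<String, String> selectColumns(Map<String, Object> dataMap) {
        Map<String, String> columns = new LinkedHashMap<>();
        for (Map.Entry<String, Object> entry : dataMap.entrySet()) {
            Object value = entry.getValue();
            if (ISSET_BITFIELD.equals(entry.getKey())) {
                continue; // thrift bookkeeping field, never stored
            }
            if (value == null || value instanceof List) {
                continue; // nulls are dropped; lists are left to another sink
            }
            String text = value.toString();
            if (!text.isEmpty()) {
                columns.put(entry.getKey(), text);
            }
        }
        return columns;
    }

    public static void main(String[] args) {
        Map<String, Object> event = new LinkedHashMap<>();
        event.put("id", "1001");
        event.put("amount", 12.5);
        event.put("remark", "");
        event.put("items", Arrays.asList("a", "b"));
        event.put(ISSET_BITFIELD, 3.0);
        event.put("buyer", null);
        System.out.println(selectColumns(event)); // prints {id=1001, amount=12.5}
    }
}
```

Because values are stored through toString(), and Gson maps every JSON number to a Double when deserializing into a plain HashMap, a numeric id such as 1001 would end up as 1001.0 unless the producer sends it as a string.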

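To use a row key of your own, override filter in a subclass:

private static final String DATE_FORMAT = "yyyyMMddHHmmss";

/**

* Filters the incoming data and rewrites id into the composite row key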

*

* @param dataMap

*/

@Override

public void filter(Map<String, Object> dataMap) {

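if (!dataMap.containsKey("id")) {

// getActions() catches this exception and drops the event

throw new IllegalArgumentException("event has no id field");

}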

String userId = dataMap.get("id").toString();

String eventType = dataMap.get("eventType").toString();

String time = DateUtil.formatDate(DateUtil.parseDate(dataMap.get("time")), DATE_FORMAT);

dataMap.put("userId", userId);

dataMap.put("id", userId + DcmFlumeBaseConstant.SEPARATE + eventType + DcmFlumeBaseConstant.SEPARATE + time);

}
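The override above depends on a DateUtil helper that is not shown. As a rough, JDK-only sketch of the same key layout (id, event type and a yyyyMMddHHmmss timestamp joined by "$"), something like the following would work. RowKeySketch and buildRowKey are hypothetical names, and the sketch assumes the time is already available as a LocalDateTime.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/** Illustrative only: builds the userId$eventType$time row key described above. */
final class RowKeySketch {

    static final String SEPARATE = "$";

    static final DateTimeFormatter TIME_FORMAT = DateTimeFormatter.ofPattern("yyyyMMddHHmmss");

    static String buildRowKey(String userId, String eventType, LocalDateTime time) {
        return userId + SEPARATE + eventType + SEPARATE + TIME_FORMAT.format(time);
    }

    public static void main(String[] args) {
        LocalDateTime time = LocalDateTime.of(2019, 7, 23, 13, 58, 41);
        System.out.println(buildRowKey("u1001", "pay", time)); // prints u1001$pay$20190723135841
    }
}
```

Since hbase sorts rows by key bytes, this layout keeps all rows of one user next to each other, grouped by event type and then ordered by time, which suits prefix scans per user.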

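1.3 hadoop and hbase environment variables

Writing from flume to hadoop and hbase requires a number of jars. Because there are so many of them, the simplest approach is to make them available through environment variables.

hadoop and hbase themselves do not need to be started.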

# flume

export FLUME_HOME=/application/flume

export PATH=$PATH:$FLUME_HOME/bin

# hadoop hbase

export HADOOP_HOME=/application/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HBASE_HOME=/application/hbase

export PATH=$PATH:$HBASE_HOME/bin

export HBASE_LIBRARY_PATH=$HBASE_HOME/lib/native/Linux-amd64-64

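2 Interface implementation

2.1 flume configuration file

Following the article Flume1.7.0入门:安装、部署、及flume的案例: a source collects data. A sink takes data from the channel, runs in its own thread, and delivers the data to its destination. A channel connects sources and sinks and behaves much like a queue: once the source has collected data, it is held temporarily in the channel, so the channel is the agent's buffer for collected data. Channels can be backed by memory, jdbc, file, and so on.

The configuration below can be read as follows: avro objects are collected on port 35001, the collected data is first stored in kafka, and the sink then processes it, using my custom HbaseSerializer to serialize the data and write it to hbase.

What is the assemble section for? It binds the source and the sink together, with the channel as the link between them.

# Upload flume-xx-conf.properties to the $FLUME_HOME/conf directory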

[root@bwsc73 conf]# cat dcm-order-flume-conf.properties

# read from kafka and write to hbase

dcm-order-agent.sources = dcm-order-source

dcm-order-agent.channels = dcm-order-channel

dcm-order-agent.sinks = dcm-order-sink

# source

dcm-order-agent.sources.dcm-order-source.type=avro

dcm-order-agent.sources.dcm-order-source.bind=0.0.0.0

dcm-order-agent.sources.dcm-order-source.port=35001

# channel

dcm-order-agent.channels.dcm-order-channel.flumeBatchSize = 100

dcm-order-agent.channels.dcm-order-channel.type = org.apache.flume.channel.kafka.KafkaChannel

dcm-order-agent.channels.dcm-order-channel.kafka.bootstrap.servers = bwsc68:9092,bwsc68:9092,bwsc70:9092

dcm-order-agent.channels.dcm-order-channel.kafka.topic = dcm_order

dcm-order-agent.channels.dcm-order-channel.kafka.consumer.group.id = dcm_order_flume_channel

#dcm-order-agent.channels.dcm-order-channel.kafka.consumer.auto.offset.reset = latest

# sink

dcm-order-agent.sinks.dcm-order-sink.type = asynchbase

dcm-order-agent.sinks.dcm-order-sink.table = dcm_order

dcm-order-agent.sinks.dcm-order-sink.columnFamily = i

dcm-order-agent.sinks.dcm-order-sink.zookeeperQuorum = bwsc65:2181,bwsc66:2181,bwsc67:2181

dcm-order-agent.sinks.dcm-order-sink.serializer = com.dzm.dcm.flume.sink.HbaseSerializer

# assemble

dcm-order-agent.sources.dcm-order-source.channels = dcm-order-channel

dcm-order-agent.sinks.dcm-order-sink.channel = dcm-order-channel
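A mistyped channel name in the assemble section is an easy mistake to make. The sketch below is one hedged way to check the wiring before starting the agent: it loads the properties with the JDK and verifies that every source and sink refers only to declared channels. AgentWiringCheck and its methods are hypothetical helpers, not part of flume, and the sample configuration in main is shortened to just the lines the check looks at.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.HashSet;
import java.util.Properties;
import java.util.Set;

/** Illustrative only: checks that sources and sinks reference declared channels. */
final class AgentWiringCheck {

    static boolean isWired(Properties conf, String agent) {
        Set<String> channels = names(conf.getProperty(agent + ".channels"));
        for (String source : names(conf.getProperty(agent + ".sources"))) {
            // a source may feed several channels (space-separated list)
            Set<String> used = names(conf.getProperty(agent + ".sources." + source + ".channels"));
            if (used.isEmpty() || !channels.containsAll(used)) {
                return false;
            }
        }
        for (String sink : names(conf.getProperty(agent + ".sinks"))) {
            // a sink reads from exactly one channel
            String used = conf.getProperty(agent + ".sinks." + sink + ".channel");
            if (used == null || !channels.contains(used.trim())) {
                return false;
            }
        }
        return true;
    }

    /** Splits a whitespace-separated list of component names. */
    private static Set<String> names(String value) {
        Set<String> result = new HashSet<>();
        if (value != null) {
            for (String name : value.trim().split("\\s+")) {
                if (!name.isEmpty()) {
                    result.add(name);
                }
            }
        }
        return result;
    }

    static Properties parse(String text) {
        Properties properties = new Properties();
        try {
            properties.load(new StringReader(text));
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
        return properties;
    }

    public static void main(String[] args) {
        String conf = String.join("\n",
                "dcm-order-agent.sources = dcm-order-source",
                "dcm-order-agent.channels = dcm-order-channel",
                "dcm-order-agent.sinks = dcm-order-sink",
                "dcm-order-agent.sources.dcm-order-source.channels = dcm-order-channel",
                "dcm-order-agent.sinks.dcm-order-sink.channel = dcm-order-channel");
        System.out.println(isWired(parse(conf), "dcm-order-agent")); // prints true
    }
}
```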

3 Deploying the flume component

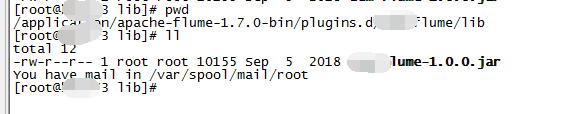

3.1 Where to upload the files

mkdir -p $FLUME_HOME/plugins.d/your_project/lib

Upload the jar files to the $FLUME_HOME/plugins.d/your_project/lib directory.

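3.2 Starting

# Run the following command from the $FLUME_HOME directory (note: it must be started from the flume root directory)

nohup bin/flume-ng agent -n your-order-agent -c conf -f conf/your-order-flume-conf.properties > your-order-flume.out 2>&1 &

The output below shows the dependent jars on the classpath. To stop the flume process, use the kill command.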

[root@bwsc73 conf]# ps -ef|grep flume-multi-hbase-sink

root 6122 4792 0 11:53 pts/1 00:00:00 grep --color=auto flume-multi-hbase-sink

root 20523 1 0 Jun12 ? 01:20:57 /usr/java/jdk1.8.0_151/bin/java -Xmx20m -cp /application/flume/lib/*:/application/flume/plugins.d/bw-msg-flume/lib/*:/application/flume/plugins.d/dcm-flume/lib/*:/application/flume/plugins.d/flume-invoice-bft/lib/*:/application/flume/plugins.d/flume-invoice-wp/lib/*:/application/flume/plugins.d/flume-invoice-wp/libext/*:/application/hadoop-2.6.4/etc/hadoop:/application/hadoop-2.6.4/share/hadoop/common/lib/*:/application/hadoop-2.6.4/share/hadoop/common/*:/application/hadoop-2.6.4/share/hadoop/hdfs:/application/hadoop-2.6.4/share/hadoop/hdfs/lib/*:/application/hadoop-2.6.4/share/hadoop/hdfs/*:/application/hadoop-2.6.4/share/hadoop/yarn/lib/*:/application/hadoop-2.6.4/share/hadoop/yarn/*:/application/hadoop-2.6.4/share/hadoop/mapreduce/lib/*:/application/hadoop-2.6.4/share/hadoop/mapreduce/*:/application/hadoop/contrib/capacity-scheduler/*.jar:/application/hbase/conf:/usr/java/jdk/lib/tools.jar:/application/hbase:/application/hbase/lib/activation-1.1.jar:/application/hbase/lib/antisamy-1.4.3.jar:/application/hbase/lib/aopalliance-1.0.jar:/application/hbase/lib/apacheds-i18n-2.0.0-M15.jar:/application/hbase/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/application/hbase/lib/api-asn1-api-1.0.0-M20.jar:/application/hbase/lib/api-util-1.0.0-M20.jar:/application/hbase/lib/asm-3.1.jar:/application/hbase/lib/avro-1.7.4.jar:/application/hbase/lib/batik-css-1.7.jar:/application/hbase/lib/batik-ext-1.7.jar:/application/hbase/lib/batik-util-1.7.jar:/application/hbase/lib/bsh-core-2.0b4.jar:/application/hbase/lib/commons-beanutils-1.7.0.jar:/application/hbase/lib/commons-beanutils-core-1.7.0.jar:/application/hbase/lib/commons-cli-1.2.jar:/application/hbase/lib/commons-codec-1.9.jar:/application/hbase/lib/commons-collections-3.2.2.jar:/application/hbase/lib/commons-compress-1.4.1.jar:/application/hbase/lib/commons-configuration-1.6.jar:/application/hbase/lib/commons-daemon-1.0.13.jar:/application/hbase/lib/commons-digester-1.8.jar:/application/hbase/li
b/commons-el-1.0.jar:/application/hbase/lib/commons-fileupload-1.2.jar:/application/hbase/lib/commons-httpclient-3.1.jar:/application/hbase/lib/commons-io-2.4.jar:/application/hbase/lib/commons-lang-2.6.jar:/application/hbase/lib/commons-logging-1.2.jar:/application/hbase/lib/commons-math-2.2.jar:/application/hbase/lib/commons-math3-3.1.1.jar:/application/hbase/lib/commons-net-3.1.jar:/application/hbase/lib/disruptor-3.3.0.jar:/application/hbase/lib/esapi-2.1.0.jar:/application/hbase/lib/findbugs-annotations-1.3.9-1.jar:/application/hbase/lib/guava-12.0.1.jar:/application/hbase/lib/guice-3.0.jar:/application/hbase/lib/guice-servlet-3.0.jar:/application/hbase/lib/hadoop-annotations-2.5.1.jar:/application/hbase/lib/hadoop-auth-2.5.1.jar:/application/hbase/lib/hadoop-client-2.5.1.jar:/application/hbase/lib/hadoop-common-2.5.1.jar:/application/hbase/lib/hadoop-hdfs-2.5.1.jar:/application/hbase/lib/hadoop-mapreduce-client-app-2.5.1.jar:/application/hbase/lib/hadoop-mapreduce-client-common-2.5.1.jar:/application/hbase/lib/hadoop-mapreduce-client-core-2.5.1.jar:/application/hbase/lib/hadoop-mapreduce-client-jobclient-2.5.1.jar:/application/hbase/lib/hadoop-mapreduce-client-shuffle-2.5.1.jar:/application/hbase/lib/hadoop-yarn-api-2.5.1.jar:/application/hbase/lib/hadoop-yarn-client-2.5.1.jar:/application/hbase/lib/hadoop-yarn-common-2.5.1.jar:/application/hbase/lib/hadoop-yarn-server-common-2.5.1.jar:/application/hbase/lib/hbase-annotations-1.1.4.jar:/application/hbase/lib/hbase-annotations-1.1.4-tests.jar:/application/hbase/lib/hbase-client-1.1.4.jar:/application/hbase/lib/hbase-common-1.1.4.jar:/application/hbase/lib/hbase-common-1.1.4-tests.jar:/application/hbase/lib/hbase-examples-1.1.4.jar:/application/hbase/lib/hbase-hadoop2-compat-1.1.4.jar:/application/hbase/lib/hbase-hadoop-compat-1.1.4.jar:/application/hbase/lib/hbase-it-1.1.4.jar:/application/hbase/lib/hbase-it-1.1.4-tests.jar:/application/hbase/lib/hbase-prefix-tree-1.1.4.jar:/application/hbase/lib/hbase-procedur
e-1.1.4.jar:/application/hbase/lib/hbase-protocol-1.1.4.jar:/application/hbase/lib/hbase-resource-bundle-1.1.4.jar:/application/hbase/lib/hbase-rest-1.1.4.jar:/application/hbase/lib/hbase-server-1.1.4.jar:/application/hbase/lib/hbase-server-1.1.4-tests.jar:/application/hbase/lib/hbase-shell-1.1.4.jar:/application/hbase/lib/hbase-thrift-1.1.4.jar:/application/hbase/lib/htrace-core-3.1.0-incubating.jar:/application/hbase/lib/httpclient-4.2.5.jar:/application/hbase/lib/httpcore-4.1.3.jar:/application/hbase/lib/jackson-core-asl-1.9.13.jar:/application/hbase/lib/jackson-jaxrs-1.9.13.jar:/application/hbase/lib/jackson-mapper-asl-1.9.13.jar:/application/hbase/lib/jackson-xc-1.9.13.jar:/application/hbase/lib/jamon-runtime-2.3.1.jar:/application/hbase/lib/jasper-compiler-5.5.23.jar:/application/hbase/lib/jasper-runtime-5.5.23.jar:/application/hbase/lib/javax.inject-1.jar:/application/hbase/lib/java-xmlbuilder-0.4.jar:/application/hbase/lib/jaxb-api-2.2.2.jar:/application/hbase/lib/jaxb-impl-2.2.3-1.jar:/application/hbase/lib/jcodings-1.0.8.jar:/application/hbase/lib/jersey-client-1.9.jar:/application/hbase/lib/jersey-core-1.9.jar:/application/hbase/lib/jersey-guice-1.9.jar:/application/hbase/lib/jersey-json-1.9.jar:/application/hbase/lib/jersey-server-1.9.jar:/application/hbase/lib/jets3t-0.9.0.jar:/application/hbase/lib/jettison-1.3.3.jar:/application/hbase/lib/jetty-6.1.26.jar:/application/hbase/lib/jetty-sslengine-6.1.26.jar:/application/hbase/lib/jetty-util-6.1.26.jar:/application/hbase/lib/joni-2.1.2.jar:/application/hbase/lib/jruby-complete-1.6.8.jar:/application/hbase/lib/jsch-0.1.42.jar:/application/hbase/lib/jsp-2.1-6.1.14.jar:/application/hbase/lib/jsp-api-2.1-6.1.14.jar:/application/hbase/lib/jsr305-1.3.9.jar:/application/hbase/lib/junit-4.12.jar:/application/hbase/lib/leveldbjni-all-1.8.jar:/application/hbase/lib/libthrift-0.9.0.jar:/application/hbase/lib/log4j-1.2.17.jar:/application/hbase/lib/metrics-core-2.2.0.jar:/application/hbase/lib/nekohtml-1.9.12.jar:/ap
plication/hbase/lib/netty-3.2.4.Final.jar:/application/hbase/lib/netty-all-4.0.23.Final.jar:/application/hbase/lib/paranamer-2.3.jar:/application/hbase/lib/protobuf-java-2.5.0.jar:/application/hbase/lib/servlet-api-2.5-6.1.14.jar:/application/hbase/lib/servlet-api-2.5.jar:/application/hbase/lib/slf4j-api-1.7.7.jar:/application/hbase/lib/slf4j-log4j12-1.7.5.jar:/application/hbase/lib/snappy-java-1.0.4.1.jar:/application/hbase/lib/spymemcached-2.11.6.jar:/application/hbase/lib/xalan-2.7.0.jar:/application/hbase/lib/xml-apis-1.3.03.jar:/application/hbase/lib/xml-apis-ext-1.3.04.jar:/application/hbase/lib/xmlenc-0.52.jar:/application/hbase/lib/xom-1.2.5.jar:/application/hbase/lib/xz-1.0.jar:/application/hbase/lib/zookeeper-3.4.6.jar:/application/hadoop-2.6.4/etc/hadoop:/application/hadoop-2.6.4/share/hadoop/common/lib/*:/application/hadoop-2.6.4/share/hadoop/common/*:/application/hadoop-2.6.4/share/hadoop/hdfs:/application/hadoop-2.6.4/share/hadoop/hdfs/lib/*:/application/hadoop-2.6.4/share/hadoop/hdfs/*:/application/hadoop-2.6.4/share/hadoop/yarn/lib/*:/application/hadoop-2.6.4/share/hadoop/yarn/*:/application/hadoop-2.6.4/share/hadoop/mapreduce/lib/*:/application/hadoop-2.6.4/share/hadoop/mapreduce/*:/application/hadoop/contrib/capacity-scheduler/*.jar:/application/hbase/conf:/lib/* -Dja

Kill all flume processes:

ps -ef | grep flume | grep -v grep | awk '{print $2}' | xargs kill -9

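3.3 Debugging

# view the log

tail -fn 100 logs/flume.log

# To check whether messages are being received, edit flume/conf/log4j.properties, add a logger entry with the fully qualified name of the package that should log at debug level, then restart

log4j.logger.com.your_package.your_path=DEBUG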

3.4 haproxy configuration

Port 35001 is the source port defined in the properties file.

frontend dcm_order_flume_front

bind *:35101

mode tcp

log global

option tcplog

timeout client 3600s

backlog 4096

maxconn 1000000

default_backend dcm_order_flume_back

backend dcm_order_flume_back

mode tcp

option log-health-checks

option redispatch

option tcplog

balance roundrobin

timeout connect 1s

timeout queue 5s

timeout server 3600s


server f1 bwhs180:35001 check inter 2000 rise 3 fall 3 weight 1

server f2 bwhs181:35001 check inter 2000 rise 3 fall 3 weight 1

server f3 bwhs183:35001 check inter 2000 rise 3 fall 3 weight 1
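With balance roundrobin and equal weights, new connections arriving on port 35101 are spread over the three flume agents in turn. The following is only a toy model of that rotation, not haproxy's actual algorithm (which also honours weights and skips servers that fail their health checks). RoundRobinSketch is a hypothetical name.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Illustrative only: equal-weight round-robin over a fixed list of backends. */
final class RoundRobinSketch {

    private final List<String> servers;

    private final AtomicInteger next = new AtomicInteger();

    RoundRobinSketch(List<String> servers) {
        this.servers = servers;
    }

    /** Returns the backend for the next incoming connection. */
    String pick() {
        // floorMod keeps the index valid even after the counter overflows
        int index = Math.floorMod(next.getAndIncrement(), servers.size());
        return servers.get(index);
    }

    public static void main(String[] args) {
        RoundRobinSketch balancer = new RoundRobinSketch(
                Arrays.asList("bwhs180:35001", "bwhs181:35001", "bwhs183:35001"));
        for (int i = 0; i < 4; i++) {
            System.out.println(balancer.pick()); // bwhs180, bwhs181, bwhs183, then bwhs180 again
        }
    }
}
```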

3.5 Checking whether the flume process is alive

Use the port to determine whether the process is still alive; if it has died, restart it.

hdfsAgent=`lsof -i:36001 | awk 'NR==2{print $2}'`

echo $hdfsAgent

if [ "$hdfsAgent" = "" ]; then

echo "hdfs-agent is restart!"

nohup bin/flume-ng agent -n hdfs-agent -c conf -f conf/hdfs-flume-conf.properties &

else

echo "hdfs-agent is alive!"

fi

Alternatively, the restart check can look at the process list instead:

hdfsAgent=`ps -ef | grep flume | grep hdfs-agent | awk '{print $2}'`

echo $hdfsAgent

if [ "$hdfsAgent" = "" ]; then

echo "hdfs-agent is restart!"

nohup bin/flume-ng agent -n hdfs-agent -c conf -f conf/hdfs-flume-conf.properties &

else

echo "hdfs-agent is alive!"

fi
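The port-based check from the first script can also be done from Java, for example inside an existing monitoring job. This is a minimal sketch that treats a successful TCP connect as "alive"; PortProbe is a hypothetical name, and port 36001 is simply the one used in the shell script above.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Illustrative only: reports whether something accepts TCP connections on a port. */
final class PortProbe {

    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // connection refused or timed out: treat the agent as down
            return false;
        }
    }

    public static void main(String[] args) {
        boolean alive = isListening("127.0.0.1", 36001, 1000);
        System.out.println(alive ? "hdfs-agent is alive!" : "hdfs-agent is down");
    }
}
```

A successful connect only shows that the port is open. It does not show that the agent is delivering events, so the flume log is still worth watching.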

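4 Problems encountered

4.1 java.lang.OutOfMemoryError: Java heap space

Following the article flume系列之Java heap space大小设置, add this setting to conf/flume-env.sh:

export JAVA_OPTS="-Xms512m -Xmx2000m -Dcom.sun.management.jmxremote"

Even with this setting, the org.apache.kafka.common.errors.NotLeaderForPartitionException problem described in kafka相关操作及问题汇总 kept occurring. Based on the earlier study in 第4.1.2章 flume的拓扑结构, one idea is to run the flume source nodes and sink nodes separately, which lowers the risk of flume exhausting its resources.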

23 Jul 2019 13:58:41,136 ERROR [kafka-producer-network-thread | producer-1] (org.apache.kafka.common.utils.KafkaThread$1.uncaughtException:30) - Uncaught exception in kafka-producer-network-thread | producer-1:

java.lang.OutOfMemoryError: Java heap space

at java.util.HashMap.newNode(HashMap.java:1747)

at java.util.HashMap.putVal(HashMap.java:631)

at java.util.HashMap.put(HashMap.java:612)

at org.apache.kafka.common.Cluster.<init>(Cluster.java:48)

at org.apache.kafka.common.requests.MetadataResponse.<init>(MetadataResponse.java:176)

at org.apache.kafka.clients.NetworkClient$DefaultMetadataUpdater.handleResponse(NetworkClient.java:578)

at org.apache.kafka.clients.NetworkClient$DefaultMetadataUpdater.maybeHandleCompletedReceive(NetworkClient.java:565)

at org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:441)

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:265)

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:216)

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:128)

at java.lang.Thread.run(Thread.java:748)

23 Jul 2019 13:58:41,136 ERROR [Thread-1] (org.apache.thrift.server.TThreadedSelectorServer$SelectorThread.run:544) - run() exiting due to uncaught error

java.lang.OutOfMemoryError: Java heap space

at java.nio.HeapByteBuffer.<init>(HeapByteBuffer.java:57)

at java.nio.ByteBuffer.allocate(ByteBuffer.java:335)

at org.apache.thrift.server.AbstractNonblockingServer$FrameBuffer.read(AbstractNonblockingServer.java:338)

at org.apache.thrift.server.AbstractNonblockingServer$AbstractSelectThread.handleRead(AbstractNonblockingServer.java:202)

at org.apache.thrift.server.TThreadedSelectorServer$SelectorThread.select(TThreadedSelectorServer.java:576)

at org.apache.thrift.server.TThreadedSelectorServer$SelectorThread.run(TThreadedSelectorServer.java:536)

4.2 SinkRunner-PollingRunner-DefaultSinkProcessor

26 Jul 2019 08:49:38,274 ERROR [SinkRunner-PollingRunner-DefaultSinkProcessor] (org.apache.kafka.clients.consumer.internals.ConsumerCoordinator$OffsetCommitResponseHandler.handle:550) - Error UNKNOWN_MEMBER_ID occurred while committing offsets for group flume_scrapy_snapshot_channel

26 Jul 2019 08:49:38,274 ERROR [SinkRunner-PollingRunner-DefaultSinkProcessor] (org.apache.flume.SinkRunner$PollingRunner.run:158) - Unable to deliver event. Exception follows.

org.apache.kafka.clients.consumer.CommitFailedException: Commit cannot be completed due to group rebalance

at org.apache.kafka.clients.consumer.internals.ConsumerCoordinator$OffsetCommitResponseHandler.handle(ConsumerCoordinator.java:552)

at org.apache.kafka.clients.consumer.internals.ConsumerCoordinator$OffsetCommitResponseHandler.handle(ConsumerCoordinator.java:493)

at org.apache.kafka.clients.consumer.internals.AbstractCoordinator$CoordinatorResponseHandler.onSuccess(AbstractCoordinator.java:665)

at org.apache.kafka.clients.consumer.internals.AbstractCoordinator$CoordinatorResponseHandler.onSuccess(AbstractCoordinator.java:644)

at org.apache.kafka.clients.consumer.internals.RequestFuture$1.onSuccess(RequestFuture.java:167)

at org.apache.kafka.clients.consumer.internals.RequestFuture.fireSuccess(RequestFuture.java:133)

at org.apache.kafka.clients.consumer.internals.RequestFuture.complete(RequestFuture.java:107)

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient$RequestFutureCompletionHandler.onComplete(ConsumerNetworkClient.java:380)

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:274)

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.clientPoll(ConsumerNetworkClient.java:320)

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:213)

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:193)

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:163)

at org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.commitOffsetsSync(ConsumerCoordinator.java:358)

at org.apache.kafka.clients.consumer.KafkaConsumer.commitSync(KafkaConsumer.java:968)

at org.apache.flume.channel.kafka.KafkaChannel$ConsumerAndRecords.commitOffsets(KafkaChannel.java:684)

at org.apache.flume.channel.kafka.KafkaChannel$KafkaTransaction.doCommit(KafkaChannel.java:567)

at org.apache.flume.channel.BasicTransactionSemantics.commit(BasicTransactionSemantics.java:151)

at com.bwjf.flume.hbase.flume.sink.MultiAsyncHBaseSink.process(MultiAsyncHBaseSink.java:288)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:67)

at org.apache.flume.SinkRunner$PollingRunn