接上一篇在本机用windows 跑kettle迁移,鉴于其中一张表每天增量200W左右,本地迁移速度太慢,加上vpn连接服务器不稳定经常断,kettle并不支持断点续传,决定把windows上的kettle配置放在linux环境上跑。

一:linux安装jdk

参考:https://www.cnblogs.com/nothingonyou/p/11936850.html

二:liunx部署kettle

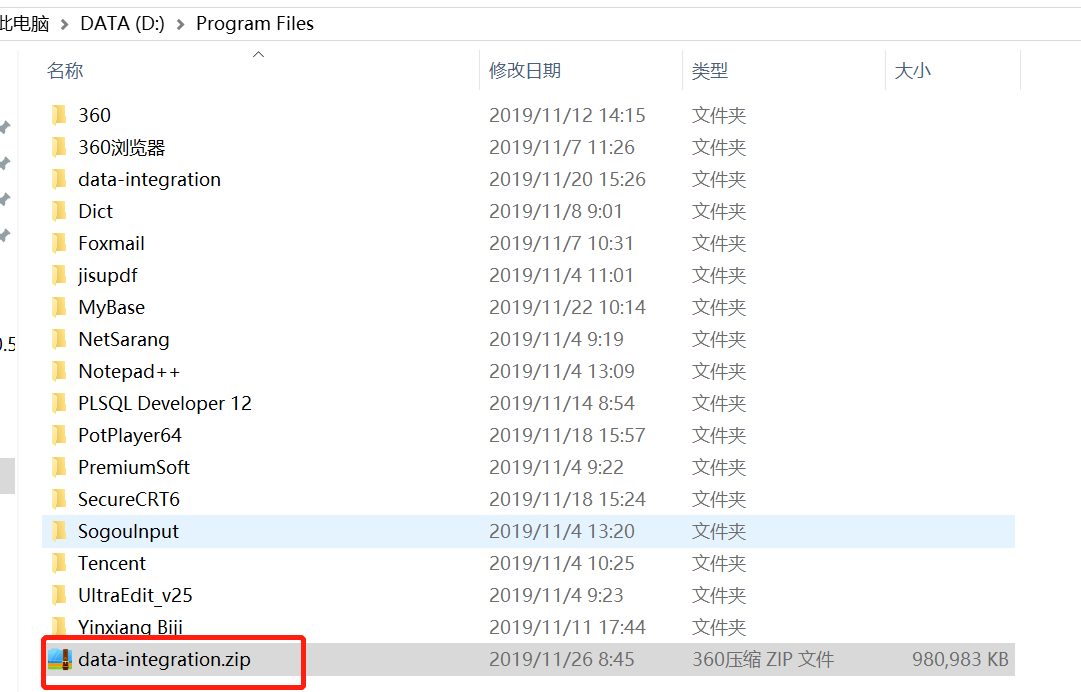

kettle是直接部署在了要转换的mysql服务器上,避免了中间的传输网络消耗,把windows下已解压的文件,压缩后上传至linux相关目录,如图:

1.建立kettle目录,并解压文件

[root@localhost opt]# mkdir kettle

[root@localhost opt]# mv data-integration.zip kettle/

[root@localhost opt]# cd kettle

[root@localhost kettle]# unzip data-integration.zip

[root@localhost kettle]# rm -rf data-integration.zip

[root@localhost kettle]# cd data-integration/

[root@localhost data-integration]# chmod +x *.sh

2.测试是否安装成功

[root@localhost /]# cd /opt/kettle/data-integration/

[root@localhost data-integration]# ./kitchen.sh

出现下面信息,表明安装成功:

[root@localhost data-integration]# ./kitchen.sh

#######################################################################

WARNING: no libwebkitgtk-1.0 detected, some features will be unavailable

Consider installing the package with apt-get or yum.

e.g. 'sudo apt-get install libwebkitgtk-1.0-0'

#######################################################################

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=51200m; support was removed in 8.0

Options:

-rep = Repository name

-user = Repository username

-pass = Repository password

-job = The name of the job to launch

-dir = The directory (dont forget the leading /)

-file = The filename (Job XML) to launch

-level = The logging level (Basic, Detailed, Debug, Rowlevel, Error, Minimal, Nothing)

-logfile = The logging file to write to

-listdir = List the directories in the repository

-listjobs = List the jobs in the specified directory

-listrep = List the available repositories

-norep = Do not log into the repository

-version = show the version, revision and build date

-param = Set a named parameter <NAME>=<VALUE>. For example -param:FILE=customers.csv

-listparam = List information concerning the defined parameters in the specified job.

-export = Exports all linked resources of the specified job. The argument is the name of a ZIP file.

-custom = Set a custom plugin specific option as a String value in the job using <NAME>=<Value>, for example: -custom:COLOR=Red

-maxloglines = The maximum number of log lines that are kept internally by Kettle. Set to 0 to keep all rows (default)

-maxlogtimeout = The maximum age (in minutes) of a log line while being kept internally by Kettle. Set to 0 to keep all rows indefinitely (default)

备注:

kitchen.sh:用来执行job作业

pan.sh:用来执行ktr转换

三:脚本调用kettle程序

1.创建kettle工作目录

[root@localhost opt]# mkdir -p /opt/kettle/kettle_file/job

[root@localhost opt]# mkdir -p /opt/kettle/kettle_file/transition

[root@localhost opt]# mkdir -p /opt/kettle/kettle_sh

[root@localhost opt]# mkdir -p /opt/kettle/kettle_log

2.在/opt/kettle/kettle_sh目录下创建执行文件vim o2m.sh

#!/bin/sh

cd /opt/kettle/data-integration/

export JAVA_HOME=/opt/apps/java/jdk1.8.0_191

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

./pan.sh -file=/opt/kettle/kettle_file/transition/o2m.ktr >>/opt/kettle/kettle_log/o2m_$(date +%Y%m%d).log &

3.修改执行权限

chmod +x o2m.sh

4.配置好windows 的ktr文件,测试成功后上传至linux对应的目录transition下

5.linux上oracle和mysql的驱动目录和windows不一样,放在:

/opt/kettle/data-integration/libswt/linux/x86_64

[root@localhost x86_64]# pwd

/opt/kettle/data-integration/libswt/linux/x86_64

[root@localhost x86_64]# ll

总用量 7444

-rw-r--r--. 1 root root 992808 11月 26 09:03 mysql-connector-java-5.1.41-bin.jar

-rw-r--r--. 1 root root 2001778 11月 26 09:03 mysql-connector-java-6.0.6.jar

-rw-r--r--. 1 root root 2739670 11月 26 09:04 ojdbc6.jar

-rw-r--r--. 1 root root 1880133 5月 16 2017 swt.jar

6.执行sh脚本文件

./o2m.sh &

7.查看log观察转换情况:

[root@localhost kettle_log]# tail -20f 1127_20191128.log

2019/11/28 14:55:26 - 表输出.4 - 完成处理 (I=0, O=510558, R=510558, W=510558, U=0, E=0)

2019/11/28 14:55:26 - 表输出.0 - 完成处理 (I=0, O=510558, R=510558, W=510558, U=0, E=0)

2019/11/28 14:55:26 - 表输出.5 - 完成处理 (I=0, O=510558, R=510558, W=510558, U=0, E=0)

2019/11/28 14:55:26 - 表输出.1 - 完成处理 (I=0, O=510558, R=510558, W=510558, U=0, E=0)

2019/11/28 14:55:26 - 表输出.2 - 完成处理 (I=0, O=510558, R=510558, W=510558, U=0, E=0)

2019/11/28 14:55:26 - 表输出.3 - 完成处理 (I=0, O=510558, R=510558, W=510558, U=0, E=0)

2019/11/28 14:55:26 - 表输出.7 - 完成处理 (I=0, O=510558, R=510558, W=510558, U=0, E=0)

2019/11/28 14:55:26 - Pan - 完成!

2019/11/28 14:55:26 - Pan - 开始=2019/11/28 14:52:41.492, 停止=2019/11/28 14:55:26.329

2019/11/28 14:55:26 - Pan - Processing ended after 2 minutes and 44 seconds (164 seconds total).

2019/11/28 14:55:26 - 2 -

2019/11/28 14:55:26 - 2 - 进程 表输入.0 成功结束, 处理了 4084464 行. ( 24905 行/秒)

2019/11/28 14:55:26 - 2 - 进程 表输出.0 成功结束, 处理了 510558 行. ( 3113 行/秒)

2019/11/28 14:55:26 - 2 - 进程 表输出.1 成功结束, 处理了 510558 行. ( 3113 行/秒)

2019/11/28 14:55:26 - 2 - 进程 表输出.2 成功结束, 处理了 510558 行. ( 3113 行/秒)

2019/11/28 14:55:26 - 2 - 进程 表输出.3 成功结束, 处理了 510558 行. ( 3113 行/秒)

2019/11/28 14:55:26 - 2 - 进程 表输出.4 成功结束, 处理了 510558 行. ( 3113 行/秒)

2019/11/28 14:55:26 - 2 - 进程 表输出.5 成功结束, 处理了 510558 行. ( 3113 行/秒)

2019/11/28 14:55:26 - 2 - 进程 表输出.6 成功结束, 处理了 510558 行. ( 3113 行/秒)

2019/11/28 14:55:26 - 2 - 进程 表输出.7 成功结束, 处理了 510558 行. ( 3113 行/秒)

可以看到,400W+的数据只用了3分钟不到便完成了转换,速度得到了大大提升。