- Startup failure

Error output: Starting namenodes on [master] master: Warning: Permanently added 'master,' (ECDSA) to the list of known hosts. master: ERROR: JAVA_HOME is not set and could not be found. Starting datanodes localhost: ERROR: JAVA_HOME is not set and could not be found. Starting secondary namenodes [master] master: ERROR: JAVA_HOME is not set and could not be found.

Solution: add a JAVA_HOME setting to {HADOOP_HOME}/etc/hadoop/hadoop-env.sh.

- Files cannot be written when accessing HDFS from IDEA, because the DataNode host is misconfigured and unreachable

[Thread-5] INFO org.apache.hadoop.hdfs.DataStreamer - Exception in createBlockOutputStream blk_1073741830_1006 java.net.ConnectException: Connection timed out: no further information at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717) at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206) at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:533) at org.apache.hadoop.hdfs.DataStreamer.createSocketForPipeline(DataStreamer.java:253) at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1725) at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1679) at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

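Cause: when creating a file, the client first contacts the NameNode, which creates the file's metadata and its entry in the file tree. At this stage the client is connecting to the NameNode's IP. Once the NameNode has created the file, it returns the DataNode that will store the data, but the IP in that node information is an internal IP on the same network as the NameNode. The client cannot reach that address, so it cannot write the file.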

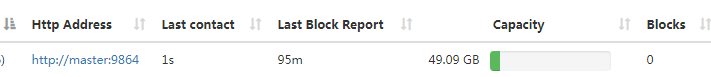

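Solution: set dfs.client.use.datanode.hostname to true, either in the client code with conf.set("dfs.client.use.datanode.hostname","true"); or in etc/hadoop/hdfs-site.xml on the server side. This setting makes the client connect to DataNodes by hostname instead of IP. With it enabled, the address returned by the server is the HttpAddress shown for the corresponding DataNode in the NameNode console. The client machine must be able to resolve that hostname to an address it can reach, for example through an entry in its hosts file.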
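Whichever address the NameNode hands back, the client still has to resolve it and open a TCP connection to the DataNode. The following is a minimal sketch of a reachability check that can be run on the client machine, using only the Java standard library. The class name `DataNodeReachability`, the default host `master`, and the port `9866` are assumptions for illustration; substitute the DataNode address shown in your own NameNode console.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class DataNodeReachability {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean canConnect(String host, int port, int timeoutMillis) {
        // The hostname is resolved when the address is constructed; if it cannot
        // be resolved, the address stays unresolved and connect() throws.
        InetSocketAddress address = new InetSocketAddress(host, port);
        try (Socket socket = new Socket()) {
            socket.connect(address, timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers connection timeouts, refused connections, and unknown hosts.
            return false;
        }
    }

    public static void main(String[] args) {
        // Hypothetical defaults: replace with the DataNode host and port
        // reported by your NameNode.
        String host = args.length > 0 ? args[0] : "master";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9866;
        boolean ok = canConnect(host, port, 3000);
        System.out.println(host + ":" + port + " reachable = " + ok);
    }
}
```

If the check fails for the internal IP but succeeds for the hostname, that matches the situation described above, and connecting by hostname is likely to help.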

- TODO

Problems encountered while using HDFS


Reposted from www.cnblogs.com/cazio/p/11899099.html
