Implementing sorting

(1) Sort by price

Approach 1

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

object SortApp01 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"))

products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

(name, price, amount)

}).sortBy(-_._2).printInfo()

sc.stop()

}

}

Output:

(iphone11,7000.0,1000)

(皮鞭,20.0,10)

(蜡烛,20.0,100)

(扑克牌,5.0,2000)

-------------------------

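One thing to watch out for here:

The order you see when you run this may look wrong. sortBy does sort globally, but with several partitions the rows can be printed out of order, so when testing you can set the number of partitions to 1. Note also that 皮鞭 and 蜡烛 have the same price, so this sort key alone does not fix their relative order.

The result above can of course be sorted on more than one field:

when the prices are equal, sort by the amount in stock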

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

object SortApp01 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"),1)

products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

(name, price, amount)

}).sortBy(x=>(-x._2,-x._3)).printInfo()

sc.stop()

}

}

Result:

(iphone11,7000.0,1000)

(蜡烛,20.0,100)

(皮鞭,20.0,10)

(扑克牌,5.0,2000)

-------------------------
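
Negating the fields only works for numeric keys. As a minimal sketch (plain Scala collections rather than an RDD; the object name TupleSortSketch is made up for illustration), the same descending order can also be obtained by reversing the tuple Ordering:

```scala
object TupleSortSketch {
  // Same sample records as above: "name price amount".
  val lines = List("皮鞭 20 10", "蜡烛 20 100", "扑克牌 5 2000", "iphone11 7000 1000")

  def parse(line: String): (String, Double, Int) = {
    val splits = line.split(" ")
    (splits(0), splits(1).toDouble, splits(2).toInt)
  }

  // Negation trick from the text: price descending, then amount descending.
  val byNegation: List[(String, Double, Int)] =
    lines.map(parse).sortBy(x => (-x._2, -x._3))

  // Same order, expressed by reversing the Ordering of the key instead of negating it.
  val byReverse: List[(String, Double, Int)] =
    lines.map(parse).sortBy(x => (x._2, x._3))(Ordering[(Double, Int)].reverse)

  def main(args: Array[String]): Unit = byReverse.foreach(println)
}
```

On an RDD, sortBy also takes an `ascending` parameter, so `sortBy(x => (x._2, x._3), ascending = false)` should give the same descending order there.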

The approach above uses a Tuple. In production you would normally wrap the data in a class instead.

Approach 2

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

object SortApp02 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"),1)

products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

new Products(name,price,amount)

}).sortBy(x=>x).printInfo()

sc.stop()

}

}

It fails to compile right away:

Error:(21, 18) No implicit Ordering defined for com.ruozedata.spark.spark02.Products.

}).sortBy(x=>x).printInfo()

Error:(21, 18) not enough arguments for method sortBy: (implicit ord: Ordering[com.ruozedata.spark.spark02.Products], implicit ctag: scala.reflect.ClassTag[com.ruozedata.spark.spark02.Products])org.apache.spark.rdd.RDD[com.ruozedata.spark.spark02.Products].

Unspecified value parameters ord, ctag.

}).sortBy(x=>x).printInfo()

What is wrong with writing it this way? Look at the source of sortBy:

/**

* Return this RDD sorted by the given key function.

*/

def sortBy[K](

f: (T) => K,

ascending: Boolean = true,

numPartitions: Int = this.partitions.length)

(implicit ord: Ordering[K], ctag: ClassTag[K]): RDD[T] = withScope {

this.keyBy[K](f)

.sortByKey(ascending, numPartitions)

.values

}

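Note:

1. sortBy takes an implicit parameter of type Ordering[K]. We passed x => x, so K is Products,

and the compiler cannot find any ordering rule for Products.

Hence the error: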

Error:(21, 18) not enough arguments for method sortBy: (implicit ord: Ordering[com.ruozedata.spark.spark02.Products], implicit ctag: scala.reflect.ClassTag[com.ruozedata.spark.spark02.Products])org.apache.spark.rdd.RDD[com.ruozedata.spark.spark02.Products].

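So the message tells us two things:

1. not enough arguments: the implicit arguments could not be filled in

2. (implicit ord: Ordering[com.ruozedata.spark.spark02.Products]): what is missing is an Ordering for the Products type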
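
To make the role of the implicit parameter concrete, here is a small sketch on a plain List. The helper sortLike is a hypothetical stand-in that only copies the shape of sortBy's signature, and Item is an illustrative class, not part of the original code:

```scala
object ImplicitOrderingSketch {
  // Hypothetical stand-in with the same shape as RDD.sortBy, but over a plain List.
  def sortLike[T, K](data: List[T])(f: T => K)(implicit ord: Ordering[K]): List[T] =
    data.sortBy(f)(ord)

  // A plain class: the standard library provides no Ordering for it.
  class Item(val name: String, val amount: Int)

  val items = List(new Item("b", 100), new Item("a", 10), new Item("c", 2000))

  // Compiles: the key is an Int, and Ordering[Int] comes with the standard library.
  val byAmount: List[String] = sortLike(items)(_.amount).map(_.name)

  // Would not compile: the key is an Item and no Ordering[Item] is in scope.
  // val broken = sortLike(items)(x => x)

  // Compiles once an Ordering[Item] exists; here it is passed explicitly.
  val itemOrdering: Ordering[Item] = Ordering.by[Item, Int](_.amount)
  val byItem: List[String] = sortLike(items)(x => x)(itemOrdering).map(_.name)
}
```

The tuple version compiled earlier for the same reason: an Ordering for tuples of Double and Int is already available, while one for a hand-written class is not.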

package com.ruozedata.spark.spark02

class Products(val name:String , val price:Double,val amount:Int)

extends Ordered[Products]{

override def compare(that: Products): Int = {

this.amount - that.amount

}

}

After this change, try again:

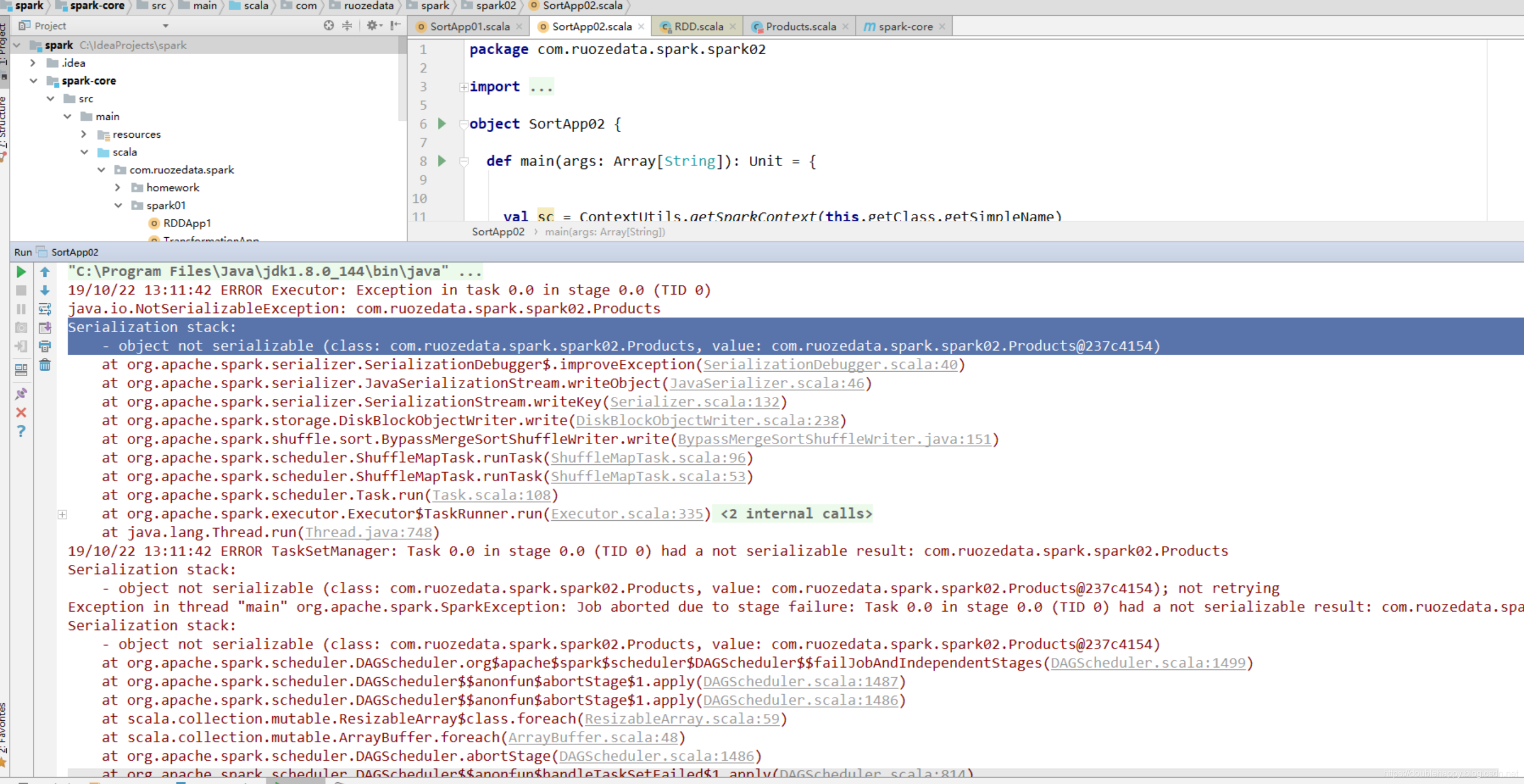

Another error, this time at runtime:

Serialization stack:

- object not serializable (class: com.ruozedata.spark.spark02.Products, value: com.ruozedata.spark.spark02.Products@237c4154)

class Products(val name:String , val price:Double,val amount:Int)

extends Ordered[Products] with Serializable{

override def compare(that: Products): Int = {

this.amount - that.amount

}

}
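
Sorting shuffles the records, so Spark has to serialize them, and its default serializer is Java serialization. The sketch below reproduces the same check outside Spark. The class names and the canSerialize helper are invented for illustration:

```scala
import java.io.{ByteArrayOutputStream, NotSerializableException, ObjectOutputStream}

object SerializationSketch {
  // Same fields, with and without the Serializable marker.
  class PlainProduct(val name: String, val amount: Int)
  class SerializableProduct(val name: String, val amount: Int) extends Serializable

  // Returns true if Java serialization accepts the object, false if it rejects it.
  def canSerialize(obj: AnyRef): Boolean = {
    val out = new ObjectOutputStream(new ByteArrayOutputStream())
    try {
      out.writeObject(obj)
      true
    } catch {
      case _: NotSerializableException => false
    } finally out.close()
  }

  def main(args: Array[String]): Unit = {
    println(canSerialize(new PlainProduct("蜡烛", 100)))
    println(canSerialize(new SerializableProduct("蜡烛", 100)))
  }
}
```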

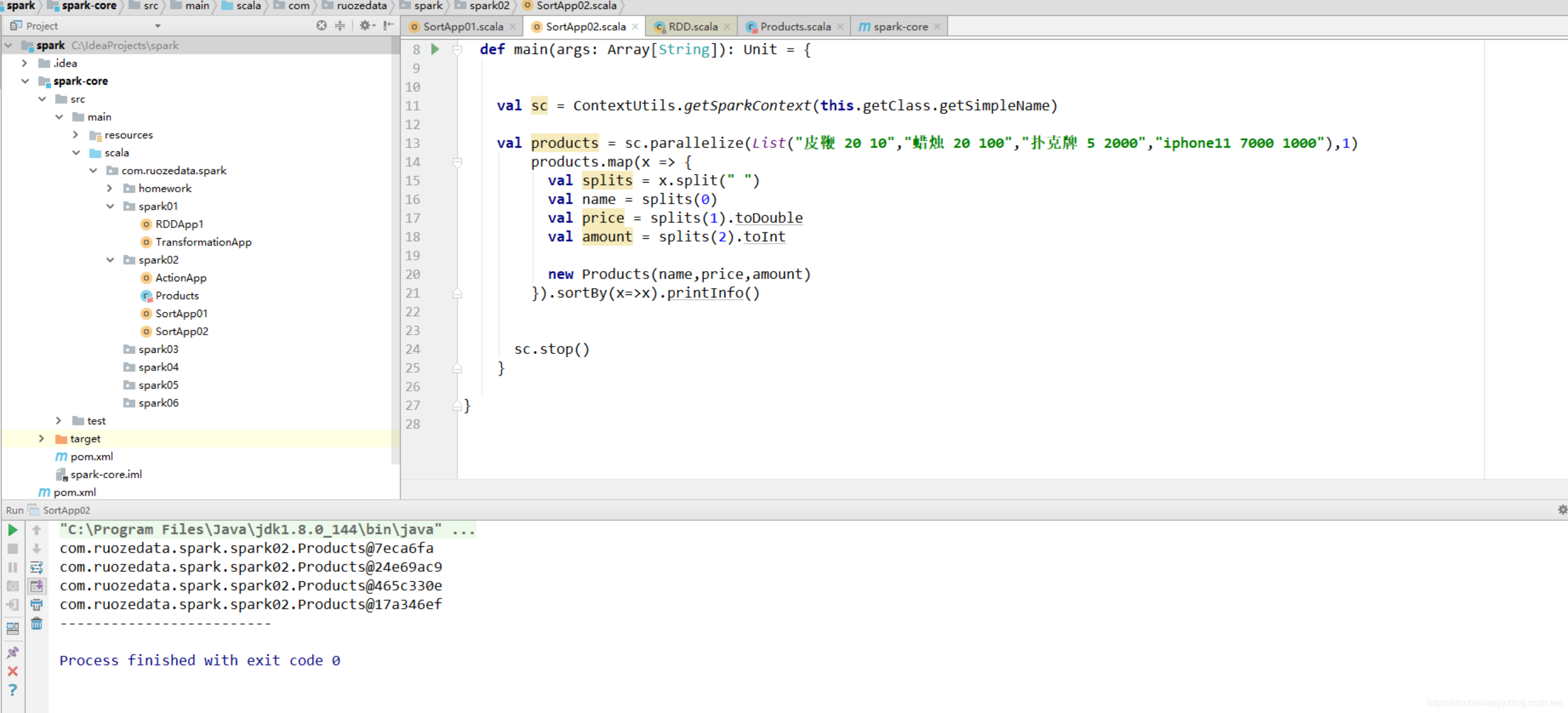

After the change:

That works now. But how do we get readable output?

Overriding toString is enough.

class Products(val name:String , val price:Double,val amount:Int)

extends Ordered[Products] with Serializable{

override def compare(that: Products): Int = {

this.amount - that.amount

}

override def toString: String = name + "\t" + price + "\t" +amount

}
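
A side note on compare: subtracting the two amounts is fine for non-negative stock counts, but it can overflow for arbitrary Int values. A minimal sketch of the difference, with hypothetical helper names:

```scala
object CompareSketch {
  // Subtraction-based comparison, as used in the class above.
  def bySubtraction(a: Int, b: Int): Int = a - b

  // Overflow-safe comparison from the Java standard library.
  def byCompare(a: Int, b: Int): Int = Integer.compare(a, b)

  def main(args: Array[String]): Unit = {
    // Int.MinValue - 1 wraps around to a positive number, so the sign is wrong.
    println(bySubtraction(Int.MinValue, 1))
    println(byCompare(Int.MinValue, 1))
  }
}
```

If the field could ever be negative or very large, writing compare with Integer.compare (or `this.amount.compare(that.amount)`) avoids the problem.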

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

object SortApp02 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"),1)

products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

new Products(name,price,amount)

}).sortBy(x=>x).printInfo()

sc.stop()

}

}

Result:

皮鞭 20.0 10

蜡烛 20.0 100

iphone11 7000.0 1000

扑克牌 5.0 2000

-------------------------

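Avoid this at work: with a hand-written class you also have to take care of serialization yourself, which is tedious.

Approach 3: case class

This is the one used most in production. A case class is already Serializable and comes with a readable toString.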

package com.ruozedata.spark.spark02

case class Products02( name:String, price:Double, amount:Int)

extends Ordered[Products02] {

override def compare(that: Products02): Int = {

this.amount - that.amount

}

}

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

object SortApp02 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"),1)

products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

// new Products(name,price,amount)

Products02(name,price,amount)

}).sortBy(x=>x).printInfo()

sc.stop()

}

}

Result:

Products02(皮鞭,20.0,10)

Products02(蜡烛,20.0,100)

Products02(iphone11,7000.0,1000)

Products02(扑克牌,5.0,2000)

-------------------------

So what is the difference between a class and a case class? See the Scala notes.
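
For the purposes of this section, the differences that matter can be checked in a few lines. This is a sketch with made-up class names, not a full comparison:

```scala
object CaseClassSketch {
  class PlainProduct(val name: String, val price: Double, val amount: Int)
  case class CaseProduct(name: String, price: Double, amount: Int)

  // Runtime check for the Serializable marker interface.
  def isSerializable(x: Any): Boolean = x.isInstanceOf[java.io.Serializable]

  val plain = new PlainProduct("蜡烛", 20.0, 100)
  val cased = CaseProduct("蜡烛", 20.0, 100) // no `new` needed

  // A case class is Serializable out of the box; a plain class is not.
  val caseIsSerializable: Boolean = isSerializable(cased)
  val plainIsSerializable: Boolean = isSerializable(plain)

  // A case class gets a generated toString and structural equality.
  val caseText: String = cased.toString
  val sameValue: Boolean = cased == CaseProduct("蜡烛", 20.0, 100)
}
```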

**Approach 4: using an implicit conversion**

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

object SortApp03 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"),1)

products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

new Products03(name,price,amount)

}).sortBy(x=>x).printInfo()

sc.stop()

}

}

class Products03( name:String, price:Double, amount:Int)

Suppose Products03 must not be changed to implement Ordered. How can we still sort it?

Products03 is not sortable by default ==implicit==> sortable

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

object SortApp03 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"),1)

products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

new Products03(name,price,amount)

}).sortBy(x=>x).printInfo()

/**

* Products03 is not sortable by default ====implicit==> sortable

*/

implicit def product2Ordered(products: Products03):Ordered[Products03] = new Ordered[Products03]{

override def compare(that: Products03): Int = {

products.amount - that.amount

}

}

sc.stop()

}

}

class Products03( val name:String, val price:Double, val amount:Int) extends Serializable {

override def toString: String = name + "\t" + price + "\t" + amount

}
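
The same mechanism can be seen without Spark. In the sketch below (plain collections, illustrative names), Item has no comparison methods of its own, yet `_ < _` compiles because the compiler inserts the implicit conversion to Ordered:

```scala
import scala.language.implicitConversions

object ImplicitViewSketch {
  // Stand-in for a class that we are not allowed to make Ordered.
  class Item(val name: String, val amount: Int)

  // Implicit conversion: wraps an Item in an Ordered[Item] whenever ordering methods are needed.
  implicit def itemToOrdered(item: Item): Ordered[Item] = new Ordered[Item] {
    override def compare(that: Item): Int = Integer.compare(item.amount, that.amount)
  }

  val items = List(new Item("b", 100), new Item("c", 2000), new Item("a", 10))

  // `<` is not defined on Item; the compiler rewrites it to itemToOrdered(x) < y.
  val sortedNames: List[String] = items.sortWith(_ < _).map(_.name)

  def main(args: Array[String]): Unit = sortedNames.foreach(println)
}
```

In the Spark program above, sortBy asks for an Ordering rather than calling `<` directly. As far as I understand it, on Scala 2 the standard library derives that Ordering from the same kind of conversion, which is why defining the implicit def is enough.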

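Approach 5: the fancy one

Being able to read it is enough; you do not need to master it.

Under the hood this is implicits again, this time an implicit value.

The idea:

(implicit ord: Ordering[com.ruozedata.spark.spark02.Products]): sortBy needs an Ordering for the record type

Since that is what is missing, supply it implicitly.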

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

import org.apache.spark.rdd.RDD

object SortApp04 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"),1)

val product = products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

(name, price, amount)

})

/**

* Ordering on

*

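* -x._2, -x._3: the sort key (price descending, then amount descending)

* (Double,Int): the type returned by the key function, i.e. the type that is actually compared

* (String,Double,Int): the type of the records being sorted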

*/

implicit val ord = Ordering[(Double,Int)].on[(String,Double,Int)](x=>(-x._2, -x._3))

product.sortBy(x=>x).printInfo()

sc.stop()

}

}

Result:

(iphone11,7000.0,1000)

(蜡烛,20.0,100)

(皮鞭,20.0,10)

(扑克牌,5.0,2000)

-------------------------

package com.ruozedata.spark.spark02

import com.ruozedata.spark.homework.utils.ContextUtils

import com.ruozedata.spark.homework.utils.ImplicitAspect._

import org.apache.spark.rdd.RDD

object SortApp04 {

def main(args: Array[String]): Unit = {

val sc = ContextUtils.getSparkContext(this.getClass.getSimpleName)

val products = sc.parallelize(List("皮鞭 20 10","蜡烛 20 100","扑克牌 5 2000","iphone11 7000 1000"),1)

val product = products.map(x => {

val splits = x.split(" ")

val name = splits(0)

val price = splits(1).toDouble

val amount = splits(2).toInt

Products02(name, price, amount)

})

/**

* Ordering on

*

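* -x.price, -x.amount: the sort key (price descending, then amount descending)

* (Double,Int): the type returned by the key function, i.e. the type that is actually compared

* Products02: the type of the records being sorted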

*/

implicit val ord = Ordering[(Double,Int)].on[Products02](x=>(-x.price, -x.amount))

product.sortBy(x=>x).printInfo()

sc.stop()

}

}
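
To see what `Ordering[...].on[...]` builds, here is a sketch on a plain List with an illustrative case class Goods. It also shows Ordering.by, which to my knowledge produces an equivalent Ordering from the key function alone:

```scala
object OrderingOnSketch {
  case class Goods(name: String, price: Double, amount: Int)

  val goods = List(
    Goods("皮鞭", 20.0, 10),
    Goods("蜡烛", 20.0, 100),
    Goods("扑克牌", 5.0, 2000),
    Goods("iphone11", 7000.0, 1000)
  )

  // Start from the Ordering of the key type and adapt it to Goods via the key function.
  val viaOn: Ordering[Goods] =
    Ordering[(Double, Int)].on[Goods](g => (-g.price, -g.amount))

  // Same rule, built directly from the key function.
  val viaBy: Ordering[Goods] =
    Ordering.by[Goods, (Double, Int)](g => (-g.price, -g.amount))

  // The Ordering is passed explicitly here; marking it implicit lets the compiler pass it.
  val sortedOn: List[String] = goods.sorted(viaOn).map(_.name)
  val sortedBy: List[String] = goods.sorted(viaBy).map(_.name)
}
```

Passing the Ordering explicitly, as in the last two lines, is an alternative when an implicit val in scope feels too hidden.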

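Summary:

The last two approaches both rely on implicits:

one enriches the class through an implicit conversion

the other supplies an implicit parameter

Implicits sound intimidating, but there is not much to them.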