This article walks through the kubelet startup flow.

Kubernetes version: v1.13

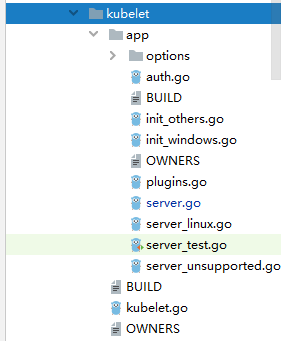

Code directory layout

kubernetes/cmd/kubelet

kubelet startup flow

kubelet startup sequence diagram

Startup flow analysis

1. The kubelet entry point main() (cmd/kubelet/kubelet.go)

func main() {

rand.Seed(time.Now().UnixNano())

command := app.NewKubeletCommand(server.SetupSignalHandler())

logs.InitLogs()

defer logs.FlushLogs()

if err := command.Execute(); err != nil {

fmt.Fprintf(os.Stderr, "%v\n", err)

os.Exit(1)

}

}

2. Initialize the kubelet configuration (cmd/kubelet/app/server.go)

NewKubeletCommand() builds the default flags and configuration, loads parameters from the config file, and validates them.

// NewKubeletCommand creates a *cobra.Command object with default parameters

func NewKubeletCommand(stopCh <-chan struct{}) *cobra.Command {

cleanFlagSet := pflag.NewFlagSet(componentKubelet, pflag.ContinueOnError)

cleanFlagSet.SetNormalizeFunc(flag.WordSepNormalizeFunc)

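// The kubelet configuration has two parts:

// KubeletFlags: settings that cannot be changed while the kubelet is running, or that cannot be shared between Nodes in the cluster.

// KubeletConfiguration: settings that can be shared between Nodes in the cluster and can be configured dynamically.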

kubeletFlags := options.NewKubeletFlags()

kubeletConfig, err := options.NewKubeletConfiguration()

...

cmd := &cobra.Command{

...

Run: func(cmd *cobra.Command, args []string) {

// Load the kubelet config file

if configFile := kubeletFlags.KubeletConfigFile; len(configFile) > 0 {

kubeletConfig, err = loadConfigFile(configFile)

if err != nil {

glog.Fatal(err)

}

...

}

// Validate the kubelet configuration

if err := kubeletconfigvalidation.ValidateKubeletConfiguration(kubeletConfig); err != nil {

glog.Fatal(err)

}

...

// Initialize kubeletDeps here

kubeletDeps, err := UnsecuredDependencies(kubeletServer)

if err != nil {

glog.Fatal(err)

}

...

// Start the kubelet

if err := Run(kubeletServer, kubeletDeps, stopCh); err != nil {

glog.Fatal(err)

}

},

}

...

return cmd

}
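The order NewKubeletCommand follows (start from defaults, overlay the config file, then validate) can be illustrated with a minimal, stdlib-only sketch. Everything in it is hypothetical: kubeletConfig, newDefaultConfig, loadConfig and validate only mimic the roles of options.NewKubeletConfiguration, loadConfigFile and ValidateKubeletConfiguration, use JSON for brevity, and leave out most of what the real code does.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// kubeletConfig is a hypothetical, heavily trimmed stand-in for
// KubeletConfiguration; only two fields are modeled.
type kubeletConfig struct {
	Port        int32 `json:"port"`
	HealthzPort int32 `json:"healthzPort"`
}

// newDefaultConfig plays the role of options.NewKubeletConfiguration():
// it returns a configuration with defaults already filled in.
func newDefaultConfig() kubeletConfig {
	return kubeletConfig{Port: 10250, HealthzPort: 10248}
}

// loadConfig overlays a config file's contents onto the defaults.
// json.Unmarshal only sets keys present in the input, so missing
// fields keep their default values.
func loadConfig(data []byte) (kubeletConfig, error) {
	cfg := newDefaultConfig()
	if len(data) > 0 {
		if err := json.Unmarshal(data, &cfg); err != nil {
			return kubeletConfig{}, fmt.Errorf("parse config file: %w", err)
		}
	}
	return cfg, nil
}

// validate stands in for ValidateKubeletConfiguration with a single
// illustrative rule.
func validate(cfg kubeletConfig) error {
	if cfg.Port < 1 || cfg.Port > 65535 {
		return fmt.Errorf("invalid port %d: must be between 1 and 65535", cfg.Port)
	}
	return nil
}

func main() {
	cfg, err := loadConfig([]byte(`{"port": 11250}`))
	if err != nil {
		panic(err)
	}
	if err := validate(cfg); err != nil {
		panic(err)
	}
	fmt.Println(cfg.Port, cfg.HealthzPort) // 11250 10248
}
```

Because the file is decoded into an already-defaulted struct, a field absent from the file (healthzPort here) silently keeps its default.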

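kubeletDeps holds the objects the kubelet needs at runtime. It exists for dependency injection: the components the kubelet depends on are passed in as parameters, so that callers can control the kubelet's behavior. They include, among others, monitoring (cadvisor) and cgroup management (containerManager).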
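The injection pattern, including the "only fill in what is still nil" defaulting that run() applies to kubeDeps, can be sketched in plain Go. The interface and types below (machineInfoProvider, dependencies, hostCAdvisor, fakeCAdvisor, fillDefaults) are hypothetical simplifications, not the real kubelet.Dependencies or cadvisor.Interface.

```go
package main

import (
	"fmt"
	"runtime"
)

// machineInfoProvider is a hypothetical one-method slice of the
// cAdvisor interface the real kubelet depends on.
type machineInfoProvider interface {
	NumCores() int
}

// dependencies is a simplified stand-in for kubelet.Dependencies.
type dependencies struct {
	CAdvisor machineInfoProvider
}

// hostCAdvisor is a hypothetical default that asks the Go runtime
// instead of cAdvisor.
type hostCAdvisor struct{}

func (hostCAdvisor) NumCores() int { return runtime.NumCPU() }

// fakeCAdvisor returns fixed data, the kind of object a test injects.
type fakeCAdvisor struct{ cores int }

func (f fakeCAdvisor) NumCores() int { return f.cores }

// fillDefaults mirrors the pattern in run(): a default is created only
// for fields the caller left nil, so injected objects always win.
func fillDefaults(deps *dependencies) {
	if deps.CAdvisor == nil {
		deps.CAdvisor = hostCAdvisor{}
	}
}

func main() {
	deps := &dependencies{CAdvisor: fakeCAdvisor{cores: 4}}
	fillDefaults(deps)
	fmt.Println(deps.CAdvisor.NumCores()) // 4: the injected fake is kept
}
```

This is what makes the kubelet testable: a test can hand in fakes, while a normal start leaves the fields nil and gets the real implementations.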

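NewKubeletCommand() calls Run(), and Run() mainly calls run() to do the preparatory work.

3. Create the objects that communicate with the apiserver (cmd/kubelet/app/server.go)

The main responsibilities of run():

- 1. Create kubeClient and eventClient to communicate with the apiserver, and heartbeatClient to report heartbeat status to the apiserver.

- 2. Set default values for kubeDeps.

- 3. Start the HTTP server listening on the healthz port (10248 by default).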

func run(s *options.KubeletServer, kubeDeps *kubelet.Dependencies, stopCh <-chan struct{}) (err error) {

...

// Determine the kubelet run mode

if standaloneMode {

...

} else if kubeDeps.KubeClient == nil || kubeDeps.EventClient == nil || kubeDeps.HeartbeatClient == nil || kubeDeps.DynamicKubeClient == nil {

...

// Create kubeClient

kubeClient, err = clientset.NewForConfig(clientConfig)

...

// Create eventClient

eventClient, err = v1core.NewForConfig(&eventClientConfig)

...

// heartbeatClient reports node status

heartbeatClient, err = clientset.NewForConfig(&heartbeatClientConfig)

...

}

// Set default values for kubeDeps

if kubeDeps.Auth == nil {

auth, err := BuildAuth(nodeName, kubeDeps.KubeClient, s.KubeletConfiguration)

if err != nil {

return err

}

kubeDeps.Auth = auth

}

if kubeDeps.CAdvisorInterface == nil {

imageFsInfoProvider := cadvisor.NewImageFsInfoProvider(s.ContainerRuntime, s.RemoteRuntimeEndpoint)

kubeDeps.CAdvisorInterface, err = cadvisor.New(imageFsInfoProvider, s.RootDirectory, cadvisor.UsingLegacyCadvisorStats(s.ContainerRuntime, s.RemoteRuntimeEndpoint))

if err != nil {

return err

}

}

}

//

if err := RunKubelet(s, kubeDeps, s.RunOnce); err != nil {

return err

}

...

// Start the HTTP server listening on the healthz port

if s.HealthzPort > 0 {

healthz.DefaultHealthz()

go wait.Until(func() {

err := http.ListenAndServe(net.JoinHostPort(s.HealthzBindAddress, strconv.Itoa(int(s.HealthzPort))), nil)

if err != nil {

glog.Errorf("Starting health server failed: %v", err)

}

}, 5*time.Second, wait.NeverStop)

}

...

}

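The kubelet can obtain pod resources in three ways: first, from files, usually placed under /etc/kubernetes/manifests; second, also from files, but fetched from a URL; third, by watching kube-apiserver. When the kubelet uses only the first two, it is said to run in standalone mode; a standalone kubelet is generally used for debugging certain features.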

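run() then calls RunKubelet() for the remaining steps.

4. Initialize the kubelet's internal modules (cmd/kubelet/app/server.go)

The main responsibilities of RunKubelet():

- 1. Initialize each module of the kubelet and create the kubelet object.

- 2. Start the garbage collection services.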

func RunKubelet(kubeServer *options.KubeletServer, kubeDeps *kubelet.Dependencies, runOnce bool) error {

...

// Initialize the kubelet's internal modules

k, err := CreateAndInitKubelet(&kubeServer.KubeletConfiguration,

kubeDeps,

&kubeServer.ContainerRuntimeOptions,

kubeServer.ContainerRuntime,

kubeServer.RuntimeCgroups,

kubeServer.HostnameOverride,

kubeServer.NodeIP,

kubeServer.ProviderID,

kubeServer.CloudProvider,

kubeServer.CertDirectory,

kubeServer.RootDirectory,

kubeServer.RegisterNode,

kubeServer.RegisterWithTaints,

kubeServer.AllowedUnsafeSysctls,

kubeServer.RemoteRuntimeEndpoint,

kubeServer.RemoteImageEndpoint,

kubeServer.ExperimentalMounterPath,

kubeServer.ExperimentalKernelMemcgNotification,

kubeServer.ExperimentalCheckNodeCapabilitiesBeforeMount,

kubeServer.ExperimentalNodeAllocatableIgnoreEvictionThreshold,

kubeServer.MinimumGCAge,

kubeServer.MaxPerPodContainerCount,

kubeServer.MaxContainerCount,

kubeServer.MasterServiceNamespace,

kubeServer.RegisterSchedulable,

kubeServer.NonMasqueradeCIDR,

kubeServer.KeepTerminatedPodVolumes,

kubeServer.NodeLabels,

kubeServer.SeccompProfileRoot,

kubeServer.BootstrapCheckpointPath,

kubeServer.NodeStatusMaxImages)

if err != nil {

return fmt.Errorf("failed to create kubelet: %v", err)

}

...

if runOnce {

if _, err := k.RunOnce(podCfg.Updates()); err != nil {

return fmt.Errorf("runonce failed: %v", err)

}

glog.Infof("Started kubelet as runonce")

} else {

//

startKubelet(k, podCfg, &kubeServer.KubeletConfiguration, kubeDeps, kubeServer.EnableServer)

glog.Infof("Started kubelet")

}

}

func CreateAndInitKubelet(...){

// NewMainKubelet instantiates a kubelet object and initializes each of the kubelet's internal modules

k, err = kubelet.NewMainKubelet(kubeCfg,

kubeDeps,

crOptions,

containerRuntime,

runtimeCgroups,

hostnameOverride,

nodeIP,

providerID,

cloudProvider,

certDirectory,

rootDirectory,

registerNode,

registerWithTaints,

allowedUnsafeSysctls,

remoteRuntimeEndpoint,

remoteImageEndpoint,

experimentalMounterPath,

experimentalKernelMemcgNotification,

experimentalCheckNodeCapabilitiesBeforeMount,

experimentalNodeAllocatableIgnoreEvictionThreshold,

minimumGCAge,

maxPerPodContainerCount,

maxContainerCount,

masterServiceNamespace,

registerSchedulable,

nonMasqueradeCIDR,

keepTerminatedPodVolumes,

nodeLabels,

seccompProfileRoot,

bootstrapCheckpointPath,

nodeStatusMaxImages)

if err != nil {

return nil, err

}

// Record an event announcing to the apiserver that the kubelet has started

k.BirthCry()

// Start garbage collection

k.StartGarbageCollection()

return k, nil

}

func NewMainKubelet(kubeCfg *kubeletconfiginternal.KubeletConfiguration,...){

...

if kubeDeps.PodConfig == nil {

var err error

// Initialize makePodSourceConfig: watch the pod sources (FILE, URL, api-server) and merge the pod configuration from the different sources into one structure

kubeDeps.PodConfig, err = makePodSourceConfig(kubeCfg, kubeDeps, nodeName, bootstrapCheckpointPath)

if err != nil {

return nil, err

}

}

// kubelet server port, 10250 by default

daemonEndpoints := &v1.NodeDaemonEndpoints{

KubeletEndpoint: v1.DaemonEndpoint{Port: kubeCfg.Port},

}

// Use a reflector to keep the Services obtained through ListWatch continuously synced into serviceIndexer

serviceIndexer := cache.NewIndexer(cache.MetaNamespaceKeyFunc, cache.Indexers{cache.NamespaceIndex: cache.MetaNamespaceIndexFunc})

if kubeDeps.KubeClient != nil {

serviceLW := cache.NewListWatchFromClient(kubeDeps.KubeClient.CoreV1().RESTClient(), "services", metav1.NamespaceAll, fields.Everything())

r := cache.NewReflector(serviceLW, &v1.Service{}, serviceIndexer, 0)

go r.Run(wait.NeverStop)

}

serviceLister := corelisters.NewServiceLister(serviceIndexer)

// Use a reflector to keep this Node's object obtained through ListWatch continuously synced into nodeIndexer

nodeIndexer := cache.NewIndexer(cache.MetaNamespaceKeyFunc, cache.Indexers{})

if kubeDeps.KubeClient != nil {

fieldSelector := fields.Set{api.ObjectNameField: string(nodeName)}.AsSelector()

nodeLW := cache.NewListWatchFromClient(kubeDeps.KubeClient.CoreV1().RESTClient(), "nodes", metav1.NamespaceAll, fieldSelector)

r := cache.NewReflector(nodeLW, &v1.Node{}, nodeIndexer, 0)

go r.Run(wait.NeverStop)

}

nodeInfo := &predicates.CachedNodeInfo{NodeLister: corelisters.NewNodeLister(nodeIndexer)}

...

// Configure the eviction policy used when the node runs low on resources

thresholds, err := eviction.ParseThresholdConfig(enforceNodeAllocatable, kubeCfg.EvictionHard, kubeCfg.EvictionSoft, kubeCfg.EvictionSoftGracePeriod, kubeCfg.EvictionMinimumReclaim)

if err != nil {

return nil, err

}

evictionConfig := eviction.Config{

PressureTransitionPeriod: kubeCfg.EvictionPressureTransitionPeriod.Duration,

MaxPodGracePeriodSeconds: int64(kubeCfg.EvictionMaxPodGracePeriod),

Thresholds: thresholds,

KernelMemcgNotification: experimentalKernelMemcgNotification,

PodCgroupRoot: kubeDeps.ContainerManager.GetPodCgroupRoot(),

}

...

// Container reference manager

containerRefManager := kubecontainer.NewRefManager()

// OOM watcher

oomWatcher := NewOOMWatcher(kubeDeps.CAdvisorInterface, kubeDeps.Recorder)

// Create the Kubelet instance from the configuration and the objects above

klet := &Kubelet{

hostname: hostname,

hostnameOverridden: len(hostnameOverride) > 0,

nodeName: nodeName,

...

}

// Get the current machine's information from cAdvisor

machineInfo, err := klet.cadvisor.MachineInfo()

// Pod manager (adds, deletes, updates pods, etc.)

klet.podManager = kubepod.NewBasicPodManager(kubepod.NewBasicMirrorClient(klet.kubeClient), secretManager, configMapManager, checkpointManager)

// Container runtime manager

runtime, err := kuberuntime.NewKubeGenericRuntimeManager(...)

// PLEG (pod lifecycle event generator)

klet.pleg = pleg.NewGenericPLEG(klet.containerRuntime, plegChannelCapacity, plegRelistPeriod, klet.podCache, clock.RealClock{})

// Create the containerGC object, which performs periodic container cleanup

containerGC, err := kubecontainer.NewContainerGC(klet.containerRuntime, containerGCPolicy, klet.sourcesReady)

// Create imageManager to manage images (including image GC)

imageManager, err := images.NewImageGCManager(klet.containerRuntime, klet.StatsProvider, kubeDeps.Recorder, nodeRef, imageGCPolicy, crOptions.PodSandboxImage)

// statusManager tracks the status of pods on the node and syncs it to the corresponding pods in the apiserver

klet.statusManager = status.NewManager(klet.kubeClient, klet.podManager, klet)

// Probe manager

klet.probeManager = prober.NewManager(...)

// Service account token manager

tokenManager := token.NewManager(kubeDeps.KubeClient)

// Volume manager

klet.volumeManager = volumemanager.NewVolumeManager()

// Inject syncPod() into podWorkers

klet.podWorkers = newPodWorkers(klet.syncPod, kubeDeps.Recorder, klet.workQueue, klet.resyncInterval, backOffPeriod, klet.podCache)

// Eviction manager

evictionManager, evictionAdmitHandler := eviction.NewManager(klet.resourceAnalyzer, evictionConfig, killPodNow(klet.podWorkers, kubeDeps.Recorder), klet.imageManager, klet.containerGC, kubeDeps.Recorder, nodeRef, klet.clock)

...

}

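Finally, RunKubelet calls startKubelet() to carry on.

5. Start the kubelet's internal modules and services (cmd/kubelet/app/server.go)

The main responsibilities of startKubelet():

- 1. Start each kubelet module as a goroutine.

- 2. Start the kubelet HTTP server (plus the read-only server when ReadOnlyPort > 0).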

func startKubelet(k kubelet.Bootstrap, podCfg *config.PodConfig, kubeCfg *kubeletconfiginternal.KubeletConfiguration, kubeDeps *kubelet.Dependencies, enableServer bool) {

go wait.Until(func() {

// Start the kubelet's modules as goroutines

k.Run(podCfg.Updates())

}, 0, wait.NeverStop)

// Start the kubelet HTTP server

if enableServer {

go k.ListenAndServe(net.ParseIP(kubeCfg.Address), uint(kubeCfg.Port), kubeDeps.TLSOptions, kubeDeps.Auth, kubeCfg.EnableDebuggingHandlers, kubeCfg.EnableContentionProfiling)

}

if kubeCfg.ReadOnlyPort > 0 {

go k.ListenAndServeReadOnly(net.ParseIP(kubeCfg.Address), uint(kubeCfg.ReadOnlyPort))

}

}

// Run starts the kubelet reacting to config updates

func (kl *Kubelet) Run(updates <-chan kubetypes.PodUpdate) {

if kl.logServer == nil {

kl.logServer = http.StripPrefix("/logs/", http.FileServer(http.Dir("/var/log/")))

}

if kl.kubeClient == nil {

glog.Warning("No api server defined - no node status update will be sent.")

}

// Start the cloud provider sync manager

if kl.cloudResourceSyncManager != nil {

go kl.cloudResourceSyncManager.Run(wait.NeverStop)

}

if err := kl.initializeModules(); err != nil {

kl.recorder.Eventf(kl.nodeRef, v1.EventTypeWarning, events.KubeletSetupFailed, err.Error())

glog.Fatal(err)

}

// Start volume manager

go kl.volumeManager.Run(kl.sourcesReady, wait.NeverStop)

if kl.kubeClient != nil {

// Start syncing node status immediately, this may set up things the runtime needs to run.

go wait.Until(kl.syncNodeStatus, kl.nodeStatusUpdateFrequency, wait.NeverStop)

go kl.fastStatusUpdateOnce()

// start syncing lease

if utilfeature.DefaultFeatureGate.Enabled(features.NodeLease) {

go kl.nodeLeaseController.Run(wait.NeverStop)

}

}

go wait.Until(kl.updateRuntimeUp, 5*time.Second, wait.NeverStop)

// Start loop to sync iptables util rules

if kl.makeIPTablesUtilChains {

go wait.Until(kl.syncNetworkUtil, 1*time.Minute, wait.NeverStop)

}

// Start a goroutine responsible for killing pods (that are not properly

// handled by pod workers).

go wait.Until(kl.podKiller, 1*time.Second, wait.NeverStop)

// Start component sync loops.

kl.statusManager.Start()

kl.probeManager.Start()

// Start syncing RuntimeClasses if enabled.

if kl.runtimeClassManager != nil {

go kl.runtimeClassManager.Run(wait.NeverStop)

}

// Start the pod lifecycle event generator.

kl.pleg.Start()

kl.syncLoop(updates, kl)

}

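6. The syncLoop main loop

syncLoop is the kubelet's main loop. It watches for pod changes coming from different sources (FILE, URL, API-SERVER) and merges them. When a change arrives, it calls the corresponding function to keep the pod in its desired state.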

func (kl *Kubelet) syncLoop(updates <-chan kubetypes.PodUpdate, handler SyncHandler) {

glog.Info("Starting kubelet main sync loop.")

// syncTicker checks once per second for pod workers that need to be synced

syncTicker := time.NewTicker(time.Second)

defer syncTicker.Stop()

housekeepingTicker := time.NewTicker(housekeepingPeriod)

defer housekeepingTicker.Stop()

plegCh := kl.pleg.Watch()

const (

base = 100 * time.Millisecond

max = 5 * time.Second

factor = 2

)

duration := base

for {

if rs := kl.runtimeState.runtimeErrors(); len(rs) != 0 {

glog.Infof("skipping pod synchronization - %v", rs)

// exponential backoff

time.Sleep(duration)

duration = time.Duration(math.Min(float64(max), factor*float64(duration)))

continue

}

// reset backoff if we have a success

duration = base

kl.syncLoopMonitor.Store(kl.clock.Now())

//

if !kl.syncLoopIteration(updates, handler, syncTicker.C, housekeepingTicker.C, plegCh) {

break

}

kl.syncLoopMonitor.Store(kl.clock.Now())

}

}

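syncLoopIteration() selects over several channels; when a pod changes, it calls the corresponding handler, and the handler distributes the work by calling dispatchWork.

Pod startup flow analysis

Pod startup begins in syncLoopIteration, which syncLoop calls. syncLoopIteration takes five important parameters: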

// Arguments:

// 1. configCh: a channel to read config events from

// 2. handler: the SyncHandler to dispatch pods to

// 3. syncCh: a channel to read periodic sync events from

// 4. houseKeepingCh: a channel to read housekeeping events from

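// 5. plegCh: a channel to read PLEG updates from

1. configCh: the channel that delivers pod configuration; all pod-related events arrive on it;

2. handler: the SyncHandler that processes pods;

3. syncCh: triggers a sync of all pods that are waiting to be synced;

4. houseKeepingCh: triggers pod cleanup;

5. plegCh: delivers PLEG events, which are used to sync pods.

Apart from handler, each parameter is a channel. syncLoopIteration uses a select statement to see which channel has delivered a message and then performs the corresponding action. Pod startup is clearly driven by configCh.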
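The select-driven structure of syncLoopIteration can be reduced to a toy version that blocks on four channels and reports which branch ran. Like the real method, it returns false only when configCh has been closed; instead of calling SyncHandler methods, each branch just returns a label. podUpdate, plegEvent and iteration are hypothetical simplifications.

```go
package main

import (
	"fmt"
	"time"
)

// podUpdate and plegEvent are simplified stand-ins for
// kubetypes.PodUpdate and *pleg.PodLifecycleEvent.
type podUpdate struct{ Op string }
type plegEvent struct{ PodID string }

// iteration blocks until one of the channels is ready and reports which
// branch ran. A nil channel never becomes ready, so callers can pass nil
// for channels they do not use.
func iteration(configCh <-chan podUpdate, syncCh, housekeepingCh <-chan time.Time, plegCh <-chan plegEvent) (string, bool) {
	select {
	case u, open := <-configCh:
		if !open {
			// The update channel was closed: stop the main loop.
			return "config closed", false
		}
		return "config:" + u.Op, true
	case e := <-plegCh:
		return "pleg:" + e.PodID, true
	case <-syncCh:
		return "sync", true
	case <-housekeepingCh:
		return "housekeeping", true
	}
}

func main() {
	configCh := make(chan podUpdate, 1)
	configCh <- podUpdate{Op: "ADD"}
	fmt.Println(iteration(configCh, nil, nil, nil)) // config:ADD true
}
```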

Through configCh, the kubelet receives the operations that occur over a pod's lifecycle:

// PodOperation defines what changes will be made on a pod configuration.

type PodOperation int

const (

// This is the current pod configuration

SET PodOperation = iota

// Pods with the given ids are new to this source

ADD

// Pods with the given ids are gracefully deleted from this source

DELETE

// Pods with the given ids have been removed from this source

REMOVE

// Pods with the given ids have been updated in this source

UPDATE

// Pods with the given ids have unexpected status in this source,

// kubelet should reconcile status with this source

RECONCILE

// Pods with the given ids have been restored from a checkpoint.

RESTORE

// These constants identify the sources of pods

// Updates from a file

FileSource = "file"

// Updates from querying a web page

HTTPSource = "http"

// Updates from Kubernetes API Server

ApiserverSource = "api"

// Updates from all sources

AllSource = "*"

NamespaceDefault = metav1.NamespaceDefault

)

Correspondingly, each operation has its own handler method:

// SyncHandler is an interface implemented by Kubelet, for testability

type SyncHandler interface {

HandlePodAdditions(pods []*v1.Pod)

HandlePodUpdates(pods []*v1.Pod)

HandlePodRemoves(pods []*v1.Pod)

HandlePodReconcile(pods []*v1.Pod)

HandlePodSyncs(pods []*v1.Pod)

HandlePodCleanups() error

}

Of these, the ADD operation corresponds to pod creation, and its handler is HandlePodAdditions.

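HandlePodAdditions mainly performs the following steps:

1. Sort the pods by creation time;

2. Add each pod to podManager (pod management relies on podManager);

3. Handle mirror pods, i.e. the apiserver-side copies of static pods;

4. Dispatch the work through dispatchWork to handle pod creation;

5. Add each pod to probeManager (readiness and liveness probes).

Inside dispatchWork, the key call is kl.podWorkers.UpdatePod, which drives pod creation. UpdatePod looks the pod up in the podUpdates map, whose key is the pod UID and whose value is a channel of UpdatePodOptions structs. The first time a pod is seen, UpdatePod creates that channel and starts a dedicated goroutine running managePodLoop; managePodLoop eventually calls syncPodFn to create the pod, and syncPodFn is the Kubelet's syncPod method in kubernetes/pkg/kubelet/kubelet.go. After all these layers of indirection, syncPod is the method that finally handles pod creation. Its main workflow, as described in its source comment, is: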

// The workflow is:

// * If the pod is being created, record pod worker start latency

// * Call generateAPIPodStatus to prepare an v1.PodStatus for the pod

// * If the pod is being seen as running for the first time, record pod

// start latency

// * Update the status of the pod in the status manager

// * Kill the pod if it should not be running

// * Create a mirror pod if the pod is a static pod, and does not

// already have a mirror pod

// * Create the data directories for the pod if they do not exist

// * Wait for volumes to attach/mount

// * Fetch the pull secrets for the pod

// * Call the container runtime's SyncPod callback

// * Update the traffic shaping for the pod's ingress and egress limits

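//

In short, syncPod:

1. Updates the pod status, via generateAPIPodStatus and statusManager.SetPodStatus;

2. Creates the pod's data directories, via makePodDataDirs;

3. Mounts the pod's volumes, via volumeManager.WaitForAttachAndMount;

4. Fetches the ImagePullSecrets, via getPullSecretsForPod;

5. Creates the containers, via containerRuntime.SyncPod, whose steps are: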

// SyncPod syncs the running pod into the desired pod by executing following steps:

//

// 1. Compute sandbox and container changes.

// 2. Kill pod sandbox if necessary.

// 3. Kill any containers that should not be running.

// 4. Create sandbox if necessary.

// 5. Create init containers.

// 6. Create normal containers.

The method invoked here is func (m *kubeGenericRuntimeManager) SyncPod.

This completes the path from pod startup to pod creation. The events recorded along the way can be followed with kubectl describe pod.

PLEG analysis

PLEG, the PodLifecycleEventGenerator, records the events that occur over a pod's lifecycle. In the kubelet, PLEG is started before the main syncLoop:

func (kl *Kubelet) Run(updates <-chan kubetypes.PodUpdate) {

...

// Start the pod lifecycle event generator.

kl.pleg.Start()

kl.syncLoop(updates, kl)

...

}

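Start launches a periodic task that runs the relist method.

relist compares each pod's previous state with its current state to determine where the pod is in its lifecycle. The core logic is as follows.

For each pod, relist compares the pod's containers and generates events through computeEvents --> generateEvents. generateEvents produces these events: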

1. newState is plegContainerRunning: a ContainerStarted event;

2. newState is plegContainerExited: a ContainerDied event;

3. newState is plegContainerUnknown: a ContainerChanged event;

4. newState is plegContainerNonExistent: check oldState; if it is plegContainerExited, the event is ContainerRemoved, otherwise the events are ContainerDied and ContainerRemoved.

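Once generated, the events are sent one by one to eventChannel, which is the plegCh read by syncLoopIteration.

When syncLoopIteration receives a message on plegCh, it calls HandlePodSyncs, which eventually reaches dispatchWork, the pod-handling method, so that the pod's lifecycle is managed.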

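Garbage collection

The kubelet periodically cleans up unneeded containers and images, performing ContainerGC and ImageGC. During startup, Run calls initializeModules (in kubernetes/pkg/kubelet/kubelet.go), which starts the image manager through imageManager.Start. imageManager.Start performs two main steps:

1. detectImages: tracks the images present on the node and which of them are in use;

2. ListImages: fetches image information and writes it into the imageCache.

The actual reclamation of images and containers is started in CreateAndInitKubelet during startup.

StartGarbageCollection launches two goroutines, one for ContainerGC and one for ImageGC: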

func (kl *Kubelet) StartGarbageCollection() {

loggedContainerGCFailure := false

go wait.Until(func() {

if err := kl.containerGC.GarbageCollect(); err != nil {

klog.Errorf("Container garbage collection failed: %v", err)

kl.recorder.Eventf(kl.nodeRef, v1.EventTypeWarning, events.ContainerGCFailed, err.Error())

loggedContainerGCFailure = true

} else {

var vLevel klog.Level = 4

if loggedContainerGCFailure {

vLevel = 1

loggedContainerGCFailure = false

}

klog.V(vLevel).Infof("Container garbage collection succeeded")

}

}, ContainerGCPeriod, wait.NeverStop)

// when the high threshold is set to 100, stub the image GC manager

if kl.kubeletConfiguration.ImageGCHighThresholdPercent == 100 {

klog.V(2).Infof("ImageGCHighThresholdPercent is set 100, Disable image GC")

return

}

prevImageGCFailed := false

go wait.Until(func() {

if err := kl.imageManager.GarbageCollect(); err != nil {

if prevImageGCFailed {

klog.Errorf("Image garbage collection failed multiple times in a row: %v", err)

// Only create an event for repeated failures

kl.recorder.Eventf(kl.nodeRef, v1.EventTypeWarning, events.ImageGCFailed, err.Error())

} else {

klog.Errorf("Image garbage collection failed once. Stats initialization may not have completed yet: %v", err)

}

prevImageGCFailed = true

} else {

var vLevel klog.Level = 4

if prevImageGCFailed {

vLevel = 1

prevImageGCFailed = false

}

klog.V(vLevel).Infof("Image garbage collection succeeded")

}

}, ImageGCPeriod, wait.NeverStop)

}

Container GC runs every minute (ContainerGCPeriod) and image GC every 5 minutes (ImageGCPeriod); these periodic runs are what reclaim containers and images.

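Container GC removes evictable containers, removes sandboxes, and cleans up the pods' sandbox log directories; the code is the GarbageCollect method in kubernetes/pkg/kubelet/kuberuntime/kuberuntime_gc.go. Image GC deletes unneeded images to free storage space; the code is the GarbageCollect method in kubernetes/pkg/kubelet/images/image_gc_manager.go.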
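How much image GC tries to free is decided by a high/low watermark on disk usage. The sketch assumes the policy configured by ImageGCHighThresholdPercent and ImageGCLowThresholdPercent (85 and 80 by default): below the high mark nothing happens; above it, enough bytes are requested to bring usage back down to the low mark. A high threshold of 100 disables image GC, matching the early return in StartGarbageCollection above. bytesToFree is a hypothetical helper; the real manager then deletes unused images, least recently used first, until that amount is freed.

```go
package main

import "fmt"

// bytesToFree returns how many bytes image GC would try to reclaim on a
// filesystem with the given capacity and available space.
func bytesToFree(capacity, available int64, highPercent, lowPercent int) int64 {
	// A high threshold of 100 disables image GC entirely.
	if highPercent >= 100 || capacity <= 0 {
		return 0
	}
	usagePercent := 100 - int(available*100/capacity)
	if usagePercent < highPercent {
		return 0 // still below the high watermark
	}
	// Free enough to bring usage back down to lowPercent.
	return capacity*int64(100-lowPercent)/100 - available
}

func main() {
	// A 100 GiB disk with 10 GiB free is 90% used, above the 85% mark.
	const gib = int64(1) << 30
	fmt.Println(bytesToFree(100*gib, 10*gib, 85, 80) / gib) // 10
}
```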

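Summary

This article followed the kubelet from loading its configuration, through initializing its internal modules, to starting the kubelet services. The sequence diagram above shows the call relationships between the functions, but how each component works internally and how the components interact remain open questions; later articles will dig into them.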
