Abstract

In this paper, the authors attempt to fight such chaos (fake news) with itself to make automatic rumor detection more robust and effective.

The idea is inspired by the adversarial learning method that originated with Generative Adversarial Networks.

In this approach, a generator is designed to produce uncertain or conflicting voices, complicating the original conversational threads in order to pressure the discriminator to learn stronger rumor-indicative representations from augmented, more challenging examples.

Unlike traditional data-driven approaches to rumor detection, the method can capture low-frequency but stronger non-trivial patterns via such adversarial training.

Existing Method

RNN/CNN

Nevertheless, existing data-driven approaches typically rely on finding indicative responses such as skeptical and disagreeing opinions for detection.

Our seemingly counter-intuitive idea is inspired by Generative Adversarial Networks (GANs) [6, 7], where a discriminative classifier learns to distinguish whether an instance comes from the real world, and a generative model is trained to confuse the discriminator by generating approximately realistic examples.

Why can such a GAN-style method do better in feature learning?

The main contributions of our paper are four-fold:

- the first generative approach for rumor detection using a text-based GAN-style framework

- modeling rumor dissemination as generative information campaigns that produce confusing training examples to challenge the discriminator's detection capacity

- we reinforce our discriminator

- our model is more robust and effective than state-of-the-art baselines

Problem Statement

A claim is commonly represented by a set of posts (i.e., tweets) relevant to the claim, which can be collected via Twitter's search function.

GENERATIVE ADVERSARIAL LEARNING FOR RUMOR DETECTION

Controversial Example Generation

A straightforward way is to twist or complicate the opinions expressed in the original data examples via a handful of rule templates.

We design two generators, one for distorting non-rumor to make it look like a rumor, and the other for 'whitewashing' rumor so that it looks like a non-rumor.

Considering the time-sequence structure of posts in each instance, we use a sequence-to-sequence model for the generative transformation, which is illustrated in Figure.

We use a GRU to store the hidden representation:

The output of the last time step, h_T, from the GRU-RNN encoder is the hidden representation of X_y. Note that the sequence length T is not fixed and can vary across instances.
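The encoder step described above can be sketched as follows. This is a minimal NumPy illustration that assumes the standard GRU update equations (update gate, reset gate, candidate state) without bias terms. The function names `gru_encode` and `init_params`, the weight names, and the dimensions are hypothetical and are not taken from the paper.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_params(d_in, d_h, seed=0):
    """Random weights for a single-layer GRU (hypothetical initialization)."""
    rng = np.random.default_rng(seed)
    # Order: Wz, Uz, Wr, Ur, Wh, Uh (input-to-hidden, then hidden-to-hidden).
    shapes = [(d_h, d_in), (d_h, d_h)] * 3
    return [rng.normal(scale=0.1, size=s) for s in shapes]

def gru_encode(X, params):
    """Run a GRU over X of shape [T, d_in] and return the last state h_T."""
    Wz, Uz, Wr, Ur, Wh, Uh = params
    h = np.zeros(Uz.shape[0])
    for x_t in X:                                  # T may differ per instance
        z = sigmoid(Wz @ x_t + Uz @ h)             # update gate
        r = sigmoid(Wr @ x_t + Ur @ h)             # reset gate
        h_tilde = np.tanh(Wh @ x_t + Uh @ (r * h)) # candidate state
        h = (1.0 - z) * h + z * h_tilde            # interpolate old and new
    return h

params = init_params(d_in=5, d_h=4)
h_short = gru_encode(np.ones((3, 5)), params)  # thread with 3 time steps
h_long = gru_encode(np.ones((7, 5)), params)   # thread with 7 time steps
```

Both calls return a vector of the same size, which is the point of using h_T: instances with different sequence lengths T map to a fixed-size representation.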

GAN-Style Adversarial Learning Model

We use the performance of the discriminator as a reward to guide the generator. The model consists of an adversarial learning module and two reconstruction modules (one for rumor and the other for non-rumor).

Adversarial learning module:

We formulate the adversarial loss as the negative of the discriminator loss based on the generator-augmented training data.

where L_D(·) is the loss between the ground-truth class probability distribution ȳ and the class distribution ŷ predicted by the discriminator given an input instance.

We combine the generated examples and the original ones to augment the training set. We do not want to seriously weaken the useful features in the original example. Thus, the original example X_y is combined with X̃_y for training, as shown in Figure 3.

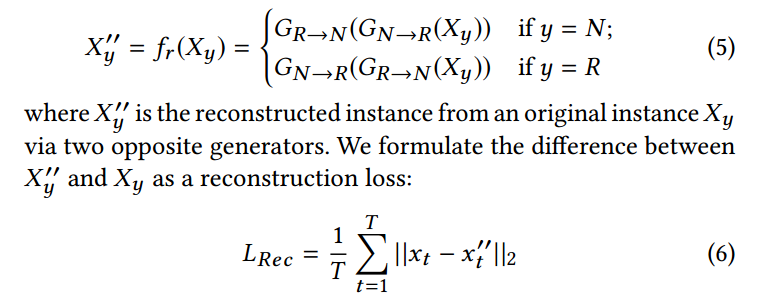
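The adversarial loss over the augmented training set might be computed along the following lines. This sketch makes two assumptions that these notes do not confirm: L_D is taken to be a mean cross-entropy, and each generated example keeps the label of the original it was derived from. The function names are hypothetical.

```python
import numpy as np

def discriminator_loss(y_true, y_pred, eps=1e-12):
    """Assumed form of L_D: mean cross-entropy between one-hot labels
    and predicted class distributions, both of shape [n, n_classes]."""
    return float(-np.mean(np.sum(y_true * np.log(y_pred + eps), axis=1)))

def adversarial_loss(y_true, y_pred_original, y_pred_generated):
    """Negative discriminator loss over original plus generated examples.
    Assumption: generated examples reuse the labels of their originals."""
    y_all = np.concatenate([y_true, y_true])
    p_all = np.concatenate([y_pred_original, y_pred_generated])
    return -discriminator_loss(y_all, p_all)

y = np.array([[1.0, 0.0], [0.0, 1.0]])
p_orig = np.array([[0.9, 0.1], [0.2, 0.8]])   # confident on original threads
p_gen = np.array([[0.5, 0.5], [0.5, 0.5]])    # confused by generated threads
adv_confusing = adversarial_loss(y, p_orig, p_gen)
adv_easy = adversarial_loss(y, p_orig, p_orig)
```

Because the adversarial loss is the negated discriminator loss, generated examples that confuse the discriminator give a lower value (`adv_confusing` is below `adv_easy`), which is the direction a generator minimizing this loss would be pushed in.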

Reconstruction module:

We introduce a reconstruction mechanism to make the generative process reversible. The idea is that the opinionated voices will be reversible through two generators of opposite directions so as to minimize the loss of fidelity of information.
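The reversibility idea can be illustrated with a small sketch. It assumes a mean squared error between an example and its round-trip reconstruction, which these notes do not specify, and it stands in toy linear maps for the two sequence-to-sequence generators. All names here are hypothetical.

```python
import numpy as np

def reconstruction_loss(X, forward_gen, backward_gen):
    """Mean squared error between X and its round trip through two
    generators of opposite directions (assumed distance measure)."""
    X_rec = backward_gen(forward_gen(X))
    return float(np.mean((X_rec - X) ** 2))

# Toy stand-ins for the generators: a linear map and candidate inverses.
A = np.array([[2.0, 0.0], [0.0, 0.5]])
A_inv = np.linalg.inv(A)
X = np.random.default_rng(0).normal(size=(6, 2))

loss_reversible = reconstruction_loss(X, lambda Z: Z @ A, lambda Z: Z @ A_inv)
loss_lossy = reconstruction_loss(X, lambda Z: Z @ A, lambda Z: Z)
```

When the second generator undoes the first, the loss is essentially zero. When it does not, the loss is positive, which penalizes transformations that discard information from the original thread.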

Objective of optimization:

The objective of adversarial learning takes a min-max form.
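One way to write such an objective, consistent with the description of the adversarial loss as the negated discriminator loss, is sketched below. The symbols for the original set, the generated set, the parameter sets Θ_G and Θ_D, and the reconstruction term are assumptions introduced for illustration. These notes do not give the exact formula or any weighting between the terms.

```latex
\begin{align}
\mathcal{L}_{Adv}(\Theta_G, \Theta_D)
  &= -\sum_{(X, \bar{y}) \in \mathcal{D} \cup \tilde{\mathcal{D}}}
     L_D\big(\bar{y}, \hat{y}(X; \Theta_D)\big) \\
\min_{\Theta_G} \max_{\Theta_D} \;
  & \mathcal{L}_{Adv}(\Theta_G, \Theta_D) + \mathcal{L}_{Rec}(\Theta_G)
\end{align}
```

Under this reading, the generators minimize the adversarial loss, which raises the discriminator loss on the augmented data, while the discriminator maximizes it, which lowers its own loss. The reconstruction term depends only on the generators.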

Rumor Discriminator

With the combined data set, the discriminator learns to capture more discriminative features, especially from low-frequency non-trivial patterns.

We build the discriminator based on an RNN rumor detection model.

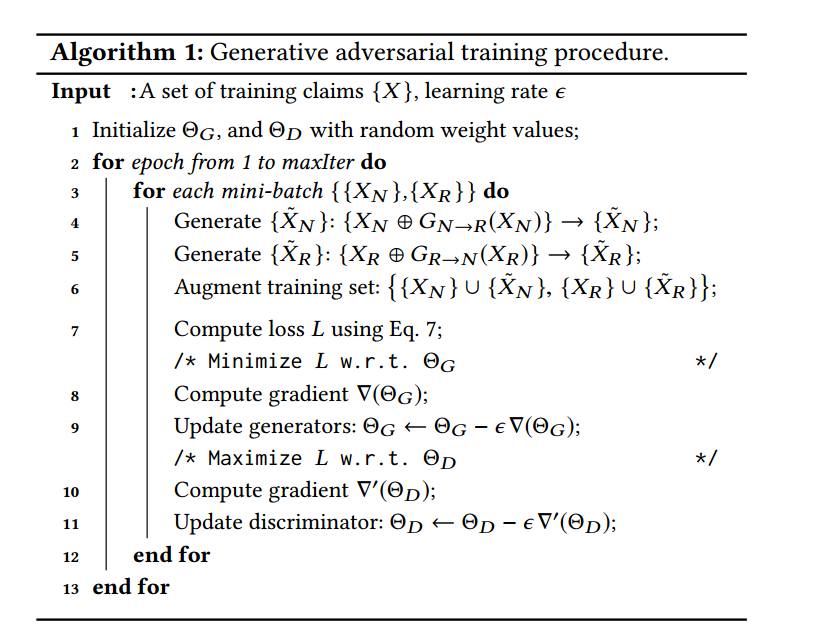

The generators and discriminator are alternately trained using stochastic gradient descent with mini-batches.

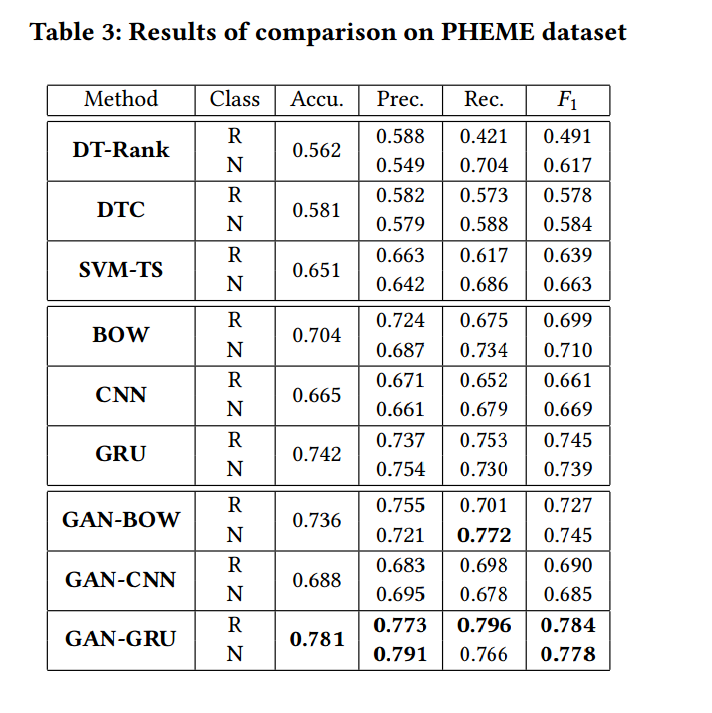
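The alternating mini-batch schedule can be sketched with a deliberately simplified toy. It is not the paper's model: the discriminator here is a logistic regression rather than an RNN, each "generator" is a single additive shift per class rather than a sequence-to-sequence network, and a norm cap (`max_shift`) stands in for the reconstruction constraint. All names and hyperparameters are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_alternating(X, y, epochs=50, batch_size=16, lr=0.1,
                      max_shift=0.5, seed=0):
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w, b = np.zeros(d), 0.0   # toy discriminator: logistic regression
    g = np.zeros((2, d))      # toy generators: one additive shift per class
    for _ in range(epochs):
        order = rng.permutation(n)
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            xb, yb = X[idx], y[idx]
            # Generator step: ascend the discriminator loss on generated data.
            err = sigmoid((xb + g[yb]) @ w + b) - yb  # d(loss)/d(logit)
            for c in (0, 1):
                mask = yb == c
                if mask.any():
                    g[c] += lr * err[mask].mean() * w
                    norm = np.linalg.norm(g[c])
                    if norm > max_shift:              # keep the shift bounded
                        g[c] *= max_shift / norm
            # Discriminator step: descend the loss on original + generated data.
            x_all = np.vstack([xb, xb + g[yb]])
            y_all = np.concatenate([yb, yb])
            err_all = sigmoid(x_all @ w + b) - y_all
            w -= lr * (err_all @ x_all) / len(y_all)
            b -= lr * err_all.mean()
    return w, b, g

rng = np.random.default_rng(1)
X = np.vstack([rng.normal(2.0, 0.5, (40, 2)), rng.normal(-2.0, 0.5, (40, 2))])
y = np.array([1] * 40 + [0] * 40)
w, b, g = train_alternating(X, y)
accuracy = np.mean((sigmoid(X @ w + b) > 0.5) == (y == 1))
```

Each mini-batch first updates the generators against the current discriminator, then updates the discriminator on the original examples together with their freshly generated counterparts, mirroring the alternation described above.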

EXPERIMENTS AND RESULTS

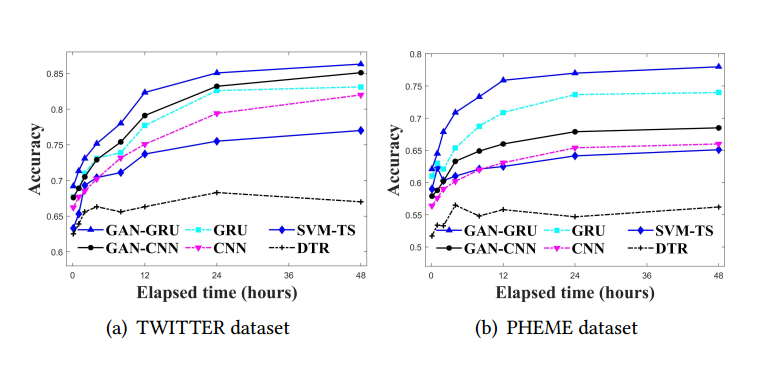

Early Rumor Detection

CONCLUSION AND FUTURE WORK

Our neural-network-based generators create training examples to confuse the rumor discriminator so that the discriminator is forced to learn more powerful features from the augmented training data.