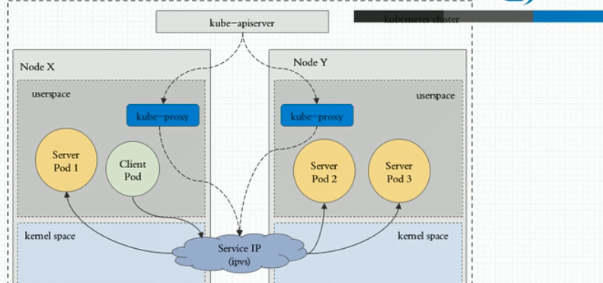

How a Service relates to the other components

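1. On Kubernetes, Pods have a lifecycle: they come and go. To give clients a stable access endpoint, we place a fixed intermediate layer between the clients and the backend Pods, and this layer is called a Service. A Service depends heavily on an add-on deployed on top of k8s, the Kubernetes DNS service. The implementation differs between versions: newer versions use CoreDNS by default, while versions before 1.11 used kube-dns. Service name resolution depends strictly on this DNS add-on, so after deploying k8s we must also deploy CoreDNS or kube-dns.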

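2. To provide networking to clients, k8s relies on third-party solutions. In newer versions at least, any third-party solution that follows the CNI (Container Network Interface) plugin standard can be plugged in. There are many such solutions, for example flannel and canal, which we deployed earlier.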

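3. k8s has three kinds of network addresses: the node network, the pod network, and the cluster network (also called the Service network). The first two are real: their addresses are configured on actual interfaces, whether physical hardware or software-emulated devices. Addresses in the cluster network are called virtual IPs, because they are not configured on any interface and only appear in Service rules.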
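To make the three address spaces concrete, here is a minimal Python sketch that classifies an IP by network. The CIDRs are assumptions for illustration only: 192.168.228.0/24 is guessed from the node address used later in these notes, 10.244.0.0/16 is the usual flannel default, and 10.96.0.0/12 is the usual kubeadm default for Services. Check your own cluster before relying on them.

```python
import ipaddress

# Assumed CIDRs for illustration only; your cluster may use different ranges.
NETWORKS = {
    "node network": ipaddress.ip_network("192.168.228.0/24"),
    "pod network": ipaddress.ip_network("10.244.0.0/16"),
    "service network": ipaddress.ip_network("10.96.0.0/12"),
}


def classify(ip: str) -> str:
    """Return which of the three address spaces an IP falls into."""
    addr = ipaddress.ip_address(ip)
    for name, net in NETWORKS.items():
        if addr in net:
            return name
    return "unknown"


if __name__ == "__main__":
    for ip in ("192.168.228.138", "10.244.1.7", "10.97.97.97"):
        print(ip, "->", classify(ip))
```

Under these assumed ranges, the ClusterIP values used later (10.97.97.97 and 10.99.99.99) both fall inside the Service network.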

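4. So what is a Service? Every node runs a component called kube-proxy, which constantly monitors the API server on the master for changes to Service resources. It does this through watch, a request method built into k8s. Whenever a Service resource changes (including creation), kube-proxy translates the change into rules on the current node that implement the Service, that is, rules that dispatch client requests to specific backend Pods. These rules may be iptables rules or ipvs rules, depending on how the Service is implemented.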
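The watch-then-translate loop can be pictured with a small simulation. This is only a sketch of the idea, not kube-proxy's actual code: the event tuples and the `apply_events` helper are hypothetical, and the "rules" are a plain dictionary instead of iptables or ipvs entries. The event type names ADDED, MODIFIED and DELETED are the ones the Kubernetes watch API uses.

```python
from typing import Dict, Iterable, List, Tuple

# (event type, service name, backend pod addresses); a hypothetical shape
Event = Tuple[str, str, List[str]]


def apply_events(events: Iterable[Event]) -> Dict[str, List[str]]:
    """Fold a stream of watch events into a per-node forwarding table."""
    rules: Dict[str, List[str]] = {}
    for kind, service, backends in events:
        if kind in ("ADDED", "MODIFIED"):
            # (Re)write the rules for this service.
            rules[service] = list(backends)
        elif kind == "DELETED":
            # Drop the rules for this service.
            rules.pop(service, None)
    return rules


if __name__ == "__main__":
    stream = [
        ("ADDED", "redis", ["10.244.1.5:6379"]),
        ("MODIFIED", "redis", ["10.244.1.5:6379", "10.244.2.8:6379"]),
        ("ADDED", "myapp", ["10.244.1.9:80"]),
        ("DELETED", "myapp", []),
    ]
    print(apply_events(stream))
```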

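5. k8s has had three models for implementing Services:

(1) userspace. A request from a client pod first hits the iptables rules (the Service rules) in the kernel space of the current node. Those rules hand the request to kube-proxy, a userspace process listening on a local socket. kube-proxy picks a backend, and the proxied traffic goes back through kernel space to one of the Pods associated with the Service. Because the dispatching is done by kube-proxy running in user space, the model is called userspace. It is inefficient: each request enters kernel space, is passed up to kube-proxy in user space on the same host, gets proxied there, and then returns to kernel space to be sent on.

(2) iptables. This came next. The client requests the Service IP directly; the packet is intercepted by the Service rules in the local kernel space and dispatched straight to a server pod. Everything happens in kernel space, with the iptables rules doing the scheduling.

(3) ipvs. Once the client pod's request reaches kernel space, ipvs rules schedule it directly to the relevant Pod within the pod network address range.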
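As a side note on how the iptables model can spread load evenly: to my understanding, kube-proxy writes one rule per backend and gives them random-match probabilities of 1/n, 1/(n-1), ..., 1, evaluated in order. The sketch below only checks the arithmetic behind that scheme with exact fractions; the function names are made up for this example and nothing here touches real iptables.

```python
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    """Match probability of each rule in a chain of n backend rules, in order."""
    return [Fraction(1, n - i) for i in range(n)]


def overall_probabilities(n: int) -> List[Fraction]:
    """Chance that a new connection ends up at each backend."""
    result = []
    not_matched_yet = Fraction(1)
    for p in rule_probabilities(n):
        # A rule is only evaluated if every earlier rule declined to match.
        result.append(not_matched_yet * p)
        not_matched_yet *= 1 - p
    return result


if __name__ == "__main__":
    print(rule_probabilities(3))
    print(overall_probabilities(3))
```

With three backends the per-rule probabilities are 1/3, 1/2 and 1, and each backend ends up with an overall share of exactly 1/3.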

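6. The mode we choose when installing and configuring k8s determines which kind of rules kube-proxy generates. userspace was the default up to 1.1, and iptables has been the default since 1.2. ipvs became generally available in 1.11 but has to be enabled explicitly; if ipvs mode is requested and the required ipvs kernel modules are not loaded, kube-proxy falls back to iptables. When the Pods behind a Service change, for example one more Pod matches the Service's label selector, that information is immediately reflected on the API server, since Pod information is stored in the etcd behind the API server. kube-proxy detects the change and immediately converts it into Service rules, so the conversion is dynamic and happens in real time. Likewise, if a Pod is deleted and not recreated, the resulting state is reported to the API server and stored in etcd; kube-proxy sees the change through its watch and immediately updates the rules.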

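7. There is an intermediate layer between a Service and its Pods: a Service does not point at Pods directly but at an Endpoints object, which is itself a standard k8s object. An Endpoints object is essentially a list of pod address + port pairs, and those entries lead to the backend Pods. For everyday purposes we can think of a Service as pointing straight at Pods, but in fact we can also create an Endpoints resource for a Service by hand.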
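The Service to Endpoints to Pod relationship can be sketched as a small function that derives the endpoint list from a label selector. This is a simplified illustration rather than the real endpoints controller: the pod dictionaries, their IPs and the `endpoints_for` helper are hypothetical, and only equality-based selectors and a single port are handled.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every selector key/value must appear in labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def endpoints_for(selector: Dict[str, str], target_port: int,
                  pods: List[dict]) -> List[str]:
    """Build 'pod IP:port' entries for ready pods that match the selector."""
    if not selector:
        # Without a selector nothing is derived automatically;
        # the Endpoints object would have to be created by hand.
        return []
    return [
        f'{pod["ip"]}:{target_port}'
        for pod in pods
        if pod["ready"] and matches(selector, pod["labels"])
    ]


if __name__ == "__main__":
    pods = [
        {"ip": "10.244.1.3", "ready": True,
         "labels": {"app": "myapp", "release": "canary"}},
        {"ip": "10.244.2.4", "ready": False,
         "labels": {"app": "myapp", "release": "canary"}},
        {"ip": "10.244.2.5", "ready": True,
         "labels": {"app": "redis", "role": "logstor"}},
    ]
    print(endpoints_for({"app": "myapp", "release": "canary"}, 80, pods))
```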

Creating a Service

kubectl explain svc.spec

    KIND:     Service
    VERSION:  v1

    RESOURCE: spec <Object>

    DESCRIPTION:
         Spec defines the behavior of a service.
         https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status

         ServiceSpec describes the attributes that a user creates on a service.

    FIELDS:
       clusterIP <string>    # assigned automatically by default; can also be set by hand
         clusterIP is the IP address of the service and is usually assigned
         randomly by the master. If an address is specified manually and is not in
         use by others, it will be allocated to the service; otherwise, creation of
         the service will fail. This field can not be changed through updates. Valid
         values are "None", empty string (""), or a valid IP address. "None" can be
         specified for headless services when proxying is not required. Only applies
         to types ClusterIP, NodePort, and LoadBalancer. Ignored if type is
         ExternalName. More info:
         https://kubernetes.io/docs/concepts/services-networking/service/#virtual-ips-and-service-proxies

       externalIPs <[]string>
         externalIPs is a list of IP addresses for which nodes in the cluster will
         also accept traffic for this service. These IPs are not managed by
         Kubernetes. The user is responsible for ensuring that traffic arrives at a
         node with this IP. A common example is external load-balancers that are not
         part of the Kubernetes system.

       externalName <string>
         externalName is the external reference that kubedns or equivalent will
         return as a CNAME record for this service. No proxying will be involved.
         Must be a valid RFC-1123 hostname (https://tools.ietf.org/html/rfc1123) and
         requires Type to be ExternalName.

       externalTrafficPolicy <string>
         externalTrafficPolicy denotes if this Service desires to route external
         traffic to node-local or cluster-wide endpoints. "Local" preserves the
         client source IP and avoids a second hop for LoadBalancer and Nodeport type
         services, but risks potentially imbalanced traffic spreading. "Cluster"
         obscures the client source IP and may cause a second hop to another node,
         but should have good overall load-spreading.

       healthCheckNodePort <integer>
         healthCheckNodePort specifies the healthcheck nodePort for the service. If
         not specified, HealthCheckNodePort is created by the service api backend
         with the allocated nodePort. Will use user-specified nodePort value if
         specified by the client. Only effects when Type is set to LoadBalancer and
         ExternalTrafficPolicy is set to Local.

       loadBalancerIP <string>
         Only applies to Service Type: LoadBalancer LoadBalancer will get created
         with the IP specified in this field. This feature depends on whether the
         underlying cloud-provider supports specifying the loadBalancerIP when a
         load balancer is created. This field will be ignored if the cloud-provider
         does not support the feature.

       loadBalancerSourceRanges <[]string>
         If specified and supported by the platform, this will restrict traffic
         through the cloud-provider load-balancer will be restricted to the
         specified client IPs. This field will be ignored if the cloud-provider does
         not support the feature." More info:
         https://kubernetes.io/docs/tasks/access-application-cluster/configure-cloud-provider-firewall/

       ports <[]Object>    # which Service port is associated with which backend container port
         The list of ports that are exposed by this service. More info:
         https://kubernetes.io/docs/concepts/services-networking/service/#virtual-ips-and-service-proxies

       publishNotReadyAddresses <boolean>
         publishNotReadyAddresses, when set to true, indicates that DNS
         implementations must publish the notReadyAddresses of subsets for the
         Endpoints associated with the Service. The default value is false. The
         primary use case for setting this field is to use a StatefulSet's Headless
         Service to propagate SRV records for its Pods without respect to their
         readiness for purpose of peer discovery.

       selector <map[string]string>    # which Pod resources the Service is associated with
         Route service traffic to pods with label keys and values matching this
         selector. If empty or not present, the service is assumed to have an
         external process managing its endpoints, which Kubernetes will not modify.
         Only applies to types ClusterIP, NodePort, and LoadBalancer. Ignored if
         type is ExternalName. More info:
         https://kubernetes.io/docs/concepts/services-networking/service/

       sessionAffinity <string>
         Supports "ClientIP" and "None". Used to maintain session affinity. Enable
         client IP based session affinity. Must be ClientIP or None. Defaults to
         None. More info:
         https://kubernetes.io/docs/concepts/services-networking/service/#virtual-ips-and-service-proxies

       sessionAffinityConfig <Object>
         sessionAffinityConfig contains the configurations of session affinity.

       type <string>
         type determines how the Service is exposed. Defaults to ClusterIP. Valid
         options are ExternalName, ClusterIP, NodePort, and LoadBalancer.
         "ExternalName" maps to the specified externalName. "ClusterIP" allocates a
         cluster-internal IP address for load-balancing to endpoints. Endpoints are
         determined by the selector or if that is not specified, by manual
         construction of an Endpoints object. If clusterIP is "None", no virtual IP
         is allocated and the endpoints are published as a set of endpoints rather
         than a stable IP. "NodePort" builds on ClusterIP and allocates a port on
         every node which routes to the clusterIP. "LoadBalancer" builds on NodePort
         and creates an external load-balancer (if supported in the current cloud)
         which routes to the clusterIP. More info:
         https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services---service-types

kubectl explain svc.spec.ports

    KIND:     Service
    VERSION:  v1

    RESOURCE: ports <[]Object>

    DESCRIPTION:
         The list of ports that are exposed by this service. More info:
         https://kubernetes.io/docs/concepts/services-networking/service/#virtual-ips-and-service-proxies

         ServicePort contains information on service's port.

    FIELDS:
       name <string>    # name of the port
         The name of this port within the service. This must be a DNS_LABEL. All
         ports within a ServiceSpec must have unique names. This maps to the 'Name'
         field in EndpointPort objects. Optional if only one ServicePort is defined
         on this service.

       nodePort <integer>    # port on the nodes; only used when the type is NodePort or LoadBalancer
         The port on each node on which this service is exposed when type=NodePort
         or LoadBalancer. Usually assigned by the system. If specified, it will be
         allocated to the service if unused or else creation of the service will
         fail. Default is to auto-allocate a port if the ServiceType of this Service
         requires one. More info:
         https://kubernetes.io/docs/concepts/services-networking/service/#type-nodeport

       port <integer> -required-    # the port on which the Service itself is exposed
         The port that will be exposed by this service.

       protocol <string>    # protocol, TCP by default
         The IP protocol for this port. Supports "TCP" and "UDP". Default is TCP.

       targetPort <string>    # the container's port
         Number or name of the port to access on the pods targeted by the service.
         Number must be in the range 1 to 65535. Name must be an IANA_SVC_NAME. If
         this is a string, it will be looked up as a named port in the target Pod's
         container ports. If this is not specified, the value of the 'port' field is
         used (an identity map). This field is ignored for services with
         clusterIP=None, and should be omitted or set equal to the 'port' field.
         More info:
         https://kubernetes.io/docs/concepts/services-networking/service/#defining-a-service

ClusterIP

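ClusterIP is the default type. It allocates a cluster IP address to the Service that is used only for communication inside the cluster. With this type only two ports matter:

- port: the port on the Service address

- targetPort: the port on the pod IP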

cat redis-svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: redis
      namespace: default
    spec:
      selector:
        app: redis
        role: logstor
      clusterIP: 10.97.97.97
      type: ClusterIP
      ports:
      - port: 6379
        targetPort: 6379

Deploy the service

kubectl apply -f redis-svc.yaml

kubectl describe svc redis

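Once the Service is created, its name can be resolved right away as long as the cluster DNS service is running. Every Service automatically gets resource records added dynamically to the cluster DNS when it is created, and not just one: they include SRV records, A records and so on. The record name has the format SVC_NAME.NS_NAME.DOMAIN.LTD. (service name, namespace name, domain suffix), and the cluster's default domain suffix is svc.cluster.local. So unless we have changed the suffix, every Service we create gets a name of this form; the Service above, for example, is redis.default.svc.cluster.local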
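The naming rule is simple enough to write down as a tiny helper. A minimal sketch, assuming the default cluster domain `cluster.local`; the function name `service_fqdn` is made up for this example.

```python
def service_fqdn(name: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build the DNS name under which a Service is published."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    print(service_fqdn("redis"))
    print(service_fqdn("myapp-svc", namespace="default"))
```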

NodePort

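NodePort brings in traffic from outside the cluster. The nodePort field only has an effect when the Service actually exposes a node port (type NodePort, or LoadBalancer, which builds on it); with other types it is of no use.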

myapp.svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp
      namespace: default
    spec:
      selector:
        app: myapp
        release: canary
      clusterIP: 10.99.99.99   # pin a fixed ClusterIP
      type: NodePort
      ports:
      - port: 80               # port on the Service
        targetPort: 80         # port on the pod IP
        nodePort: 30080        # port on the nodes; may be omitted so the system allocates one

Deploy the service

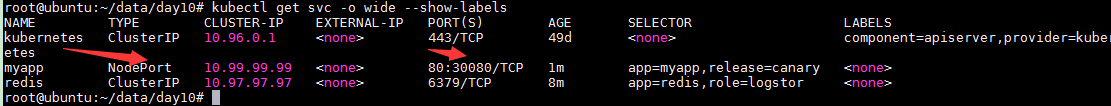

kubectl apply -f myapp.svc.yaml

kubectl get svc -o wide --show-labels

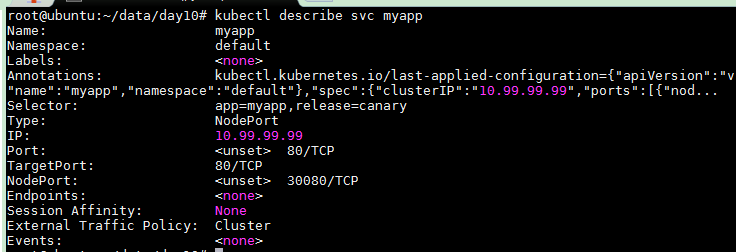

View the service details

kubectl describe svc myapp

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: myapp
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myapp
          release: canary
      template:
        metadata:
          name: myapp-pod
          labels:
            app: myapp
            release: canary
            environment: qa
        spec:
          containers:
          - name: myapp-container
            image: ikubernetes/myapp:v1
            ports:
            - name: http
              containerPort: 80

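Open another shell and access any node of the cluster. You can see that requests are load-balanced across the Pods, and that the traffic goes through several translations: first from the nodePort to the Service port, then from the Service port to the pod port.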

curl 192.168.228.138:30080/hostname.html
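The chain of translations for the request above can be traced in a few lines. This is a sketch only: the pod IP 10.244.1.3 is hypothetical, the `trace` helper is made up, and 30000-32767 is the default nodePort range (it can be changed on the API server).

```python
from dataclasses import dataclass
from typing import List

# Default nodePort range; configurable on the API server.
NODE_PORT_RANGE = range(30000, 32768)


@dataclass
class ServicePort:
    port: int         # port on the Service (ClusterIP)
    target_port: int  # port on the pod IP
    node_port: int    # port opened on every node


def trace(node_ip: str, cluster_ip: str, pod_ip: str, sp: ServicePort) -> List[str]:
    """List the address:port pairs a request passes through, in order."""
    if sp.node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {sp.node_port} is outside the default range")
    return [
        f"{node_ip}:{sp.node_port}",   # what the external client connects to
        f"{cluster_ip}:{sp.port}",     # the Service's virtual address
        f"{pod_ip}:{sp.target_port}",  # the Pod that finally answers
    ]


if __name__ == "__main__":
    hops = trace("192.168.228.138", "10.99.99.99", "10.244.1.3",
                 ServicePort(port=80, target_port=80, node_port=30080))
    print(" -> ".join(hops))
```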

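LoadBalancer

LoadBalancer (one-step invocation of load balancing as a service, LBaaS) is for the case where k8s is deployed on virtual machines that run in a cloud environment, and that cloud environment offers a load balancer. Creating such a Service automatically triggers the creation of a load balancer outside the cluster. Suppose we buy four virtual hosts on Alibaba Cloud together with Alibaba Cloud's LBaaS service, and deploy a k8s cluster on the four VPSes. The cluster is able to talk to the API of the underlying public cloud IaaS and can ask it to create an external load balancer. Say one of the four nodes is the master and the other three are worker nodes, all exposing the service outside the cluster on the same nodePort. k8s asks the IaaS layer to create a software load balancer and, through the IaaS API, tells it which nodes form its backend: the three nodes (node IPs, note) and the node port on which the service is exposed. A client outside the cloud environment then accesses the load balancer created by Alibaba Cloud's LBaaS, which dispatches the request to the nodePort on one of the backend nodes; the nodePort forwards to the Service, and the Service load-balances inside the cluster to a Pod. There are therefore two levels of load balancing: the first spreads client requests across the nodes, and the second goes from the node through the Service to one of the Pods in the cluster.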

ExternalName

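ExternalName brings a service from outside the cluster into the cluster so that it can be used there directly. Suppose we have a k8s cluster with three worker nodes, and some Pods on those nodes act as clients. Normally the service a client accesses is provided by other Pods, but sometimes the service does not exist in the cluster and lives outside it: on our local LAN but outside the k8s cluster, or somewhere on the Internet, a DNS service for instance. We still want Pods in the cluster to be able to reach it. Pods generally use private addresses, so a request cannot simply be routed out with the pod IP as its source, because the reply would never find its way back; typically the request leaves through the node with the node IP as its source, the external service replies to the node IP, and the node hands the reply back to the client pod. An ExternalName Service covers the naming side of this: we create a Service in the cluster whose backend is not a set of local Pods but an external service. When a client pod resolves the Service name, the cluster DNS answers with a CNAME record pointing at the external name, and no proxying is involved (as the explain output above states). Pods in the cluster can thus use an external service the same way they use an internal one. A basic restriction follows: externalName really has to be a name rather than an IP, and that name must be resolvable by our DNS service, otherwise the external service cannot be reached (it is enough to be aware of this). The corresponding field in svc.spec is externalName, listed in the explain output above.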
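To show what such a Service looks like, the sketch below builds an ExternalName manifest as a plain Python dictionary. The service name `external-db` and the host `db.example.com` are placeholders, and the check that rejects IP-looking values is a guard of this sketch, added to reflect the "name, not IP" restriction; it is not a claim about how the API server validates the field.

```python
import ipaddress


def external_name_service(name: str, namespace: str, external_name: str) -> dict:
    """Build an ExternalName Service manifest as a dictionary."""
    try:
        ipaddress.ip_address(external_name)
    except ValueError:
        pass  # not an IP address, which is what we want
    else:
        raise ValueError("externalName should be a DNS name, not an IP address")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"type": "ExternalName", "externalName": external_name},
    }


if __name__ == "__main__":
    # Placeholder names, for illustration only.
    print(external_name_service("external-db", "default", "db.example.com"))
```

Note that the manifest carries no selector, clusterIP or ports: the explain output marks those as ignored for this type.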

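In addition, Service load balancing supports sessionAffinity (listed in the explain output above). Its default value is None, in which case requests are dispatched at random by the iptables rules. If we set it to ClientIP, requests from the same client IP are always dispatched to the same backend Pod.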

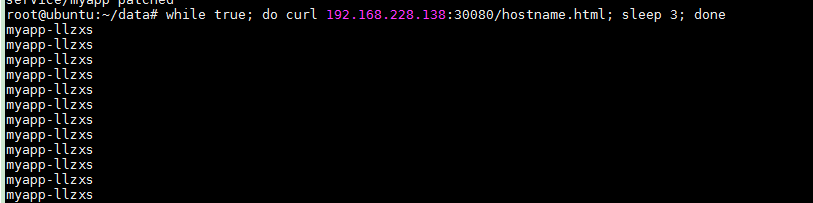

while true; do curl 192.168.228.138:30080/hostname.html; sleep 3; done

Apply the patch

kubectl patch svc myapp -p '{"spec":{"sessionAffinity":"ClientIP"}}'

Access it again

while true; do curl 192.168.228.138:30080/hostname.html; sleep 3; done

Requests from the same IP now all reach the same Pod, which shows that the patch has taken effect.
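The effect of ClientIP affinity can be mimicked with a small lookup table. This is a simulation of the behaviour, not of how kube-proxy implements it: the `StickyBalancer` class and pod names are hypothetical, 10800 seconds is the documented default of `sessionAffinityConfig.clientIP.timeoutSeconds`, and refreshing the timestamp on every request is an assumption of this sketch.

```python
import random
from typing import Dict, List, Tuple


class StickyBalancer:
    """Pick a random backend for new clients, then stick to it until it expires."""

    def __init__(self, backends: List[str], timeout: float = 10800.0, seed: int = 0):
        self.backends = backends
        self.timeout = timeout
        self.rng = random.Random(seed)
        # client IP -> (backend, time of last request)
        self.table: Dict[str, Tuple[str, float]] = {}

    def pick(self, client_ip: str, now: float) -> str:
        entry = self.table.get(client_ip)
        if entry is not None and now - entry[1] < self.timeout:
            backend = entry[0]                        # known client: same backend
        else:
            backend = self.rng.choice(self.backends)  # new or expired: random pick
        self.table[client_ip] = (backend, now)        # assumption: refresh on every hit
        return backend


if __name__ == "__main__":
    lb = StickyBalancer(["myapp-pod-a", "myapp-pod-b"])
    print([lb.pick("192.168.228.1", t) for t in range(0, 15, 3)])
```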

headless

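Headless Service. So far, when a client pod accesses a Service it resolves the Service's name; every Service has a name, the name resolves to the Service's ClusterIP, and there is normally only one ClusterIP. We can also remove this intermediate layer: every Pod has its own address, so the Service name can be resolved directly to the IPs of the backend Pods. Such a Service is called a headless Service. To create one, we define clusterIP explicitly and set its value to None.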

myapp-svc-headless.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-svc
      namespace: default
    spec:
      selector:
        app: myapp
        release: canary
      clusterIP: "None"
      ports:
      - port: 80         # port on the Service
        targetPort: 80   # port on the pod IP

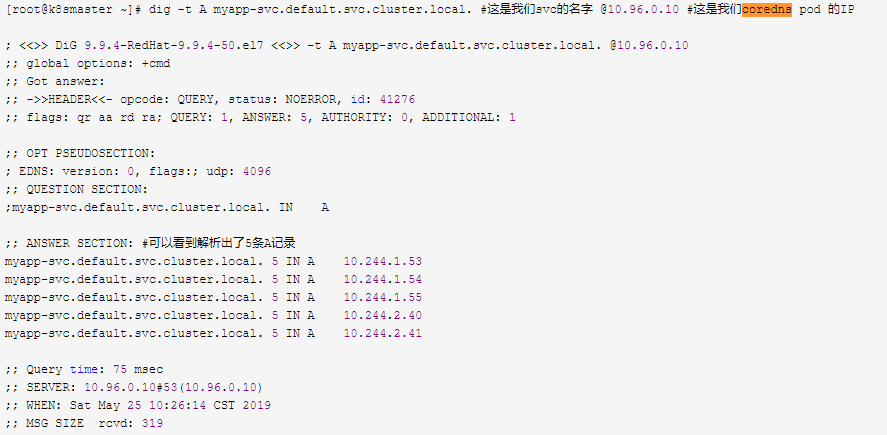

Resolve it with dig

dig -t A myapp-svc.default.svc.cluster.local.
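The difference between the two kinds of answer can be summarised in a few lines of Python. This is a simplified model of what the cluster DNS returns for A queries, not a DNS client; the `resolve_a_records` helper and the pod IPs are hypothetical.

```python
from typing import List


def resolve_a_records(cluster_ip: str, ready_pod_ips: List[str]) -> List[str]:
    """A records a cluster DNS would hand out for a Service name (simplified)."""
    if cluster_ip == "None":
        # Headless: no virtual IP, the name maps to the Pods themselves.
        return sorted(ready_pod_ips)
    # Regular Service: the name maps to the single ClusterIP.
    return [cluster_ip]


if __name__ == "__main__":
    pods = ["10.244.2.4", "10.244.1.3"]
    print(resolve_a_records("10.99.99.99", pods))  # regular Service
    print(resolve_a_records("None", pods))         # headless Service
```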

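The Services above reveal a problem: once a Service is defined, reaching the Pods behind it takes several levels of scheduling or proxying, and if we want to offer an HTTPS service, every myapp Pod has to be configured as an HTTPS host. k8s actually has another way to bring in traffic from outside the cluster, called ingress. A Service is a layer 4 scheduler, whereas ingress is a layer 7 scheduler: it uses a layer 7 Pod to bring external traffic into the cluster, though it still cannot do without Services. When ingress acts as a layer 7 scheduler, the scheduling has to be done by a layer 7 application running in a Pod, such as nginx or haproxy.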