This post continues the previous one, "LFW accuracy verification: reading and writing txt files in Python".

References:

I searched Baidu, Zhihu and GitHub for ways to measure verification accuracy on LFW and found very little, so I collected every link I found useful below:

Labeled Faces in the Wild official website: http://vis-www.cs.umass.edu/lfw/#views

Official description of pairs.txt: http://vis-www.cs.umass.edu/lfw/README.txt

An overview of LFW: https://blog.csdn.net/jobbofhe/article/details/79416661

Face recognition testing with VGG-Face: https://blog.csdn.net/u013078356/article/details/60955197 [main reference]

LFW accuracy and how to measure it: https://blog.csdn.net/u014696921/article/details/70161852 [main reference]

LFW_API: https://github.com/jakezhaojb/LFW_API

Reference project: https://github.com/KangKangLoveCat/insightface_ncnn
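The test program reads label.txt, which the previous post generated from the official pairs.txt. For readers who want to do that conversion in C++, here is a minimal sketch. It relies on the layout described in the LFW README: the first line gives the number of sets and pairs per set (10 and 300 for View 2), a same-person line is `name n1 n2`, a different-person line is `name1 n1 name2 n2`, and images are stored as `<name>/<name>_<4-digit index>.jpg`. The `LabeledPair` struct, the `lfw_path`/`parse_pairs_line` helpers and the `root` directory are hypothetical names for illustration.

```cpp
#include <cassert>
#include <cstdio>
#include <sstream>
#include <string>

// One hypothetical label.txt entry: two image paths, label 1 = same person, 0 = different.
struct LabeledPair {
    std::string left, right;
    int label;
};

// Build "<root>/<name>/<name>_<4-digit index>.jpg", the LFW file naming scheme.
std::string lfw_path(const std::string& root, const std::string& name, int idx) {
    assert(idx > 0);  // LFW image indices start at 1
    char buf[32];
    std::snprintf(buf, sizeof(buf), "_%04d.jpg", idx);
    return root + "/" + name + "/" + name + buf;
}

// Parse one line of pairs.txt.
//   3 fields -> same person:      name n1 n2
//   4 fields -> different people: name1 n1 name2 n2
// Returns false for the "10 300" header line or anything malformed.
bool parse_pairs_line(const std::string& line, const std::string& root, LabeledPair& out) {
    std::istringstream ss(line);
    std::string f[5];
    int n = 0;
    while (n < 5 && ss >> f[n]) ++n;
    if (n == 3) {
        out = {lfw_path(root, f[0], std::stoi(f[1])), lfw_path(root, f[0], std::stoi(f[2])), 1};
        return true;
    }
    if (n == 4) {
        out = {lfw_path(root, f[0], std::stoi(f[1])), lfw_path(root, f[2], std::stoi(f[3])), 0};
        return true;
    }
    return false;
}
```

Writing `left right label` for each parsed line produces the whitespace-separated layout that the program below reads from label.txt.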

```cpp
#include <vector>
#include <iostream>
#include <fstream>
#include <string>
#include <utility>
#include <opencv2/opencv.hpp>
#include <pthread.h>
#include "arcface.h"   // insightface_ncnn headers: Arcface, preprocess(img, info), calcSimilar()
#include "mtcnn.h"     // MtcnnDetector, FaceInfo

using namespace cv;
using namespace std;

#define PAIR_LINES 6000          // number of pairs listed in label.txt
const float THRESHOLD = 0.35f;   // similarity threshold for "same person"

vector< pair<string, string> > pos_vec;   // same-person pairs
vector< pair<string, string> > neg_vec;   // different-person pairs

// Shared by both threads; assumes concurrent Detect()/getFeature() calls are safe.
MtcnnDetector detector("../models");
Arcface arc("../models");

// Convert a BGR ncnn::Mat to a cv::Mat (useful for inspecting crops; not called below).
cv::Mat ncnn2cv(ncnn::Mat img)
{
    cv::Mat cv_img(img.h, img.w, CV_8UC3);
    img.to_pixels(cv_img.data, ncnn::Mat::PIXEL_BGR);  // a new CV_8UC3 Mat is continuous
    return cv_img;
}

// Read label.txt: each line is "left_path right_path label", label 1 = same person.
// Distinct from the detection/alignment preprocess(img, face_info) used below.
void load_pairs()
{
    ifstream label("./label.txt");
    if (!label) { cerr << "cannot open ./label.txt" << endl; return; }
    string left, right;
    int num;
    for (int i = 0; i < PAIR_LINES && (label >> left >> right >> num); i++) {
        if (num == 1) pos_vec.push_back(make_pair(left, right));
        else          neg_vec.push_back(make_pair(left, right));
    }
}

// Score every pair and write "pair_id \t score \t left \t right" lines to out_path.
// Errors are positives scoring below THRESHOLD or negatives scoring above it;
// the last line is "pair_id \t error_count". Pairs with no detected face are skipped.
void score_pairs(const vector< pair<string, string> >& pairs, const char* out_path, bool positive)
{
    ofstream out(out_path);
    int pair_id = 0, num = 0;
    for (const auto& p : pairs) {
        pair_id++;
        Mat img1 = imread(p.first), img2 = imread(p.second);
        if (img1.empty() || img2.empty()) continue;  // unreadable image

        ncnn::Mat ncnn_img1 = ncnn::Mat::from_pixels(img1.data, ncnn::Mat::PIXEL_BGR, img1.cols, img1.rows);
        ncnn::Mat ncnn_img2 = ncnn::Mat::from_pixels(img2.data, ncnn::Mat::PIXEL_BGR, img2.cols, img2.rows);
        vector<FaceInfo> results1 = detector.Detect(ncnn_img1);
        vector<FaceInfo> results2 = detector.Detect(ncnn_img2);
        if (results1.empty() || results2.empty()) continue;  // no face found

        // Align the first detected face in each image, then extract ArcFace features.
        ncnn::Mat det1 = preprocess(ncnn_img1, results1[0]);
        ncnn::Mat det2 = preprocess(ncnn_img2, results2[0]);
        vector<float> feature1 = arc.getFeature(det1);
        vector<float> feature2 = arc.getFeature(det2);
        float cal = calcSimilar(feature1, feature2);

        if (positive ? (cal < THRESHOLD) : (cal > THRESHOLD)) ++num;
        out << pair_id << "\t" << cal << "\t" << p.first << "\t" << p.second << endl;
        cout << pair_id << (positive ? " pos" : " neg") << " finish" << endl;
    }
    out << pair_id << "\t" << num << endl;
}

// pthread entry points: one thread per pair set.
void* pos(void*) { score_pairs(pos_vec, "./pos_scores.txt", true);  return NULL; }
void* neg(void*) { score_pairs(neg_vec, "./neg_scores.txt", false); return NULL; }

int main()
{
    load_pairs();
    pthread_t tids[2];
    int ret1 = pthread_create(&tids[0], NULL, pos, NULL);
    if (ret1 != 0) { cout << "pthread_create_error: error_code=" << ret1 << endl; return 1; }
    int ret2 = pthread_create(&tids[1], NULL, neg, NULL);
    if (ret2 != 0) {
        cout << "pthread_create_error: error_code=" << ret2 << endl;
        pthread_join(tids[0], NULL);
        return 1;
    }
    // Wait for both workers to finish before exiting.
    pthread_join(tids[0], NULL);
    pthread_join(tids[1], NULL);
    return 0;
}
```

The program uses two threads so that the positive and negative pairs are scored concurrently.
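The same two-worker split can also be written with C++11 `std::thread`, which avoids the `void*` entry-point signatures and makes joining explicit. This is a sketch of that swapped-in technique, not the author's code: `Pair`, `Scorer`, `count_errors` and `count_errors_parallel` are hypothetical names, and the scorer stands in for the detect, align, embed and compare steps. Like the original program, it assumes the scorer may safely be called from two threads at once.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <thread>
#include <utility>
#include <vector>

using Pair = std::pair<std::string, std::string>;
// Hypothetical scorer: maps an image pair to a similarity score.
// It is called from two threads at once, so it must be thread-safe.
using Scorer = std::function<float(const Pair&)>;

// Count pairs on the wrong side of the threshold: same-person pairs scoring
// below it, or different-person pairs scoring at or above it.
int count_errors(const std::vector<Pair>& pairs, const Scorer& score, float threshold, bool positive) {
    assert(score);
    int errors = 0;
    for (const Pair& p : pairs) {
        float s = score(p);
        if (positive ? (s < threshold) : (s >= threshold)) ++errors;
    }
    return errors;
}

// Run the positive and negative sets on separate std::threads.
// Each thread writes only its own counter, so no mutex is needed.
std::pair<int, int> count_errors_parallel(const std::vector<Pair>& pos, const std::vector<Pair>& neg,
                                          const Scorer& score, float threshold) {
    int pos_errors = 0, neg_errors = 0;
    std::thread t_pos([&] { pos_errors = count_errors(pos, score, threshold, true); });
    std::thread t_neg([&] { neg_errors = count_errors(neg, score, threshold, false); });
    t_pos.join();  // block until each worker has finished
    t_neg.join();
    return {pos_errors, neg_errors};
}
```

Because `join()` is called on both threads before returning, the counters are guaranteed to be written by the time they are read.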

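There are 6000 pairs in total: 3000 same-person pairs and 3000 different-person pairs. The images are not of high quality (they were collected from the web and are low resolution), and a few were labeled incorrectly (photos of different people filed under the same person, affecting fewer than about 10 pairs), so I tested with a fairly low threshold of 0.35.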
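The threshold is applied to the score returned by `calcSimilar()`, whose implementation is not shown in this post. As a hedged stand-in, assuming that score is the cosine similarity of the two ArcFace embeddings (which reduces to a plain dot product if `getFeature()` already L2-normalizes them), the hypothetical `cosine_similarity` and `same_person` functions below illustrate the decision rule:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Cosine similarity between two embeddings, in [-1, 1].
// Hypothetical stand-in for calcSimilar(); for L2-normalized features
// it equals the dot product.
float cosine_similarity(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size() && !a.empty());
    double dot = 0.0, na = 0.0, nb = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dot += double(a[i]) * b[i];
        na  += double(a[i]) * a[i];
        nb  += double(b[i]) * b[i];
    }
    if (na == 0.0 || nb == 0.0) return 0.0f;  // degenerate all-zero feature
    return static_cast<float>(dot / (std::sqrt(na) * std::sqrt(nb)));
}

// Verification decision: "same person" if the similarity reaches the threshold.
bool same_person(const std::vector<float>& a, const std::vector<float>& b, float threshold = 0.35f) {
    return cosine_similarity(a, b) >= threshold;
}
```

A lower threshold accepts more pairs as the same person, which helps low-quality positive pairs at the risk of accepting look-alike negatives.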

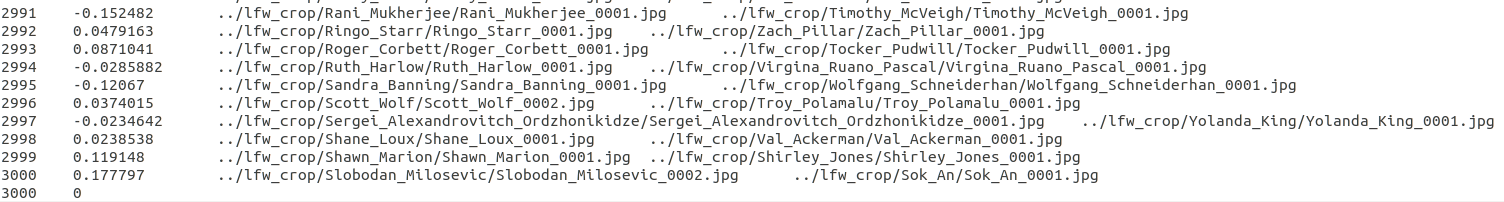

pos_scores.txt

Of the 3000 positive pairs, 51 have a similarity below 0.35.

neg_scores.txt

Of the 3000 negative pairs, none has a similarity above 0.35; scanning the file by eye, the highest score is 0.30.
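Rather than scanning neg_scores.txt by eye, the counts and the maximum can be computed directly. A minimal sketch, assuming the line layout the program writes (`pair_id \t score \t left \t right`, then a two-field summary line); `read_scores`, `count_below`, `count_above` and `max_score` are hypothetical helpers:

```cpp
#include <algorithm>
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Collect the score column from pos_scores.txt / neg_scores.txt.
// The final "pair_id \t count" summary line has only two fields and is skipped.
std::vector<float> read_scores(std::istream& in) {
    std::vector<float> scores;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream ss(line);
        int id;
        float score;
        std::string left, right;
        if (ss >> id >> score >> left >> right) scores.push_back(score);
    }
    return scores;
}

// Number of scores strictly below / strictly above threshold t.
int count_below(const std::vector<float>& s, float t) {
    return static_cast<int>(std::count_if(s.begin(), s.end(), [t](float x) { return x < t; }));
}
int count_above(const std::vector<float>& s, float t) {
    return static_cast<int>(std::count_if(s.begin(), s.end(), [t](float x) { return x > t; }));
}

// Highest score in a non-empty list.
float max_score(const std::vector<float>& s) {
    assert(!s.empty());
    return *std::max_element(s.begin(), s.end());
}
```

For example, `std::ifstream f("./neg_scores.txt");` followed by `max_score(read_scores(f))` gives the highest negative score. Pairs skipped because no face was detected never reach the file, so the vector can hold fewer than 3000 entries.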

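Overall, accuracy over the 6000 pairs at a threshold of 0.35 is 99.15%, i.e. (6000 - 51) / 6000. Since no negative pair scored above about 0.30, lowering the threshold to around 0.3 should recover some of the 51 misclassified positives without admitting negatives, so it should perform better.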
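Accuracy here is (correct positives + correct negatives) / total pairs: (3000 - 51 + 3000 - 0) / 6000 = 5949 / 6000 = 99.15%. To check the expectation about 0.3 instead of guessing, the threshold can be swept over the scores loaded from both files. A sketch with hypothetical helpers `accuracy_at` and `best_threshold`; note that its denominator is the number of pairs actually scored, which is smaller than 6000 if some pairs were skipped:

```cpp
#include <cassert>
#include <vector>

struct SweepResult {
    float threshold;
    double accuracy;
};

// Accuracy at threshold t: a positive pair is correct if score >= t,
// a negative pair is correct if score < t.
double accuracy_at(const std::vector<float>& pos, const std::vector<float>& neg, float t) {
    assert(!pos.empty() || !neg.empty());
    std::size_t correct = 0;
    for (float s : pos) if (s >= t) ++correct;
    for (float s : neg) if (s < t) ++correct;
    return double(correct) / double(pos.size() + neg.size());
}

// Try thresholds lo, lo + step, ..., hi and keep the most accurate one.
// An integer step counter avoids accumulating floating-point error.
SweepResult best_threshold(const std::vector<float>& pos, const std::vector<float>& neg,
                           float lo, float hi, float step) {
    assert(step > 0.0f && lo <= hi);
    SweepResult best{lo, accuracy_at(pos, neg, lo)};
    int steps = static_cast<int>((hi - lo) / step + 0.5f);
    for (int i = 1; i <= steps; ++i) {
        float t = lo + i * step;
        double acc = accuracy_at(pos, neg, t);
        if (acc > best.accuracy) best = {t, acc};
    }
    return best;
}
```

A threshold chosen this way is tuned on the same pairs it is scored on, so the resulting number is optimistic. The LFW README describes View 2 as 10 splits intended for 10-fold cross-validation, where a parameter such as the threshold is chosen on nine splits and evaluated on the remaining one.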

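Since I couldn't find a reliable reference, I designed this test procedure myself. This way of measuring accuracy doesn't feel very rigorous, but there is no exact yardstick for similarity either.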

This scheme is 1:1 verification. It could also be used for 1:N testing, at a higher computational cost.
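In 1:N identification, one probe embedding is compared against all N enrolled embeddings instead of a single one, so the cost per query grows linearly with the gallery size. A minimal sketch, assuming L2-normalized embeddings so that the dot product equals cosine similarity; `similarity` and `identify` are hypothetical names:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Dot product of two embeddings; equals cosine similarity if both are L2-normalized.
float similarity(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    float s = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) s += a[i] * b[i];
    return s;
}

// 1:N search: N similarity computations per probe. Returns the index of the
// best-matching gallery entry, or -1 if even the best score is below the
// threshold (treated as an unknown person).
int identify(const std::vector<float>& probe, const std::vector<std::vector<float>>& gallery,
             float threshold, float* best_score = nullptr) {
    int best = -1;
    float best_sim = -2.0f;  // lower than any cosine similarity
    for (std::size_t i = 0; i < gallery.size(); ++i) {
        float s = similarity(probe, gallery[i]);
        if (s > best_sim) { best_sim = s; best = static_cast<int>(i); }
    }
    if (best_score) *best_score = best_sim;
    return (best >= 0 && best_sim >= threshold) ? best : -1;
}
```

Gallery features only need to be extracted once, so the extra cost of 1:N over 1:1 is mainly the N comparisons per probe.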