Forgettable data

[ICLR2019] An Empirical Study of Example Forgetting during Deep Neural Network Learning

A forgetting event occurs when the neural network misclassifies an example at step t+1 that it classified correctly at step t.

(A step here is one gradient descent iteration.)
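
The definition above can be turned into a small counting routine. This is a minimal sketch, not the paper's code: it assumes a boolean array `correct_history` (a hypothetical name) in which entry `[t, i]` records whether example `i` was classified correctly at step `t`.

```python
import numpy as np

def count_forgetting_events(correct_history):
    """Count forgetting events per example.

    correct_history: bool array of shape (num_steps, num_examples);
    entry [t, i] is True if example i was classified correctly at step t.
    (Hypothetical input format, assumed for illustration.)
    """
    h = np.asarray(correct_history, dtype=bool)
    # Forgetting event: correct at step t, misclassified at step t+1.
    forgotten = h[:-1] & ~h[1:]
    return forgotten.sum(axis=0)

# Toy history: 5 steps, 3 examples.
history = np.array([
    [0, 1, 0],
    [1, 1, 0],
    [0, 1, 1],
    [1, 1, 0],
    [1, 1, 1],
], dtype=bool)

counts = count_forgetting_events(history)
# Zero events means "unforgettable" under the definition in these notes.
# An example that is never classified correctly also has zero events,
# so it may need to be handled separately.
unforgettable = counts == 0
```

In the toy history only the second example is never forgotten, so it is the only one flagged as unforgettable.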

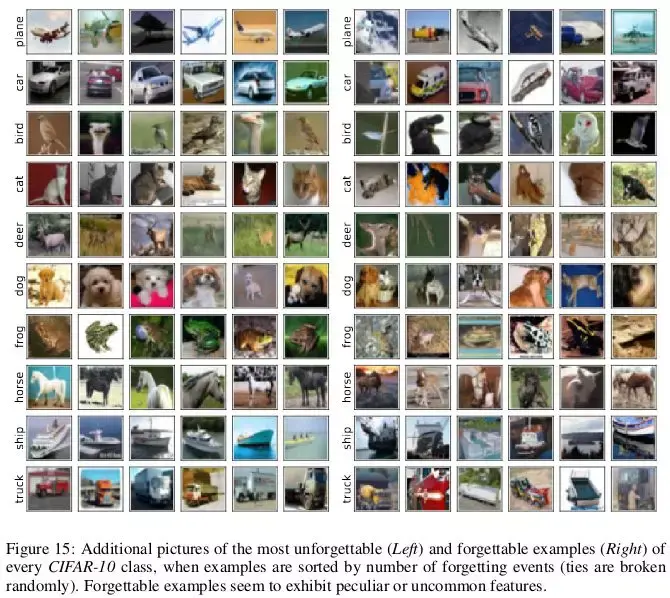

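Examples that never undergo a forgetting event are called unforgettable examples.

Unforgettable examples make up 91.7% of MNIST, 75.3% of permutedMNIST, 31.3% of CIFAR-10, and 7.62% of CIFAR-100.

As the diversity and complexity of an image dataset increase, the neural network forgets more examples.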

Manipulating forgettable data

Unforgettable examples encode mostly redundant information.

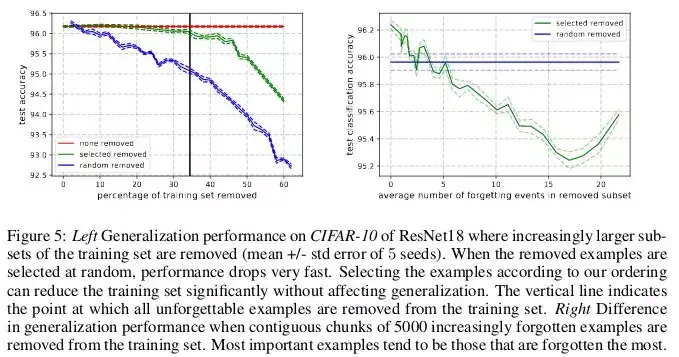

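Sorting examples by their unforgettability makes it possible to compress the dataset by removing most of the unforgettable examples.

In CIFAR-10, 30% of the data can be removed without affecting test accuracy, while removing 35% of the data causes a small 0.2% drop in test accuracy.

If the 30% removed is instead chosen at random rather than by unforgettability, test accuracy drops noticeably, by about 1%.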
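
The pruning step can be sketched as follows. This is an illustrative assumption about how the ordering might be applied, not the paper's implementation: examples are ranked by a per-example forgetting count (fewest events first) and the top fraction of that ranking is dropped. The function name and the toy counts are hypothetical.

```python
import numpy as np

def prune_least_forgotten(forgetting_counts, remove_fraction):
    """Return the indices of the examples to keep.

    Examples with the fewest forgetting events are removed first.
    Ties are broken by original index (stable sort); this tie-breaking
    rule is an assumption made for the sketch.
    """
    counts = np.asarray(forgetting_counts)
    n_remove = int(round(remove_fraction * len(counts)))
    # Ascending order: least-forgotten examples come first.
    order = np.argsort(counts, kind="stable")
    # Drop the first n_remove entries, keep the rest in index order.
    return np.sort(order[n_remove:])

# Toy forgetting counts for 10 examples (made-up values).
counts = np.array([0, 3, 0, 1, 5, 0, 2, 0, 0, 4])
kept = prune_least_forgotten(counts, remove_fraction=0.3)
```

With these toy counts, the three removed examples are indices 0, 2 and 5, all of which have zero forgetting events.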

Hebbian theory

When an axon of cell A is near enough to excite a cell B

and repeatedly or persistently takes part in firing it,

some growth process or metabolic change takes place in one or both cells such that

A’s efficiency, as one of the cells firing B, is increased.[1]

(The connection is strengthened.)
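
A common way to write this postulate as an update rule is to increase each weight in proportion to the product of presynaptic and postsynaptic activity. The sketch below assumes that simple rate-based form. The learning rate `lr` and the fixed activity vectors are illustrative choices, not taken from the quoted text.

```python
import numpy as np

def hebbian_update(w, pre, post, lr=0.1):
    """One Hebbian step: w[i, j] grows with post[i] * pre[j].

    w:    weights of shape (num_post, num_pre)
    pre:  presynaptic activity, shape (num_pre,)
    post: postsynaptic activity, shape (num_post,)
    """
    return w + lr * np.outer(post, pre)

w = np.zeros((2, 3))
pre = np.array([1.0, 0.0, 1.0])   # inputs 0 and 2 are active
post = np.array([1.0, 0.0])       # only output 0 fires

# Repeated co-activation keeps strengthening the same connections.
for _ in range(3):
    w = hebbian_update(w, pre, post)
```

Only the weights from the active inputs to the firing output grow; every other weight stays at zero.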

Mathematical

Learn by epoch
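
The notes give no formula under this heading. Assuming it refers to the usual batch form of the Hebbian rule, where the weight between units i and j is the average of x_i * x_j over all p training patterns, a minimal sketch looks like this. Zeroing the diagonal (no self-connections) is an extra assumption.

```python
import numpy as np

def hebbian_weights_by_epoch(patterns):
    """Batch Hebbian weights: w[i, j] = (1/p) * sum_k x_i^k * x_j^k.

    patterns: array of shape (p, n), one training pattern per row.
    """
    x = np.asarray(patterns, dtype=float)
    p = x.shape[0]
    w = x.T @ x / p
    np.fill_diagonal(w, 0.0)  # assumption: no self-connections
    return w

# Two toy patterns over three units (made-up values).
patterns = np.array([[1, -1, 1],
                     [1, 1, -1]])
w = hebbian_weights_by_epoch(patterns)
```

Units 1 and 2 always take opposite values in the toy patterns, so their connection ends up negative, while unit 0 is uncorrelated with both and gets zero weights.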

Volume learning

The compound most commonly identified as fulfilling this retrograde transmitter role is nitric oxide, which, due to its high solubility and diffusibility, often exerts effects on nearby neurons.

Retaining memory

Memory Aware Synapses: Learning what (not) to forget

Between training phases,

use unlabeled samples to update the importance weights of the model parameters.

Data that appears frequently will have a bigger contribution.

This way, the agent learns what is important and should not be forgotten.
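
The notes do not spell out how the importance weights are computed. The sketch below follows my understanding of the Memory Aware Synapses idea: the importance of a parameter is the average absolute gradient of the squared L2 norm of the model output with respect to that parameter, taken over unlabeled samples. The single linear layer `y = W x` and the toy data are assumptions made to keep the gradient explicit.

```python
import numpy as np

def mas_importance(w, unlabeled_x):
    """Importance of each weight of a linear model y = W x.

    For this model, d ||W x||^2 / dW[i, j] = 2 * y[i] * x[j].
    The importance is the mean absolute value of that gradient
    over the unlabeled samples. No labels are needed.
    """
    x = np.asarray(unlabeled_x, dtype=float)
    omega = np.zeros_like(w, dtype=float)
    for xk in x:
        y = w @ xk
        grad = 2.0 * np.outer(y, xk)  # gradient of ||W x||^2 w.r.t. W
        omega += np.abs(grad)
    return omega / len(x)

w = np.array([[1.0, 0.0],
              [0.0, 1.0]])
# The input [1, 0] appears twice, [0, 1] only once.
x = np.array([[1.0, 0.0],
              [1.0, 0.0],
              [0.0, 1.0]])
omega = mas_importance(w, x)
```

The weight used by the more frequent input gets twice the importance of the other one, which matches the remark that frequent data contributes more. For this linear layer each gradient entry is a product of an input activity and an output activity, which is one way to see the link to Hebbian learning.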

(LLL: lifelong learning, roughly continual transfer learning)

Locally, this approximates Hebbian learning.

My understanding of this is still too shallow.