Installing with kubeadm is undoubtedly a good choice. Reference: https://www.cnblogs.com/benjamin77/p/9783797.html

1. Environment preparation

1.1 System configuration

The operating system is CentOS Linux release 7.6.

[root@k8s-master ~]# cat /etc/hosts

192.168.1.134 k8s-master

192.168.1.135 k8s-node1

192.168.1.136 k8s-node2
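The same entries must be present in /etc/hosts on every node. A minimal sketch, assuming bash and awk; `add_host` is a hypothetical helper name, not part of any tool. It appends an entry only when the hostname is not already listed, so the step can be rerun safely:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the
# hostname already appears in one of the name columns of a non-comment line.
add_host() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 when the hostname is found, 1 when it is not.
  if ! awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, `add_host 192.168.1.135 k8s-node1` (as root) adds the node1 entry once; the optional third argument points it at another file.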

Disable the firewall and SELinux:

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
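`setenforce 0` switches SELinux to permissive mode for the current boot, while the edit to /etc/selinux/config only takes effect at the next boot. A small sketch for confirming what the config file now says (`selinux_config_mode` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SELinux mode configured for the next boot.
selinux_config_mode() {
  local file="${1:-/etc/selinux/config}"
  # Only the uncommented SELINUX= line matches; SELINUXTYPE= does not.
  awk -F= '/^SELINUX=/ { print $2 }' "$file"
}
```

After the `sed` above, `selinux_config_mode` should print `disabled`; `getenforce` reports the mode currently in effect.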

Turn off swap (required since Kubernetes 1.8: by default, kubelet fails to start while swap is on):

swapoff -a

yes | cp /etc/fstab /etc/fstab_bak

cat /etc/fstab_bak | grep -v swap > /etc/fstab
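To confirm that swap is really off, a sketch assuming the standard /proc/swaps format, which always starts with a header line (`swap_is_off` is a hypothetical name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if no swap device is active.
# /proc/swaps has a header line, so more than one line means swap is on.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -le 1 ]
}
```

Usage: `swap_is_off && echo "swap is off"`; `free -m` should also report 0 in the Swap row.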

Add the kernel parameter file /etc/sysctl.d/k8s.conf:

cat <<EOF > /etc/sysctl.d/k8s.conf

vm.swappiness = 0

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

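Load the br_netfilter module (the net.bridge.* parameters only exist once it is loaded), then apply the file:

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf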
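A sketch for verifying the applied values, assuming bash; `check_sysctl` is a hypothetical helper. It reads the files under /proc/sys directly, which is where `sysctl` gets its values (dots in the key become slashes in the path):

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a kernel parameter with the expected value.
# Returns 0 if equal, 1 if different, 2 if the parameter does not exist.
check_sysctl() {
  local key="$1" want="$2" root="${3:-/proc/sys}"
  local path got
  path="$root/$(printf '%s' "$key" | tr . /)"
  if ! got=$(cat "$path" 2>/dev/null); then
    echo "MISSING $key (module not loaded?)" >&2
    return 2
  fi
  if [ "$got" = "$want" ]; then
    echo "OK $key = $got"
  else
    echo "WRONG $key = $got (expected $want)"
    return 1
  fi
}
```

For example: `for k in net.ipv4.ip_forward net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do check_sysctl "$k" 1; done`. Note that `modprobe` does not persist across reboots; listing br_netfilter in a file under /etc/modules-load.d/ is one way to load it at boot.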

Synchronize the time:

ntpdate -u ntp.api.bz

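Upgrade the kernel to the latest version (optional; I prepared an offline kernel installation package). See "CentOS 7 kernel upgrade".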

Set the following system parameters in /etc/sysctl.d/k8s.conf on all machines (this replaces the file created above with a superset of its settings):

# https://github.com/moby/moby/issues/31208

# Check the IPVS timeouts with: ipvsadm -l --timeout

# Fixes the timeout of long-lived connections in IPVS mode; any keepalive time below 900 is enough

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 10

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.ip_forward = 1

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.netfilter.nf_conntrack_max = 2310720

fs.inotify.max_user_watches=89100

fs.may_detach_mounts = 1

fs.file-max = 52706963

fs.nr_open = 52706963

net.bridge.bridge-nf-call-arptables = 1

vm.swappiness = 0

vm.overcommit_memory=1

vm.panic_on_oom=0

EOF

sysctl --system
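The comment about 900 refers to the default IPVS TCP idle timeout of 900 seconds (the first value printed by `ipvsadm -l --timeout`): keepalive probes must start before IPVS drops an idle connection entry. A sketch for reading a value back out of the file and checking that condition; `conf_value` is a hypothetical helper that accepts both the `key = value` and `key=value` styles used above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the last value a sysctl-style file assigns to KEY.
# Comment lines starting with '#' or ';' are ignored.
conf_value() {
  local file="$1" key="$2"
  awk -F= -v k="$key" '
    /^[ \t]*[#;]/ { next }
    {
      name = $1
      gsub(/[ \t]/, "", name)
      if (name == k) {
        val = substr($0, index($0, "=") + 1)
        sub(/^[ \t]+/, "", val)
        sub(/[ \t]+$/, "", val)
        last = val
      }
    }
    END { if (last != "") print last }' "$file"
}
```

For example: `ka=$(conf_value /etc/sysctl.d/k8s.conf net.ipv4.tcp_keepalive_time); [ "$ka" -lt 900 ] && echo "keepalive OK"`.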

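Enable the services at boot (Docker and kubelet are only installed in the later steps, so run these commands once they are in place):

# Start Docker

sed -i "13i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service

systemctl daemon-reload

systemctl enable docker

systemctl start docker

# Enable kubelet at boot

systemctl enable kubelet

# keepalived and haproxy are only needed for a highly available control plane; this guide does not install them

systemctl enable keepalived

systemctl enable haproxy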
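Inserting a line at a fixed position (`13i`) depends on the exact layout of the packaged docker.service, and a Docker package upgrade replaces that file. An alternative, sketched here and not what the original steps do, is a systemd drop-in file; `write_docker_dropin` and the file name 10-forward-accept.conf are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the FORWARD ACCEPT rule through a systemd drop-in
# instead of editing /usr/lib/systemd/system/docker.service in place.
write_docker_dropin() {
  local base="${1:-/etc/systemd/system}"
  local dir="$base/docker.service.d"
  mkdir -p "$dir"
  # ExecStartPost= lines in a drop-in are appended to the unit's own list.
  cat > "$dir/10-forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}
```

Run `write_docker_dropin` as root, then `systemctl daemon-reload && systemctl restart docker`; `systemctl cat docker` shows the unit together with its drop-ins.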

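Set up passwordless SSH login:

# 1. Press Enter three times to accept the defaults; the key pair is then generated

ssh-keygen

# 2. Copy the public key to the other nodes

ssh-copy-id -i ~/.ssh/id_rsa.pub username@192.168.x.xxx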

1.2 Install Docker (all nodes)

yum install -y yum-utils device-mapper-persistent-data lvm2

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker.repo

List the available versions:

[root@k8s-master ~]# yum list docker-ce.x86_64 --showduplicates |sort -r

# yum makecache fast

# yum install docker-ce -y
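`yum install docker-ce -y` installs the newest release. To install the same release on every node, a sketch that turns the `yum list ... --showduplicates` output into package names `yum install` accepts; `docker_ce_specs` is a hypothetical helper, and it assumes the usual three-column `yum list` layout:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `yum list docker-ce.x86_64 --showduplicates`
# output on stdin and print installable specs such as docker-ce-18.06.1.ce-3.el7.
docker_ce_specs() {
  awk '$1 == "docker-ce.x86_64" {
         v = $2
         sub(/^[0-9]+:/, "", v)   # drop the epoch (e.g. "3:") if present
         print "docker-ce-" v
       }'
}
```

For example, `yum list docker-ce.x86_64 --showduplicates | sort -r | docker_ce_specs | head -n 5`, then install one of the printed names, e.g. `yum install -y docker-ce-18.06.1.ce-3.el7`.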

[root@k8s-node1 ~]# docker -v

Docker version 18.06.1-ce, build e68fc7a

[root@k8s-node1 ~]# sed -i "13i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service

[root@k8s-node1 ~]# systemctl daemon-reload ;systemctl start docker ;systemctl enable docker

2. Deploy Kubernetes with kubeadm

2.1 Install kubelet and kubeadm

Create the Kubernetes yum repository file /etc/yum.repos.d/kubernetes.repo with the following content (the latest version is installed by default; at the time of writing it was 1.14.2):

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

enabled=1

Then install the packages:

yum makecache fast

yum install -y kubelet kubeadm kubectl
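Because the rest of this guide assumes v1.14.2 while yum installs the newest version by default, pinning the package versions keeps kubelet, kubeadm and kubectl in line with `--kubernetes-version`. A sketch; `write_k8s_repo` and `k8s_packages` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the Aliyun Kubernetes repo file used in this guide.
write_k8s_repo() {
  local file="${1:-/etc/yum.repos.d/kubernetes.repo}"
  cat > "$file" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
enabled=1
EOF
}

# Hypothetical helper: print the three package specs pinned to one version.
k8s_packages() {
  local ver="$1"
  printf 'kubelet-%s kubeadm-%s kubectl-%s\n' "$ver" "$ver" "$ver"
}
```

For example: `write_k8s_repo && yum makecache fast && yum install -y $(k8s_packages 1.14.2)`.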

Turn off swap and reload the kernel parameters:

[root@k8s-node1 yum.repos.d]# swapoff -a

[root@k8s-node1 yum.repos.d]# sysctl -p /etc/sysctl.d/k8s.conf

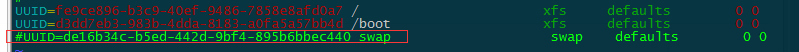

Comment out the swap entry in /etc/fstab:

mount -a
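Instead of editing /etc/fstab by hand, a sketch that comments out entries whose filesystem type (third field) is swap and keeps a backup. Unlike `grep -v swap`, it keeps the lines and leaves alone other lines that merely contain the word swap. `comment_out_swap` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: prefix '#' to active fstab entries of type swap,
# saving the original as FILE.bak first.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp -- "$fstab" "$fstab.bak"
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Run `comment_out_swap` as root, then `mount -a` as above.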

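Also tell kubelet not to fail when swap is enabled (this pairs with the --ignore-preflight-errors=Swap flag passed to kubeadm init below):

echo "KUBELET_EXTRA_ARGS=--fail-swap-on=false" > /etc/sysconfig/kubelet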

2.2 Initialize the cluster with kubeadm init

Enable the kubelet service at boot on every node:

systemctl enable kubelet.service

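Initializing the cluster with kubeadm at this point fails with an error. During initialization, kubeadm first checks whether the images of the Kubernetes components exist locally; if they do not, it pulls them from Google's registry (k8s.gcr.io). If you cannot reach that registry without a proxy, the images have to be present locally.

Instead, we can pull the component images from Docker Hub and retag them.

[root@k8s-master ~]# kubeadm init --kubernetes-version=v1.14.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --apiserver-advertise-address=10.0.0.11 --ignore-preflight-errors=Swap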

Run the following script:

[root@k8s-master ~]# cat k8s.sh

docker pull mirrorgooglecontainers/kube-apiserver:v1.14.2

docker pull mirrorgooglecontainers/kube-controller-manager:v1.14.2

docker pull mirrorgooglecontainers/kube-scheduler:v1.14.2

docker pull mirrorgooglecontainers/kube-proxy:v1.14.2

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd:3.3.10

docker pull coredns/coredns:1.3.1

docker tag mirrorgooglecontainers/kube-proxy:v1.14.2 k8s.gcr.io/kube-proxy:v1.14.2

docker tag mirrorgooglecontainers/kube-scheduler:v1.14.2 k8s.gcr.io/kube-scheduler:v1.14.2

docker tag mirrorgooglecontainers/kube-apiserver:v1.14.2 k8s.gcr.io/kube-apiserver:v1.14.2

docker tag mirrorgooglecontainers/kube-controller-manager:v1.14.2 k8s.gcr.io/kube-controller-manager:v1.14.2

docker tag mirrorgooglecontainers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker rmi mirrorgooglecontainers/kube-proxy:v1.14.2

docker rmi mirrorgooglecontainers/kube-scheduler:v1.14.2

docker rmi mirrorgooglecontainers/kube-apiserver:v1.14.2

docker rmi mirrorgooglecontainers/kube-controller-manager:v1.14.2

docker rmi mirrorgooglecontainers/etcd:3.3.10

docker rmi coredns/coredns:1.3.1

docker rmi mirrorgooglecontainers/pause:3.1

bash k8s.sh
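k8s.sh repeats the same three steps for each image. A sketch of the same sequence as a loop; the image list is copied from k8s.sh, while `DRY_RUN`, `run` and `pull_and_retag` are hypothetical names. `kubeadm config images list --kubernetes-version v1.14.2` prints the images kubeadm expects, which is a way to check the list before pulling:

```shell
#!/usr/bin/env bash
# Same pull/tag/rmi sequence as k8s.sh, written as a loop.
# With DRY_RUN=1 the docker commands are printed instead of executed.
K8S_VERSION=v1.14.2
IMAGES=(
  "mirrorgooglecontainers/kube-apiserver:$K8S_VERSION"
  "mirrorgooglecontainers/kube-controller-manager:$K8S_VERSION"
  "mirrorgooglecontainers/kube-scheduler:$K8S_VERSION"
  "mirrorgooglecontainers/kube-proxy:$K8S_VERSION"
  "mirrorgooglecontainers/pause:3.1"
  "mirrorgooglecontainers/etcd:3.3.10"
  "coredns/coredns:1.3.1"
)

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

pull_and_retag() {
  local src target
  for src in "${IMAGES[@]}"; do
    # Keep only "name:tag" and place it under k8s.gcr.io, where kubeadm looks.
    target="k8s.gcr.io/${src##*/}"
    run docker pull "$src" &&
      run docker tag "$src" "$target" &&
      run docker rmi "$src"   # removes only the mirror tag; the image stays
  done
}
```

`DRY_RUN=1 pull_and_retag` prints the 21 docker commands; `pull_and_retag` runs them.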

[root@k8s-master ~]# docker images

The detailed steps are as follows:

Check the Kubernetes version:

[root@k8s-master yum.repos.d]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.2", GitCommit:"4ed3216f3ec431b140b1d899130a69fc671678f4", GitTreeState:"clean", BuildDate:"2019-05-30T16:43:08Z", GoVersion:"go1.10.4", Compiler:"gc", Platform:"linux/amd64"}

Run the initialization on the master node again (set --apiserver-advertise-address to your master node's IP address; 10.0.0.11 is the value used here):

[root@k8s-master ~]# kubeadm init --kubernetes-version=v1.14.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --apiserver-advertise-address=10.0.0.11 --ignore-preflight-errors=Swap

The output is as follows:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.153:6443 --token il8ser.l9jyab9xa6m2t971 \
    --discovery-token-ca-cert-hash sha256:492ec57cb9723ae8a71c2b9668bb7a86f0333c530ea5db10540882d6a6463efc

Follow the instructions in the output above:

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

mkdir -p ~/k8s/ && cd ~/k8s

[root@k8s-master k8s]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Change --iface to your own network interface

# vim kube-flannel.yml

args:

- --ip-masq

- --kube-subnet-mgr

- --iface=eth0

# kubectl apply -f kube-flannel.yml
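flanneld uses the interface of the default route unless `--iface` is given, which matters on hosts with several NICs. A sketch for setting the argument without opening an editor, assuming GNU sed and the `args:` layout of kube-flannel.yml shown above; `set_flannel_iface` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set --iface=IFACE in kube-flannel.yml (GNU sed assumed).
set_flannel_iface() {
  local file="$1" iface="$2"
  if grep -q -- '- --iface=' "$file"; then
    # An --iface argument already exists: replace its value.
    sed -i "s/- --iface=.*/- --iface=$iface/" "$file"
  else
    # Add the argument right after --kube-subnet-mgr with the same indentation.
    sed -i "s/^\([[:space:]]*\)- --kube-subnet-mgr\$/&\n\1- --iface=$iface/" "$file"
  fi
}
```

For example: `set_flannel_iface kube-flannel.yml eth0 && kubectl apply -f kube-flannel.yml`.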

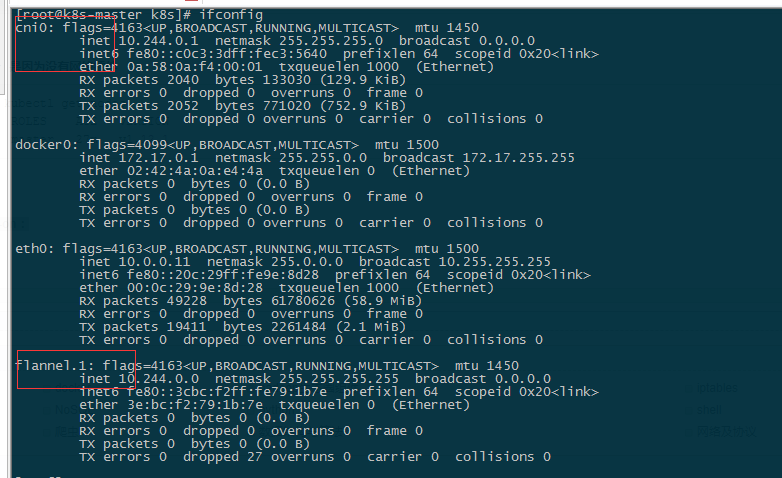

Check the cluster status.

If you run into problems while initializing the cluster, clean up with the following commands:

kubeadm reset

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

2.3 Install the Pod network

# The master is NotReady at this point because no network plugin has been installed yet

[root@k8s-master k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 27m v1.12.1
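To list the nodes that are not yet Ready, a sketch that parses the table printed by `kubectl get nodes`; `not_ready_nodes` is a hypothetical helper, and a status such as Ready,SchedulingDisabled is also reported because it is not exactly Ready:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin (header line
# included) and print the names of nodes whose STATUS is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`; empty output means every node is Ready.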

Next, install the flannel network add-on:

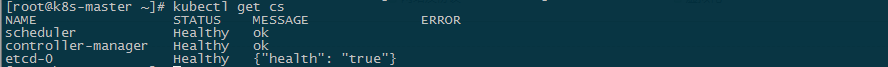

Get the health status of the components:

[root@k8s-master k8s]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

[root@k8s-master k8s]# kubectl describe node k8s-master

Name: k8s-master

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/hostname=k8s-master

node-role.kubernetes.io/master=

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Wed, 17 Oct 2018 21:24:01 +0800

Taints: node-role.kubernetes.io/master:NoSchedule

node.kubernetes.io/not-ready:NoSchedule

Unschedulable: false

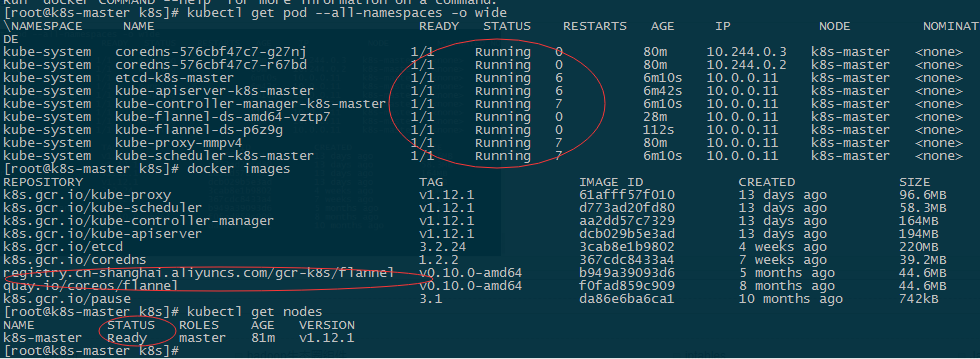

The output above shows that a flannel image is pulled first; after that, all Pods in the namespace are Running and the master is Ready.

2.4 Let the master node run workloads

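For security reasons, Pods are not scheduled onto the master node by default; that is, the master does not run workloads. This is because the master node k8s-master carries the node-role.kubernetes.io/master:NoSchedule taint: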

[root@k8s-master k8s]# kubectl describe node k8s-master | grep Taint

Taints: node-role.kubernetes.io/master:NoSchedule

# To revert this later so that the master no longer runs workloads:

kubectl taint node k8s-master node-role.kubernetes.io/master="":NoSchedule

Remove the taint so that k8s-master can run workloads:

[root@k8s-master k8s]# kubectl taint nodes k8s-master node-role.kubernetes.io/master-

node/k8s-master untainted

[root@k8s-master k8s]# kubectl describe node k8s-master | grep Taint

Taints: <none>
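`kubectl describe node` prints the first taint on the Taints: line and each further taint on an indented line below it, so `grep Taint` alone can miss some of them. A sketch that extracts the full list; `node_taints` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl describe node` output on stdin and print
# one taint per line, or "<none>". Indented lines continue the Taints: field.
node_taints() {
  awk '
    /^Taints:/ { t = 1; sub(/^Taints:[ \t]*/, ""); print; next }
    t && /^[ \t]/ { sub(/^[ \t]+/, ""); print; next }
    { t = 0 }'
}
```

Usage: `kubectl describe node k8s-master | node_taints`.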

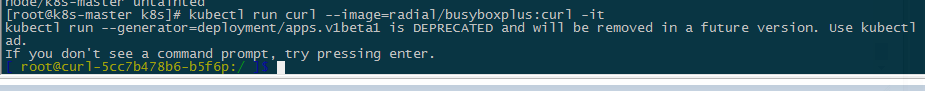

2.5 Test DNS

kubectl run curl --image=radial/busyboxplus:curl -it

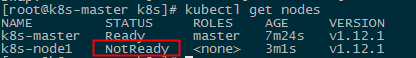

2.6 Add worker nodes to the Kubernetes cluster

Next, add the hosts k8s-node1 and k8s-node2 to the Kubernetes cluster. Run the following on both k8s-node1 and k8s-node2:

[root@k8s-node1 ~]# kubeadm join 10.0.0.11:6443 --token i4us8x.pw2f3botcnipng8e --discovery-token-ca-cert-hash sha256:d16ac747c2312ae829aa29a3596f733f920ca3d372d9f1b34d33c938be067e51
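Bootstrap tokens expire after 24 hours by default, and `kubeadm token create --print-join-command` prints a fresh join command on the master. If you saved the `kubeadm init` output to a file instead, a sketch for rebuilding the join command from it; `join_command_from_log` is a hypothetical helper that assumes the two-line `kubeadm join ... \` format shown in the init output:

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract endpoint, token and CA cert hash from saved
# `kubeadm init` output and print the join command on a single line.
join_command_from_log() {
  local log="$1" endpoint token hash
  endpoint=$(grep -o 'kubeadm join [^ ]*' "$log" | head -n1 | awk '{print $3}')
  token=$(grep -o -- '--token [^ ]*' "$log" | head -n1 | awk '{print $2}')
  hash=$(grep -o 'sha256:[0-9a-f]*' "$log" | head -n1)
  # Fail if any of the three pieces is missing.
  [ -n "$endpoint" ] && [ -n "$token" ] && [ -n "$hash" ] || return 1
  echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $hash"
}
```

For example, run `kubeadm init ... | tee kubeadm-init.log` on the master, and later `join_command_from_log kubeadm-init.log`.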

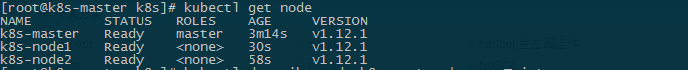

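Check the nodes.

If a node does not become Ready, the reason is that k8s-node1 also needs the images: run the image script above on it as well. Reset both nodes with kubeadm reset, then join them to the cluster again.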

To remove k8s-node1 from the cluster, run the following on the master node:

kubectl drain k8s-node1 --delete-local-data --force --ignore-daemonsets

kubectl delete node k8s-node1