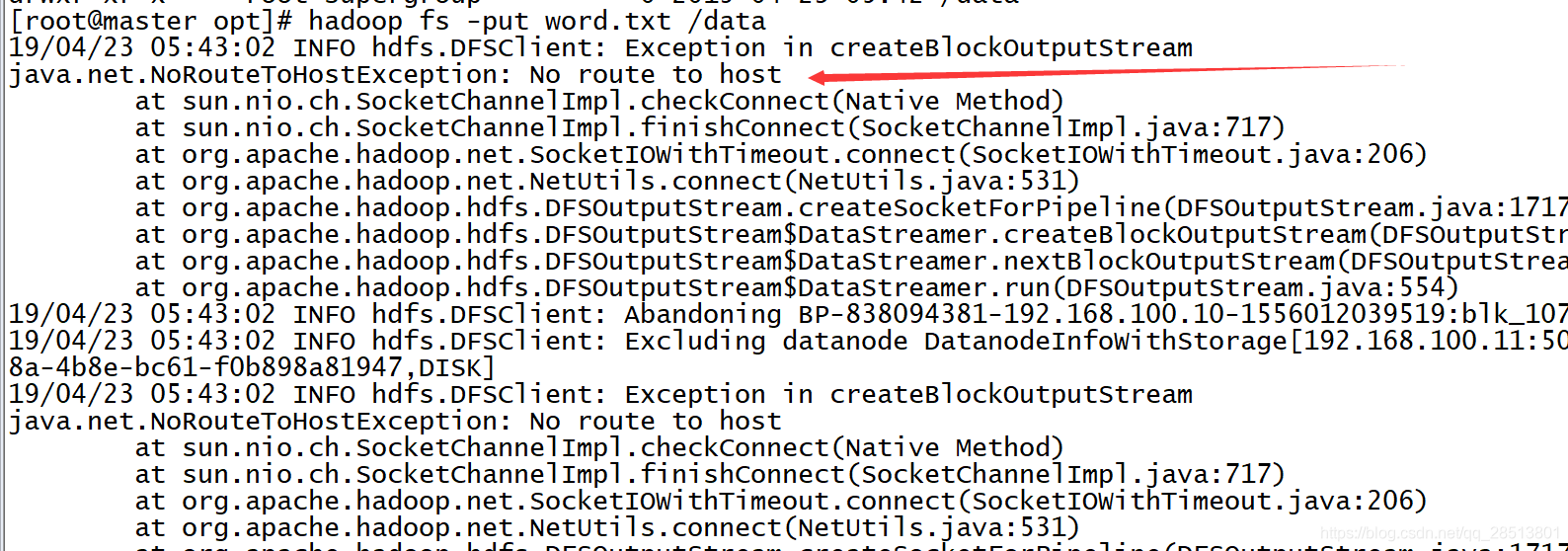

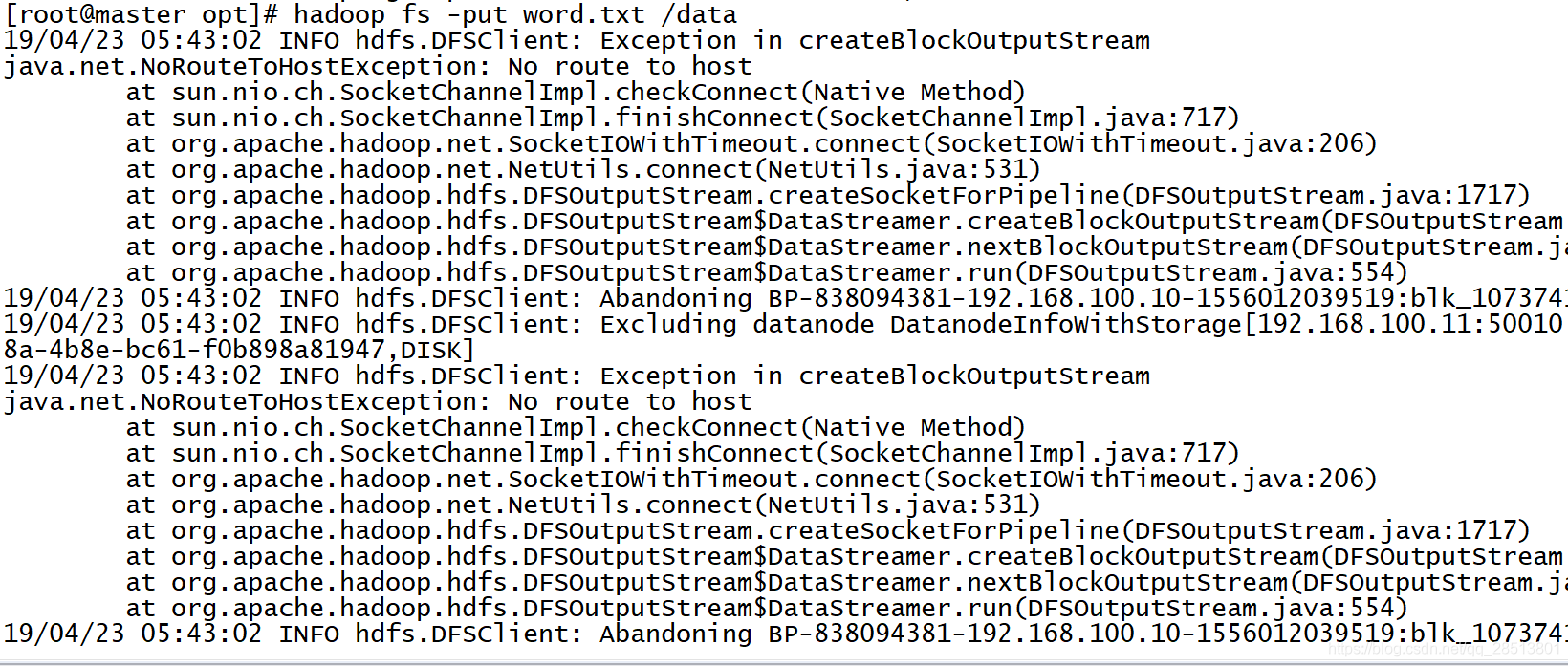

When uploading a file to HDFS with `hadoop fs -put`, the following error was thrown:

```
19/04/23 05:43:02 INFO hdfs.DFSClient: Exception in createBlockOutputStream
java.net.NoRouteToHostException: No route to host
    at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
    at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)
    at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)
    at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)
    at org.apache.hadoop.hdfs.DFSOutputStream.createSocketForPipeline(DFSOutputStream.java:1717)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1400)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1447)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:554)
19/04/23 05:43:02 INFO hdfs.DFSClient: Abandoning BP-838094381-192.168.100.10-1556012039519:blk_1073741826_1002
19/04/23 05:43:02 INFO hdfs.DFSClient: Excluding datanode DatanodeInfoWithStorage[192.168.100.12:50010,DS-a75fbfec-d9e0-4300-8d4a-e34d503f316d,DISK]
19/04/23 05:43:02 WARN hdfs.DFSClient: DataStreamer Exception
org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /data/word.txt.COPYING could only be replicated to 0 nodes instead of minReplication (=1). There are 2 datanode(s) running and 2 node(s) are excluded in this operation.
    at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1625)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getNewBlockTargets(FSNamesystem.java:3132)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3056)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:725)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:493)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol...
    ...
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
    at org.apache.hadoop.ipc.Server$Handler...
    ...
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2213)
    at org.apache.hadoop.ipc.Client.call(Client.java:1476)
    at org.apache.hadoop.ipc.Client.call(Client.java:1413)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)
    at com.sun.proxy.$Proxy10.addBlock(Unknown Source)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.a
```

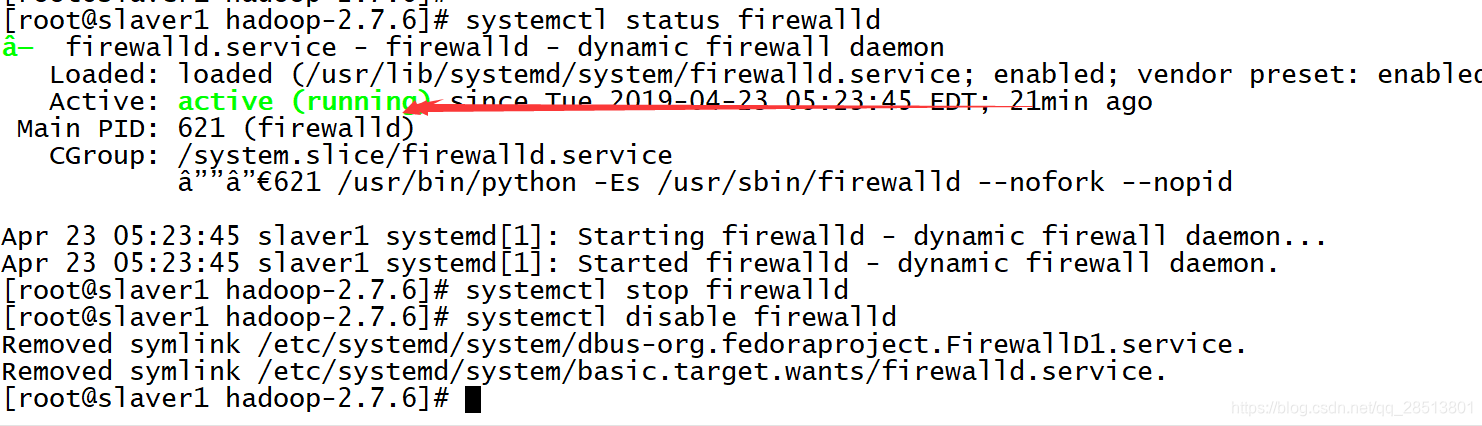

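The `NoRouteToHostException` means the client could not open a connection to the DataNode at 192.168.100.12:50010, and the NameNode then reports that both of the 2 running DataNodes were excluded, so the block could not be written anywhere. Here the connections were being blocked by firewalld, so the fix is simply to turn it off (on a systemd-based distribution such as CentOS 7, `systemctl stop firewalld`, plus `systemctl disable firewalld` to keep it from starting again at boot).

After that, everything works normally.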
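Before switching the firewall off, it can help to confirm that the problem really is network reachability and not HDFS itself. A minimal sketch, assuming Python 3 is available on the client machine: try a plain TCP connection to the DataNode's data-transfer port. `can_connect` is a hypothetical helper name, and the host and port are simply the ones from the "Excluding datanode" line in the log.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # "No route to host", "connection refused" and timeouts are all OSError subclasses
        return False
```

Run it on the machine that executes `hadoop fs -put`: if `can_connect("192.168.100.12", 50010)` returns `False` while the DataNode process is running, the network or firewall is the likely culprit; once firewalld is stopped it should return `True`.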

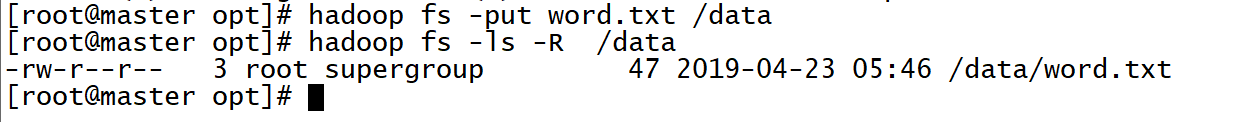

```
[root@master opt]# hadoop fs -put word.txt /data
[root@master opt]# hadoop fs -ls -R /data
-rw-r--r-- 3 root supergroup 47 2019-04-23 05:46 /data/word.txt
```

To check that the cluster works, run the wordcount example:

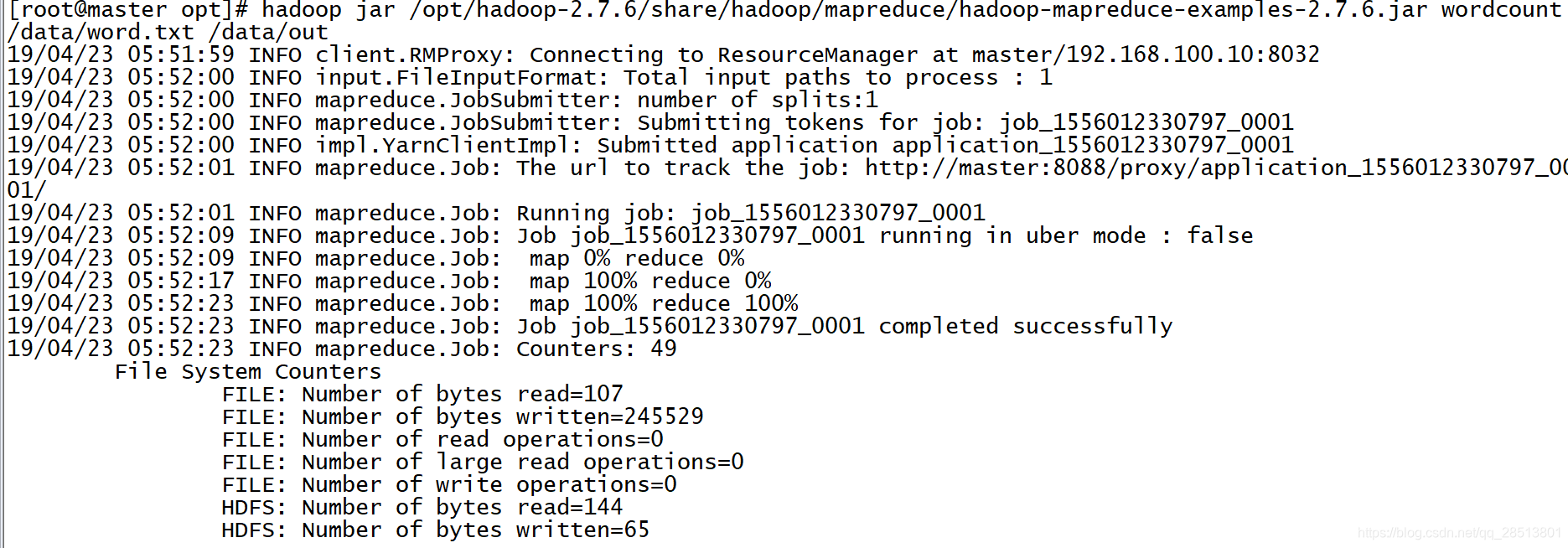

```
[root@master opt]# hadoop jar /opt/hadoop-2.7.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar wordcount /data/word.txt /data/out
19/04/23 05:51:59 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.100.10:8032
19/04/23 05:52:00 INFO input.FileInputFormat: Total input paths to process : 1
19/04/23 05:52:00 INFO mapreduce.JobSubmitter: number of splits:1
19/04/23 05:52:00 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1556012330797_0001
19/04/23 05:52:00 INFO impl.YarnClientImpl: Submitted application application_1556012330797_0001
19/04/23 05:52:01 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1556012330797_0001/
19/04/23 05:52:01 INFO mapreduce.Job: Running job: job_1556012330797_0001
```


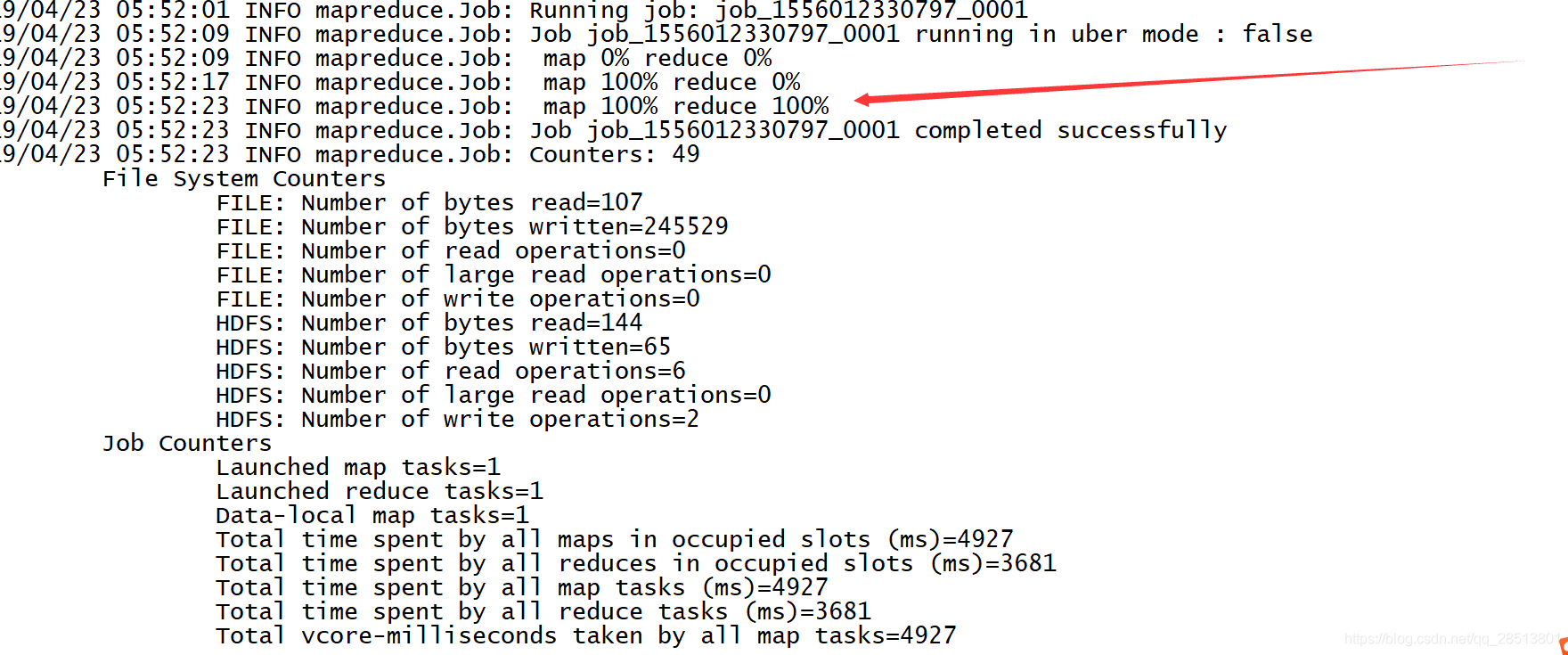


```
19/04/23 05:52:09 INFO mapreduce.Job: Job job_1556012330797_0001 running in uber mode : false
19/04/23 05:52:09 INFO mapreduce.Job: map 0% reduce 0%
19/04/23 05:52:17 INFO mapreduce.Job: map 100% reduce 0%
19/04/23 05:52:23 INFO mapreduce.Job: map 100% reduce 100%
19/04/23 05:52:23 INFO mapreduce.Job: Job job_1556012330797_0001 completed successfully
19/04/23 05:52:23 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=107
        FILE: Number of bytes written=245529
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=144
        HDFS: Number of bytes written=65
        HDFS: Number of read operations=6
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=4927
        Total time spent by all reduces in occupied slots (ms)=3681
        Total time spent by all map tasks (ms)=4927
        Total time spent by all reduce tasks (ms)=3681
        Total vcore-milliseconds taken by all map tasks=4927
        Total vcore-milliseconds taken by all reduce tasks=3681
        Total megabyte-milliseconds taken by all map tasks=5045248
        Total megabyte-milliseconds taken by all reduce tasks=3769344
```

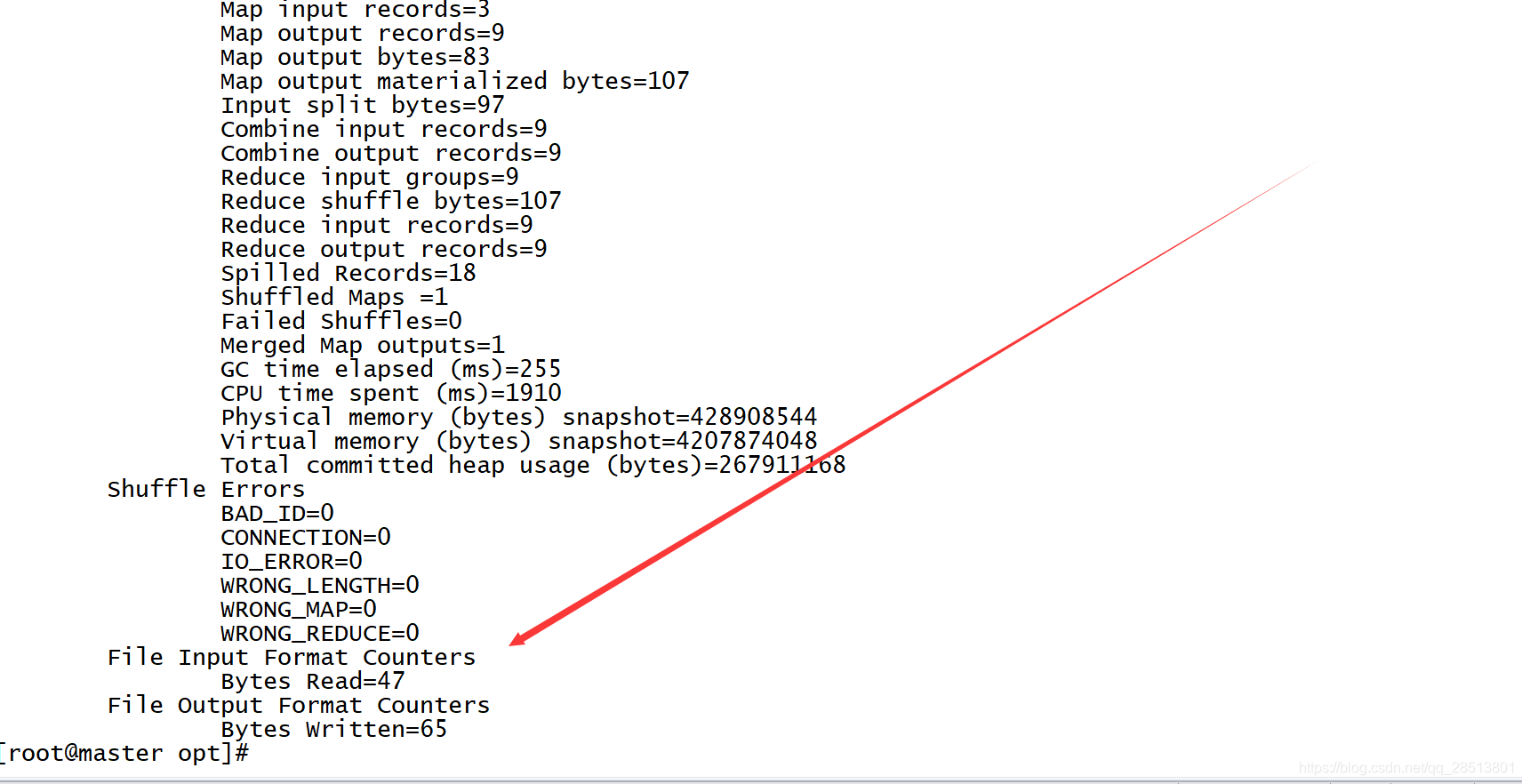

```
    Map-Reduce Framework
        Map input records=3
        Map output records=9
        Map output bytes=83
        Map output materialized bytes=107
        Input split bytes=97
        Combine input records=9
        Combine output records=9
        Reduce input groups=9
        Reduce shuffle bytes=107
        Reduce input records=9
        Reduce output records=9
        Spilled Records=18
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=255
        CPU time spent (ms)=1910
        Physical memory (bytes) snapshot=428908544
        Virtual memory (bytes) snapshot=4207874048
        Total committed heap usage (bytes)=267911168
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=47
    File Output Format Counters
        Bytes Written=65
[root@master opt]#
```
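The "megabyte-milliseconds" job counters can be checked by hand: for each task type, Hadoop multiplies the task running time in milliseconds by the memory requested per container. A tiny sketch of that arithmetic, assuming 1024 MB containers (the Hadoop 2.x default for `mapreduce.map.memory.mb` and `mapreduce.reduce.memory.mb`); the log never prints the container size, but the counters are consistent with it. `mb_millis` is a hypothetical helper name.

```python
# Assumed container size: 1024 MB, the Hadoop 2.x default for
# mapreduce.map.memory.mb / mapreduce.reduce.memory.mb (not shown in the log).
CONTAINER_MB = 1024


def mb_millis(task_ms: int, container_mb: int = CONTAINER_MB) -> int:
    """Megabyte-milliseconds = task running time (ms) x memory per container (MB)."""
    return task_ms * container_mb


# Running times copied from the wordcount job's counters
print(mb_millis(4927))  # map tasks: 5045248
print(mb_millis(3681))  # reduce tasks: 3769344
```

With one vcore per container, the vcore-milliseconds counters are just the running times themselves, which is why they repeat 4927 and 3681. The pi job's counters follow the same rule (74789 × 1024 = 76583936).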

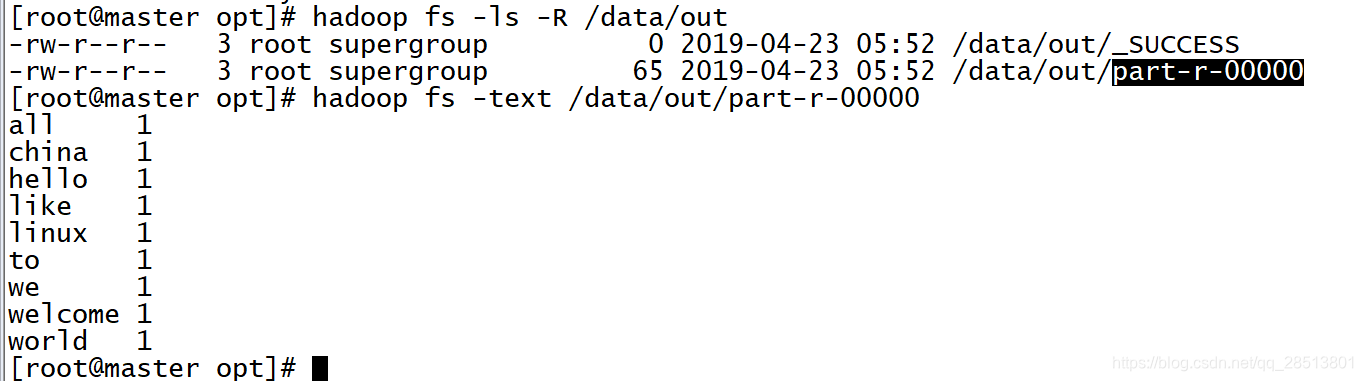

```
[root@master opt]# hadoop fs -ls -R /data/out
-rw-r--r-- 3 root supergroup 0 2019-04-23 05:52 /data/out/_SUCCESS
-rw-r--r-- 3 root supergroup 65 2019-04-23 05:52 /data/out/part-r-00000
```

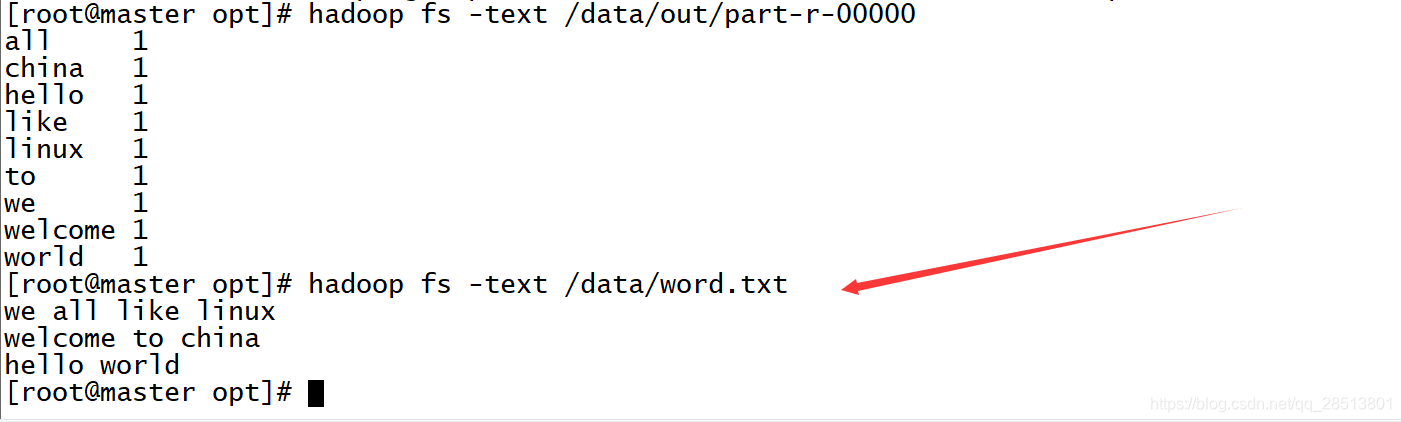

```
[root@master opt]# hadoop fs -text /data/out/part-r-00000
all 1
china 1
hello 1
like 1
linux 1
to 1
we 1
welcome 1
world 1
[root@master opt]#
[root@master opt]# hadoop fs -text /data/word.txt
we all like linux
welcome to china
hello world
```
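What the wordcount job computes can be sketched in a few lines of single-process Python. This is an illustration, not Hadoop's implementation: the function names are made up, and it assumes `word.txt` ends with a newline (which is what makes the input exactly 47 bytes, matching `Bytes Read=47`). Hadoop's WordCount also registers its reducer as the combiner, which is why `Combine input records` and `Combine output records` are both 9 here: every word occurs only once, so there is nothing to combine.

```python
from collections import Counter
from typing import Iterable, Iterator, List, Tuple


def map_words(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Map step: emit (word, 1) for every whitespace-separated token."""
    for line in lines:
        for word in line.split():
            yield word, 1


def reduce_counts(pairs: Iterable[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Reduce step: sum the counts per word; sorting mimics the key order of part-r-00000."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())


def format_output(results: List[Tuple[str, int]]) -> str:
    """Render like TextOutputFormat's default: word<TAB>count, one record per line."""
    return "".join(f"{word}\t{count}\n" for word, count in results)


text = "we all like linux\nwelcome to china\nhello world\n"  # assumed trailing newline
lines = text.splitlines()
results = reduce_counts(map_words(lines))
print(format_output(results), end="")
print(len(lines), sum(1 for _ in map_words(lines)))  # 3 9  (map input / map output records)
print(len(text.encode()), len(format_output(results).encode()))  # 47 65  (bytes read / written)
```

The last two lines line up with `Map input records=3`, `Map output records=9`, `Bytes Read=47` and `Bytes Written=65` (also the 65-byte size of `part-r-00000`).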

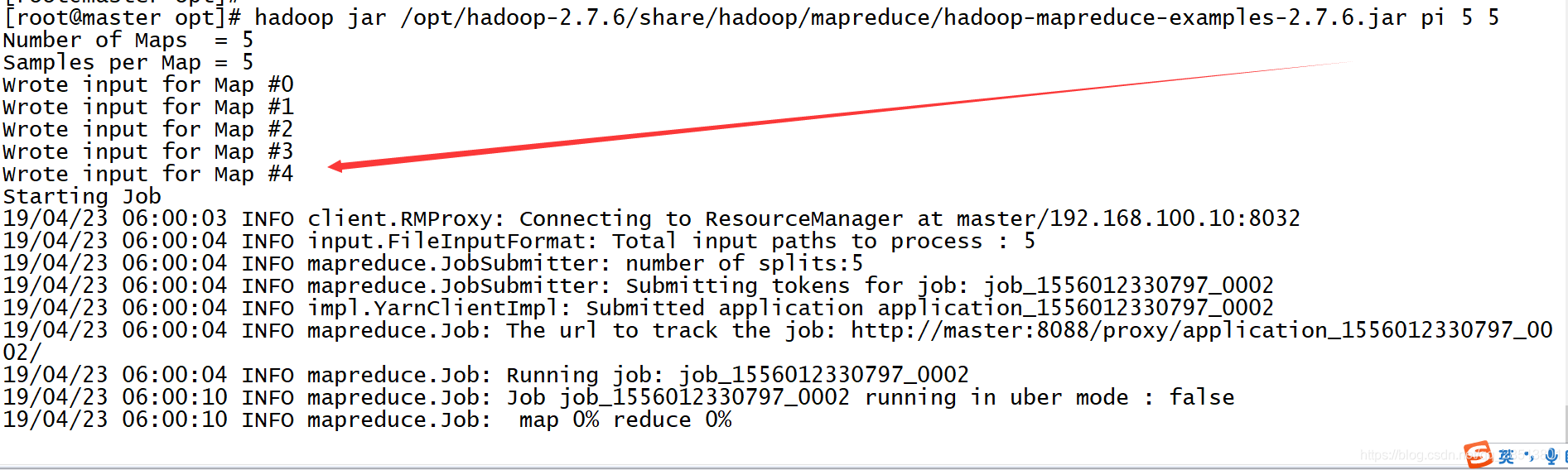

Example 2: estimating Pi with the `pi` example:

```
[root@master opt]# hadoop jar /opt/hadoop-2.7.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar pi 5 5
Number of Maps = 5
Samples per Map = 5
Wrote input for Map #0
Wrote input for Map #1
Wrote input for Map #2
Wrote input for Map #3
Wrote input for Map #4
Starting Job
19/04/23 06:00:03 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.100.10:8032
19/04/23 06:00:04 INFO input.FileInputFormat: Total input paths to process : 5
19/04/23 06:00:04 INFO mapreduce.JobSubmitter: number of splits:5
19/04/23 06:00:04 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1556012330797_0002
19/04/23 06:00:04 INFO impl.YarnClientImpl: Submitted application application_1556012330797_0002
19/04/23 06:00:04 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1556012330797_0002/
19/04/23 06:00:04 INFO mapreduce.Job: Running job: job_1556012330797_0002
```


```
19/04/23 06:00:10 INFO mapreduce.Job: Job job_1556012330797_0002 running in uber mode : false
19/04/23 06:00:10 INFO mapreduce.Job: map 0% reduce 0%
19/04/23 06:00:28 INFO mapreduce.Job: map 80% reduce 0%
19/04/23 06:00:29 INFO mapreduce.Job: map 100% reduce 0%
19/04/23 06:00:33 INFO mapreduce.Job: map 100% reduce 100%
19/04/23 06:00:33 INFO mapreduce.Job: Job job_1556012330797_0002 completed successfully
19/04/23 06:00:33 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=116
        FILE: Number of bytes written=738213
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=1300
        HDFS: Number of bytes written=215
        HDFS: Number of read operations=23
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=3
    Job Counters
        Launched map tasks=5
        Launched reduce tasks=1
        Data-local map tasks=5
        Total time spent by all maps in occupied slots (ms)=74789
        Total time spent by all reduces in occupied slots (ms)=3160
        Total time spent by all map tasks (ms)=74789
        Total time spent by all reduce tasks (ms)=3160
        Total vcore-milliseconds taken by all map tasks=74789
        Total vcore-milliseconds taken by all reduce tasks=3160
        Total megabyte-milliseconds taken by all map tasks=76583936
        Total megabyte-milliseconds taken by all reduce tasks=3235840
    Map-Reduce Framework
        Map input records=5
        Map output records=10
        Map output bytes=90
        Map output materialized bytes=140
        Input split bytes=710
        Combine input records=0
        Combine output records=0
        Reduce input groups=2
        Reduce shuffle bytes=140
        Reduce input records=10
        Reduce output records=0
        Spilled Records=20
        Shuffled Maps =5
        Failed Shuffles=0
        Merged Map outputs=5
        GC time elapsed (ms)=2229
        CPU time spent (ms)=4930
        Physical memory (bytes) snapshot=1430020096
        Virtual memory (bytes) snapshot=12611244032
        Total committed heap usage (bytes)=1041760256
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=590
    File Output Format Counters
        Bytes Written=97
Job Finished in 29.656 seconds
Estimated value of Pi is 3.68000000000000000000
[root@master opt]#
```
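Why 3.68 and not something closer to 3.14? As far as I can tell from the Hadoop source, the `pi` example (class `QuasiMonteCarlo`) does not use random numbers: it draws points from a 2-D Halton sequence (bases 2 and 3), each map starting at offset `mapIndex × samplesPerMap`, counts how many fall inside the circle inscribed in the unit square, and estimates π as 4 × inside / total. The Python sketch below re-implements that idea under those assumptions; it is not Hadoop's code, and the function names are made up. With `pi 5 5`, 23 of the 25 points land inside the circle, and 4 × 23 / 25 = 3.68; 25 samples are simply too few for a good estimate.

```python
def radical_inverse(index: int, base: int) -> float:
    """Van der Corput radical inverse: mirror the base-`base` digits of index around the radix point."""
    result, scale = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * scale
        scale /= base
    return result


def estimate_pi(num_maps: int, samples_per_map: int) -> float:
    """Quasi-Monte Carlo estimate of pi from 2-D Halton points (bases 2 and 3)."""
    inside = 0
    for m in range(num_maps):
        offset = m * samples_per_map  # assumption: each map continues where the previous one stopped
        for i in range(1, samples_per_map + 1):
            # Halton point recentred to the square [-0.5, 0.5] x [-0.5, 0.5]
            x = radical_inverse(offset + i, 2) - 0.5
            y = radical_inverse(offset + i, 3) - 0.5
            if x * x + y * y <= 0.25:  # inside the circle of radius 0.5
                inside += 1
    return 4.0 * inside / (num_maps * samples_per_map)


print(estimate_pi(5, 5))  # 3.68, the same value as `pi 5 5` above
```

Raising the arguments (for example `estimate_pi(100, 100)`, or `pi 100 100` on the cluster) brings the estimate much closer to 3.14.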

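As this shows, small problems like this one can usually be solved just by reading the exception that is thrown: here the `NoRouteToHostException` and the excluded DataNode address already pointed at a network problem.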

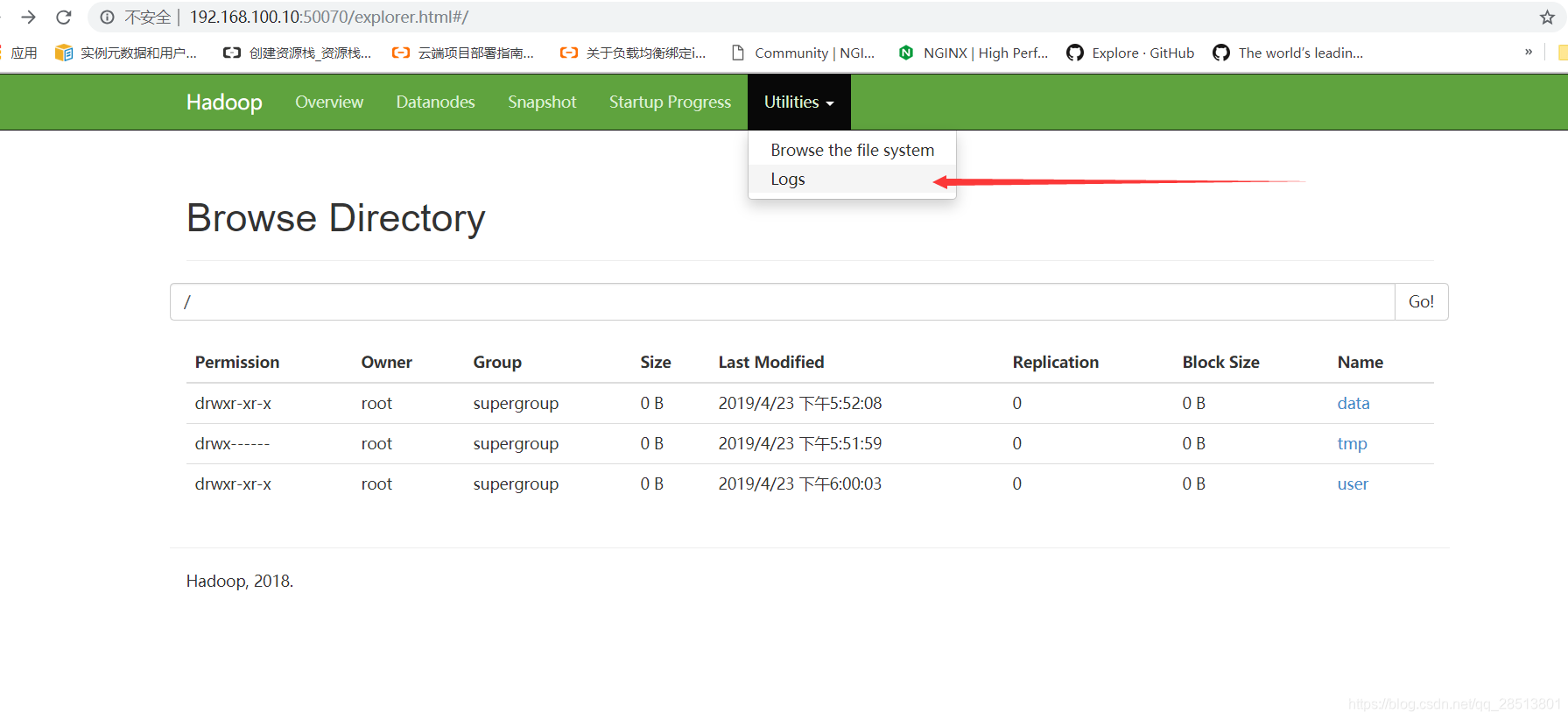

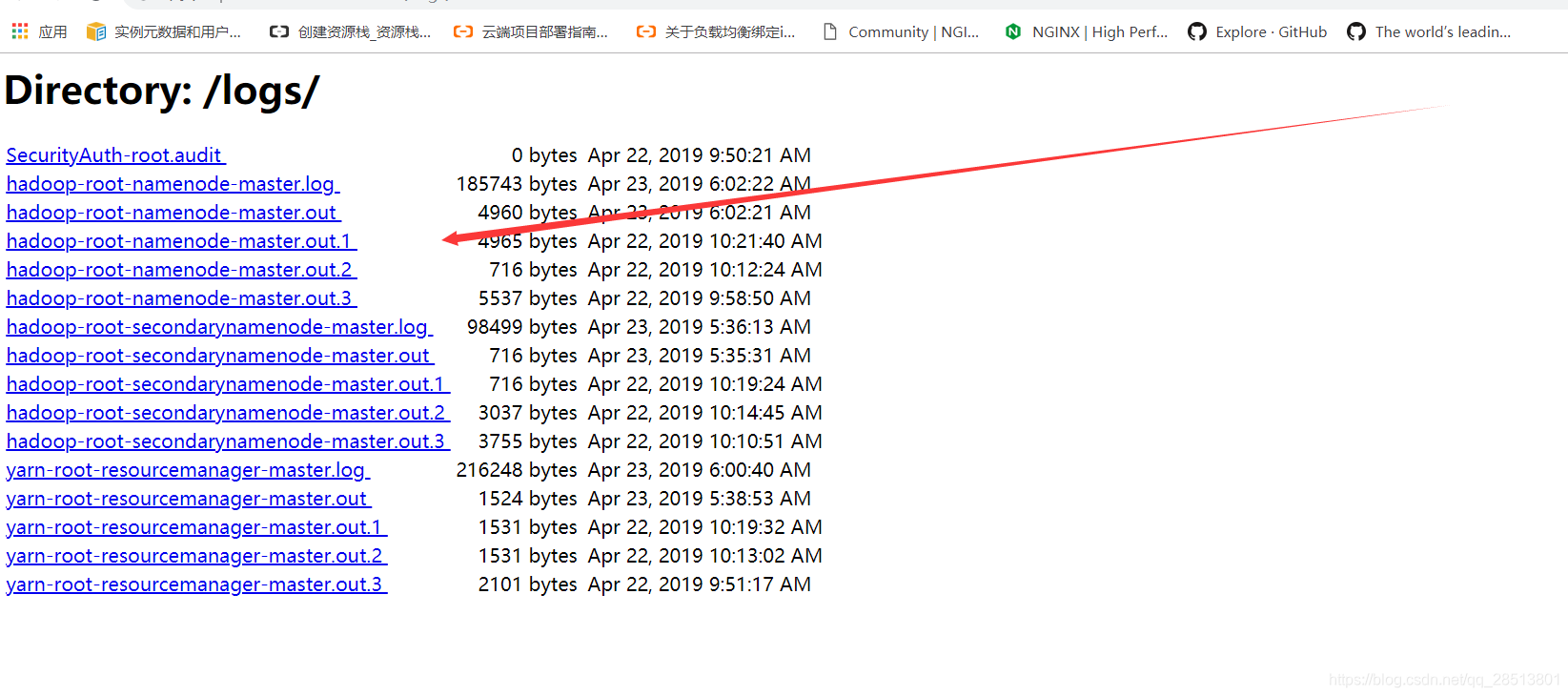

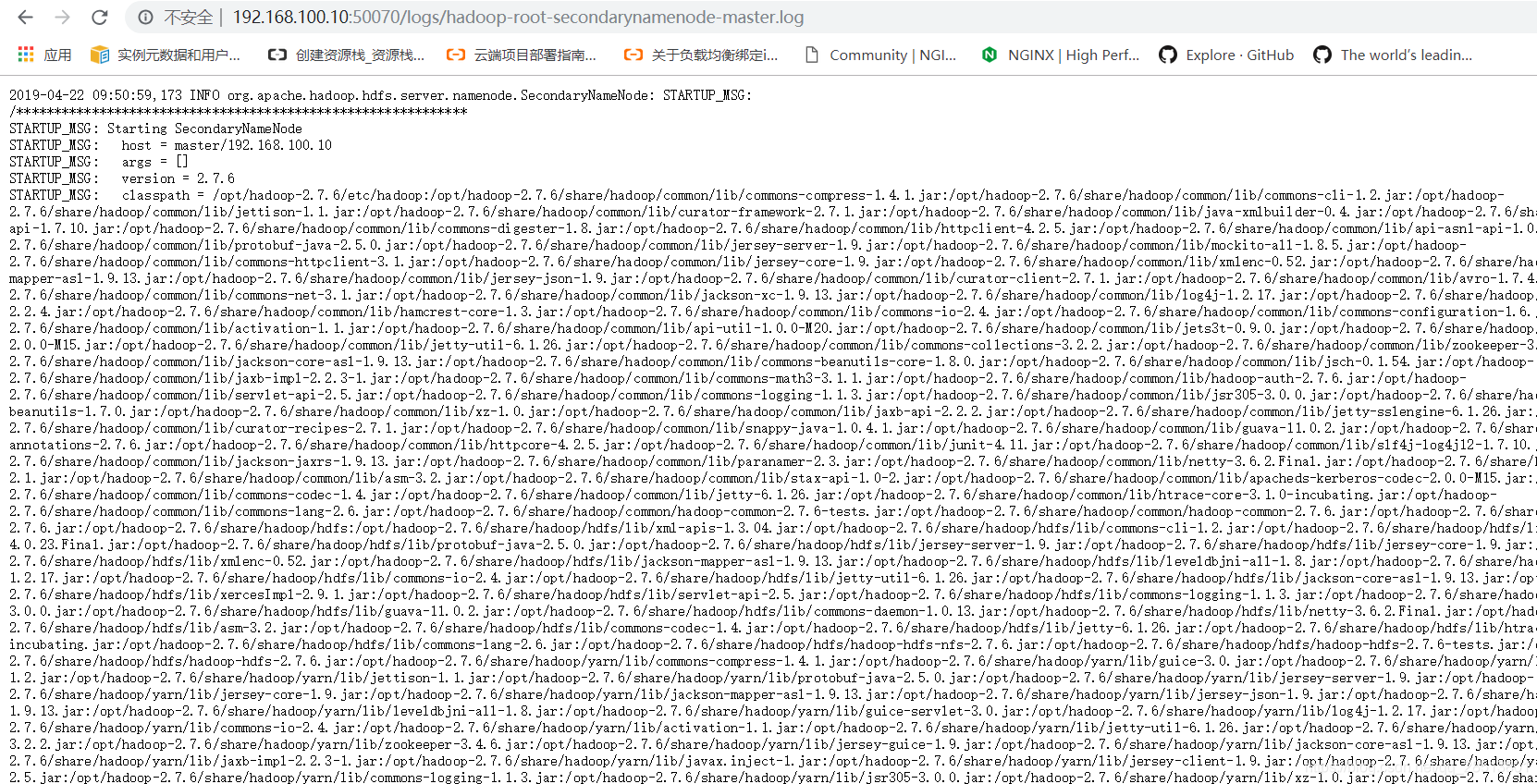

For more complicated problems, check the logs: