爬取案例:

目标网站:

url = 'http://www.chinanews.com/rss/scroll-news.xml'

页面特点:

先创建爬虫项目:

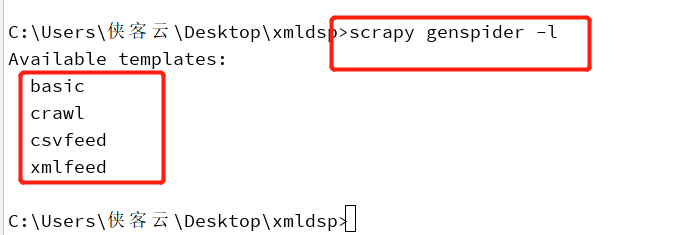

也可以查看爬虫类:

创建xmlFeed 爬虫可以用:

scrapy genspider -t xmlfeed cnew chinanews.com

2. 或可以先创建普通爬虫,再将普通的scrapy爬虫类改为XMLFeedSpider 爬虫类

该爬虫代码:

# -*- coding: utf-8 -*- import scrapy from scrapy.spiders import XMLFeedSpider from ..items import FeedItem class NewsSpider(XMLFeedSpider): name = 'news' #allowed_domains = ['www.chinanews.com'] start_urls = ['http://www.chinanews.com/rss/scroll-news.xml'] #iterator = 'itetnodes' #itertag = 'item' def parse_node(self, response, node): # item = FeedItem() item ={} item['title'] = node.xpath('title/text()').extract_first() item['link'] = node.xpath('link/text()').extract_first() item['desc'] =node.xpath('description/text()').extract_first() item['pub_date'] = node.xpath('pubDate/text()').extract_first() print(item) yield item

3. 将settings中的配置

# Obey robots.txt rules ROBOTSTXT_OBEY = False

4. 启动爬虫

scrapy crawl news --nolog

5.爬取效果