【Environment】

| Component | Version |
|---|---|
| Hadoop | Hadoop-2.6.0-cdh5.7.0-src.tar.gz |
| JDK | jdk-8u45-linux-x64.gz |
| Hive | hive-1.1.0-cdh5.7.0.tar.gz |
| Hue | hue-3.9.0-cdh5.7.0 |

【Install dependencies (run as root)】

[root@hadoop001 ~]# yum -y install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi gcc gcc-c++ krb5-devel libtidy libxml2-devel libxslt-devel openldap-devel python-devel sqlite-devel openssl-devel mysql-devel gmp-devel

【Download and extract Hue】

[hadoop@hadoop001 app]$ pwd

/home/hadoop/app

[hadoop@hadoop001 app]$ wget http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.7.0.tar.gz

[hadoop@hadoop001 app]$ ll

-rw-r--r-- 1 hadoop hadoop 66826791 Apr 18 19:56 hue-3.9.0-cdh5.7.0.tar.gz

[hadoop@hadoop001 app]$ tar -xzvf hue-3.9.0-cdh5.7.0.tar.gz

[hadoop@hadoop001 app]$ ll

drwxr-xr-x 11 hadoop hadoop 4096 Apr 18 21:51 hue-3.9.0-cdh5.7.0

-rw-r--r-- 1 hadoop hadoop 66826791 Apr 18 19:56 hue-3.9.0-cdh5.7.0.tar.gz

【Build Hue; this creates the build/env directory used in the following steps】

[hadoop@hadoop001 app]$ (cd hue-3.9.0-cdh5.7.0 && make apps)

【Configure environment variables】

[hadoop@hadoop001 app]$ vim ~/.bash_profile

export HUE_HOME=/home/hadoop/app/hue-3.9.0-cdh5.7.0

export PATH=$HUE_HOME/build/env/bin:$PATH

[hadoop@hadoop001 app]$ source ~/.bash_profile

[hadoop@hadoop001 app]$ echo $HUE_HOME

/home/hadoop/app/hue-3.9.0-cdh5.7.0

【Edit the configuration file】

vi $HUE_HOME/desktop/conf/hue.ini

【desktop】

[desktop]

http_host=0.0.0.0 # listen address

http_port=8888 # listen port

# Time zone name

time_zone=Asia/Shanghai # time zone

【hadoop】

[hadoop]

fs_defaultfs=hdfs://hadoop001:8020

webhdfs_url=http://hadoop001:50070/webhdfs/v1
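
The `webhdfs_url` above is the base of the WebHDFS REST API. As a rough illustration of how a client forms requests against it, the sketch below builds a `LISTSTATUS` URL in Python 3. The helper name `webhdfs_request_url` and the user `hadoop` are assumptions made for this example; they are not part of Hue.

```python
from urllib.parse import urlencode


def webhdfs_request_url(base, path, op, user):
    """Build a WebHDFS REST URL (hypothetical helper, for illustration only).

    base -- the value of webhdfs_url from hue.ini
    path -- an absolute HDFS path such as "/" or "/tmp"
    op   -- a WebHDFS operation name, e.g. LISTSTATUS
    user -- the user passed as the user.name query parameter
    """
    query = urlencode({"op": op, "user.name": user})
    return "{}/{}?{}".format(base.rstrip("/"), path.lstrip("/"), query)


# Example (nothing is sent over the network here):
# webhdfs_request_url("http://hadoop001:50070/webhdfs/v1", "/", "LISTSTATUS", "hadoop")
```

Opening the resulting URL with `curl` or a browser should return a JSON directory listing once `dfs.webhdfs.enabled` is set to `true` and HDFS has been restarted.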

【yarn_clusters】

[[yarn_clusters]]

[[[default]]]

# Enter the host on which you are running the ResourceManager

resourcemanager_host=hadoop001

# The port where the ResourceManager IPC listens on

## resourcemanager_port=8032

# Whether to submit jobs to this cluster

submit_to=True

# Resource Manager logical name (required for HA)

## logical_name=

# Change this if your YARN cluster is Kerberos-secured

## security_enabled=false

# URL of the ResourceManager API

resourcemanager_api_url=http://hadoop001:8088

# URL of the ProxyServer API

proxy_api_url=http://hadoop001:8088

# URL of the HistoryServer API

history_server_api_url=http://hadoop001:19888

【beeswax】

[beeswax]

# Host where HiveServer2 is running.

# If Kerberos security is enabled, use fully-qualified domain name (FQDN).

hive_server_host=hadoop001

# Port where HiveServer2 Thrift server runs on.

hive_server_port=10000 # HiveServer2 port; if you changed it in Hive, change it here as well

# Hive configuration directory, where hive-site.xml is located

hive_conf_dir=/home/hadoop/app/hive/conf # Hive conf directory

【MySQL】

[[[mysql]]]

# Name to show in the UI.

nice_name="My SQL DB"

# For MySQL and PostgreSQL, name is the name of the database.

# For Oracle, Name is instance of the Oracle server. For express edition

# this is 'xe' by default.

name=mysqldb

# Database backend to use. This can be:

# 1. mysql

# 2. postgresql

# 3. oracle

engine=mysql

# IP or hostname of the database to connect to.

host=hadoop001

# Port the database server is listening to. Defaults are:

# 1. MySQL: 3306

# 2. PostgreSQL: 5432

# 3. Oracle Express Edition: 1521

port=3306

# Username to authenticate with when connecting to the database.

user=root

# Password matching the username to authenticate with when

# connecting to the database.

password=123456

【Hadoop configuration changes】

vi $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

vi $HADOOP_HOME/etc/hadoop/core-site.xml

<property>

<name>hadoop.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hue.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>
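
Since a mistyped property name in these XML files is silently ignored, it can help to check the file after editing. The following is a minimal Python 3 sketch, assuming the file has the usual `<configuration>` root element; the helper names `read_properties` and `missing_properties`, and the sample data, are made up for this example.

```python
import xml.etree.ElementTree as ET

# Proxy-user settings listed above for core-site.xml.
EXPECTED_PROXYUSER = {
    "hadoop.proxyuser.hue.hosts": "*",
    "hadoop.proxyuser.hue.groups": "*",
    "hadoop.proxyuser.hadoop.hosts": "*",
    "hadoop.proxyuser.hadoop.groups": "*",
}

# Illustrative input only: a core-site.xml that lacks the hadoop.* entries.
SAMPLE_CORE_SITE = """<configuration>
  <property><name>hadoop.proxyuser.hue.hosts</name><value>*</value></property>
  <property><name>hadoop.proxyuser.hue.groups</name><value>*</value></property>
</configuration>"""


def read_properties(xml_text):
    """Return {name: value} for every <property> element in a *-site.xml."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def missing_properties(xml_text, expected):
    """List the expected property names that are absent or have another value."""
    props = read_properties(xml_text)
    return [name for name, value in expected.items() if props.get(name) != value]
```

To check a real file, read `$HADOOP_HOME/etc/hadoop/core-site.xml` into a string and pass it to `missing_properties`; an empty list means all four entries are present.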

vi $HADOOP_HOME/etc/hadoop/yarn-site.xml

<!-- Enable aggregation of YARN logs onto HDFS -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- Maximum time, in seconds, to keep aggregated logs on HDFS (3 days) -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>259200</value>

</property>
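
The value 259200 is simply 3 days expressed in seconds (3 × 24 × 3600). If you want a different retention period, a small Python 3 sketch like the one below does the conversion; the function name `retain_seconds` is hypothetical.

```python
from datetime import timedelta


def retain_seconds(days):
    """Convert a retention period in days to the seconds value YARN expects."""
    return int(timedelta(days=days).total_seconds())


# retain_seconds(3) gives the 259200 used in yarn-site.xml above.
```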

vi $HADOOP_HOME/etc/hadoop/httpfs-site.xml

<property>

<name>httpfs.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>httpfs.proxyuser.hue.groups</name>

<value>*</value>

</property>

【Hive configuration changes】

vi $HIVE_HOME/conf/hive-site.xml

<property>

<name>hive.metastore.uris</name>

<value>thrift://hadoop001:9083</value>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop001</value>

<description>Bind host on which to run the HiveServer2 Thrift service.</description>

</property>

【Restart HDFS and YARN】

cd $HADOOP_HOME/sbin

[hadoop@hadoop001 sbin]$ stop-all.sh

[hadoop@hadoop001 sbin]$ start-all.sh

【Connect to HiveServer2 with beeline; in "-n hadoop", hadoop is the user that launches beeline】

- [hadoop@hadoop001 ~]$ beeline -u jdbc:hive2://hadoop001:10000/default -n hadoop
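
To make the pieces of the beeline call explicit, here is a small Python 3 sketch that assembles the same command from its parts. The function name `beeline_command` is an assumption for this example; only the `-u` and `-n` options shown above are used.

```python
def beeline_command(host, port, database, user):
    """Assemble the beeline argument list for a HiveServer2 connection."""
    jdbc_url = "jdbc:hive2://{}:{}/{}".format(host, port, database)
    return ["beeline", "-u", jdbc_url, "-n", user]


# beeline_command("hadoop001", 10000, "default", "hadoop") yields the command
# shown above; it could be handed to subprocess.run() on a host with beeline.
```

The host and port must match `hive_server_host` and `hive_server_port` in hue.ini, since Hue connects to the same HiveServer2 instance.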

【Start Hue】

【Run the following commands to initialize the Hue database】

- cd $HUE_HOME/build/env/

- bin/hue syncdb

- bin/hue migrate

【Start the Hive metastore】

- nohup hive --service metastore &

【Start HiveServer2】

- [hadoop@hadoop001 ~]$ nohup hive --service hiveserver2 >~/app/hive-1.1.0-cdh5.7.0/console.log 2>&1 &
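
HiveServer2 can take a while before it accepts connections, and the metastore has to be up before it. One way to avoid starting Hue too early is to poll the ports first. This is a minimal Python 3 sketch; `wait_for_port` is a hypothetical helper, and the ports 9083 and 10000 are taken from the configuration above.

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Return True once a TCP connection to host:port succeeds.

    Retries every `interval` seconds and returns False after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Example calls for this setup:
# wait_for_port("hadoop001", 9083)   # Hive metastore
# wait_for_port("hadoop001", 10000)  # HiveServer2
```

An open port only shows that the process is listening; the beeline connection shown earlier remains the real functional check.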

【Start the Hue server】

- nohup supervisor &

[INFO] Not running as root, skipping privilege drop

starting server with options:

{'daemonize': False,

'host': '0.0.0.0',

'pidfile': None,

'port': 8888,

'server_group': 'hue',

'server_name': 'localhost',

'server_user': 'hue',

'ssl_certificate': None,

'ssl_certificate_chain': None,

'ssl_cipher_list': 'ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA',

'ssl_private_key': None,

'threads': 40,

'workdir': None}

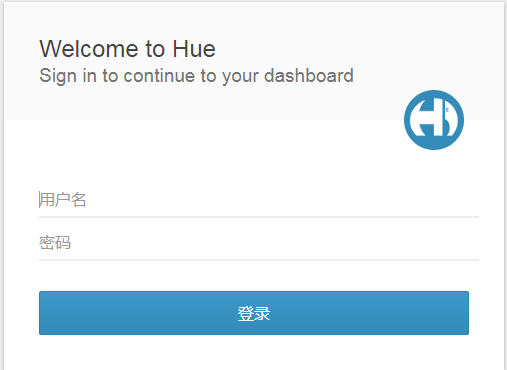

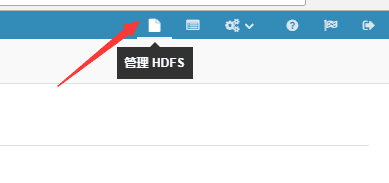

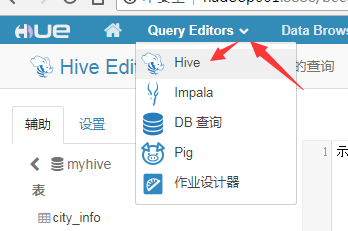

【Web UI】

【Use the web UI to check that Hive and HDFS are available, as shown in the screenshots】

- http://hadoop001:8888
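
Before opening a browser, you can confirm from the command line that the server answers on port 8888. The Python 3 sketch below is one way to do that with the standard library; the helper name `http_status` is an assumption made for this example.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=5.0):
    """Return the HTTP status code for url, or None if nothing answers."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, just not with a success code.
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, unknown host, timeout, and similar failures.
        return None


# Example: http_status("http://hadoop001:8888")
```

Any numeric result means the Hue process is serving requests; `None` suggests the supervisor did not start or the port is blocked.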