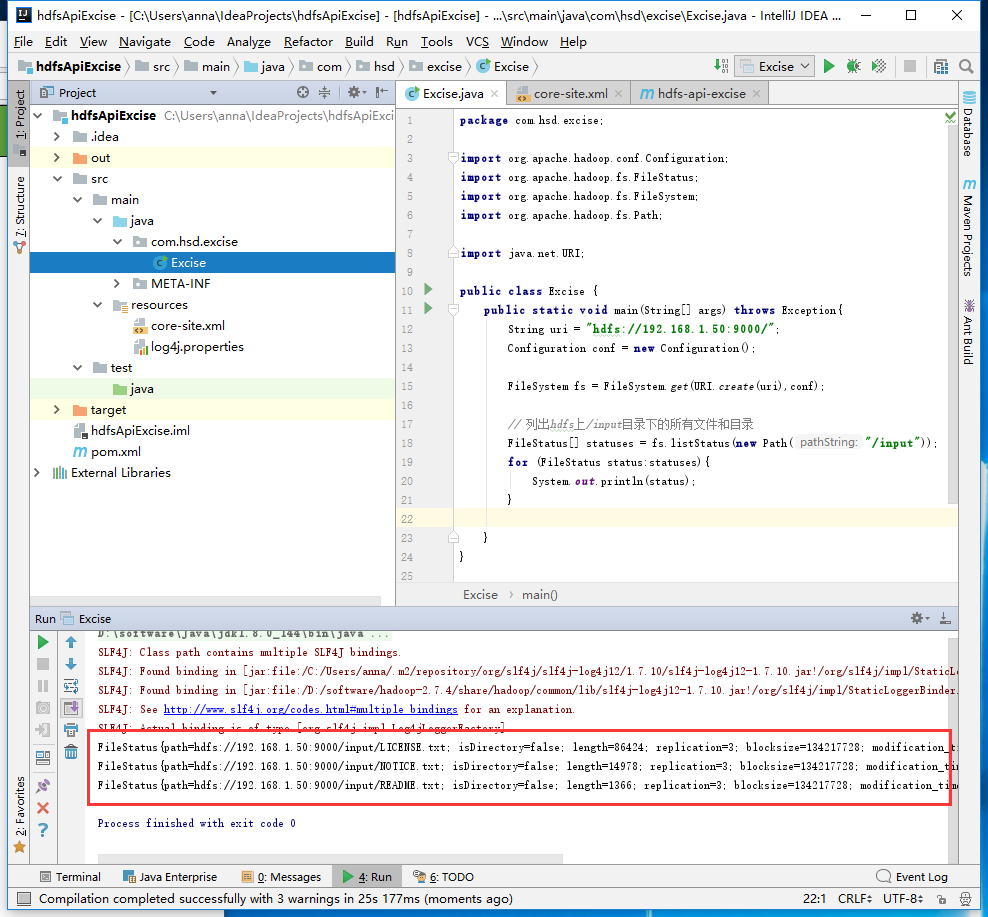

This post sets up an IntelliJ IDEA development environment for remote development, debugging, and packaging from within IDEA.

Environment

- IntelliJ IDEA 2017.2

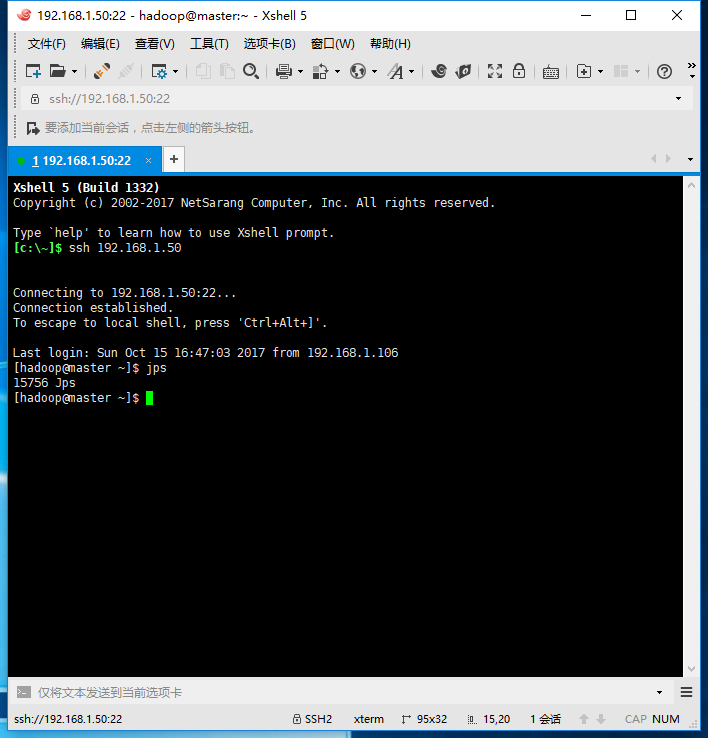

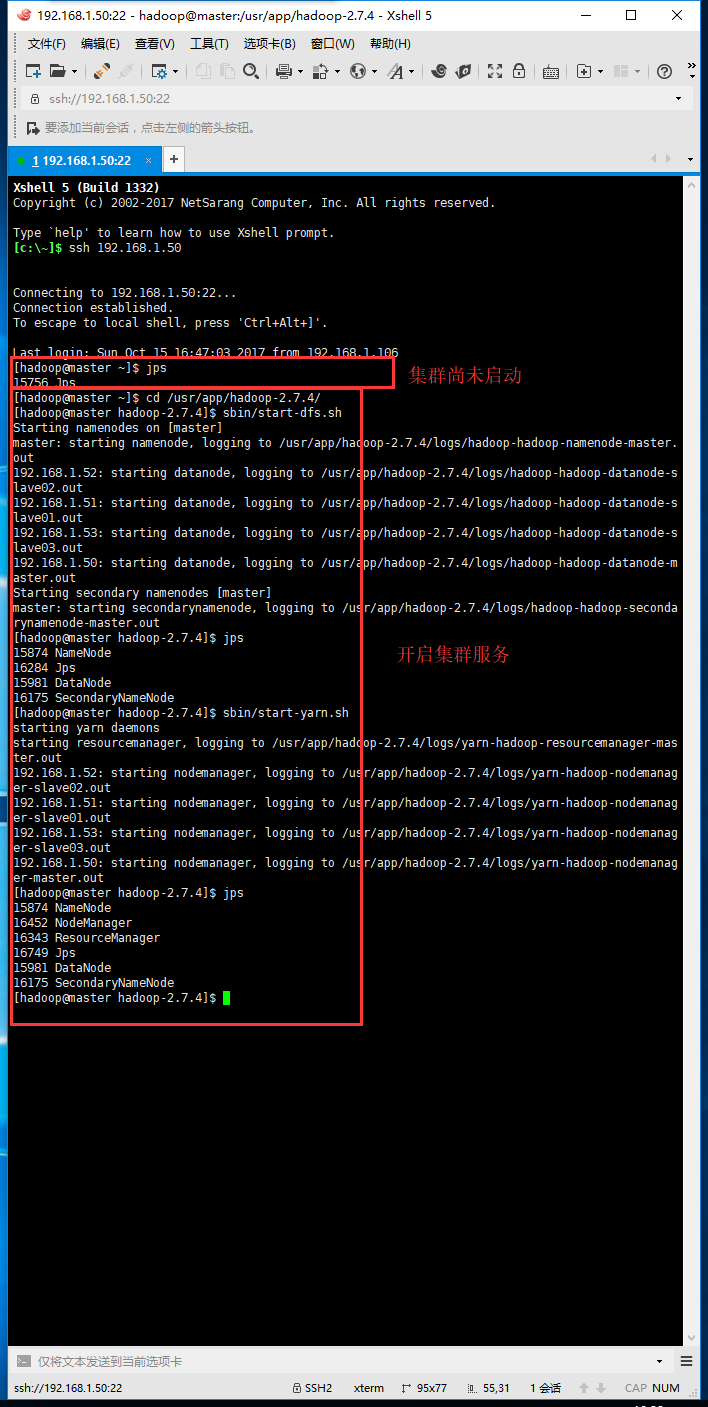

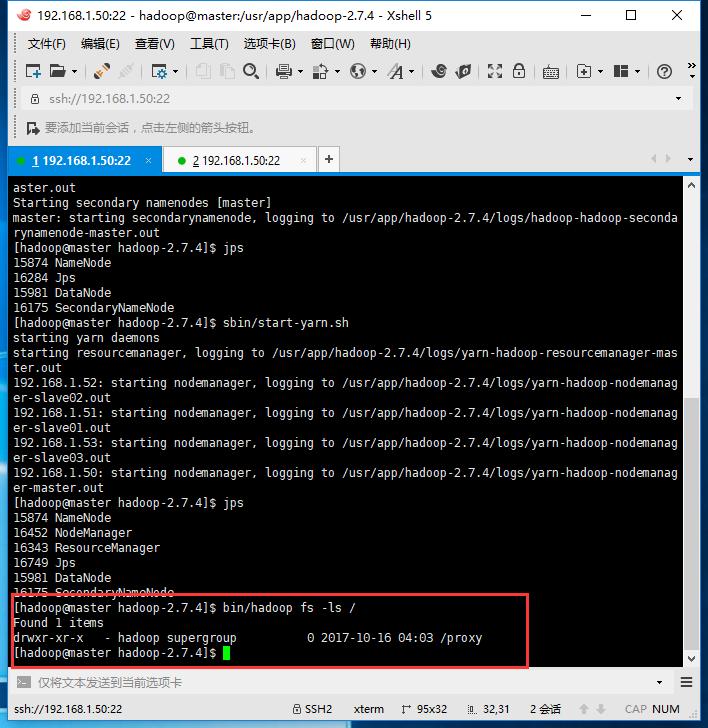

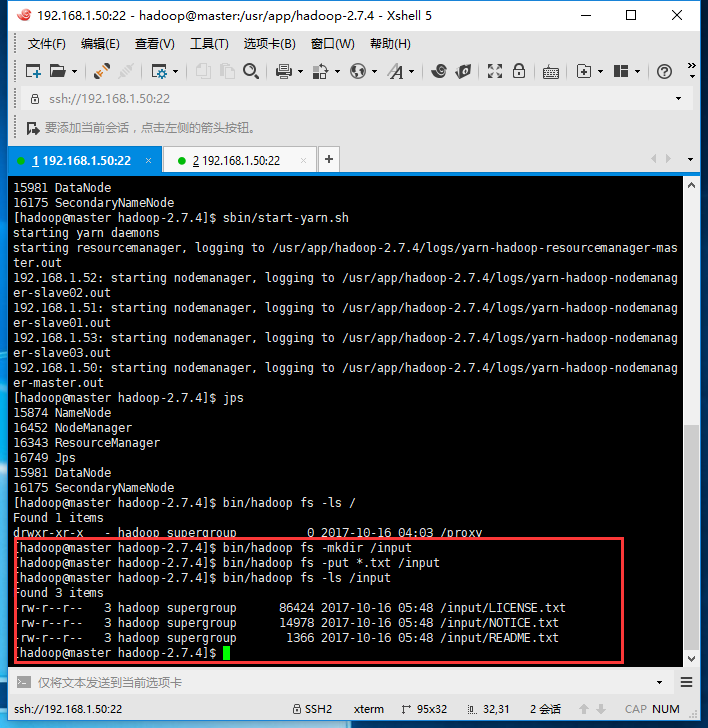

- Hadoop cluster environment

Setup steps: http://www.cnblogs.com/YellowstonePark/p/7750213.html

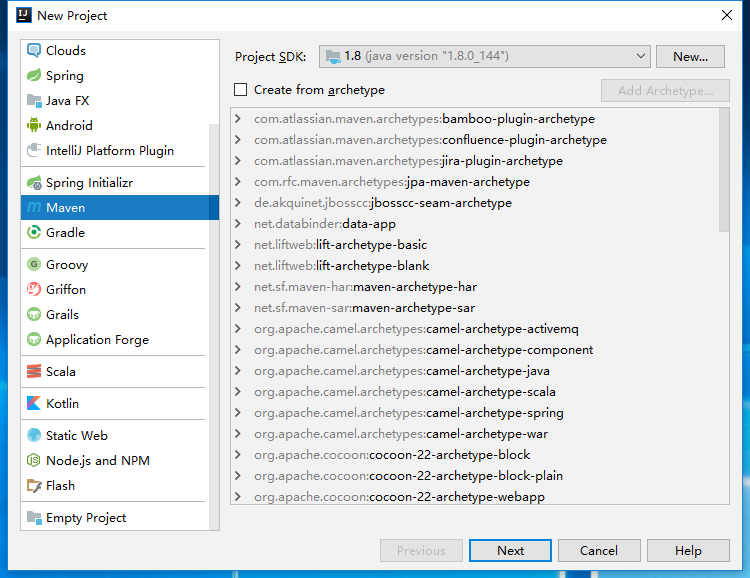

Create a new project

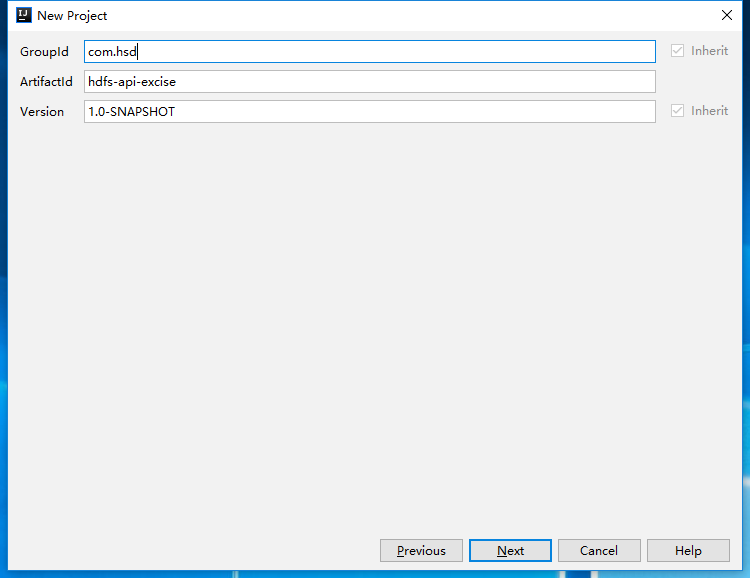

Enter the GroupId and ArtifactId

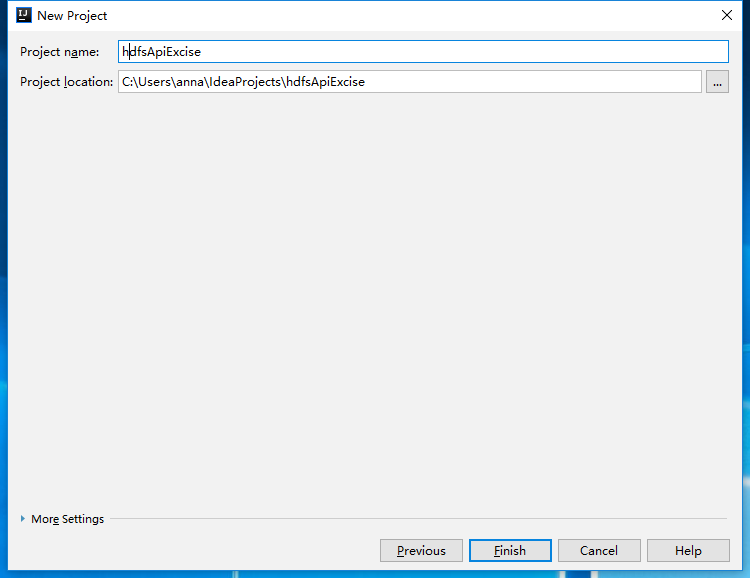

Enter the Project name and Project location

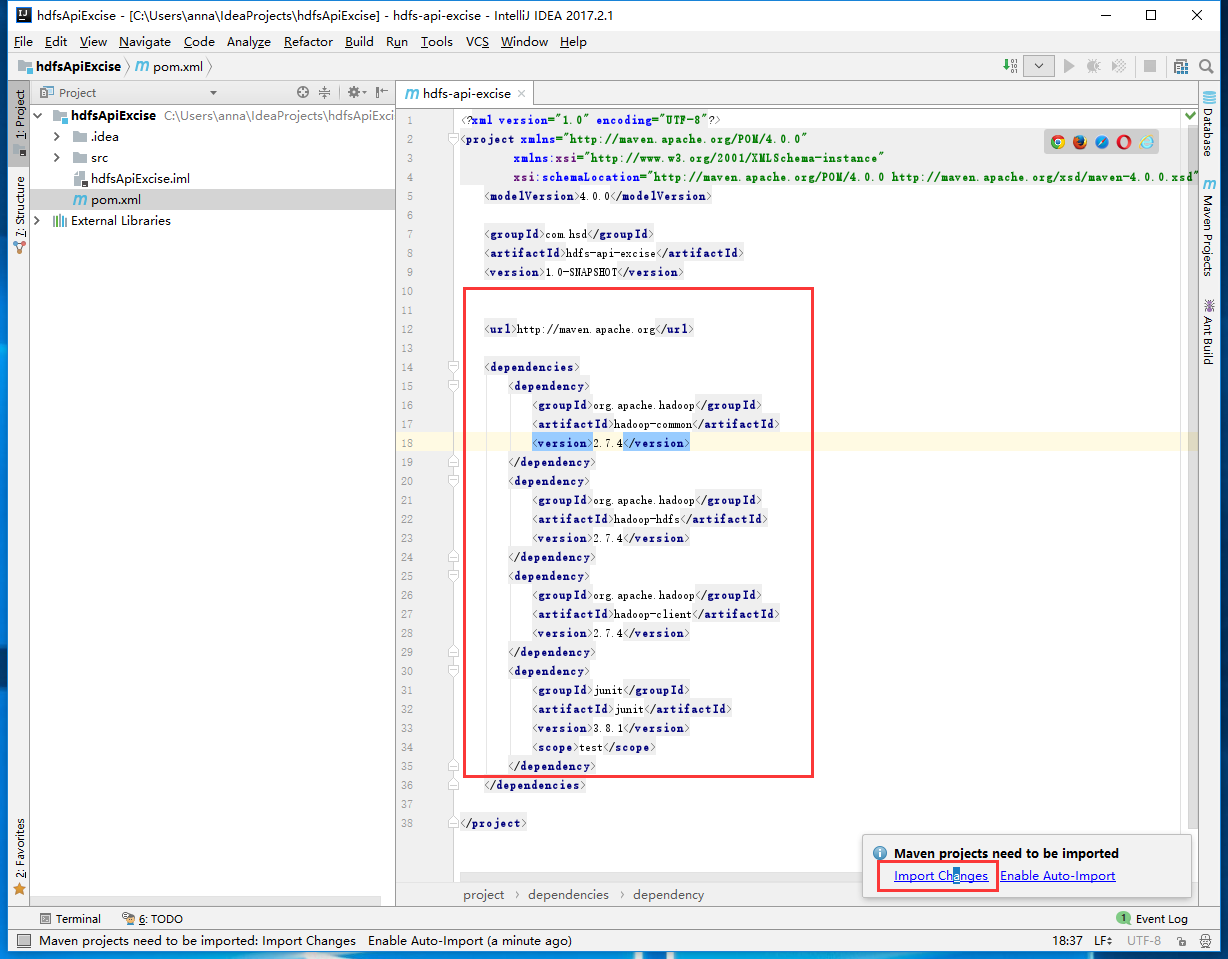

Edit pom.xml and add the dependencies
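As a rough illustration of this step, the fragment below shows what a minimal Hadoop dependency section could look like. It is a sketch under assumptions, not the original listing: `hadoop-client` is the usual client-side artifact, and the version `2.7.4` is only a placeholder that should be replaced with the version the cluster actually runs.

```xml
<properties>
    <!-- Placeholder (assumption): set this to the Hadoop version of your cluster. -->
    <hadoop.version>2.7.4</hadoop.version>
</properties>

<dependencies>
    <!-- Client-side Hadoop libraries (HDFS, YARN, and MapReduce client APIs). -->
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
</dependencies>
```

Keeping the version in a property makes it easier to keep any further Hadoop artifacts on the same version as the cluster.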


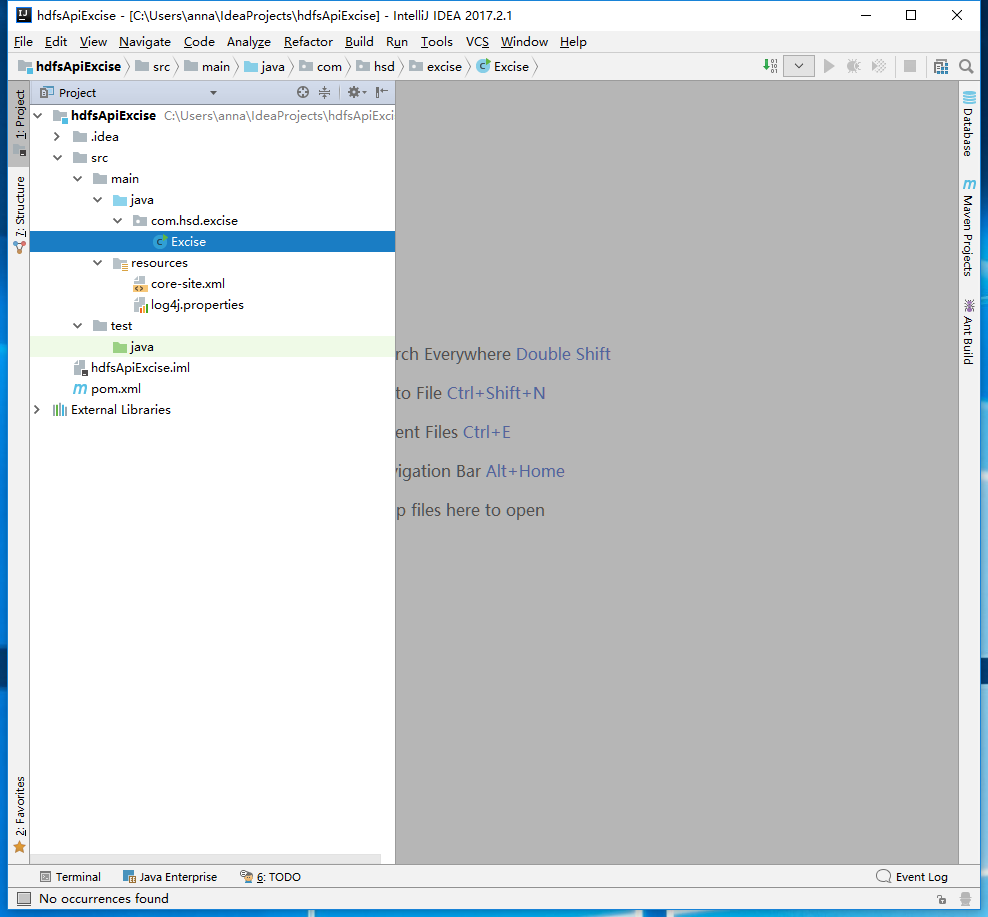

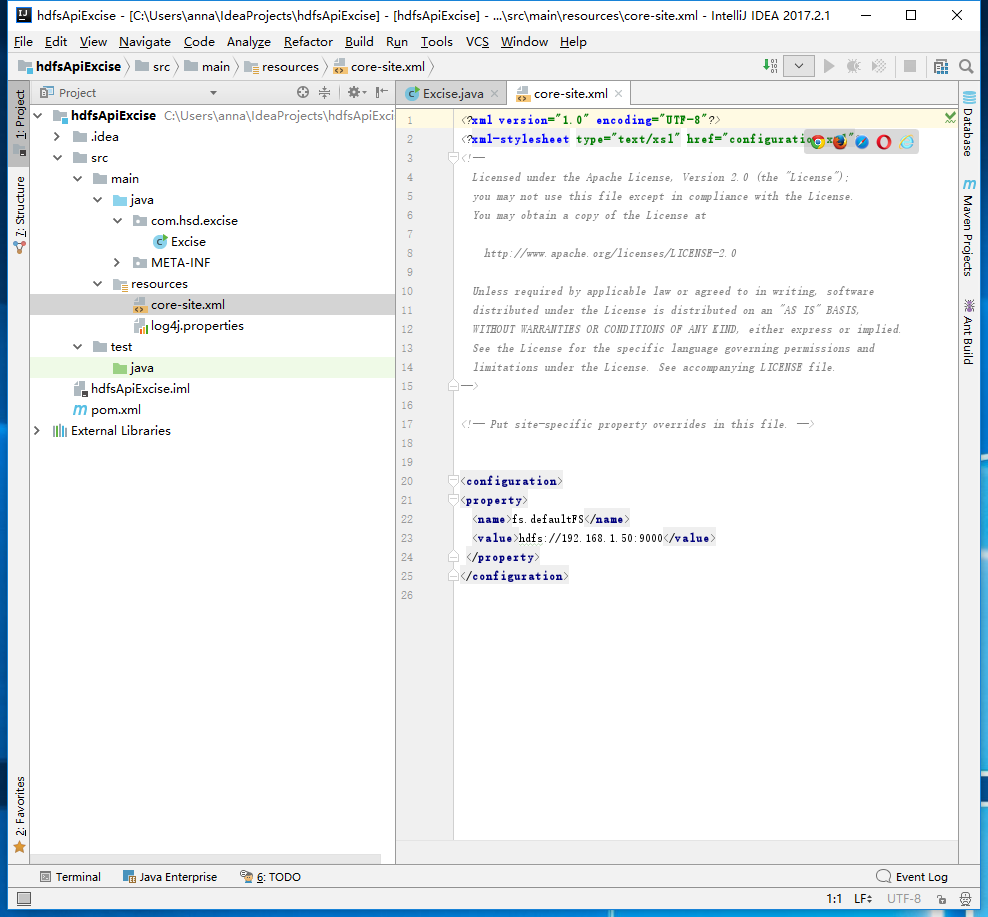

Copy the log4j.properties and core-site.xml files from the Hadoop configuration directory into the project's resources directory
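To confirm that the copied core-site.xml is really visible to the project, a small check like the one below can help. It is a minimal sketch using only the JDK: the class name `CoreSiteCheck` and its methods are hypothetical and not part of the original project, and it assumes the file uses the standard Hadoop `<property><name>…</name><value>…</value></property>` layout with `fs.defaultFS` as the key of interest.

```java
import java.io.InputStream;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper for illustration only; not part of the original project.
final class CoreSiteCheck {

    // Returns the <value> of the <property> whose <name> matches, or null if absent.
    static String readProperty(InputStream in, String name) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(in);
            NodeList props = doc.getElementsByTagName("property");
            for (int i = 0; i < props.getLength(); i++) {
                Element prop = (Element) props.item(i);
                if (name.equals(text(prop, "name"))) {
                    return text(prop, "value");
                }
            }
            return null;
        } catch (Exception e) {
            throw new IllegalStateException("Could not parse core-site.xml", e);
        }
    }

    // Trimmed text of the first descendant element with the given tag, or null.
    private static String text(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        // Files under src/main/resources are placed at the classpath root by Maven.
        try (InputStream in = CoreSiteCheck.class.getClassLoader()
                .getResourceAsStream("core-site.xml")) {
            if (in == null) {
                System.out.println("core-site.xml is not on the classpath");
                return;
            }
            System.out.println("fs.defaultFS = " + readProperty(in, "fs.defaultFS"));
        }
    }
}
```

If the program reports that the file is not on the classpath, the resources directory is probably not marked as a resources root in the module settings.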


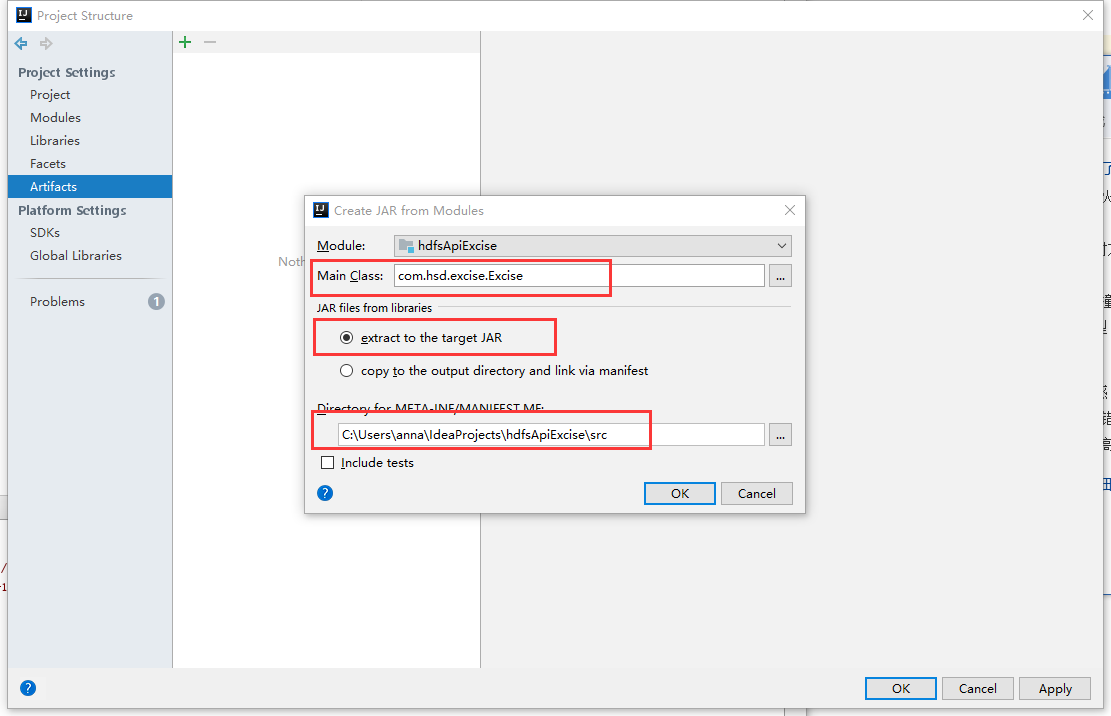

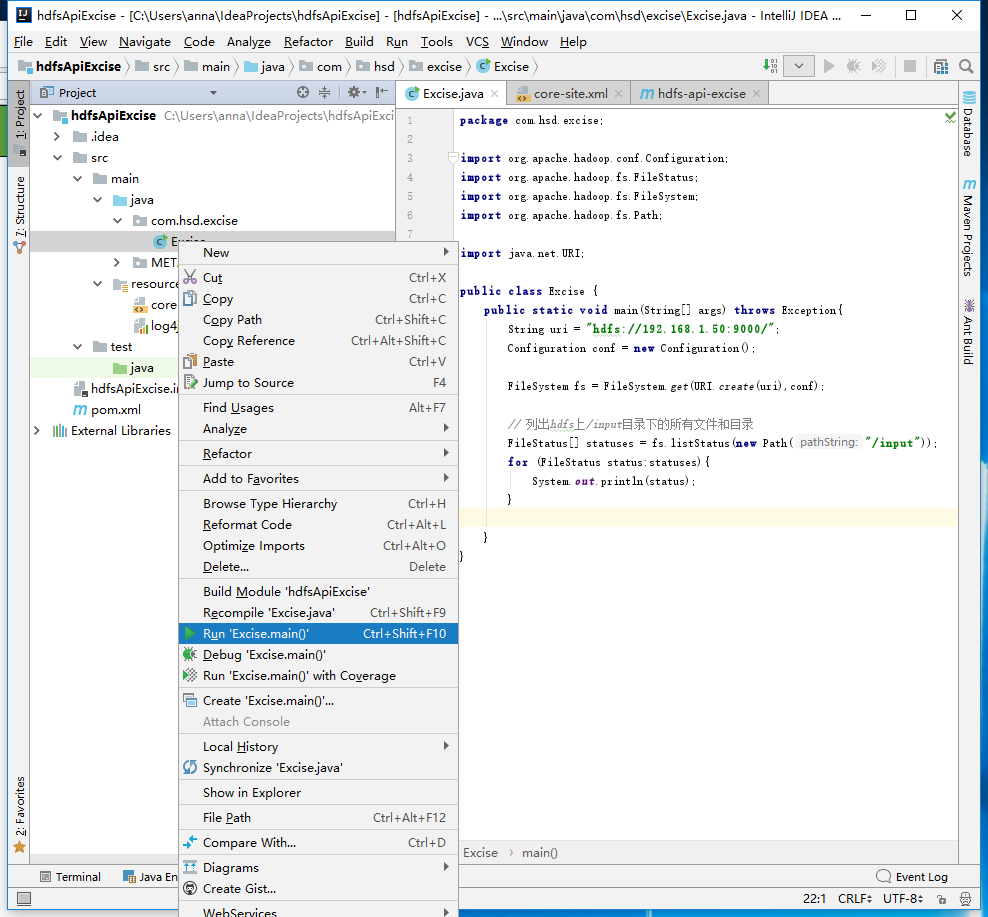

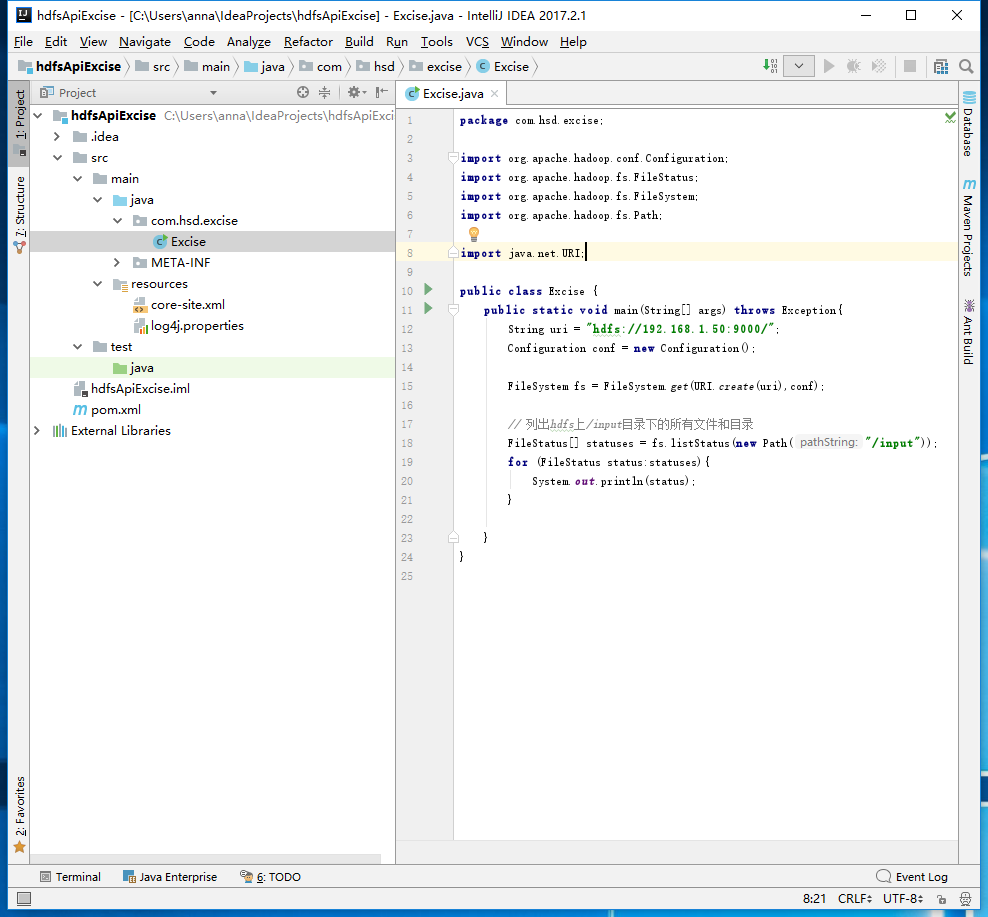

Create the package com.hsd.excise and the Java class file Excise
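A class in this package might start out as a simple smoke test against the cluster. The sketch below is an assumption about what such a class could look like, not the original code: it presumes the Hadoop client libraries are on the classpath, that core-site.xml in resources points `fs.defaultFS` at a reachable NameNode, and that listing the HDFS root directory is an acceptable first test.

```java
package com.hsd.excise;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Excise {

    public static void main(String[] args) throws IOException {
        // new Configuration() loads core-site.xml from the classpath,
        // so fs.defaultFS comes from the file copied into resources.
        Configuration conf = new Configuration();

        // FileSystem is Closeable, so try-with-resources releases the connection.
        try (FileSystem fs = FileSystem.get(conf)) {
            // Listing "/" is an assumed smoke test, not the original program.
            for (FileStatus status : fs.listStatus(new Path("/"))) {
                System.out.println(status.getPath());
            }
        }
    }
}
```

Running it from IDEA should print one line per entry in the HDFS root. A connection error usually points at the `fs.defaultFS` value or at network access to the cluster.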


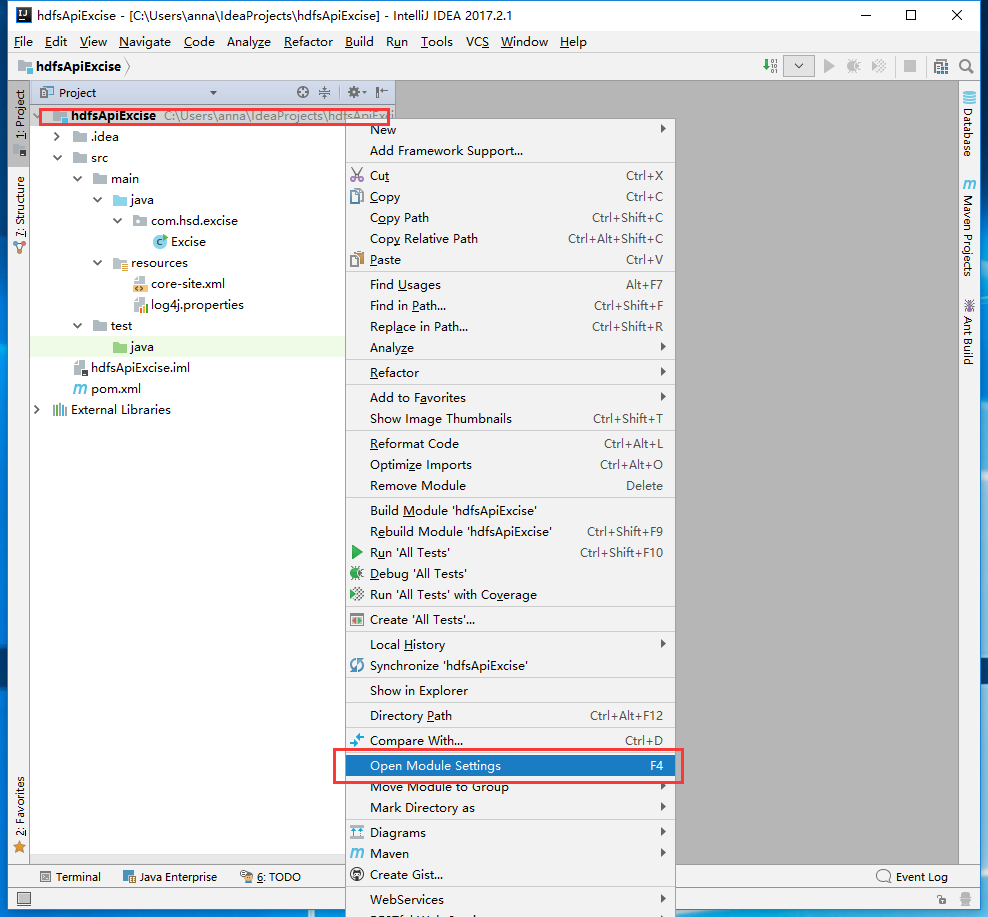

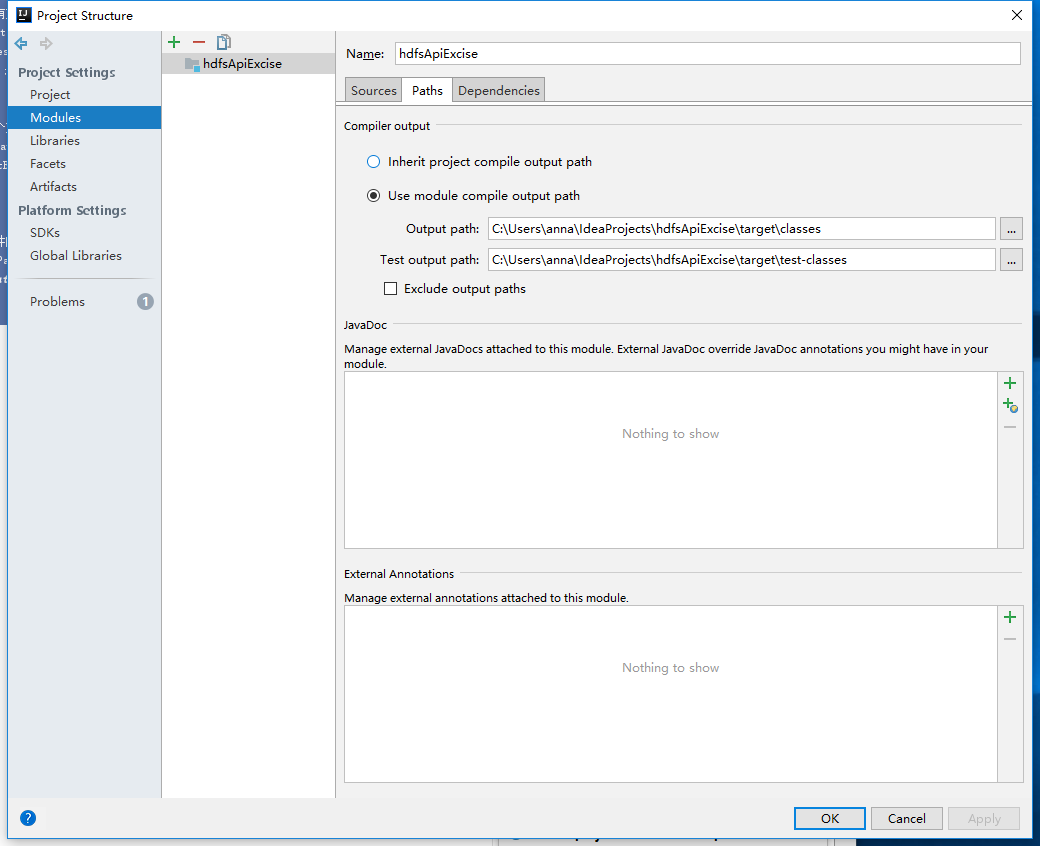

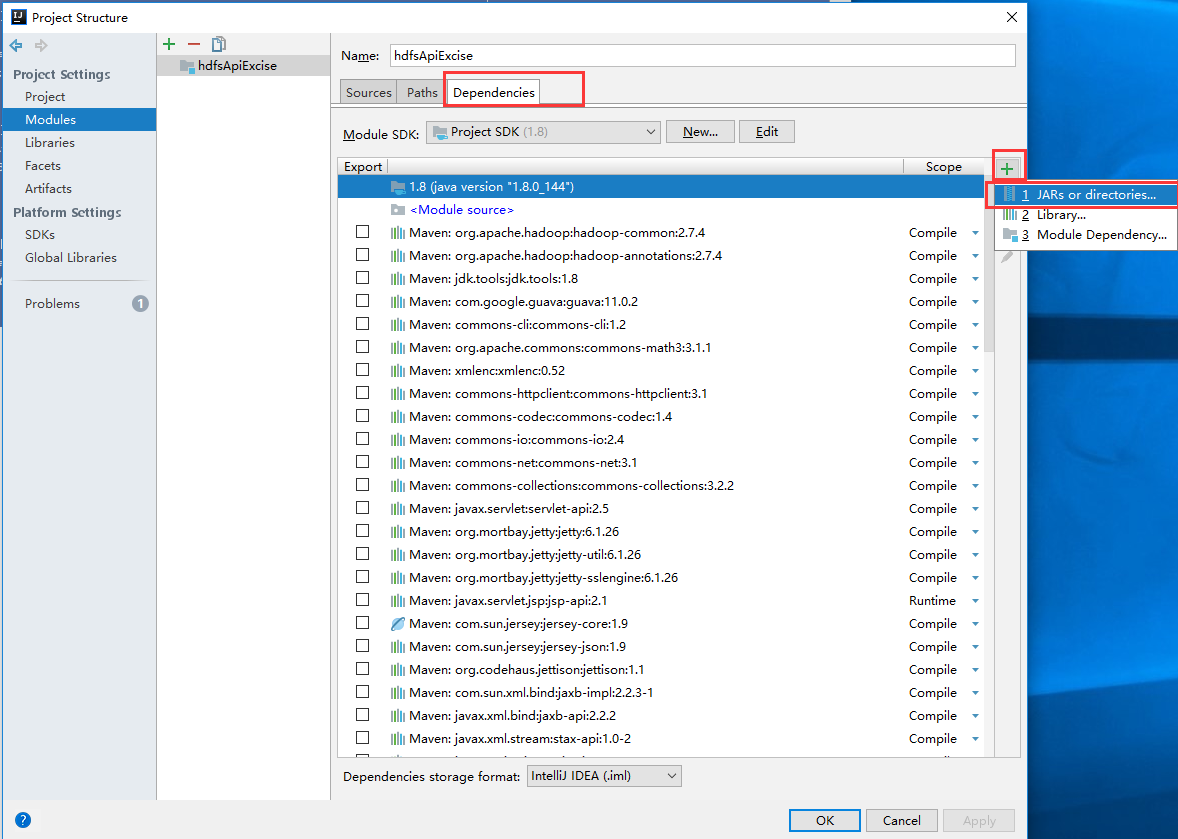

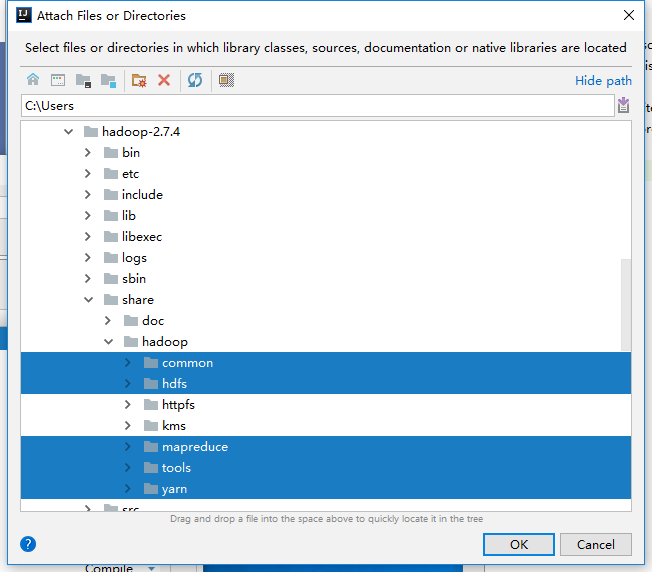

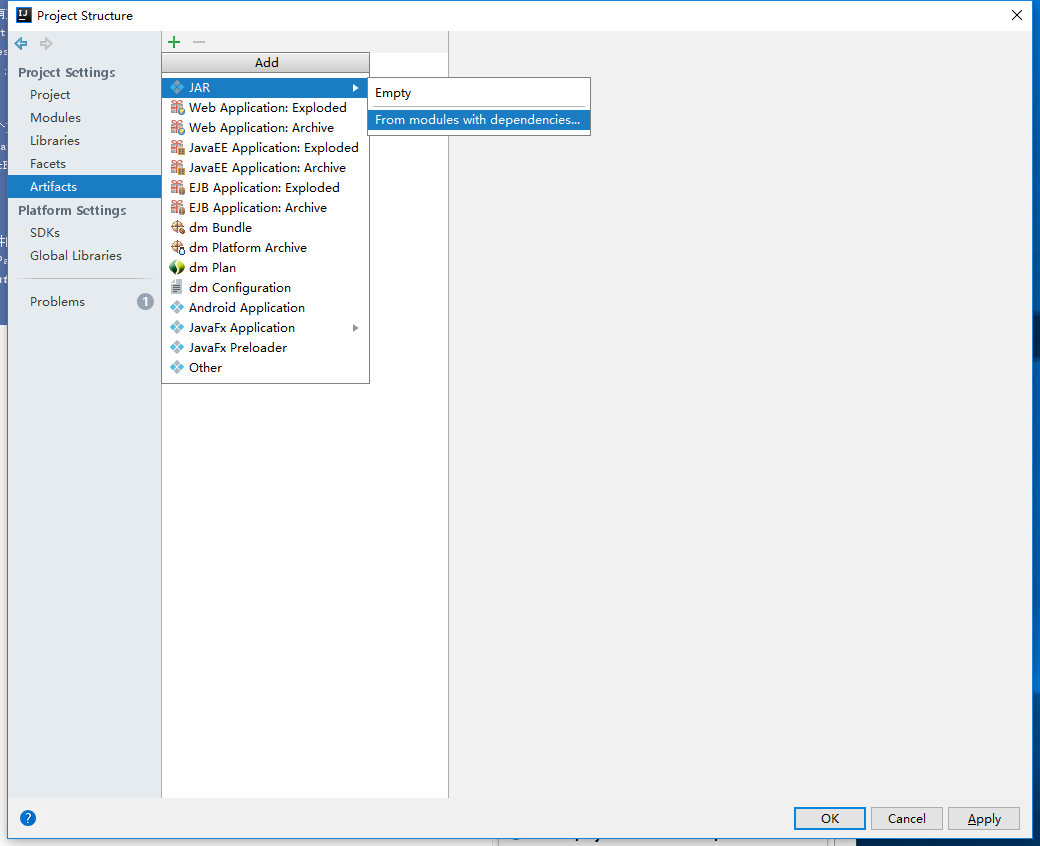

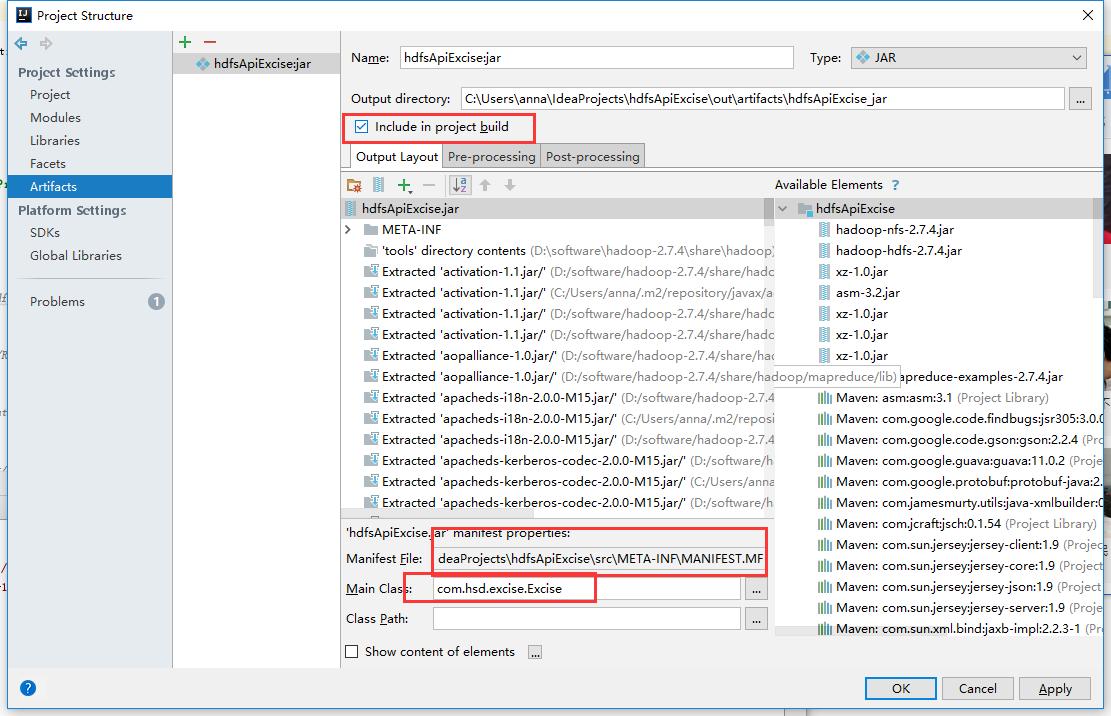

Right-click the project name and choose Open Module Settings