Environment:

CentOS 7.6 running in VMware, with SELinux and firewalld disabled on every node.

1. Install and configure Docker

[root@k8smaster ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

[root@k8smaster ~]# docker version

Client:

Version: 1.13.1

API version: 1.26

Package version: docker-1.13.1-94.gitb2f74b2.el7.centos.x86_64

Go version: go1.10.3

Git commit: b2f74b2/1.13.1

Built: Tue Mar 12 10:27:24 2019

OS/Arch: linux/amd64

Server:

Version: 1.13.1

API version: 1.26 (minimum version 1.12)

Package version: docker-1.13.1-94.gitb2f74b2.el7.centos.x86_64

Go version: go1.10.3

Git commit: b2f74b2/1.13.1

Built: Tue Mar 12 10:27:24 2019

OS/Arch: linux/amd64

Experimental: false

Configure a registry mirror inside China

[root@k8smaster ~]# cat /etc/docker/daemon.json

{
  "registry-mirrors": ["https://registry.docker-cn.com"]
}

2. Download the required Docker images. By default kubeadm pulls them from Google's registry, which cannot be reached from mainland China.

[root@k8smaster ~]# cat getk8s_images.sh

#!/bin/bash
# Pull every image from the Aliyun mirror, then retag it under k8s.gcr.io,
# the name kubeadm looks for locally.
images=(
  kube-apiserver:v1.14.0
  kube-controller-manager:v1.14.0
  kube-scheduler:v1.14.0
  kube-proxy:v1.14.0
  pause:3.1
  etcd:3.3.10
  coredns:1.3.1
  kubernetes-dashboard-amd64:v1.10.0
  heapster-amd64:v1.5.4
  heapster-grafana-amd64:v5.0.4
  heapster-influxdb-amd64:v1.5.2
  pause-amd64:3.1
)
for imageName in "${images[@]}"; do
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
  docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
done

[root@k8smaster ~]# bash -x getk8s_images.sh
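
Before pulling a dozen images it can help to review the exact commands the loop will run. The following is a minimal dry-run sketch, not part of the original script: print_image_commands is a hypothetical helper name, and it only echoes the docker commands instead of executing them.

```shell
#!/bin/sh
# Dry run: print the docker pull/tag commands for each image instead of running them.
# print_image_commands is a hypothetical helper, not part of docker or kubeadm.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
TARGET=k8s.gcr.io

print_image_commands() {
  # Every argument is an image reference in name:tag form.
  for image in "$@"; do
    echo "docker pull $MIRROR/$image"
    echo "docker tag $MIRROR/$image $TARGET/$image"
  done
}
```

For example, print_image_commands kube-apiserver:v1.14.0 pause:3.1 prints four lines. The list of images a given kubeadm release expects can be checked with kubeadm config images list --kubernetes-version=v1.14.0.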

3. Configure the yum repository and install the kube tools

[root@k8smaster ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

[root@k8smaster ~]# yum -y install kubelet kubeadm kubectl --disableexcludes=kubernetes

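Start the kubelet service and enable it at boot (for example, systemctl enable kubelet && systemctl start kubelet).

4. Disable swap

Edit /etc/fstab with vim and comment out the swap line, then run swapoff -a.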
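
The fstab edit can also be scripted. This is a rough sketch under stated assumptions: comment_swap is a hypothetical helper name, the file is a standard whitespace-separated fstab, and GNU sed (as shipped with CentOS) is available. It leaves a backup next to the file.

```shell
#!/bin/sh
# comment_swap FILE: comment out every active entry that has "swap" as a
# whitespace-delimited field. Lines that are already comments are left alone.
# comment_swap is a hypothetical helper; a backup is written to FILE.bak.
comment_swap() {
  sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}
```

Typical use would be comment_swap /etc/fstab followed by swapoff -a; running it a second time changes nothing because commented lines are skipped.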

5. Configure bridge networking

Run echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables so that bridged traffic is passed through iptables.
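
A value written to /proc is lost on reboot. One way to keep it, sketched here with assumptions, is a sysctl drop-in file: write_bridge_sysctl is a hypothetical helper name and the file name k8s.conf is an arbitrary choice.

```shell
#!/bin/sh
# write_bridge_sysctl FILE: write the bridge setting to a sysctl configuration file.
# write_bridge_sysctl is a hypothetical helper; FILE would normally live in
# /etc/sysctl.d/ (for example /etc/sysctl.d/k8s.conf, a name assumed here).
write_bridge_sysctl() {
  cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

After write_bridge_sysctl /etc/sysctl.d/k8s.conf, running sysctl --system applies the file. If /proc/sys/net/bridge does not exist, the br_netfilter kernel module probably has to be loaded first (modprobe br_netfilter).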

6. Initialize the Kubernetes master

[root@k8smaster ~]# kubeadm init --kubernetes-version=v1.14.0 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.238.132

The lengthy output includes the commands that must then be run by hand:

[root@k8smaster ~]# mkdir -p $HOME/.kube

[root@k8smaster ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8smaster ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the version

[root@k8smaster ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.0", GitCommit:"641856db18352033a0d96dbc99153fa3b27298e5", GitTreeState:"clean", BuildDate:"2019-03-25T15:53:57Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.0", GitCommit:"641856db18352033a0d96dbc99153fa3b27298e5", GitTreeState:"clean", BuildDate:"2019-03-25T15:45:25Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

7. Install the add-ons Kubernetes needs from configuration files

[root@k8smaster ~]# cd k8s/

[root@k8smaster k8s]# ls

admin-user-role-binding.yaml admin-user.yaml kube-flannel.yml kubernetes-dashboard.yaml

[root@k8smaster k8s]# cat admin-user.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system

[root@k8smaster k8s]# cat kube-flannel.yml

---
apiVersion: extensions/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: ppc64le
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: s390x
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

[root@k8smaster k8s]# cat admin-user-role-binding.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

[root@k8smaster k8s]# cat kubernetes-dashboard.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Configuration to deploy release version of the Dashboard UI compatible with
# Kubernetes 1.8.
#
# Example usage: kubectl create -f <this_file>

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: reg.qiniu.com/k8s/kubernetes-dashboard-amd64:v1.8.3
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

Create the admin user

[root@k8smaster k8s]# kubectl create -f admin-user.yaml

[root@k8smaster k8s]# kubectl create -f admin-user-role-binding.yaml

Install the flannel add-on

First download kube-flannel.yml; its content matches the listing above.

[root@k8smaster k8s]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@k8smaster k8s]# kubectl apply -f kube-flannel.yml

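The dashboard can be installed either from the configuration file downloaded earlier or directly from the network copy; the content is the same.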

[root@k8smaster k8s]# kubectl apply -f http://mirror.faasx.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

After installation, check the pods and services

[root@k8smaster ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-fb8b8dccf-2g6zg 1/1 Running 0 53m

kube-system coredns-fb8b8dccf-whzf9 1/1 Running 0 53m

kube-system etcd-k8smaster 1/1 Running 0 52m

kube-system kube-apiserver-k8smaster 1/1 Running 0 52m

kube-system kube-controller-manager-k8smaster 1/1 Running 0 52m

kube-system kube-flannel-ds-amd64-fp48s 1/1 Running 0 49m

kube-system kube-proxy-9zcfj 1/1 Running 0 53m

kube-system kube-scheduler-k8smaster 1/1 Running 0 52m

kube-system kubernetes-dashboard-576695b89b-ftqdr 1/1 Running 0 26s

kube-system kubernetes-dashboard-head-6745d6bb9c-z44qh 1/1 Running 0 45m

[root@k8smaster ~]# kubectl get services --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 17h

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 17h

kube-system kubernetes-dashboard NodePort 10.101.226.92 <none> 443:31534/TCP 16h

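At this point the web page can only be reached from inside the VMware virtual machine. There are three ways to reach it from other machines:

Method 1: use the proxy feature. The listen address and the hosts allowed to connect must be given explicitly, as shown below.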

[root@localhost k8s]# kubectl proxy --address='192.168.238.132' --accept-hosts='^*$'

Starting to serve on 192.168.238.132:8001

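However, only the login page loads; clicking the sign-in button does nothing, so this method fails.

Method 2: edit the dashboard Service.

[root@k8smaster ~]# kubectl -n kube-system edit service kubernetes-dashboard

On the third line from the end, change type: ClusterIP to type: NodePort.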

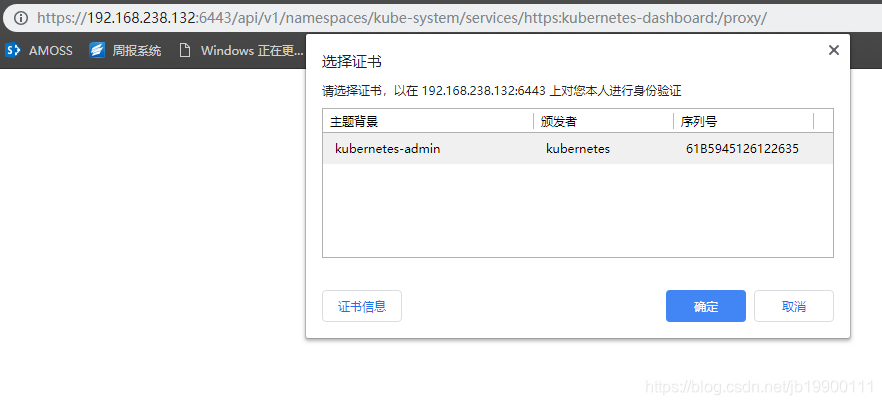

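However, Chrome does not accept the login, as shown in the figure.

This method fails as well.

Method 3: log in through the API server. First check which port the API server listens on.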

[root@k8smaster ~]# netstat -anlp|grep api|grep LISTEN

tcp6 0 0 :::6443 :::* LISTEN 10442/kube-apiserve

Generate the certificate

[root@k8smaster ~]# grep 'client-certificate-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.crt

[root@k8smaster ~]# grep 'client-key-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.key

[root@k8smaster ~]# openssl pkcs12 -export -clcerts -inkey kubecfg.key -in kubecfg.crt -out kubecfg.p12 -name "kubernetes-client"

Enter Export Password:

Verifying - Enter Export Password:
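
The two grep pipelines differ only in the key they look up, so they can share one helper. This is a small sketch, assuming the kubeconfig keeps each key and its base64 value on a single line as kubeadm writes it; extract_kubeconfig_field is a hypothetical name.

```shell
#!/bin/sh
# extract_kubeconfig_field FILE KEY: print the decoded value of the first
# "KEY: <base64>" line in a kubeconfig file.
# extract_kubeconfig_field is a hypothetical helper, not a kubectl feature.
extract_kubeconfig_field() {
  awk -v key="$2:" '$1 == key { print $2; exit }' "$1" | base64 -d
}
```

For example, extract_kubeconfig_field ~/.kube/config client-certificate-data > kubecfg.crt writes the certificate, and the same call with client-key-data writes the key. Redirecting with > overwrites the file, so rerunning the command cannot append a second copy.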

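Open Chrome, import the file kubecfg.p12 (Settings > Privacy > Manage certificates), restart Chrome, and visit https://:/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy (the host and port are missing from the URL as written; they are the master's address and the API server port found above).

Choose token authentication. The token can be obtained with the following command: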

[root@k8smaster k8s]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-mg5pd

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: dd283570-5137-11e9-ac60-000c2994187a

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLW1nNXBkIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkZDI4MzU3MC01MTM3LTExZTktYWM2MC0wMDBjMjk5NDE4N2EiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.hhBZcdEsjB3Q7GYexwi-W61lLGnQGiIN0rfqs_3v9tZQ7X25KJkrgI16nCVAVoh0H1qPTZDse2o-0KprmoDwJFALFYm9ogjc3TYLUqzIPqh7yUhgq6qLvs-kCI_g_uEohM5J2eeKv4PXb5imLfrbIcEgx5A3OwMvGoo4a4G8NT1pEHZlHvKFAhXoWdu-7dN9RNFljjA-QCwM9z63kekh-eVH3cDCzvQlOTaNrG9g82QoeJy4FrSkiygmOMkZb51RQxKi7ZTGuqWHeJGKsScIEC50ag5rij6SvtDJVdqIZ19XzJe9-RacQs-e1h0AjtWtqlblt-Ex8GvRLnaRaFieVw
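
To copy only the token rather than the whole description, the output can be filtered. A minimal sketch, assuming the describe output keeps the "token:" label shown above; token_from_describe is a hypothetical helper name.

```shell
#!/bin/sh
# token_from_describe: read `kubectl describe secret` output on stdin and
# print only the value that follows the "token:" label.
# token_from_describe is a hypothetical helper, not a kubectl subcommand.
token_from_describe() {
  awk '$1 == "token:" { print $2; exit }'
}
```

It would be used by piping the describe command above into token_from_describe.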

Login now succeeds.

Next, install the calico add-on

[root@k8smaster ~]# kubectl apply -f http://mirror.faasx.com/kubernetes/installation/hosted/kubeadm/1.7/calico.yaml

configmap/calico-config created

daemonset.extensions/calico-etcd created

service/calico-etcd created

daemonset.extensions/calico-node created

deployment.extensions/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created

serviceaccount/calico-cni-plugin created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

[root@k8smaster ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-etcd-nvqqv 1/1 Running 0 8s

kube-system calico-kube-controllers-b76649dc9-598k9 0/1 ContainerCreating 0 8s

kube-system calico-node-sbgrx 0/2 ContainerCreating 0 7s

kube-system coredns-fb8b8dccf-2g6zg 1/1 Running 1 17h

kube-system coredns-fb8b8dccf-whzf9 1/1 Running 1 17h

kube-system etcd-k8smaster 1/1 Running 1 17h

kube-system kube-apiserver-k8smaster 1/1 Running 1 17h

kube-system kube-controller-manager-k8smaster 1/1 Running 1 17h

kube-system kube-flannel-ds-amd64-fp48s 1/1 Running 1 17h

kube-system kube-proxy-9zcfj 1/1 Running 1 17h

kube-system kube-scheduler-k8smaster 1/1 Running 1 17h

kube-system kubernetes-dashboard-576695b89b-ftqdr 1/1 Running 1 16h

kube-system kubernetes-dashboard-head-6745d6bb9c-z44qh 1/1 Running 1 17h

After a wait, everything finally came up

[root@k8smaster ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-etcd-nvqqv 1/1 Running 0 49s

kube-system calico-kube-controllers-b76649dc9-598k9 1/1 Running 0 49s

kube-system calico-node-sbgrx 2/2 Running 0 48s

kube-system coredns-fb8b8dccf-2g6zg 1/1 Running 1 17h

kube-system coredns-fb8b8dccf-whzf9 1/1 Running 1 17h

kube-system etcd-k8smaster 1/1 Running 1 17h

kube-system kube-apiserver-k8smaster 1/1 Running 1 17h

kube-system kube-controller-manager-k8smaster 1/1 Running 1 17h

kube-system kube-flannel-ds-amd64-fp48s 1/1 Running 1 17h

kube-system kube-proxy-9zcfj 1/1 Running 1 17h

kube-system kube-scheduler-k8smaster 1/1 Running 1 17h

kube-system kubernetes-dashboard-576695b89b-ftqdr 1/1 Running 1 16h

kube-system kubernetes-dashboard-head-6745d6bb9c-z44qh 1/1 Running 1 17h

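8. Configure the k8snode node and add it to the cluster

On the node, in order: install Docker, disable swap, run echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables, and install kubeadm, kubectl and the other tools.

On the master, list the existing tokens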

[root@k8smaster kubernetes]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

zyd0ba.9qv7oqba31fgwlz6 5h 2019-03-29T03:59:05-04:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

Compute the SHA-256 hash of the current CA certificate's public key

[root@k8smaster kubernetes]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

04500371c4b79afa4102edec9952b26780772586c656b374fd3466f3f993e916

Join the node with the token and the SHA-256 value; run this on the node

[root@k8snode ~]# kubeadm join 192.168.238.132:6443 --token zyd0ba.9qv7oqba31fgwlz6 --discovery-token-ca-cert-hash sha256:04500371c4b79afa4102edec9952b26780772586c656b374fd3466f3f993e916

[preflight] Running pre-flight checks

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
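
Assembling the join command by hand invites copy-and-paste mistakes. The sketch below checks the two values before printing the command; build_join_command is a hypothetical helper name, and the patterns assume the usual bootstrap token shape (six characters, a dot, sixteen characters) and a 64-digit hex hash.

```shell
#!/bin/sh
# build_join_command ADDRESS TOKEN HASH: validate the token and CA hash
# formats, then print the kubeadm join command (it does not run it).
# build_join_command is a hypothetical helper, not part of kubeadm.
build_join_command() {
  echo "$2" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || { echo "bad token format" >&2; return 1; }
  echo "$3" | grep -Eq '^[0-9a-f]{64}$' || { echo "bad CA hash format" >&2; return 1; }
  echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash sha256:$3"
}
```

Bootstrap tokens expire (the list above shows a remaining TTL of 5h). If none is valid any more, kubeadm token create --print-join-command on the master creates a new token and prints a complete join command.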

9. Troubleshooting

On the master, the node's status shows as NotReady.
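
With more than a few nodes, the unhealthy ones can be picked out of the kubectl get nodes listing. A minimal sketch, assuming the default column layout with NAME first and STATUS second; not_ready_nodes is a hypothetical helper name.

```shell
#!/bin/sh
# not_ready_nodes: read `kubectl get nodes` output on stdin, skip the header
# line and print the name of every node whose STATUS is not exactly "Ready".
# A combined status such as "Ready,SchedulingDisabled" is therefore listed too.
# not_ready_nodes is a hypothetical helper, not a kubectl subcommand.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

On the master it would be used as kubectl get nodes | not_ready_nodes.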

Run on the node

[root@k8snode ~]# journalctl -u kubelet |tail

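Error 1:

"/system.slice/docker.service": unknown container "/system.slice/docker.service"

Fix:

Edit the file 10-kubeadm.conf

[root@k8snode ~]# vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

Append " --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice " to the kubelet arguments. The original instructions name the KUBELET_CGROUP_ARGS variable, but the drop-in shown below has no such variable, so here the flags are appended to KUBELET_KUBECONFIG_ARGS. Afterwards run systemctl daemon-reload and restart kubelet so that the change takes effect.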

[root@k8snode ~]# cat /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

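Error 2:

Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

Fix:

Copy the /etc/cni directory from the master into /etc/ on the node with scp.

In another run of this experiment, however, this step was not needed: after a short wait the node generated the /etc/cni directory by itself, with the same content as on the master.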
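
Whether the node already has its CNI configuration can be checked before copying anything. A rough sketch, assuming the configuration lands in /etc/cni/net.d as a .conf or .conflist file (flannel writes 10-flannel.conflist there); has_cni_config is a hypothetical helper name.

```shell
#!/bin/sh
# has_cni_config DIR: succeed if DIR contains at least one *.conf or
# *.conflist file, fail otherwise.
# has_cni_config is a hypothetical helper, not part of kubelet or flannel.
has_cni_config() {
  for f in "$1"/*.conf "$1"/*.conflist; do
    [ -f "$f" ] && return 0
  done
  return 1
}
```

For example, has_cni_config /etc/cni/net.d || echo "CNI config not present yet" shows whether it is worth waiting a little longer before falling back to scp.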

References

https://www.cnblogs.com/RainingNight/p/using-kubeadm-to-create-a-cluster.html

https://www.cnblogs.com/RainingNight/p/deploying-k8s-dashboard-ui.html

https://juejin.im/post/5b8a4536e51d4538c545645c#heading-7