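Introduction: An earlier post gave a brief overview of Ceph, but the topic is large and that overview stayed general. In this post I use ceph-deploy to deploy a Ceph cluster and carry out some operations and management tasks, to build a deeper understanding of how Ceph works and what happens at each step.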

1. Environment Preparation

This test uses virtual machines with a minimal install of CentOS 7.6 (CentOS Linux release 7.6.1810 (Core)).

Host plan:

| Node | Role | IP | CPU | Memory |
|---|---|---|---|---|
| ceph1 | ceph-deploy (deployment and management node) | 172.25.254.130 | 2 cores | 4 GB |
| ceph2 | Monitor, OSD | 172.25.254.131 | 2 cores | 4 GB |
| ceph3 | OSD | 172.25.254.132 | 2 cores | 4 GB |
| ceph4 | OSD | 172.25.254.133 | 2 cores | 4 GB |

Network plan:

192.168.2.0/24: cluster network (OSD replication and heartbeat traffic)

172.25.254.0/24: public network (client access, including iSCSI mapping)

Planned functionality:

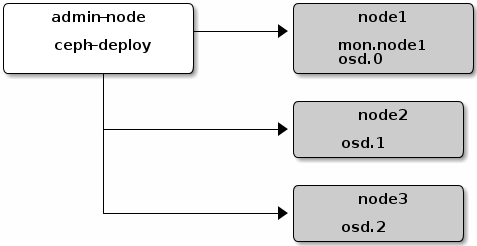

- Ceph cluster: monitor, manager, two OSDs

- iSCSI: client

- Monitoring: dashboard

Host preparation (perform these steps on every node):

Set the hostname (replace ceph1 with each node's name from the host plan) and install the required packages:

hostnamectl set-hostname ceph1
hostname ceph1
yum install -y net-tools wget vim
yum update
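Since each node's hostname follows directly from its IP in the host plan, the mapping can be scripted instead of typed per node. A minimal sketch, assuming the 172.25.254.130 to 172.25.254.135 → ceph1 to ceph6 addressing used in /etc/hosts; `hostname_for_ip` is a hypothetical helper name, not part of any tool:

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a node IP from the host plan to its hostname.
# Assumes 172.25.254.130 -> ceph1 through 172.25.254.135 -> ceph6, as in /etc/hosts.
hostname_for_ip() {
    local ip=$1
    case $ip in
        172.25.254.13[0-5])
            # The last octet minus 129 gives the node number (130 -> 1).
            echo "ceph$(( ${ip##*.} - 129 ))"
            ;;
        *)
            echo "unknown node IP: $ip" >&2
            return 1
            ;;
    esac
}
```

For example, on the node with address 172.25.254.131 you could run `hostnamectl set-hostname "$(hostname_for_ip 172.25.254.131)"`, which sets the name to ceph2.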

Configure the Aliyun mirrors:

rm -f /etc/yum.repos.d/*
wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
sed -i '/aliyuncs.com/d' /etc/yum.repos.d/*.repo
echo '# Aliyun Ceph repository
[ceph]
name=ceph
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/x86_64/
gpgcheck=0
[ceph-noarch]
name=cephnoarch
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch/
gpgcheck=0
[ceph-source]
name=ceph-source
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS/
gpgcheck=0
#'>/etc/yum.repos.d/ceph.repo
yum clean all && yum makecache
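Typing a multi-line `echo` at the prompt is easy to get wrong. The same ceph.repo can be written non-interactively with a heredoc. A sketch, assuming the Aliyun Luminous directory layout shown above; `make_ceph_repo` and its release argument are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a ceph.repo for the given release, following the
# Aliyun mirror layout used above: rpm-<release>/el7/{x86_64,noarch,SRPMS}.
make_ceph_repo() {
    local release=${1:-luminous}
    local base="http://mirrors.aliyun.com/ceph/rpm-${release}/el7"
    # Unquoted EOF so that ${base} is expanded inside the heredoc.
    cat <<EOF
# Aliyun Ceph repository
[ceph]
name=ceph
baseurl=${base}/x86_64/
gpgcheck=0
[ceph-noarch]
name=cephnoarch
baseurl=${base}/noarch/
gpgcheck=0
[ceph-source]
name=ceph-source
baseurl=${base}/SRPMS/
gpgcheck=0
EOF
}
```

Run `make_ceph_repo luminous > /etc/yum.repos.d/ceph.repo` as root, then `yum clean all && yum makecache`. Whether other release names exist on the mirror under the same layout is an assumption to check before relying on the argument.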

Configure time synchronization:

yum install -y ntp ntpdate ntp-doc
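Time sync matters here because Ceph raises a clock-skew health warning when monitor clocks drift apart by more than `mon_clock_drift_allowed` (0.05 s by default). The sketch below only illustrates that threshold comparison; `clock_offset_ok` is hypothetical, and it assumes you supply the offset in seconds yourself (for example from `ntpq -p`, whose offset column is in milliseconds, so divide by 1000):

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed when the absolute clock offset (in seconds)
# is within the allowed drift; 0.05 mirrors Ceph's default mon_clock_drift_allowed.
clock_offset_ok() {
    local offset=$1 allowed=${2:-0.05}
    # awk does the floating-point comparison that plain bash cannot.
    awk -v o="$offset" -v a="$allowed" \
        'BEGIN { if (o < 0) o = -o; exit !(o <= a) }'
}
```

`clock_offset_ok 0.012 && echo ok` prints ok; pass a second argument to compare against a different threshold.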

On the deploy node, map the hostnames in /etc/hosts:

[root@ceph1 ~]# vi /etc/hosts

172.25.254.130 ceph1
172.25.254.131 ceph2
172.25.254.132 ceph3
172.25.254.133 ceph4
172.25.254.134 ceph5
172.25.254.135 ceph6
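When several nodes need the same entries, appending them from a script avoids typos and duplicate lines on re-runs. A minimal sketch, assuming the ceph<i> at 172.25.254.(129 + i) scheme above; `add_ceph_hosts` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" lines for ceph1..cephN to a hosts
# file, skipping names that are already present, so re-running is harmless.
# Assumes the scheme above: ceph<i> lives at 172.25.254.(129 + i).
add_ceph_hosts() {
    local file=$1 count=${2:-6} i ip name
    for (( i = 1; i <= count; i++ )); do
        ip="172.25.254.$(( 129 + i ))"
        name="ceph${i}"
        # -w matches whole words only, so an existing "ceph10" does not count as "ceph1".
        if ! grep -qw "$name" "$file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$file"
        fi
    done
}
```

Run it as root with `add_ceph_hosts /etc/hosts 6`; running it a second time adds nothing.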

Configure passwordless SSH login from the deploy node to all OSD nodes:

[root@ceph1 ~]# useradd manager # create a non-root user

[root@ceph1 ~]# echo redhat | passwd --stdin manager

[root@ceph1 ~]# echo "manager ALL = (root) NOPASSWD:ALL" > /etc/sudoers.d/manager

[root@ceph1 ~]# for i in {1..5}; do echo "====ceph${i}====";ssh root@ceph${i} 'useradd -d /home/cephuser -m cephuser; echo "redhat" | passwd --stdin cephuser'; done # create the cephuser account on every node (ceph1 to ceph5)

[root@ceph1 ~]# for i in {1..5}; do echo "====ceph${i}====";ssh root@ceph${i} 'echo "cephuser ALL = (root) NOPASSWD:ALL" > /etc/sudoers.d/cephuser'; done

[root@ceph1 ~]# for i in {1..5}; do echo "====ceph${i}====";ssh root@ceph${i} 'chmod 0440 /etc/sudoers.d/cephuser'; done
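The three loops above open three SSH sessions per node. A sketch that does the same work in one session per node, assuming root SSH access to ceph1 through ceph5 as above. `setup_cephuser` is hypothetical, and the `SSH` variable exists only so the loop can be dry-run with `SSH=echo`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: on ceph1..cephN, create cephuser (if missing), set its
# password, and grant passwordless sudo, using one SSH session per node.
# SSH defaults to ssh; set SSH=echo to print the commands instead of running them.
setup_cephuser() {
    local count=${1:-5} ssh_cmd=${SSH:-ssh} i
    # Single quotes keep everything unexpanded here; the remote shell runs it line by line.
    local remote='id cephuser >/dev/null 2>&1 || useradd -d /home/cephuser -m cephuser
echo "redhat" | passwd --stdin cephuser
echo "cephuser ALL = (root) NOPASSWD:ALL" > /etc/sudoers.d/cephuser
chmod 0440 /etc/sudoers.d/cephuser'
    for (( i = 1; i <= count; i++ )); do
        echo "====ceph${i}===="
        "$ssh_cmd" "root@ceph${i}" "$remote"
    done
}
```

`SSH=echo setup_cephuser 5` prints what would be sent to each node; `setup_cephuser 5` runs it for real.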

[root@ceph1 ~]# su - cephuser

[cephuser@ceph1 ~]$ ssh-keygen -f ~/.ssh/id_rsa -N ''

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.130

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.131

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.132

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.133

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.134

Install pip on the deploy node ceph1:

[root@ceph1 ~]# curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

[root@ceph1 ~]# python get-pip.py
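One caveat for this step: CentOS 7's default `python` is 2.7, and the current get-pip.py no longer supports Python 2. PyPA serves pinned copies for older interpreters under `https://bootstrap.pypa.io/pip/X.Y/get-pip.py`. A sketch that picks the URL, assuming only the 2.7 and 3.6 pinned copies (check the directory listing for other versions); `get_pip_url` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: choose a get-pip.py URL for a Python "major.minor" version.
# Interpreters too old for the current script need the pinned copies under /pip/X.Y/.
get_pip_url() {
    local ver=$1
    case $ver in
        2.7|3.6)
            echo "https://bootstrap.pypa.io/pip/${ver}/get-pip.py" ;;
        *)
            echo "https://bootstrap.pypa.io/get-pip.py" ;;
    esac
}
```

For example: `ver=$(python -c 'import sys; print("%d.%d" % sys.version_info[:2])')`, then `curl "$(get_pip_url "$ver")" -o get-pip.py && python get-pip.py`.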

Edit the SSH client config file on the deploy node:

[cephuser@ceph1 ~]$ vi ~/.ssh/config

Host node1
    Hostname ceph1
    User cephuser
Host node2
    Hostname ceph2
    User cephuser
Host node3
    Hostname ceph3
    User cephuser
Host node4
    Hostname ceph4
    User cephuser
Host node5
    Hostname ceph5
    User cephuser

[cephuser@ceph1 ~]$ chmod 600 .ssh/config
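With more nodes, generating this file is less error-prone than typing each block. A sketch that prints node1 to nodeN entries in the same form as above; `make_ssh_config` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print ~/.ssh/config blocks mapping the aliases
# node1..nodeN to hosts ceph1..cephN, all logging in as the given user.
make_ssh_config() {
    local count=${1:-5} user=${2:-cephuser} i
    for (( i = 1; i <= count; i++ )); do
        printf 'Host node%d\n    Hostname ceph%d\n    User %s\n' "$i" "$i" "$user"
    done
}
```

Run `make_ssh_config 5 > ~/.ssh/config && chmod 600 ~/.ssh/config`. Note that ssh only applies a block when the name you connect to matches its `Host` line, so the node1 to node5 aliases do not affect `ssh ceph2` or ceph-deploy's connections to ceph2; if you want that, put the real hostnames on the `Host` lines instead.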

Test:

[cephuser@ceph1 ~]$ ssh cephuser@ceph2

[cephuser@ceph2 ~]$ exit

Disable SELinux enforcement (switch to permissive mode) and configure the firewall:

[root@ceph1 ~]# sed -i '/^SELINUX=.*/c SELINUX=permissive' /etc/selinux/config

Leave SELINUXTYPE unchanged: it names the policy to load (targeted by default), and disabled is not a valid value for it.

[root@ceph1 ~]# grep --color=auto '^SELINUX' /etc/selinux/config

SELINUX=permissive

SELINUXTYPE=targeted

[root@ceph1 ~]# setenforce 0
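Because `sed` writes whatever value it is given, a typo such as `perimissive` in /etc/selinux/config goes unnoticed, since nothing reads the file again until the next boot. A minimal sketch that checks both keys, assuming the CentOS 7 policy names (targeted, minimum, mls) for SELINUXTYPE; `check_selinux_config` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: validate the two keys of an /etc/selinux/config-style file.
# SELINUX must be a mode; SELINUXTYPE must name a policy
# (assumed here to be one of the CentOS 7 policies: targeted, minimum, mls).
check_selinux_config() {
    local file=${1:-/etc/selinux/config} mode policy
    # '^SELINUX=' does not match SELINUXTYPE lines, because 'T' follows SELINUX there.
    mode=$(sed -n 's/^SELINUX=//p' "$file")
    policy=$(sed -n 's/^SELINUXTYPE=//p' "$file")
    case $mode in
        enforcing|permissive|disabled) ;;
        *) echo "invalid SELINUX=$mode" >&2; return 1 ;;
    esac
    case $policy in
        targeted|minimum|mls) ;;
        *) echo "invalid SELINUXTYPE=$policy" >&2; return 1 ;;
    esac
}
```

`check_selinux_config && echo ok` checks /etc/selinux/config; pass a path to check another file.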

[root@ceph1 ~]# firewall-cmd --list-all

public (active)
  target: default
  icmp-block-inversion: no
  interfaces: ens33
  sources:
  services: ssh dhcpv6-client
  ports:
  protocols:
  masquerade: no
  forward-ports:
  source-ports:
  icmp-blocks:
  rich rules:

[root@ceph1 ~]# firewall-cmd --zone=public --add-port 80/tcp --permanent

[root@ceph1 ~]# firewall-cmd --zone=public --add-port 6800-7300/tcp --permanent

[root@ceph1 ~]# firewall-cmd --reload
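The same firewall rules can be applied from a list, which also makes them easy to repeat on every node. A sketch, assuming firewalld's public zone as above; `open_ceph_ports` is hypothetical and `FIREWALL_CMD=echo` gives a dry run:

```bash
#!/usr/bin/env bash
# Hypothetical helper: permanently open each given port or range in firewalld's
# public zone, then reload. Set FIREWALL_CMD=echo for a dry run.
open_ceph_ports() {
    local fw=${FIREWALL_CMD:-firewall-cmd} port
    for port in "$@"; do
        "$fw" --zone=public --add-port="$port" --permanent || return 1
    done
    "$fw" --reload
}
```

For instance, on a monitor node such as ceph2: `open_ceph_ports 80/tcp 6789/tcp 6800-7300/tcp`. The 6789/tcp entry is an addition to the list above: it is the default monitor port in Luminous, so other nodes and clients must be able to reach it on monitor hosts.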

Create the cluster

All of the following steps are performed on the deploy node (ceph1).

[root@ceph1 ~]# su - cephuser

Create a working directory for the cluster configuration files:

[cephuser@ceph1 ~]$ cd

[cephuser@ceph1 ~]$ mkdir my-cluster

[cephuser@ceph1 ~]$ cd my-cluster

Create the new cluster, with ceph2 as the initial monitor node:

[cephuser@ceph1 my-cluster]$ ceph-deploy new ceph2

[cephuser@ceph1 my-cluster]$ ll

-rw-rw-r--. 1 cephuser cephuser 197 Mar 14 23:13 ceph.conf
-rw-rw-r--. 1 cephuser cephuser 3166 Mar 14 23:13 ceph-deploy-ceph.log
-rw-------. 1 cephuser cephuser 73 Mar 14 23:13 ceph.mon.keyring

The directory now contains a Ceph configuration file, a monitor keyring, and a log file.

Configure the networks by adding the following to the [global] section:

[cephuser@ceph1 my-cluster]$ vi ceph.conf

public network = 172.25.254.0/24
cluster network = 192.168.2.0/24
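A typo in these values (for example `172.15.254.024` instead of `172.25.254.0/24`) is easy to miss in ceph.conf. A quick syntax check before running ceph-deploy catches most of them. A minimal sketch; `valid_cidr` is hypothetical and only checks the a.b.c.d/nn form, not that the address is a network address or that the host has an interface in that network:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 looks like an IPv4 CIDR (a.b.c.d/nn),
# with every octet 0-255 and a prefix length 0-32.
valid_cidr() {
    local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})/([0-9]{1,2})$'
    [[ $1 =~ $re ]] || return 1
    local i
    for i in 1 2 3 4; do
        # 10# forces base 10, so an octet like "024" is not read as octal.
        (( 10#${BASH_REMATCH[i]} <= 255 )) || return 1
    done
    (( 10#${BASH_REMATCH[5]} <= 32 ))
}
```

`valid_cidr 172.25.254.0/24 && valid_cidr 192.168.2.0/24 && echo ok` confirms both values used here.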

Install Ceph on all nodes:

[cephuser@ceph1 my-cluster]$ ceph-deploy install ceph1 ceph2 ceph3 ceph4 ceph5

Initialize the monitor and gather the keys:

[cephuser@ceph1 my-cluster]$ ceph-deploy mon create-initial

[cephuser@ceph1 my-cluster]$ ll

-rw-------. 1 cephuser cephuser 71 Mar 14 23:29 ceph.bootstrap-mds.keyring
-rw-------. 1 cephuser cephuser 71 Mar 14 23:29 ceph.bootstrap-mgr.keyring
-rw-------. 1 cephuser cephuser 71 Mar 14 23:29 ceph.bootstrap-osd.keyring
-rw-------. 1 cephuser cephuser 71 Mar 14 23:29 ceph.bootstrap-rgw.keyring
-rw-------. 1 cephuser cephuser 63 Mar 14 23:29 ceph.client.admin.keyring
-rw-rw-r--. 1 cephuser cephuser 197 Mar 14 23:29 ceph.conf
-rw-rw-r--. 1 cephuser cephuser 310977 Mar 14 23:30 ceph-deploy-ceph.log
-rw-------. 1 cephuser cephuser 73 Mar 14 23:29 ceph.mon.keyring
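Before moving on, it helps to confirm that `ceph-deploy mon create-initial` really gathered all the keyrings listed above; if the step failed partway, some of them will be missing. A sketch; `check_keyrings` is hypothetical and its file list is taken from the listing above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: after "ceph-deploy mon create-initial", confirm that each
# expected keyring exists and is non-empty in the given directory.
check_keyrings() {
    local dir=${1:-.} missing=0 f
    for f in ceph.client.admin.keyring ceph.mon.keyring \
             ceph.bootstrap-{mds,mgr,osd,rgw}.keyring; do
        if [[ ! -s "$dir/$f" ]]; then
            echo "missing or empty: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Run `check_keyrings ~/my-cluster` on the deploy node; it reports each missing file and returns non-zero if any are absent.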

Deploy the MGR daemons:

[cephuser@ceph1 my-cluster]$ ceph-deploy mgr create ceph2 ceph3 ceph4

The command hung, so I started over.
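When a ceph-deploy step fails or hangs like this, the Ceph quick-start documentation describes starting over by purging the packages and data from the nodes, forgetting the gathered keys, and removing the generated files (`ceph-deploy purge`, `ceph-deploy purgedata`, `ceph-deploy forgetkeys`, then `rm ceph.*`). A sketch wrapping that sequence; `start_over` is hypothetical, it must be run from the my-cluster directory, and `CEPH_DEPLOY=echo` gives a dry run:

```bash
#!/usr/bin/env bash
# Sketch of the "starting over" sequence from the ceph-deploy quick start.
# Run it from the cluster working directory (my-cluster above), because the
# last step deletes the generated ceph.* files in the current directory.
# Set CEPH_DEPLOY=echo for a dry run.
start_over() {
    local deploy=${CEPH_DEPLOY:-ceph-deploy}
    if (( $# == 0 )); then
        echo "usage: start_over NODE..." >&2
        return 1
    fi
    "$deploy" purge "$@" || return 1      # remove the Ceph packages from the nodes
    "$deploy" purgedata "$@" || return 1  # remove Ceph data from the nodes
    "$deploy" forgetkeys || return 1      # drop the keyrings gathered locally
    rm -f ceph.*                          # ceph.conf and the ceph.*.keyring files
}
```

For the nodes used here: `cd ~/my-cluster && start_over ceph1 ceph2 ceph3 ceph4 ceph5`. Because this also deletes ceph.conf, the public and cluster network settings have to be added again after the next `ceph-deploy new`.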