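Environment

CentOS 7.2

Contents

Docker installation / MySQL master-slave replication with Docker (reference link)

Changing a Docker container's port mapping through its configuration files

Building a load-balanced cluster for a project with Docker

Changing a Docker container's port mapping through its configuration files

Docker installation / MySQL master-slave replication with Docker (reference link)

Changing a Docker container's port mapping through its configuration files (reference link)

(Recommended) A summary of ways to map a Docker container's internal ports to ports on the external host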

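Sometimes we need to add a port mapping to a container that is already running. A quick search on Baidu turns up many answers that either manipulate iptables directly, or commit the current container to an image with docker commit and then run docker run again with the extra port mapping. These approaches work, but they feel roundabout and unnecessary. After reading many articles, I could not find a reasonably "elegant" way to solve the problem.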

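Note:

A host port can be mapped to only one container port. For example, once 8080->80 exists, 8080->81 is not possible.

A container port can be mapped from several host ports, for example 8080->80, 8090->80, 8099->80.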
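The two rules above can be checked mechanically before editing any file. The following is a minimal sketch, assuming mappings are written as (host_port, container_port) pairs; the helper name find_conflicts is hypothetical, and the sketch ignores protocol (tcp/udp) and HostIp, which a stricter check would also compare.

```python
from typing import Dict, Iterable, List, Tuple

# Each mapping is (host_port, container_port), e.g. (8080, 80) for 8080->80.
Mapping = Tuple[int, int]


def find_conflicts(mappings: Iterable[Mapping]) -> List[int]:
    """Return host ports that are mapped to more than one container port."""
    seen: Dict[int, int] = {}   # host_port -> first container_port seen
    conflicts: List[int] = []
    for host_port, container_port in mappings:
        if host_port in seen and seen[host_port] != container_port:
            # Same host port, different container port: not allowed.
            if host_port not in conflicts:
                conflicts.append(host_port)
        else:
            seen.setdefault(host_port, container_port)
    return conflicts


# One container port published on several host ports is fine.
ok = find_conflicts([(8080, 80), (8090, 80), (8099, 80)])
# Reusing host port 8080 for a second container port is a conflict.
bad = find_conflicts([(8080, 80), (8080, 81)])
print(ok, bad)
```

The first call reports no conflicts and the second reports host port 8080.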

Steps for changing a Docker port mapping

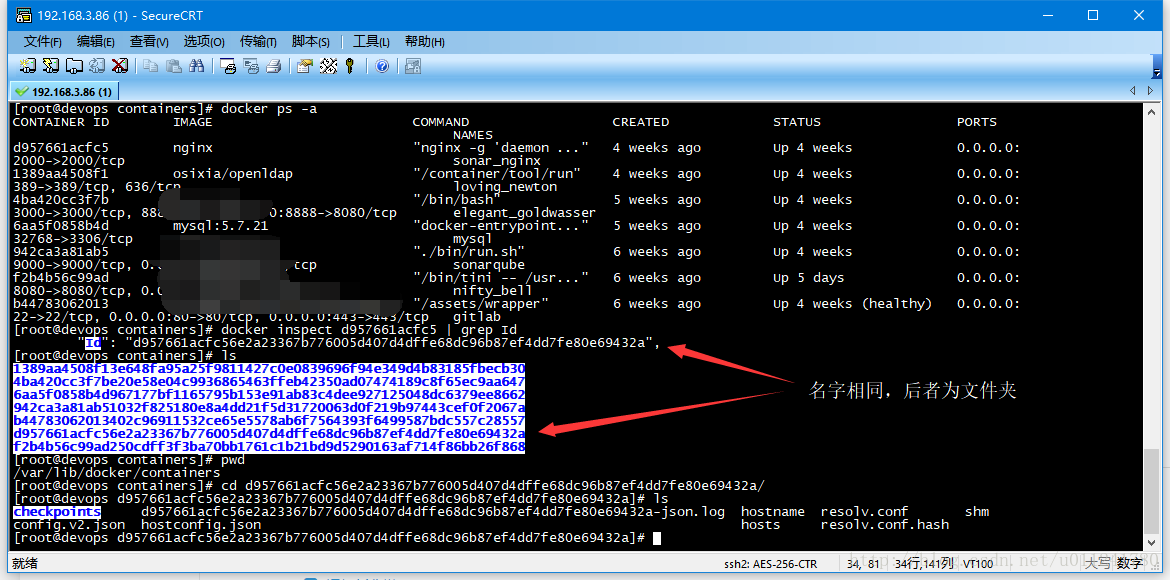

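1. Run docker ps -a to find the CONTAINER ID of the container you want to change.

2. Run docker inspect <CONTAINER ID> | grep Id to get the container's full Id.

3. Run cd /var/lib/docker/containers and find the directory whose name matches that Id, as shown in the figure below.

4. Stop the Docker engine service with systemctl stop docker or service docker stop. Do this before editing: the daemon writes these files from its own in-memory state, so changes made while it is running are likely to be overwritten.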

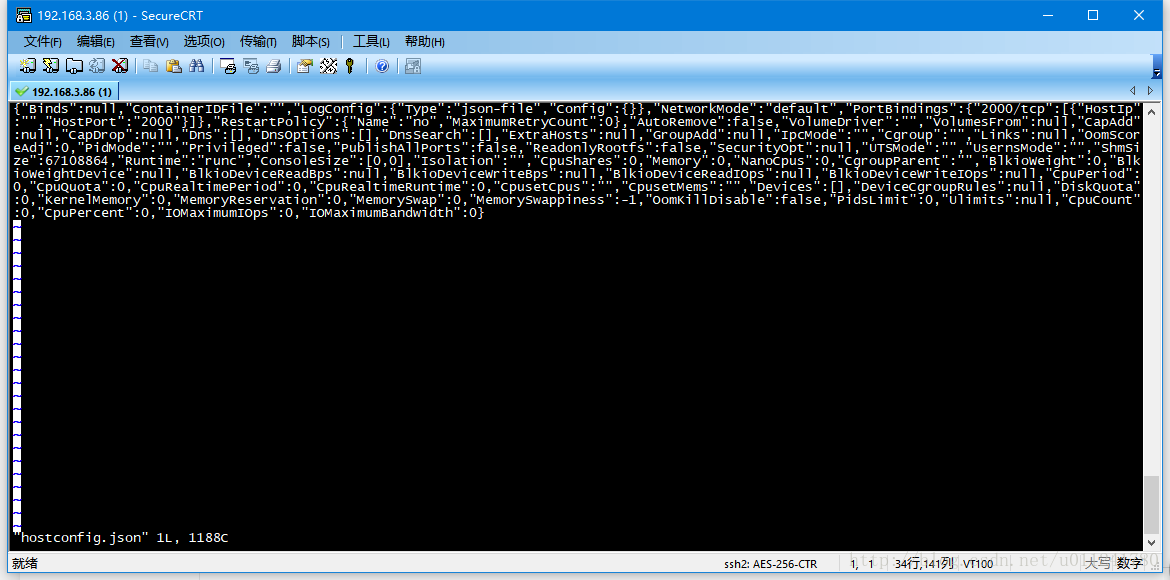

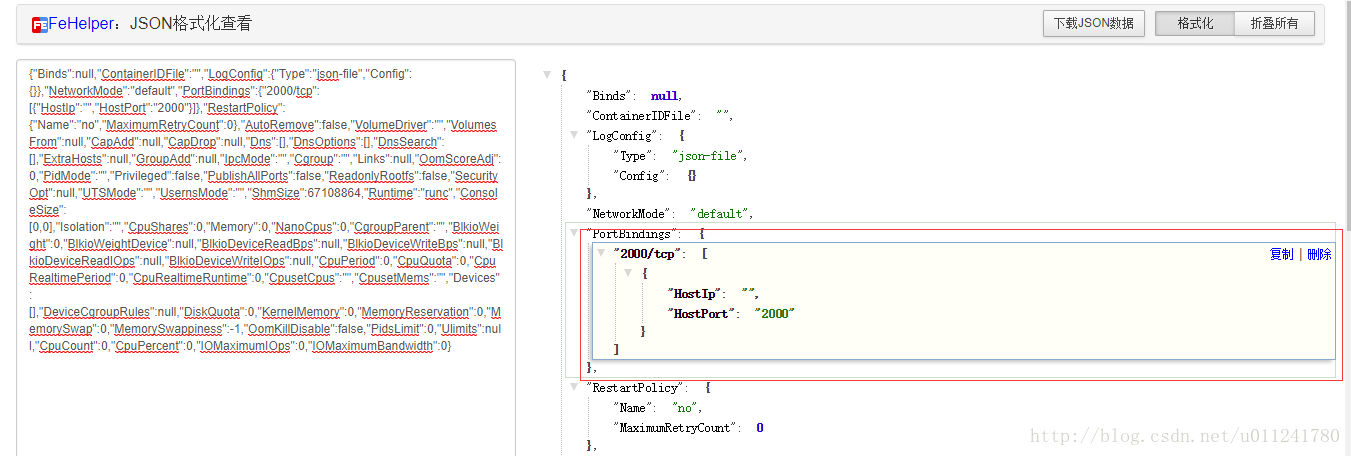

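5. Enter the directory for that Id and, as shown in the figures above, edit hostconfig.json and config.v2.json. Start with vi hostconfig.json.

The file is stored as a single unformatted line and is hard to read; a JSON formatter makes the details easier to see.

Then, following the entry highlighted by the box, add another entry of the same shape. For example, to add port 80, add the following under PortBindings, separating port entries with ASCII commas: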

"80/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "80"

}

]

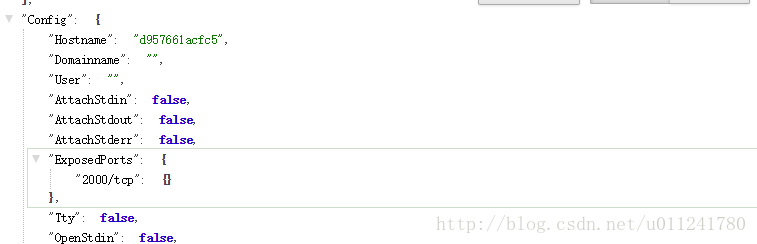

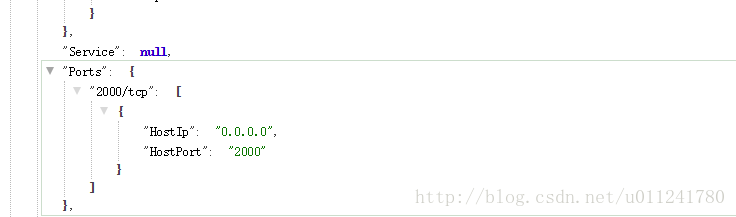

Next, edit config.v2.json with vi config.v2.json.

Find the ExposedPorts and Ports entries and add the port mapping you need, following the pattern of the existing entries.


After saving, run systemctl start docker. Once the container has been started again, the new port mapping is visible.
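The manual edits in step 5 touch three places: PortBindings in hostconfig.json, and Config.ExposedPorts and NetworkSettings.Ports in config.v2.json. The sketch below applies the same change to already-parsed dictionaries. The function name add_port_mapping is hypothetical, and reading and writing the real files (with Docker stopped) is deliberately left out; treat it as an illustration of the data shape rather than a supported Docker interface.

```python
import json


def _append_binding(table: dict, key: str, binding: dict) -> None:
    """Append a binding under key, tolerating a missing or null entry."""
    bindings = table.get(key) or []
    bindings.append(dict(binding))
    table[key] = bindings


def add_port_mapping(hostconfig: dict, config_v2: dict,
                     container_port: int, host_port: int,
                     proto: str = "tcp", host_ip: str = "0.0.0.0") -> None:
    """Add host_port -> container_port to both parsed config dicts in place."""
    key = f"{container_port}/{proto}"          # e.g. "80/tcp"
    binding = {"HostIp": host_ip, "HostPort": str(host_port)}

    # hostconfig.json: PortBindings
    port_bindings = hostconfig.get("PortBindings") or {}
    _append_binding(port_bindings, key, binding)
    hostconfig["PortBindings"] = port_bindings

    # config.v2.json: Config.ExposedPorts
    config = config_v2.setdefault("Config", {})
    exposed = config.get("ExposedPorts") or {}
    exposed.setdefault(key, {})
    config["ExposedPorts"] = exposed

    # config.v2.json: NetworkSettings.Ports
    net = config_v2.setdefault("NetworkSettings", {})
    ports = net.get("Ports") or {}
    _append_binding(ports, key, binding)
    net["Ports"] = ports


# Trimmed-down stand-ins for the two files, holding one existing mapping.
existing = [{"HostIp": "0.0.0.0", "HostPort": "3306"}]
hostconfig = {"PortBindings": {"3306/tcp": list(existing)}}
config_v2 = {
    "Config": {"ExposedPorts": {"3306/tcp": {}}},
    "NetworkSettings": {"Ports": {"3306/tcp": list(existing)}},
}

add_port_mapping(hostconfig, config_v2, container_port=80, host_port=80)
print(json.dumps(hostconfig, indent=2))
```

Printing with json.dumps(..., indent=2) also gives the readable view that a JSON formatter would.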

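Configuration example

The files below map host port 3306 to container port 3306

and host port 8085 to container port 8084 (in the JSON, the key such as "8084/tcp" is the container port and HostPort is the host port).

As noted earlier, one container port can be published on several host ports, but a host port can map to only one container port.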

- config.v2.json

{

"StreamConfig": { },

"State": {

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"RemovalInProgress": false,

"Dead": false,

"Pid": 7758,

"ExitCode": 0,

"Error": "",

"StartedAt": "2019-03-02T02:35:48.538300741Z",

"FinishedAt": "2019-03-01T07:16:15.64603722Z",

"Health": null

},

"ID": "7187b75620b4fa53f5bdaf4e50de0c7e9a152a261c7da1494139a50a7f65db74",

"Created": "2019-02-27T12:36:58.831703086Z",

"Managed": false,

"Path": "/usr/sbin/init",

"Args": [ ],

"Config": {

"Hostname": "7187b75620b4",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"3306/tcp": { },

"8084/tcp": { }

},

"Tty": true,

"OpenStdin": true,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"

],

"Cmd": [

"/usr/sbin/init"

],

"Image": "1e1148e4cc2c",

"Volumes": null,

"WorkingDir": "",

"Entrypoint": null,

"OnBuild": null,

"Labels": {

"org.label-schema.build-date": "20181205",

"org.label-schema.license": "GPLv2",

"org.label-schema.name": "CentOS Base Image",

"org.label-schema.schema-version": "1.0",

"org.label-schema.vendor": "CentOS"

}

},

"Image": "sha256:1e1148e4cc2c148c6890a18e3b2d2dde41a6745ceb4e5fe94a923d811bf82ddb",

"NetworkSettings": {

"Bridge": "",

"SandboxID": "357f85e1a5521d83e0c801d960f039049999e626b53de452f9beefc910e3413e",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "b36eada156be7ce2d227cbcfe6c5864fdff69b5158411ab14e51738a9ac854fa",

"EndpointID": "3b80e8660c842622866614b6eee9755e4ba58707df28041eb7b3ddacda805b62",

"Gateway": "172.18.0.1",

"IPAddress": "172.18.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:12:00:02",

"IPAMOperational": false

}

},

"Service": null,

"Ports": {

"3306/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "3306"

}

],

"8084/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "8085"

}

]

},

"SandboxKey": "/var/run/docker/netns/357f85e1a552",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"IsAnonymousEndpoint": false,

"HasSwarmEndpoint": false

},

"LogPath": "",

"Name": "/mysql-master",

"Driver": "overlay2",

"MountLabel": "",

"ProcessLabel": "",

"RestartCount": 0,

"HasBeenStartedBefore": true,

"HasBeenManuallyStopped": false,

"MountPoints": { },

"SecretReferences": null,

"AppArmorProfile": "",

"HostnamePath": "/var/lib/docker/containers/7187b75620b4fa53f5bdaf4e50de0c7e9a152a261c7da1494139a50a7f65db74/hostname",

"HostsPath": "/var/lib/docker/containers/7187b75620b4fa53f5bdaf4e50de0c7e9a152a261c7da1494139a50a7f65db74/hosts",

"ShmPath": "/var/lib/docker/containers/7187b75620b4fa53f5bdaf4e50de0c7e9a152a261c7da1494139a50a7f65db74/shm",

"ResolvConfPath": "/var/lib/docker/containers/7187b75620b4fa53f5bdaf4e50de0c7e9a152a261c7da1494139a50a7f65db74/resolv.conf",

"SeccompProfile": "",

"NoNewPrivileges": false

}

- hostconfig.json

{

"Binds": null,

"ContainerIDFile": "",

"LogConfig": {

"Type": "journald",

"Config": { }

},

"NetworkMode": "default",

"PortBindings": {

"3306/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "3306"

}

],

"8084/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "8085"

}

]

},

"RestartPolicy": {

"Name": "no",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"CapAdd": null,

"CapDrop": null,

"Dns": [ ],

"DnsOptions": [ ],

"DnsSearch": [ ],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "",

"Cgroup": "",

"Links": [ ],

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "docker-runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": null,

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [ ],

"DiskQuota": 0,

"KernelMemory": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": -1,

"OomKillDisable": false,

"PidsLimit": 0,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0

}
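Because the direction of a mapping is easy to misread in these files, it can help to print PortBindings in the host->container form. This is a small sketch under the assumption that the data has the shape shown in hostconfig.json above; describe_port_bindings is a hypothetical helper name.

```python
import json


def describe_port_bindings(hostconfig: dict) -> list:
    """Return lines like '0.0.0.0:8085->8084/tcp' (host side first)."""
    lines = []
    port_bindings = hostconfig.get("PortBindings") or {}
    for container_key, bindings in sorted(port_bindings.items()):
        for b in bindings or []:
            lines.append(f'{b.get("HostIp", "")}:{b["HostPort"]}->{container_key}')
    return lines


# The PortBindings section from the example, trimmed to the relevant keys.
sample = json.loads("""
{
  "PortBindings": {
    "3306/tcp": [{"HostIp": "0.0.0.0", "HostPort": "3306"}],
    "8084/tcp": [{"HostIp": "0.0.0.0", "HostPort": "8085"}]
  }
}
""")

for line in describe_port_bindings(sample):
    print(line)
```

For the example data this lists host port 3306 going to container port 3306/tcp and host port 8085 going to container port 8084/tcp.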

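Building a load-balanced cluster for a project with Docker

(Recommended) Deep dive into nginx series: load balancing with nginx

Steps

Install Docker; see the first item in the contents.

Create at least two containers, each running your project (set up a mapping between host ports and container ports; the upstream example at the end of this article addresses the containers by their container IPs instead).

Install nginx on the host.

Configure load balancing on the host (for the configuration, see the linked article "Deep dive into nginx series: load balancing with nginx").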
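The last step boils down to writing an upstream block that lists one server line per container. As a rough sketch, the block can be generated from a list of (ip, port, weight) tuples; render_upstream is a hypothetical helper, and the addresses are the container IPs used in the encry example later in this article.

```python
from typing import List, Tuple


def render_upstream(name: str, servers: List[Tuple[str, int, int]]) -> str:
    """Render an nginx upstream block; each server is (ip, port, weight)."""
    lines = [f"upstream {name} {{"]
    for ip, port, weight in servers:
        lines.append(f"    server {ip}:{port} weight={weight};")
    lines.append("}")
    return "\n".join(lines)


block = render_upstream("encry", [("172.18.0.2", 8084, 1),
                                  ("172.18.0.3", 8085, 2)])
print(block)
```

The generated text is only the upstream part; a server block with proxy_pass http://encry; is still needed to route requests to it.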

Configuration

1. nginx configuration (nginx.conf)

# For more information on configuration, see:

# * Official English Documentation: http://nginx.org/en/docs/

# * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;

# (set to auto here; you can set it yourself, usually no higher than the number of CPU cores)

worker_processes auto;

# (error log path)

error_log /var/log/nginx/error.log;

# (pid file path)

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

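# (serializes the accepting of new connections to avoid the thundering herd problem; the default was on before nginx 1.11.3 and is off since)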

accept_mutex on;

# (whether a worker process accepts several new connections at a time; default is off)

multi_accept on;

# (maximum number of connections per worker process)

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

# (commented out here)

#tcp_nopush on;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

## Settings

charset utf-8;

server_names_hash_bucket_size 128;

large_client_header_buffers 4 64k;

client_max_body_size 16m;

client_body_buffer_size 128k;

client_header_buffer_size 32k;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 300;

proxy_read_timeout 300;

proxy_send_timeout 300;

## (keep-alive connection timeout, in seconds)

keepalive_timeout 120;

proxy_buffers 4 32k;

proxy_buffer_size 64k;

proxy_busy_buffers_size 64k;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

fastcgi_connect_timeout 300;

fastcgi_send_timeout 300;

fastcgi_read_timeout 300;

fastcgi_buffer_size 64k;

fastcgi_buffers 4 64k;

fastcgi_busy_buffers_size 128k;

fastcgi_temp_file_write_size 128k;

# Enable compression

gzip on;

gzip_min_length 1k;

gzip_buffers 4 16k;

gzip_http_version 1.0;

gzip_comp_level 2;

gzip_types text/plain application/x-javascript text/css application/xml;

gzip_vary on;

underscores_in_headers on;

limit_req_zone $binary_remote_addr zone=one:10m rate=10r/s;

log_format access '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

# Additional configuration, not enabled for now

#lua_package_path "/data/nginx/conf/waf/?.lua";

#init_by_lua_file /data/nginx/conf/waf/init.lua;

#access_by_lua_file /data/nginx/conf/waf/waf.lua;

#lua_shared_dict limit 10m;

# Load modular configuration files from the /etc/nginx/conf.d directory.

# See http://nginx.org/en/docs/ngx_core_module.html#include

# for more information.

# The included configuration files are loaded here

include /etc/nginx/conf.d/*.conf;

#include /etc/nginx/conf.d/kunzainginx.conf;

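# Load balancing is configured here. Strategies built into nginx include round-robin, weighted round-robin, ip_hash and least_conn; response-time-based balancing needs the third-party fair module (or least_time in NGINX Plus).

# The default is round-robin, which spreads HTTP requests evenly.

# With weights, requests are distributed in proportion to weight: a higher weight receives more load.

# ip_hash assigns by client IP, so the same IP keeps going to the same server.

# Response time: requests go preferentially to the servers that respond to nginx fastest.

# These strategies can be combined where appropriate.

#upstream tomcat { # (tomcat is a user-chosen upstream name; it must match the name used in server -> location -> proxy_pass http://<name>;)

#ip_hash; # (enables the ip_hash method)

#server 192.168.14.132:8080 weight=5; # (weight is the server's weight)

#server 192.168.14.133:8080 weight=3;

## Several upstream groups can be defined

#}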

#server {

# (listens on port 80 by default)

#listen 80 default_server;

# (for server_name I use the domain that clients visit, e.g. baidu.com)

#server_name _;

#root /usr/share/nginx/html;

# Load configuration files for the default server block.

#include /etc/nginx/default.d/*.conf;

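# ( / matches all requests; other locations or servers can be defined to apply different load-balancing rules and services to different domains)

#location / {

# proxy_pass http://tomcat; # (reverse proxy; use your own upstream name)

# proxy_redirect off; # (the settings below can be copied as-is; without them some things, such as authentication, may fail)

# proxy_set_header Host $host;

#proxy_set_header X-Real-IP $remote_addr;

#proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

#proxy_connect_timeout 90; # (the next few are only timeout settings and are optional)

#proxy_send_timeout 90;

#proxy_read_timeout 90;

# }

# location ~\.(gif|jpg|png)$ { # (for example, a location written as a regular expression)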

# root /home/root/images;

# }

#error_page 404 /404.html;

# location = /40x.html {

#}

#error_page 500 502 503 504 /50x.html;

# location = /50x.html {

#}

}

# Settings for a TLS enabled server.

#

# server {

# listen 443 ssl http2 default_server;

# listen [::]:443 ssl http2 default_server;

# server_name _;

# root /usr/share/nginx/html;

#

# ssl_certificate "/etc/pki/nginx/server.crt";

# ssl_certificate_key "/etc/pki/nginx/private/server.key";

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 10m;

# ssl_ciphers HIGH:!aNULL:!MD5;

# ssl_prefer_server_ciphers on;

#

# # Load configuration files for the default server block.

# include /etc/nginx/default.d/*.conf;

#

# location / {

# }

#

# error_page 404 /404.html;

# location = /40x.html {

# }

#

# error_page 500 502 503 504 /50x.html;

# location = /50x.html {

# }

# }

#}
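The comments in nginx.conf above describe ip_hash as keeping a given client IP on the same server. The nginx documentation states that for IPv4 the first three octets of the client address are used as the hashing key. The sketch below illustrates only that idea: pick_backend is a hypothetical name, and zlib.crc32 is a stand-in, not the hash function nginx actually uses, and server weights and failed servers are ignored.

```python
import ipaddress
import zlib


def pick_backend(client_ip: str, backends: list) -> str:
    """Pick a backend from the client address, ip_hash style (simplified)."""
    addr = ipaddress.ip_address(client_ip)
    if addr.version == 4:
        key = addr.packed[:3]     # first three octets of an IPv4 address
    else:
        key = addr.packed         # the whole IPv6 address
    # crc32 is an assumption for illustration; nginx uses its own hash.
    return backends[zlib.crc32(key) % len(backends)]


backends = ["192.168.14.132:8080", "192.168.14.133:8080"]

# Two clients in the same /24 share a key, so they land on the same backend.
first = pick_backend("10.1.2.10", backends)
second = pick_backend("10.1.2.200", backends)
print(first == second)
```

A consequence of hashing on the /24 prefix is that many clients behind one subnet or NAT gateway all reach the same backend, which can skew the load.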

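The file below is one of those pulled in by include /etc/nginx/conf.d/*.conf; in the configuration above.

It is located at /etc/nginx/conf.d/proxynginx.conf.

Pay attention mainly to the last two blocks of this file.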

server{

listen 80;

server_name test.cn;

location / {

proxy_pass http://127.0.0.1:8080; # 127.0.0.1 stands for the host IP

proxy_set_header User-Agent $http_user_agent;

root /usr/local/java/apache-tomcat-8.0.47-jenkins/webapps/love;

index /pages/index.jsp;

access_log /usr/local/java/apache-tomcat-8.0.47-jenkins/webapps/love/access.log;

}

error_page 404 /404.html;

location = /40x.html {}

error_page 500 502 503 504 /50x.html;

location = /50x.html {}

}

## eureka project

server{

listen 80;

server_name eureka.test.cn;

location / {

proxy_pass http://127.0.0.1:8761; # 127.0.0.1 stands for the host IP

proxy_set_header User-Agent $http_user_agent;

root /usr/local/java/eureka;

index /;

access_log /usr/local/java/eureka/access.log;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

## image project

server{

listen 80;

server_name image.test.cn;

location / {

proxy_pass http://127.0.0.1:8083; # 127.0.0.1 stands for the host IP

proxy_set_header User-Agent $http_user_agent;

root /usr/local/java/eureka;

index /;

access_log /usr/local/java/boot/base-oss/access.log;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

## nexus project

server{

listen 80;

server_name nexus.test.cn;

location / {

proxy_pass http://127.0.0.1:12345; # 127.0.0.1 stands for the host IP

proxy_set_header User-Agent $http_user_agent;

index /;

access_log /usr/local/java/nexus/logs/access.log;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

## invitation project

server{

listen 80;

server_name geer.test.cn;

location / {

proxy_pass http://127.0.0.1:8081; # 127.0.0.1 stands for the host IP

proxy_set_header User-Agent $http_user_agent;

root /usr/local/java/eureka;

index /;

access_log /usr/local/java/boot/boot-invitation/access.log;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

## cat monitoring project

server{

listen 80;

server_name cat.test.cn;

location / {

proxy_pass http://localhost:9090;

proxy_set_header User-Agent $http_user_agent;

index /;

#access_log /usr/local/java/cat/access.log;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

## activeMq project

server{

listen 80;

server_name activemq.test.cn;

location / {

proxy_pass http://127.0.0.1:8161; # 127.0.0.1 stands for the host IP

proxy_set_header User-Agent $http_user_agent;

root /usr/local/java/apache-activemq-5.15.4;

index /;

access_log /usr/local/java/apache-activemq-5.15.4/access.log;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

#

#

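# Load balancing is implemented by the two blocks below

#

# The upstream name here must match the one used in proxy_pass below

upstream encry {

# These are the IP addresses of two different Docker containers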

server 172.18.0.2:8084 weight=1;

server 172.18.0.3:8085 weight=2;

}

## encryption project

server{

listen 80;

server_name encry.test.cn;

location / {

# The name encry is arbitrary

proxy_pass http://encry;

#proxy_pass http://localhost:8084;

proxy_set_header User-Agent $http_user_agent;

index /;

access_log /usr/local/java/boot/encryption/access.log;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}
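To see what weight=1 and weight=2 mean for the encry upstream, the sketch below simulates a smooth weighted round-robin, the scheme nginx's default balancer is generally described as using. It is a simplified model under that assumption: failures, max_fails and connection state are ignored, and smooth_weighted_round_robin is a hypothetical name.

```python
from collections import Counter


def smooth_weighted_round_robin(weights: dict, n: int) -> list:
    """Return the servers chosen for n consecutive requests."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server gains its weight, then the largest one is chosen.
        for server, weight in weights.items():
            current[server] += weight
        best = max(current, key=current.get)   # first maximum wins ties
        # The chosen server is pushed back by the total weight.
        current[best] -= total
        picks.append(best)
    return picks


weights = {"172.18.0.2:8084": 1, "172.18.0.3:8085": 2}
picks = smooth_weighted_round_robin(weights, 6)
counts = Counter(picks)
print(picks)
print(counts)
```

Over six requests the weight=2 container receives four and the weight=1 container two, and the heavier server's turns are interleaved rather than sent back to back for the whole cycle.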