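How it works

1. Replication between replica set members is implemented through the oplog. A secondary queries this collection to learn which operations it needs to replicate.

2. The oplog is a fixed-size (capped) collection in each node's local database. By default its initial size is 5% of the free disk space. Because it is a capped collection, once the oplog is full the oldest entries are overwritten.

3. Changing the size of the oplog directly changes the disk space used by the local database. The size can be set with the oplogSize option in the configuration file (replication.oplogSizeMB in the YAML format).

4. Data is copied to the secondaries by applying the operations recorded in the oplog.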

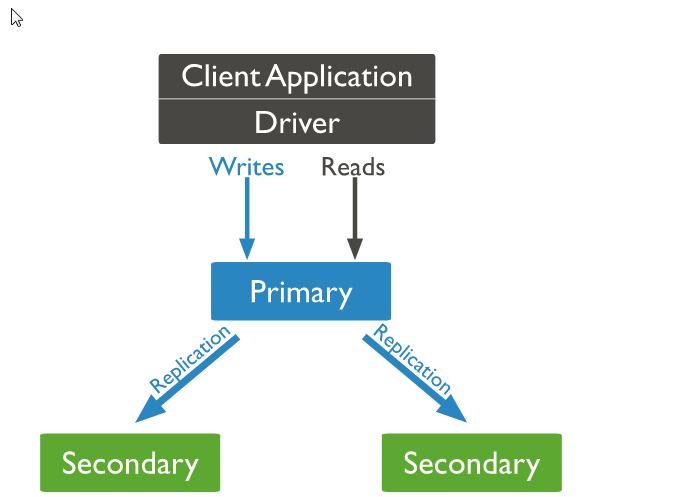

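5. The primary receives all write operations. Applications connect to the primary to write, and the primary records each operation in its oplog. The secondaries continuously replicate this log and apply the operations to their own data sets.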
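
The five points above can be illustrated with a small toy model in plain JavaScript (runnable with Node.js). It is only a sketch and not MongoDB's implementation: the names CappedOplog and Secondary are hypothetical, timestamps are simple counters, and every operation is reduced to "set key to value". It shows a fixed-capacity log that overwrites its oldest entries, and a secondary that pulls everything newer than the last entry it applied.

```javascript
// Toy model only. CappedOplog and Secondary are hypothetical names,
// not MongoDB APIs.
class CappedOplog {
  constructor(capacity) {
    this.capacity = capacity; // maximum number of entries kept
    this.entries = [];        // oldest entry first
    this.nextTs = 1;          // simple increasing logical timestamp
  }

  // Record a write; when the log is full the oldest entry is dropped.
  append(op) {
    const entry = { ts: this.nextTs++, op };
    this.entries.push(entry);
    if (this.entries.length > this.capacity) {
      this.entries.shift();
    }
    return entry;
  }

  // Entries newer than `afterTs`, or null when the entries the caller
  // needs have already been overwritten (the caller is too far behind).
  since(afterTs) {
    const oldest = this.entries.length ? this.entries[0].ts : this.nextTs;
    if (afterTs + 1 < oldest) return null;
    return this.entries.filter((e) => e.ts > afterTs);
  }
}

class Secondary {
  constructor() {
    this.data = {};
    this.lastAppliedTs = 0;
  }

  // Pull new entries from the primary's oplog and apply them in order.
  syncFrom(oplog) {
    const batch = oplog.since(this.lastAppliedTs);
    if (batch === null) return false; // too stale: a full resync is needed
    for (const { ts, op } of batch) {
      this.data[op.key] = op.value;
      this.lastAppliedTs = ts;
    }
    return true;
  }
}
```

In this model a secondary that stays offline for longer than the log can cover gets false back from syncFrom, which is why the oplog size in point 3 matters: a larger oplog gives a node more time to be down and still catch up.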

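Common commands

rs.conf() View the replica set configuration

rs.status() View the replica set status

rs.initiate(config) Initialize the replica set

rs.isMaster() Check whether the current node is the primary

db.getMongo().setSlaveOk(); Allow reads on a secondary for the current connection; run it on the secondary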

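I. Environment preparation

Operating system: CentOS 6.5; MongoDB version: 3.6.6

IP address      Hostname    Role

192.168.1.230   mongodb01   Replica set primary

192.168.1.18    mongodb02   Replica set secondary

192.168.1.247   mongodb03   Replica set secondary

Disable the firewall and SELinux on all three servers.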

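II. Install MongoDB and configure the replica set

1. Install MongoDB

See: https://www.cnblogs.com/wusy/p/10405991.html

2. Replica set configuration

1) If MongoDB was installed with yum, add the following to the configuration file on every server (repset is the replica set name; you may choose your own):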

replication:

  replSetName: repset

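2) If MongoDB was built manually from a downloaded source package, add the following instead (repset is again the replica set name):

replSet=repset

3. Initialize the replica set

The replica set can be initialized from any node; the node on which the initialization is run normally becomes the primary. Here we choose mongodb01 (member priority values can also be used to control which node becomes primary).

1) Connect to MongoDB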

[root@mongodb01 ~]# mongo --host 192.168.1.230

2) Define the replica set configuration. The _id value "repset" must match the replica set name set in the configuration file.

>config={

... _id:"repset",

... members:[

... {_id:0,host:"192.168.1.230:27017"},

... {_id:1,host:"192.168.1.18:27017"},

... {_id:2,host:"192.168.1.247:27017"}

... ]

... }

{

"_id" : "repset",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.230:27017"

},

{

"_id" : 1,

"host" : "192.168.1.18:27017"

},

{

"_id" : 2,

"host" : "192.168.1.247:27017"

}

]

}
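
For larger sets, a configuration document of this shape can be generated instead of typed by hand. The following is a minimal sketch in plain JavaScript; buildReplSetConfig is a hypothetical helper, not part of the mongo shell. It assigns member _id values by position and optionally sets priority, the member field referred to earlier as a way to influence which node becomes primary.

```javascript
// Hypothetical helper: builds a document with the same shape as the
// `config` object defined above. Not a MongoDB API.
function buildReplSetConfig(setName, hosts, priorities = {}) {
  if (!setName) {
    throw new Error("replica set name is required");
  }
  if (new Set(hosts).size !== hosts.length) {
    throw new Error("duplicate host in member list");
  }
  return {
    _id: setName, // must match the replica set name in the config file
    members: hosts.map((host, index) => {
      const member = { _id: index, host };
      // `priority` is optional; a higher value makes the member more
      // likely to be elected primary.
      if (priorities[host] !== undefined) {
        member.priority = priorities[host];
      }
      return member;
    }),
  };
}

// Example: the three hosts from this article, preferring mongodb01.
const generated = buildReplSetConfig(
  "repset",
  ["192.168.1.230:27017", "192.168.1.18:27017", "192.168.1.247:27017"],
  { "192.168.1.230:27017": 2 }
);
```

The resulting object could then be passed to rs.initiate() in the same way as the hand-written config.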

3) Initialize the replica set with this configuration

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1551502195, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1551502195, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

4) Check the state of the cluster nodes (health 1 means the member is healthy, 0 means it is not)

repset:PRIMARY> rs.status()

{

"set" : "repset",

"date" : ISODate("2019-03-02T04:50:47.554Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1551502208, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.230:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 153,

"optime" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-02T04:50:38Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1551502207, 1),

"electionDate" : ISODate("2019-03-02T04:50:07Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.1.18:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 51,

"optime" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-02T04:50:38Z"),

"optimeDurableDate" : ISODate("2019-03-02T04:50:38Z"),

"lastHeartbeat" : ISODate("2019-03-02T04:50:47.382Z"),

"lastHeartbeatRecv" : ISODate("2019-03-02T04:50:46.242Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.247:27017",

"syncSourceHost" : "192.168.1.247:27017",

"syncSourceId" : 2,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.1.247:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 51,

"optime" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1551502238, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-02T04:50:38Z"),

"optimeDurableDate" : ISODate("2019-03-02T04:50:38Z"),

"lastHeartbeat" : ISODate("2019-03-02T04:50:47.382Z"),

"lastHeartbeatRecv" : ISODate("2019-03-02T04:50:46.183Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.230:27017",

"syncSourceHost" : "192.168.1.230:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1551502238, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1551502238, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

repset:PRIMARY>
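
The status document is long, and usually only a few fields per member matter. As a rough sketch, the plain JavaScript function below reduces an object with the same field names as the output above (set, members, name, stateStr, health) to a short summary. summarizeStatus is a hypothetical helper name, and it only reads fields that appear in the output shown here.

```javascript
// Hypothetical helper: condenses an rs.status()-shaped object.
function summarizeStatus(status) {
  const members = status.members.map((m) => ({
    name: m.name,
    state: m.stateStr,
    healthy: m.health === 1, // health 1 = up, 0 = down
  }));
  const primary = members.find((m) => m.state === "PRIMARY" && m.healthy);
  return {
    set: status.set,
    primary: primary ? primary.name : null,
    unhealthy: members.filter((m) => !m.healthy).map((m) => m.name),
    members,
  };
}

// Trimmed sample using values taken from the output above.
const sampleStatus = {
  set: "repset",
  members: [
    { name: "192.168.1.230:27017", health: 1, stateStr: "PRIMARY" },
    { name: "192.168.1.18:27017", health: 1, stateStr: "SECONDARY" },
    { name: "192.168.1.247:27017", health: 1, stateStr: "SECONDARY" },
  ],
};
const summary = summarizeStatus(sampleStatus);
```

In the mongo shell the same function could be given the return value of rs.status() directly, assuming the fields are present as shown.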

5) Check whether the current node is the primary (this can also be run on the other nodes)

repset:PRIMARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : 1,

"ismaster" : true,

"secondary" : false,

"primary" : "192.168.1.230:27017",

"me" : "192.168.1.230:27017",

"electionId" : ObjectId("7fffffff0000000000000001"),

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(1551502348, 1),

"t" : NumberLong(1)

},

"lastWriteDate" : ISODate("2019-03-02T04:52:28Z"),

"majorityOpTime" : {

"ts" : Timestamp(1551502348, 1),

"t" : NumberLong(1)

},

"majorityWriteDate" : ISODate("2019-03-02T04:52:28Z")

},

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 100000,

"localTime" : ISODate("2019-03-02T04:52:32.106Z"),

"logicalSessionTimeoutMinutes" : 30,

"minWireVersion" : 0,

"maxWireVersion" : 7,

"readOnly" : false,

"ok" : 1,

"operationTime" : Timestamp(1551502348, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1551502348, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

6) Log in to a secondary node and check whether it reports itself as primary or secondary

[root@mongodb02 ~]# mongo --host 192.168.1.18

repset:SECONDARY>

repset:SECONDARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : 1,

"ismaster" : false,

"secondary" : true,

"primary" : "192.168.1.230:27017",

"me" : "192.168.1.18:27017",

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(1551502458, 1),

"t" : NumberLong(1)

},

"lastWriteDate" : ISODate("2019-03-02T04:54:18Z"),

"majorityOpTime" : {

"ts" : Timestamp(1551502458, 1),

"t" : NumberLong(1)

},

"majorityWriteDate" : ISODate("2019-03-02T04:54:18Z")

},

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 100000,

"localTime" : ISODate("2019-03-02T04:54:27.819Z"),

"logicalSessionTimeoutMinutes" : 30,

"minWireVersion" : 0,

"maxWireVersion" : 7,

"readOnly" : false,

"ok" : 1,

"operationTime" : Timestamp(1551502458, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1551502458, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}
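
Comparing the two replies by eye works for three nodes; for scripting the same check, a small sketch follows. It uses only the ismaster, secondary, and primary fields visible in the output above. describeRole and agreedPrimary are hypothetical helper names, written in plain JavaScript.

```javascript
// Hypothetical helpers operating on rs.isMaster()-shaped objects.

// Role of the node that produced the reply.
function describeRole(reply) {
  if (reply.ismaster) return "primary";
  if (reply.secondary) return "secondary";
  return "other";
}

// The primary that every reply agrees on, or null if the nodes
// disagree or no reply names a primary.
function agreedPrimary(replies) {
  const primaries = new Set(replies.map((r) => r.primary));
  if (primaries.size !== 1) return null;
  const [primary] = primaries;
  return primary === undefined ? null : primary;
}

// Trimmed versions of the two replies shown above.
const fromMongodb01 = {
  ismaster: true,
  secondary: false,
  primary: "192.168.1.230:27017",
  me: "192.168.1.230:27017",
};
const fromMongodb02 = {
  ismaster: false,
  secondary: true,
  primary: "192.168.1.230:27017",
  me: "192.168.1.18:27017",
};
```

With these samples, describeRole gives "primary" for mongodb01 and "secondary" for mongodb02, and agreedPrimary returns the address of mongodb01.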

III. Log in to a secondary node to verify data replication (by default, a secondary does not allow reads)

1. Insert data on the primary, mongodb01

repset:PRIMARY> use test

switched to db test

repset:PRIMARY> db.test.save({id:"one",name:"wushaoyu"})

WriteResult({ "nInserted" : 1 })

2. On the secondary mongodb02, look for the data inserted on the primary

repset:SECONDARY> use test

switched to db test

repset:SECONDARY> show dbs

2019-03-02T13:07:06.503+0800 E QUERY [js] Error: listDatabases failed:{

"operationTime" : Timestamp(1551503218, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk",

"$clusterTime" : {

"clusterTime" : Timestamp(1551503218, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

Mongo.prototype.getDBs@src/mongo/shell/mongo.js:65:1

shellHelper.show@src/mongo/shell/utils.js:865:19

shellHelper@src/mongo/shell/utils.js:755:15

@(shellhelp2):1:1

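Note: the command above returned an error.

By default a secondary rejects read operations, so the data inserted on the primary cannot be read there until reads are enabled on the connection: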

repset:SECONDARY> db.getMongo().setSlaveOk();

repset:SECONDARY> db.test.find()

{ "_id" : ObjectId("5c7a0f3a6698c70ef075f0a8"), "id" : "one", "name" : "wushaoyu" }

repset:SECONDARY> show tables

test

Note: this shows that the secondary has replicated the data from the primary (the same steps can be repeated on mongodb03).
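
setSlaveOk() only affects the current shell connection. Applications usually express the same intent through a read preference, which can be set in the connection string with the replicaSet and readPreference options. The sketch below, in plain JavaScript, assembles such a string for the hosts in this article; buildReplicaSetUri is a hypothetical helper, and the choice of secondaryPreferred is only an example.

```javascript
// Read preference modes accepted in a MongoDB connection string.
const READ_PREFERENCES = [
  "primary",
  "primaryPreferred",
  "secondary",
  "secondaryPreferred",
  "nearest",
];

// Hypothetical helper: builds a replica set connection string.
function buildReplicaSetUri(hosts, setName, database, readPreference = "primary") {
  if (!READ_PREFERENCES.includes(readPreference)) {
    throw new Error("unknown read preference: " + readPreference);
  }
  const query =
    "replicaSet=" + encodeURIComponent(setName) +
    "&readPreference=" + readPreference;
  return "mongodb://" + hosts.join(",") + "/" + database + "?" + query;
}

const uri = buildReplicaSetUri(
  ["192.168.1.230:27017", "192.168.1.18:27017", "192.168.1.247:27017"],
  "repset",
  "test",
  "secondaryPreferred"
);
```

Listing all three members lets a driver find the current primary even after a failover, and secondaryPreferred sends reads to a secondary when one is available. Because replication is asynchronous, such reads may briefly lag behind the primary.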

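IV. Verify failover: stop the current primary and confirm high availability

First stop the primary, then check the state of the remaining nodes.

1) Stop mongod on the primary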

[root@mongodb01 ~]# ps -ef |grep mongod

mongod 27170 1 0 12:48 ? 00:00:06 /usr/bin/mongod -f /etc/mongod.conf

root 27335 5388 0 13:15 pts/3 00:00:00 grep mongod

[root@mongodb01 ~]# kill -9 27170

2) Log in to the other two replica set nodes and check whether a new primary has been elected

[root@mongodb02 ~]# mongo --host 192.168.1.18

repset:SECONDARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : 1,

"ismaster" : false,

"secondary" : true,

"primary" : "192.168.1.247:27017",

"me" : "192.168.1.18:27017",

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(1551503801, 1),

"t" : NumberLong(2)

},

"lastWriteDate" : ISODate("2019-03-02T05:16:41Z"),

"majorityOpTime" : {

"ts" : Timestamp(1551503801, 1),

"t" : NumberLong(2)

},

"majorityWriteDate" : ISODate("2019-03-02T05:16:41Z")

},

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 100000,

"localTime" : ISODate("2019-03-02T05:16:48.433Z"),

"logicalSessionTimeoutMinutes" : 30,

"minWireVersion" : 0,

"maxWireVersion" : 7,

"readOnly" : false,

"ok" : 1,

"operationTime" : Timestamp(1551503801, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1551503801, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

[root@mongodb03 ~]# mongo --host 192.168.1.247

repset:PRIMARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : 1,

"ismaster" : true,

"secondary" : false,

"primary" : "192.168.1.247:27017",

"me" : "192.168.1.247:27017",

"electionId" : ObjectId("7fffffff0000000000000002"),

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(1551503971, 1),

"t" : NumberLong(2)

},

"lastWriteDate" : ISODate("2019-03-02T05:19:31Z"),

"majorityOpTime" : {

"ts" : Timestamp(1551503971, 1),

"t" : NumberLong(2)

},

"majorityWriteDate" : ISODate("2019-03-02T05:19:31Z")

},

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 100000,

"localTime" : ISODate("2019-03-02T05:19:35.282Z"),

"logicalSessionTimeoutMinutes" : 30,

"minWireVersion" : 0,

"maxWireVersion" : 7,

"readOnly" : false,

"ok" : 1,

"operationTime" : Timestamp(1551503971, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1551503971, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Note: after the original primary went down, mongodb03 was elected as the new primary.
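
An election succeeds here because two of the three members are still running: a candidate needs votes from a majority of the voting members. The arithmetic can be sketched in plain JavaScript as follows; majorityNeeded and canElectPrimary are hypothetical helper names, and the sketch assumes every member has one vote.

```javascript
// Votes needed for a majority among `votingMembers` members.
function majorityNeeded(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Whether the members that can still reach each other are enough
// to elect a primary.
function canElectPrimary(votingMembers, reachableMembers) {
  return reachableMembers >= majorityNeeded(votingMembers);
}

// This article's set: 3 members, 1 stopped, 2 remaining.
const afterOneFailure = canElectPrimary(3, 2);  // true
const afterTwoFailures = canElectPrimary(3, 1); // false
```

Under the same assumption, a three-member set tolerates one failure but not two; with only one member left, no primary can be elected and the set stops accepting writes.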

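3) Insert data on the new primary, mongodb03, then check on mongodb02 whether it has been replicated (on mongodb02, run "use wsy" first to switch to that database; the step is omitted from the transcript below)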

[root@mongodb03 ~]# mongo --host 192.168.1.247

repset:PRIMARY> use wsy

switched to db wsy

repset:PRIMARY> db.wsy.save({"name":"wsy"})

WriteResult({ "nInserted" : 1 })

[root@mongodb02 ~]# mongo --host 192.168.1.18

repset:SECONDARY> db.getMongo().setSlaveOk();

repset:SECONDARY> show tables

wsy

repset:SECONDARY> db.wsy.find()

{ "_id" : ObjectId("5c7a133007ebf60ac5870bae"), "name" : "wsy" }

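This shows that the data was replicated successfully.

4) Start mongod again on the former primary, mongodb01, and check its state: mongodb01 is now a secondary of the current primary, and the data inserted while it was down has been replicated to it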

[root@mongodb01 ~]# /etc/init.d/mongod start

[root@mongodb01 ~]# mongo --host 192.168.1.230

repset:SECONDARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : 1,

"ismaster" : false,

"secondary" : true,

"primary" : "192.168.1.247:27017",

"me" : "192.168.1.230:27017",

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(1551504771, 1),

"t" : NumberLong(2)

},

"lastWriteDate" : ISODate("2019-03-02T05:32:51Z"),

"majorityOpTime" : {

"ts" : Timestamp(1551504771, 1),

"t" : NumberLong(2)

},

"majorityWriteDate" : ISODate("2019-03-02T05:32:51Z")

},

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 100000,

"localTime" : ISODate("2019-03-02T05:32:59.225Z"),

"logicalSessionTimeoutMinutes" : 30,

"minWireVersion" : 0,

"maxWireVersion" : 7,

"readOnly" : false,

"ok" : 1,

"operationTime" : Timestamp(1551504771, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1551504771, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

# Enable reads on the secondary (after switching to the wsy database with "use wsy")

repset:SECONDARY> db.getMongo().setSlaveOk();

repset:SECONDARY> db.wsy.find()

{ "_id" : ObjectId("5c7a133007ebf60ac5870bae"), "name" : "wsy" }

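Note: data inserted on the newly elected primary while the original primary was down is also replicated to the original primary once it rejoins the set.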