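Reposted from: https://blog.csdn.net/hz5034/article/details/79794615

Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/hz5034/article/details/79794615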

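NIC

A NIC works at the physical layer and the data link layer. It consists mainly of a PHY chip, a MAC chip, Tx/Rx FIFOs, and a DMA engine. The network cable connects to the PHY chip through a transformer, the PHY chip connects to the MAC chip over MII, and the MAC chip connects to the PCI bus.

The PHY chip is mainly responsible for: carrier sensing and collision detection (the signals that CSMA/CD relies on), analog-to-digital conversion, encoding/decoding, and serial-to-parallel conversion.

The MAC chip is mainly responsible for:

Converting between bit streams and frames: each frame on the wire is preceded by a 7-byte preamble and a 1-byte start frame delimiter (SFD)

CRC checking

Packet filtering: L2 filtering, VLAN filtering, manageability / host filtering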
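The first two MAC duties can be illustrated in software. The following is a minimal sketch, not how any particular MAC chip is implemented: it assumes the standard Ethernet CRC-32 (reflected polynomial 0xEDB88320) and the usual byte representation of the preamble (0x55) and SFD (0xD5). The function names crc32_ieee, mac_wrap_frame, and mac_check_frame are hypothetical and exist only for this illustration.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Bitwise CRC-32 as used for the Ethernet FCS (reflected form). */
uint32_t crc32_ieee(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; bit++) {
            if (crc & 1u)
                crc = (crc >> 1) ^ 0xEDB88320u;
            else
                crc >>= 1;
        }
    }
    return crc ^ 0xFFFFFFFFu;
}

/*
 * Transmit direction: prepend 7 preamble bytes and 1 SFD byte, append the
 * 4-byte FCS (least significant byte first).
 * Returns the number of bytes written, or 0 if out is too small.
 */
size_t mac_wrap_frame(const uint8_t *frame, size_t len,
                      uint8_t *out, size_t out_cap)
{
    size_t total = 8 + len + 4;
    if (out_cap < total)
        return 0;
    memset(out, 0x55, 7);            /* preamble */
    out[7] = 0xD5;                   /* start frame delimiter */
    memcpy(out + 8, frame, len);
    uint32_t fcs = crc32_ieee(frame, len);
    for (int i = 0; i < 4; i++)
        out[8 + len + i] = (uint8_t)(fcs >> (8 * i));
    return total;
}

/* Receive direction: returns 1 if preamble, SFD, and FCS all match, else 0. */
int mac_check_frame(const uint8_t *wire, size_t len)
{
    if (len < 12)
        return 0;
    for (size_t i = 0; i < 7; i++)
        if (wire[i] != 0x55)
            return 0;
    if (wire[7] != 0xD5)
        return 0;
    size_t payload_len = len - 12;
    uint32_t fcs = crc32_ieee(wire + 8, payload_len);
    for (int i = 0; i < 4; i++)
        if (wire[8 + payload_len + i] != (uint8_t)(fcs >> (8 * i)))
            return 0;
    return 1;
}
```

For the ASCII string "123456789", crc32_ieee() returns the well-known check value 0xCBF43926. Flipping any single bit of a wrapped frame makes mac_check_frame() return 0, which is the condition under which a real MAC would discard the frame.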

Intel's Gigabit NICs are typified by the 82575/82576, and its 10 Gigabit NICs by the 82598/82599.

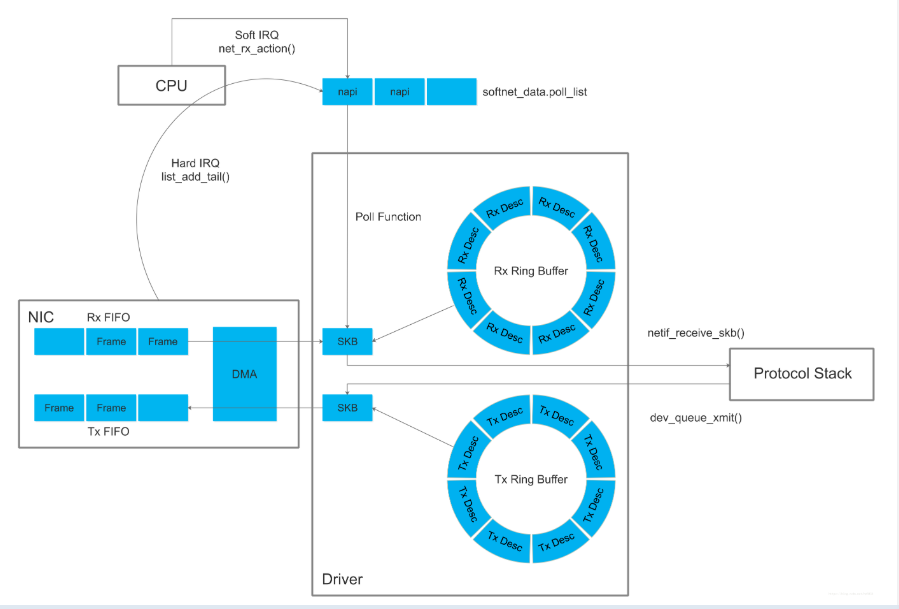

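Packet transmit and receive overview

ixgbe_adapter contains an array of ixgbe_q_vector (one ixgbe_q_vector per interrupt), and each ixgbe_q_vector contains a napi_struct.

The hard interrupt handler adds the napi_struct to the CPU's poll_list; the softirq handler net_rx_action() then walks the poll_list and calls each entry's poll function.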

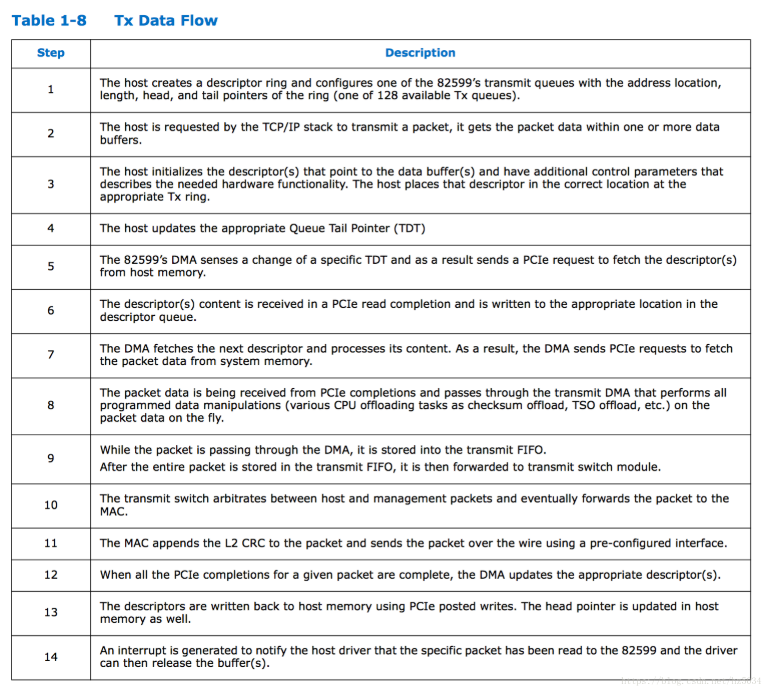
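The poll_list hand-off described above can be modelled in user space. This is a simplified sketch, not kernel code: the types napi_sketch and poll_list, and the functions napi_sketch_schedule, drain_poll, and net_rx_action_sketch are all hypothetical stand-ins, and the fixed-size array replaces the kernel's linked list. It assumes that an instance which consumes its whole budget stays scheduled, while one that finishes early is removed.

```c
#include <stddef.h>

#define MAX_NAPI 8

struct napi_sketch {
    int pending;      /* packets waiting in this queue */
    int scheduled;    /* 1 while the instance is on the poll list */
    int (*poll)(struct napi_sketch *n, int budget);
};

struct poll_list {
    struct napi_sketch *entries[MAX_NAPI];
    size_t count;
};

/* Hard-interrupt side: put the instance on the poll list once. */
int napi_sketch_schedule(struct poll_list *pl, struct napi_sketch *n)
{
    if (n->scheduled || pl->count == MAX_NAPI)
        return 0;                 /* already scheduled, or list full */
    n->scheduled = 1;
    pl->entries[pl->count++] = n;
    return 1;
}

/* Example poll function: process at most budget packets. */
int drain_poll(struct napi_sketch *n, int budget)
{
    int done = n->pending < budget ? n->pending : budget;
    n->pending -= done;
    return done;
}

/* Softirq side: one pass over the poll list, calling each poll function. */
int net_rx_action_sketch(struct poll_list *pl, int budget)
{
    int total = 0;
    size_t kept = 0;
    for (size_t i = 0; i < pl->count; i++) {
        struct napi_sketch *n = pl->entries[i];
        int done = n->poll(n, budget);
        total += done;
        if (done == budget)
            pl->entries[kept++] = n;   /* more work may remain */
        else
            n->scheduled = 0;          /* finished: leave the list */
    }
    pl->count = kept;
    return total;
}
```

With 100 pending packets and a budget of 64, the first pass processes 64 packets and keeps the instance scheduled; the second pass processes the remaining 36 and removes it. A second schedule attempt while the instance is already on the list is a no-op, which is why a burst of interrupts does not enqueue the same napi_struct repeatedly.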

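Transmit path

1. The NIC driver creates the tx descriptor ring (coherent DMA memory) and writes the ring's bus address to the NIC register TDBA.

2. The protocol stack hands the sk_buff down to the NIC driver through dev_queue_xmit().

3. The NIC driver places the sk_buff into the tx descriptor ring and updates TDT.

4. Once the DMA engine sees that TDT has changed, it locates the next descriptor to use in the tx descriptor ring.

5. The DMA engine copies the descriptor's data buffer to the Tx FIFO over the PCI bus.

6. When the copy completes, the MAC chip sends the packet out.

7. After transmission, the NIC updates TDH and raises a hard interrupt to tell the CPU to free the packet held in the data buffer.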
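The interplay of TDT and TDH in the steps above can be sketched as a small ring simulation. This is an illustrative model only: the struct tx_ring_sketch, its next_to_clean field, and the three functions are hypothetical names, the ring holds plain pointers instead of real descriptors, and the "keep one slot empty" full test is an assumption made to keep the sketch simple.

```c
#include <stddef.h>
#include <stdint.h>

#define TX_RING_SIZE 8

struct tx_ring_sketch {
    const void *buf[TX_RING_SIZE];
    uint32_t tdh;            /* head: next descriptor the hardware sends */
    uint32_t tdt;            /* tail: next descriptor the driver fills */
    uint32_t next_to_clean;  /* next completed descriptor to free */
};

/* Driver side (step 3): queue one packet and advance TDT. */
int tx_enqueue(struct tx_ring_sketch *r, const void *pkt)
{
    uint32_t next = (r->tdt + 1) % TX_RING_SIZE;
    if (next == r->next_to_clean)
        return -1;               /* ring full: one slot is kept empty */
    r->buf[r->tdt] = pkt;
    r->tdt = next;
    return 0;
}

/* Hardware side (steps 4-7): send every descriptor between TDH and TDT. */
int tx_hw_send(struct tx_ring_sketch *r)
{
    int sent = 0;
    while (r->tdh != r->tdt) {
        r->tdh = (r->tdh + 1) % TX_RING_SIZE;   /* descriptor consumed */
        sent++;
    }
    return sent;
}

/* Interrupt side (step 7): release buffers the hardware has finished with. */
int tx_clean(struct tx_ring_sketch *r)
{
    int freed = 0;
    while (r->next_to_clean != r->tdh) {
        r->buf[r->next_to_clean] = NULL;
        r->next_to_clean = (r->next_to_clean + 1) % TX_RING_SIZE;
        freed++;
    }
    return freed;
}
```

In this model the driver only ever writes TDT and the hardware only ever writes TDH, so neither side needs a lock to agree on which descriptors it owns. The ring reports full until tx_clean() has run, which mirrors why the transmit-complete interrupt in step 7 is needed before buffers can be reused.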

Tx Ring Buffer

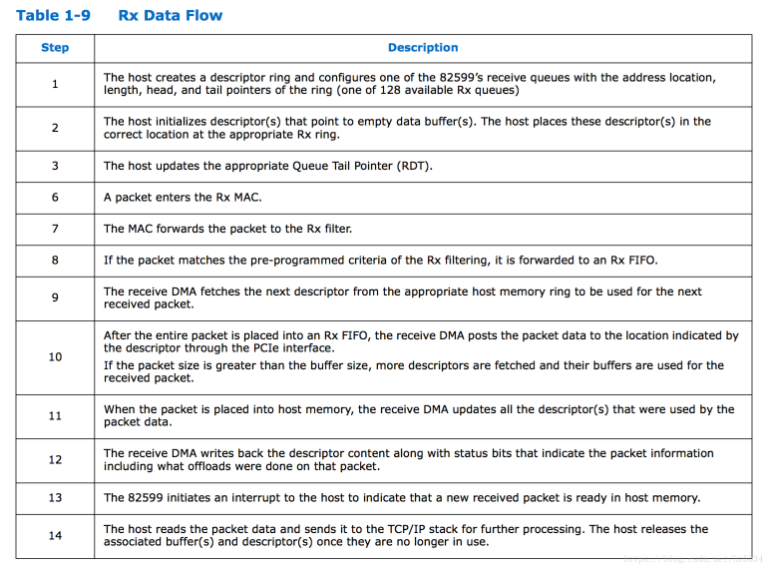

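Receive path

1. The NIC driver creates the rx descriptor ring (coherent DMA memory) and writes the ring's bus address to the NIC register RDBA.

2. The NIC driver allocates an sk_buff and a data buffer for each descriptor, sets up a streaming DMA mapping for the data buffer, and stores the buffer's bus address in the descriptor.

3. The NIC receives a packet and writes it into the Rx FIFO.

4. The DMA engine locates the next descriptor to use in the rx descriptor ring.

5. Once the whole packet has been written into the Rx FIFO, the DMA engine copies it from the Rx FIFO to the descriptor's data buffer over the PCI bus.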

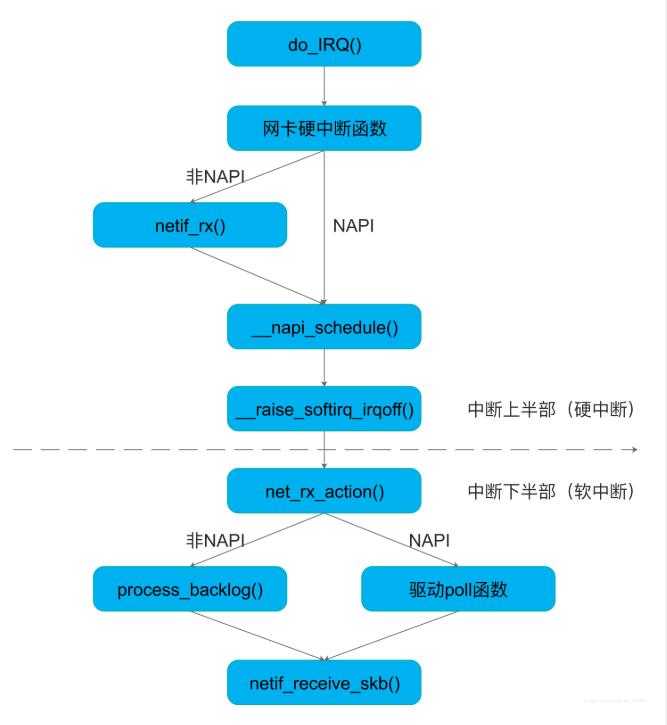

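6. When the copy completes, the NIC raises a hard interrupt to tell the CPU that a new packet is in the data buffer, and the CPU runs the hard interrupt handler:

NAPI (e1000 as the example): e1000_intr() -> __napi_schedule() -> __raise_softirq_irqoff(NET_RX_SOFTIRQ)

Non-NAPI (dm9000 as the example): dm9000_interrupt() -> dm9000_rx() -> netif_rx() -> napi_schedule() -> __napi_schedule() -> __raise_softirq_irqoff(NET_RX_SOFTIRQ)

7. The softirq handler net_rx_action() then runs, either on exit from the hard interrupt or in the ksoftirqd thread:

NAPI (e1000 as the example): net_rx_action() -> e1000_clean() -> e1000_clean_rx_irq() -> e1000_receive_skb() -> netif_receive_skb()

Non-NAPI (dm9000 as the example): net_rx_action() -> process_backlog() -> netif_receive_skb()

8. The NIC driver hands the sk_buff up to the protocol stack through netif_receive_skb().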

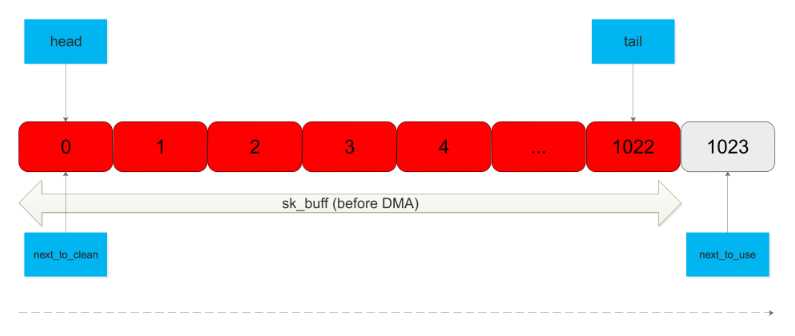

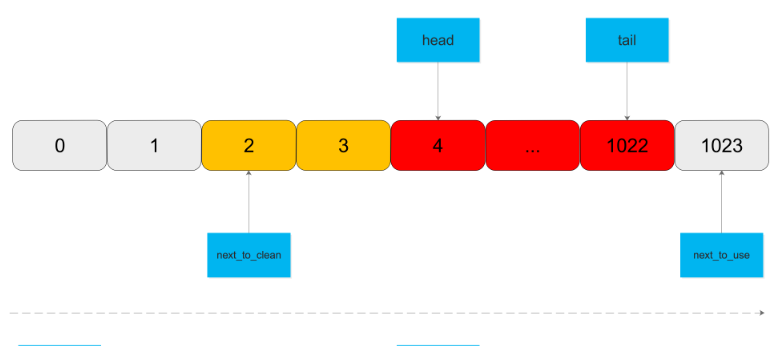

Rx Ring Buffer

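SW refills the N descriptors starting at next_to_use with sk_buffs, then sets next_to_use += N and tail = next_to_use - 1 (written to the NIC register RDT).

HW copies packets into the sk_buffs of the M descriptors starting at head and sets DD (Descriptor Done) on each, then sets head += M.

SW removes the L sk_buffs starting at next_to_clean from the Rx ring buffer and hands them to the protocol stack (next_to_clean += L), then refills the L descriptors starting at next_to_use (next_to_use += L, tail = next_to_use - 1).

Note: after every refill, tail, next_to_use, and next_to_clean are adjacent to one another (all indices wrap around at the ring size).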
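The index arithmetic above is easier to follow when run on a small ring. The following is a minimal user-space sketch under stated assumptions: the ring has 8 descriptors, booleans stand in for attached sk_buffs and the DD bit, and the names rx_ring_sketch, rx_sw_refill, rx_hw_receive, and rx_sw_clean are hypothetical. It also assumes the hardware may fill descriptors from head up to, but not including, tail.

```c
#include <stdbool.h>

#define RX_RING_SIZE 8u

struct rx_ring_sketch {
    bool has_buf[RX_RING_SIZE];   /* descriptor has an sk_buff attached */
    bool dd[RX_RING_SIZE];        /* Descriptor Done, set by hardware */
    unsigned head;                /* advanced by hardware */
    unsigned tail;                /* RDT, written by software */
    unsigned next_to_use;         /* next descriptor software refills */
    unsigned next_to_clean;       /* next descriptor software harvests */
};

static unsigned rx_wrap(unsigned i)
{
    return i % RX_RING_SIZE;
}

/* SW: attach buffers to n descriptors, then publish the new tail. */
void rx_sw_refill(struct rx_ring_sketch *r, unsigned n)
{
    if (n == 0)
        return;
    for (unsigned k = 0; k < n; k++) {
        unsigned i = rx_wrap(r->next_to_use + k);
        r->has_buf[i] = true;
        r->dd[i] = false;
    }
    r->next_to_use = rx_wrap(r->next_to_use + n);
    r->tail = rx_wrap(r->next_to_use + RX_RING_SIZE - 1);
}

/* HW: receive up to m packets into descriptors from head up to tail. */
unsigned rx_hw_receive(struct rx_ring_sketch *r, unsigned m)
{
    unsigned done = 0;
    while (done < m && r->head != r->tail && r->has_buf[r->head]) {
        r->dd[r->head] = true;
        r->head = rx_wrap(r->head + 1);
        done++;
    }
    return done;
}

/* SW: harvest every completed descriptor, then refill the same number. */
unsigned rx_sw_clean(struct rx_ring_sketch *r)
{
    unsigned cleaned = 0;
    while (r->dd[r->next_to_clean]) {
        r->dd[r->next_to_clean] = false;
        r->has_buf[r->next_to_clean] = false;
        r->next_to_clean = rx_wrap(r->next_to_clean + 1);
        cleaned++;
    }
    rx_sw_refill(r, cleaned);
    return cleaned;
}
```

Starting from a zeroed ring, an initial rx_sw_refill(&r, 7) gives next_to_use = 7 and tail = 6. After the hardware receives 4 packets and rx_sw_clean() runs, the indices become tail = 2, next_to_use = 3, next_to_clean = 4, which is the "adjacent" arrangement the note describes.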

Interrupt top and bottom halves

do_IRQ() is the CPU's common entry point for hard interrupts; it calls the registered hard interrupt handler.

    // e1000_request_irq() registers the hard interrupt; the handler is e1000_intr()
    irq_handler_t handler = e1000_intr;
    err = request_irq(adapter->pdev->irq, handler, irq_flags, netdev->name,
                      netdev);

    // dm9000_open() registers the hard interrupt; the handler is dm9000_interrupt()
    if (request_irq(dev->irq, &dm9000_interrupt, irqflags, dev->name, dev))
        return -EAGAIN;

    // net_dev_init() registers the softirq; the handler is net_rx_action()
    open_softirq(NET_RX_SOFTIRQ, net_rx_action);

    // e1000_probe() registers e1000_clean() as the NAPI poll function
    netif_napi_add(netdev, &adapter->napi, e1000_clean, 64);

    // net_dev_init() registers process_backlog() as the non-NAPI poll function
    queue->backlog.poll = process_backlog;

netif_rx()

netif_rx() appends the skb to the input_pkt_queue of the current CPU's softnet_data.

    /**
     * netif_rx - post buffer to the network code
     * @skb: buffer to post
     *
     * This function receives a packet from a device driver and queues it for
     * the upper (protocol) levels to process. It always succeeds. The buffer
     * may be dropped during processing for congestion control or by the
     * protocol layers.
     *
     * return values:
     * NET_RX_SUCCESS (no congestion)
     * NET_RX_DROP (packet was dropped)
     *
     */
    int netif_rx(struct sk_buff *skb)
    {
        struct softnet_data *queue;
        unsigned long flags;

        /* if netpoll wants it, pretend we never saw it */
        if (netpoll_rx(skb))
            return NET_RX_DROP;

        if (!skb->tstamp.tv64)
            net_timestamp(skb);

        /*
         * The code is rearranged so that the path is the most
         * short when CPU is congested, but is still operating.
         */
        local_irq_save(flags);
        queue = &__get_cpu_var(softnet_data); // get this CPU's softnet_data

        __get_cpu_var(netdev_rx_stat).total++;
        if (queue->input_pkt_queue.qlen <= netdev_max_backlog) { // queue length does not exceed netdev_max_backlog
            if (queue->input_pkt_queue.qlen) { // non-empty queue: queue->backlog is already on poll_list
    enqueue:
                __skb_queue_tail(&queue->input_pkt_queue, skb); // append skb to the tail of the queue
                local_irq_restore(flags);
                return NET_RX_SUCCESS;
            }

            napi_schedule(&queue->backlog); // empty queue: schedule queue->backlog first
            goto enqueue; // then append skb to the tail of the queue
        }

        __get_cpu_var(netdev_rx_stat).dropped++;
        local_irq_restore(flags);

        kfree_skb(skb);
        return NET_RX_DROP;
    }
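The queueing decision in netif_rx() can be isolated in a few lines. This is a simplified model rather than kernel code: backlog_sketch and netif_rx_sketch are hypothetical names, the return codes are local stand-ins for NET_RX_SUCCESS and NET_RX_DROP, and a counter replaces the real sk_buff queue. It keeps the same comparison as the listing above (qlen <= max_backlog).

```c
enum { RX_SUCCESS_SKETCH = 0, RX_DROP_SKETCH = 1 };

struct backlog_sketch {
    int qlen;         /* stands in for input_pkt_queue.qlen */
    int max_backlog;  /* stands in for netdev_max_backlog */
    int schedules;    /* how many times the backlog was scheduled */
    int dropped;      /* how many packets were dropped */
};

/* Mirror of the netif_rx() decision: enqueue, schedule if needed, or drop. */
int netif_rx_sketch(struct backlog_sketch *q)
{
    if (q->qlen <= q->max_backlog) {
        if (q->qlen == 0)
            q->schedules++;   /* empty queue: backlog not yet on poll_list */
        q->qlen++;            /* append the packet to the queue tail */
        return RX_SUCCESS_SKETCH;
    }
    q->dropped++;             /* queue is over the limit: drop the packet */
    return RX_DROP_SKETCH;
}
```

Two properties fall out of this model. The backlog is scheduled only when the queue goes from empty to non-empty, so a burst of packets triggers one poll rather than one per packet. And because the test is "less than or equal", the queue in this sketch can grow to max_backlog + 1 entries before the first drop.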


---------------------

Author: hz5034

Source: CSDN
