Logs can be divided into two categories: business logs and program logs.

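Business logs

Business logs generally need to be kept long-term so they can be queried whenever a problem comes up later. ELK is a popular logging stack at the moment, but container logs are best not written to disk, so the Logstash client should not be packaged inside the container.

Logstash's UDP input lets logs skip the disk entirely, but the application then has to send its logs to the Logstash UDP port itself. The configuration is as follows: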

Client configuration:

Architecture:

Container --> Logstash client --> Redis --> Logstash server --> Elasticsearch (storage) --> Kibana (display)

    input {
      # Read local log files
      file {
        type => "nginx_access_logs"
        path => "/data/logs/nginx/*.log"
        codec => json { charset => "UTF-8" }
      }
      # Receive logs over the network
      udp {
        host => "127.0.0.1"
        port => "2900"
        type => "logstash_udp_ingress_logs"
        codec => json { charset => "UTF-8" }
      }
    }
    output {
      if [type] == "nginx_access_logs" {
        redis {
          host => "elk.xxx.cn"
          port => "6379"
          data_type => "list"                 # how the Logstash redis plugin operates
          key => "nginx_access_logs:redis"    # key name stored in Redis
          password => "xxx123"                # password, if authentication is enabled
          codec => json { charset => ["UTF-8"] }
        }
      } else if [type] == "logstash_udp_ingress_logs" {
        redis {
          host => "elk-public.xxx.cn"
          port => "6379"
          data_type => "list"                 # how the Logstash redis plugin operates
          key => "logstash_udp_ingress_logs"  # key name stored in Redis
          password => "xxx123"                # password, if authentication is enabled
          codec => json { charset => ["UTF-8"] }
        }
      }
    }
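The udp input above uses the json codec, so each datagram should carry one JSON document. As a rough illustration of what "throwing logs at the UDP port" means, here is a minimal sketch using Node's built-in dgram module. The helper names buildLogEvent and sendLog and the field names level and message are assumptions made for this example, not something the Logstash configuration requires.

```javascript
// Sketch: ship one JSON log event to the local Logstash udp input.
const dgram = require('dgram');

// Assumed to match the udp input shown above (127.0.0.1:2900).
const LOGSTASH_HOST = '127.0.0.1';
const LOGSTASH_PORT = 2900;

// Hypothetical helper: serialize one log event as a JSON string.
function buildLogEvent(level, message, fields) {
  return JSON.stringify(Object.assign({
    '@timestamp': new Date().toISOString(),
    level: level,
    message: message
  }, fields || {}));
}

// Hypothetical helper: fire-and-forget send. UDP gives no delivery
// guarantee, so a dropped datagram means a lost log line.
function sendLog(level, message, fields, done) {
  const socket = dgram.createSocket('udp4');
  const payload = Buffer.from(buildLogEvent(level, message, fields));
  socket.send(payload, LOGSTASH_PORT, LOGSTASH_HOST, function (err) {
    socket.close();
    if (done) done(err);
  });
}
```

A call such as `sendLog('INFO', 'order created', { project: 'Order' })` would emit one event. In practice a logging library with a UDP appender does the same job with less code.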

Taking a Node.js program as an example, here is how to ship logs:

log4js configuration file:

    {
      "appenders": [
        {
          "host": "<192.168.1.1>",
          "port": 2900,
          "type": "logstashUDP",
          "logType": "logstash_udp_ingress_logs",
          "category": "DEBUG::",
          "fields": {
            "project": "Order",
            "field2": "Message"
          }
        },
        {
          "type": "console"
        }
      ]
    }

Node.js code:

    var log4js = require('log4js');

    log4js.configure('./logs/log4js.json');
    var logger = log4js.getLogger('DEBUG::');
    // setLevel is the log4js 1.x API; newer releases use logger.level = 'INFO'
    logger.setLevel('INFO');

    // General trace message (assigned without var, so it becomes a global)
    EWTRACE = function (Message) {
        logger.info(Message + "\r");
    };

    // Accepts a parameter and logs it as-is
    EWTRACEIFY = function (Message) {
        logger.info(Message);
    };

    // Tip / reminder
    EWTRACETIP = function (Message) {
        logger.warn("Tip:" + JSON.stringify(Message) + "\r");
    };

    // Error
    EWTRACEERROR = function (Message) {
        logger.error(Message);
    };

Server configuration:

Starting a Redis instance is not covered here.

Logstash server configuration:

    input {
      # Read the local (file-based) logs from Redis
      redis {
        host => "192.168.1.1"
        port => 6379
        type => "redis-input"
        data_type => "list"
        key => "nginx_access_logs:redis"
        # key => "nginx_access_*"
        password => "xxx123"
        codec => json { charset => ["UTF-8"] }
      }
      # Read the UDP logs from Redis
      redis {
        host => "192.168.1.1"
        port => 6379
        type => "redis-input"
        data_type => "list"
        key => "logstash_udp_ingress_logs"
        # key => "logstash_udp_ingres*"
        password => "xxx123"
        codec => json { charset => ["UTF-8"] }
      }
    }
    output {
      if [type] == "nginx_access_logs" {
      # if [type] == "nginx_access_*" {
        elasticsearch {
          hosts => ["http://192.168.1.1:9200"]
          flush_size => 2000
          idle_flush_time => 15
          codec => json { charset => ["UTF-8"] }
          index => "logstash-nginx_access_logs-%{+YYYY.MM.dd}"
        }
        stdout { codec => rubydebug }
      } else if [type] == "logstash_udp_ingress_logs" {
        elasticsearch {
          hosts => ["http://192.168.1.1:9200"]
          flush_size => 2000
          idle_flush_time => 15
          codec => json { charset => ["UTF-8"] }
          index => "logstash-udp_ingress_logs-%{+YYYY.MM.dd}"
        }
      }
      stdout { codec => plain }
    }
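The index settings above produce one Elasticsearch index per day, because Logstash expands `%{+YYYY.MM.dd}` from the event's @timestamp in UTC. The following sketch only illustrates that naming rule; dailyIndexName is a hypothetical helper, not part of Logstash.

```javascript
// Sketch: reproduce the "logstash-<suffix>-%{+YYYY.MM.dd}" naming rule.
function pad2(n) {
  return String(n).padStart(2, '0');
}

// Hypothetical helper: suffix is e.g. "nginx_access_logs",
// timestamp is anything the Date constructor accepts.
function dailyIndexName(suffix, timestamp) {
  const d = new Date(timestamp);
  // The date part is taken in UTC, not in local time.
  const day = d.getUTCFullYear() + '.' +
    pad2(d.getUTCMonth() + 1) + '.' +
    pad2(d.getUTCDate());
  return 'logstash-' + suffix + '-' + day;
}

console.log(dailyIndexName('nginx_access_logs', '2018-03-06T07:30:00+08:00'));
```

Note that an event logged at 07:30 on 6 March in UTC+8 lands in the index for 5 March, which is worth keeping in mind when searching by date in Kibana.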

Configure and tune Elasticsearch and Kibana according to your own environment.

A commonly used configuration for Elasticsearch machines dedicated to logs:

    cluster.name: logging-es
    node.name: logging-es01
    path.data: /es-data01/data,/es-data02/data
    path.logs: /data/logs/elasticsearch
    network.host: 192.168.1.1
    node.master: true
    node.data: true
    node.attr.tag: hot
    discovery.zen.fd.ping_timeout: 100s
    discovery.zen.ping_timeout: 100s
    discovery.zen.fd.ping_interval: 30s
    discovery.zen.fd.ping_retries: 10
    discovery.zen.ping.unicast.hosts: ["logging-es01", "logging-es02", "logging-es03", "logging-es04"]
    discovery.zen.minimum_master_nodes: 2
    action.destructive_requires_name: false
    bootstrap.memory_lock: false
    cluster.routing.allocation.enable: all
    bootstrap.system_call_filter: false
    thread_pool.bulk.queue_size: 6000
    cluster.routing.allocation.node_concurrent_recoveries: 128
    indices.recovery.max_bytes_per_sec: 200mb
    indices.memory.index_buffer_size: 20%
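One setting worth double-checking is discovery.zen.minimum_master_nodes. The Elasticsearch documentation for zen discovery recommends a quorum of (master-eligible nodes / 2) + 1 to avoid split brain. The sketch below just evaluates that formula; how many of the four listed hosts are actually master-eligible is not stated here, so the node counts are assumptions.

```javascript
// Sketch: quorum formula recommended for discovery.zen.minimum_master_nodes.
function minimumMasterNodes(masterEligibleNodes) {
  return Math.floor(masterEligibleNodes / 2) + 1;
}

// Assumption: all four hosts in the unicast list are master-eligible.
console.log(minimumMasterNodes(4)); // 3
// Assumption: only three of them are master-eligible.
console.log(minimumMasterNodes(3)); // 2
```

So the value 2 in the configuration fits a cluster with three master-eligible nodes. If all four hosts can become master, 3 would be the safer value.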

Program logs

Program logs generally mean debug logs or crash logs. They do not need to be kept long-term, but their volume can be very large.

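OpenShift (oc) provides a log aggregation solution with the following architecture:

Container console --> Fluentd collector (on every node) --> Elasticsearch (storage) --> Kibana (display)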
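Because Fluentd on each node collects whatever a container writes to its console, the application only has to print to stdout. A minimal sketch, assuming one JSON object per line is wanted; formatLogLine and logToConsole are hypothetical helper names, and whether the JSON is parsed into fields depends on how Fluentd is configured.

```javascript
// Sketch: write structured log lines to the container console (stdout).

// Hypothetical helper: one JSON object per log line.
function formatLogLine(level, message, fields) {
  return JSON.stringify(Object.assign({
    time: new Date().toISOString(),
    level: level,
    message: message
  }, fields || {}));
}

// Hypothetical helper: nothing is written to a file inside the container.
function logToConsole(level, message, fields) {
  process.stdout.write(formatLogLine(level, message, fields) + '\n');
}

logToConsole('debug', 'cache miss', { project: 'Order' });
```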

Add the following to /etc/ansible/hosts:

    [OSEv3:vars]
    openshift_logging_install_logging=true
    openshift_logging_master_url=https://www.oc.example.cn:8443
    openshift_logging_public_master_url=https://kubernetes.default.svc.cluster.local
    openshift_logging_kibana_hostname=kibana.oc.example.cn
    openshift_logging_kibana_ops_hostname=kibana.oc.example.cn
    openshift_logging_es_cluster_size=2
    openshift_logging_master_public_url=https://www.oc.example.cn:8443

Run the installation:

    # ansible-playbook openshift-ansible/playbooks/openshift-logging/config.yml

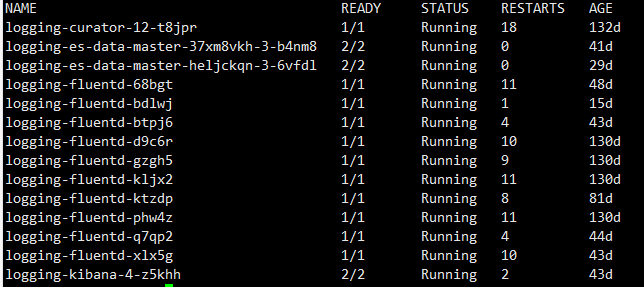

Check the installation:

    # oc project logging
    # oc get pod

Once the installation is finished, you can edit the ConfigMaps to configure ES and the log retention period:

    # oc get configmap

To set the log retention period, edit logging-curator:

    .operations:
      delete:
        days: 2
    .defaults:
      delete:
        days: 7
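To make the effect of these two values concrete, the sketch below approximates the decision for a single index: operations indices are dropped after 2 days, everything else after 7. It is only an illustration, not Curator's actual implementation. The index names ending in ".YYYY.MM.DD" and the helper names are assumptions made for this example.

```javascript
// Sketch: approximate the retention rule configured above.
const RETENTION_DAYS = { '.operations': 2, '.defaults': 7 };
const DAY_MS = 24 * 60 * 60 * 1000;

// Hypothetical helper: assumes index names end in ".YYYY.MM.DD".
function parseIndexDate(indexName) {
  const m = /(\d{4})\.(\d{2})\.(\d{2})$/.exec(indexName);
  return m ? Date.UTC(Number(m[1]), Number(m[2]) - 1, Number(m[3])) : null;
}

// Hypothetical helper: true when the index is older than its retention.
function shouldDelete(indexName, nowMs) {
  const created = parseIndexDate(indexName);
  if (created === null) return false; // no date suffix, leave it alone
  const days = indexName.startsWith('.operations.')
    ? RETENTION_DAYS['.operations']
    : RETENTION_DAYS['.defaults'];
  return nowMs - created > days * DAY_MS;
}

const now = Date.UTC(2018, 0, 10); // 10 January 2018
console.log(shouldDelete('.operations.2018.01.07', now));   // true
console.log(shouldDelete('project.order.2018.01.07', now)); // false
```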