Required Maven dependencies

<!-- JDBC driver -->
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>5.1.44</version>
</dependency>
<!-- HttpClient support -->
<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpclient</artifactId>
    <version>4.5.2</version>
</dependency>
<!-- jsoup support -->
<dependency>
    <groupId>org.jsoup</groupId>
    <artifactId>jsoup</artifactId>
    <version>1.10.1</version>
</dependency>
<!-- log4j logging support -->
<dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>1.2.16</version>
</dependency>
<!-- Ehcache support -->
<dependency>
    <groupId>net.sf.ehcache</groupId>
    <artifactId>ehcache</artifactId>
    <version>2.10.3</version>
</dependency>
<!-- Commons IO support -->
<dependency>
    <groupId>commons-io</groupId>
    <artifactId>commons-io</artifactId>
    <version>2.5</version>
</dependency>

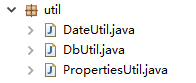

Required helper classes

Helper class code

DateUtil

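import java.text.SimpleDateFormat;
import java.util.Date;

/**
 * Date utility class
 * @author user
 */
public class DateUtil {

    /**
     * Returns today's date as a year/month/day path (yyyy/MM/dd),
     * used as the per-day folder for downloaded images
     * @return the formatted date path
     * @throws Exception
     */
    public static String getCurrentDatePath() throws Exception {
        Date date = new Date();
        // SimpleDateFormat is not thread-safe, so a new instance is created on each call
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy/MM/dd");
        return sdf.format(date);
    }

    public static void main(String[] args) {
        try {
            // Print the current date in the desired format
            System.out.println(getCurrentDatePath());
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}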
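On Java 8 or later, the same yyyy/MM/dd path can be produced with `java.time`, whose `DateTimeFormatter` is immutable and thread-safe, so one shared instance is enough. This is a minimal sketch under that assumption; the class and method names (`DatePathSketch`, `datePath`, `currentDatePath`) are hypothetical and not part of the original project.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical alternative to DateUtil.getCurrentDatePath() using java.time (Java 8+).
// DateTimeFormatter is immutable and thread-safe, unlike SimpleDateFormat,
// so a single static instance can be shared.
class DatePathSketch {

    private static final DateTimeFormatter DATE_PATH = DateTimeFormatter.ofPattern("yyyy/MM/dd");

    // Formats the given date as yyyy/MM/dd
    static String datePath(LocalDate date) {
        return date.format(DATE_PATH);
    }

    // Same output shape as DateUtil.getCurrentDatePath(), for today's date
    static String currentDatePath() {
        return datePath(LocalDate.now());
    }

    public static void main(String[] args) {
        System.out.println(datePath(LocalDate.of(2018, 11, 5))); // prints 2018/11/05
        System.out.println(currentDatePath());
    }
}
```

Unlike the original, these methods declare no checked exception, so callers do not need a try/catch.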

DbUtil

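import java.sql.Connection;
import java.sql.DriverManager;

/**
 * Database utility class
 * @author user
 */
public class DbUtil {

    /**
     * Opens a connection using the settings in crawler.properties
     * @return an open JDBC connection
     * @throws Exception
     */
    public Connection getCon() throws Exception {
        // Load the JDBC driver class named by the jdbcName key
        Class.forName(PropertiesUtil.getValue("jdbcName"));
        Connection con = DriverManager.getConnection(PropertiesUtil.getValue("dbUrl"), PropertiesUtil.getValue("dbUserName"), PropertiesUtil.getValue("dbPassword"));
        return con;
    }

    /**
     * Closes the connection
     * @param con
     * @throws Exception
     */
    public void closeCon(Connection con) throws Exception {
        if (con != null) {
            con.close();
        }
    }

    public static void main(String[] args) {
        DbUtil dbUtil = new DbUtil();
        Connection con = null;
        try {
            con = dbUtil.getCon();
            System.out.println("Database connection succeeded");
        } catch (Exception e) {
            e.printStackTrace();
            System.out.println("Database connection failed");
        } finally {
            try {
                // Release the test connection
                dbUtil.closeCon(con);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
}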

PropertiesUtil

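import java.io.IOException;
import java.io.InputStream;
import java.util.Properties;

/**
 * Properties utility class
 * @author user
 */
public class PropertiesUtil {

    /**
     * Returns the value for a key in crawler.properties
     * @param key
     * @return the value, or null if the key or the file is missing
     */
    public static String getValue(String key) {
        Properties prop = new Properties();
        // crawler.properties must be at the classpath root; try-with-resources closes the stream
        try (InputStream in = PropertiesUtil.class.getResourceAsStream("/crawler.properties")) {
            if (in == null) {
                System.err.println("crawler.properties not found on the classpath");
                return null;
            }
            prop.load(in);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return prop.getProperty(key);
    }
}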
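`PropertiesUtil.getValue()` reopens and reparses crawler.properties on every call, and the crawler calls it once per downloaded image. A minimal sketch of loading the file once and reusing the parsed `Properties`; the class `CachedProperties` and its methods are hypothetical names, and the `Reader` overload exists only to make the sketch easy to test.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.Reader;
import java.io.StringReader;
import java.util.Properties;

// Hypothetical variant of PropertiesUtil that parses the file once
// and then answers every getValue() call from memory.
class CachedProperties {

    private final Properties props = new Properties();

    // Loads from a classpath resource such as "/crawler.properties"
    static CachedProperties fromClasspath(String resource) throws IOException {
        CachedProperties cp = new CachedProperties();
        try (InputStream in = CachedProperties.class.getResourceAsStream(resource)) {
            if (in == null) {
                throw new IOException("Resource not found on classpath: " + resource);
            }
            cp.props.load(in);
        }
        return cp;
    }

    // Loads from any Reader (convenient for tests)
    static CachedProperties fromReader(Reader reader) throws IOException {
        CachedProperties cp = new CachedProperties();
        cp.props.load(reader);
        return cp;
    }

    // Returns the value, or null if the key is absent
    String getValue(String key) {
        return props.getProperty(key);
    }

    public static void main(String[] args) throws IOException {
        CachedProperties cp = fromReader(
                new StringReader("dbUserName=root\nblogImages=D://blogCrawler/blogImages/\n"));
        System.out.println(cp.getValue("dbUserName")); // prints root
        System.out.println(cp.getValue("blogImages")); // prints D://blogCrawler/blogImages/
    }
}
```

In the crawler, one instance could be created at startup with `fromClasspath("/crawler.properties")` and kept in a static field; a missing file then fails fast with an `IOException` instead of a later `NullPointerException`.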

Required configuration files

crawler.properties

dbUrl=jdbc:mysql://localhost:3306/db_blogs?autoReconnect=true&characterEncoding=utf8
dbUserName=root
dbPassword=123
jdbcName=com.mysql.jdbc.Driver
ehcacheXmlPath=D://blogCrawler/ehcache.xml
blogImages=D://blogCrawler/blogImages/
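The crawler builds each local image path by plain string concatenation: the `blogImages` value, the yyyy/MM/dd date folder, `/`, a random UUID, `.`, and the file suffix. A small sketch of that step; `ImagePathSketch.buildImagePath` is a hypothetical helper that only mirrors the expression used in the download code.

```java
import java.util.UUID;

// Hypothetical helper mirroring how the crawler builds a local image path:
// blogImages + dateDir + "/" + uuid + "." + suffix
class ImagePathSketch {

    static String buildImagePath(String blogImagesDir, String dateDir, String uuid, String suffix) {
        // No separator is inserted after blogImagesDir, so it must end with "/"
        return blogImagesDir + dateDir + "/" + uuid + "." + suffix;
    }

    public static void main(String[] args) {
        String path = buildImagePath("D://blogCrawler/blogImages/", "2018/11/05",
                UUID.randomUUID().toString(), "jpeg");
        // Shape: D://blogCrawler/blogImages/2018/11/05/<random-uuid>.jpeg
        System.out.println(path);
    }
}
```

Because nothing is inserted between `blogImages` and the date folder, the configured value must keep its trailing slash, as it does above.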

log4j.properties

log4j.rootLogger=INFO, stdout,D

#Console
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.Target = System.out
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=[%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

#D
log4j.appender.D = org.apache.log4j.RollingFileAppender
log4j.appender.D.File = C://blogCrawler/bloglogs/log.log
log4j.appender.D.MaxFileSize=100KB
log4j.appender.D.MaxBackupIndex=100
log4j.appender.D.Append = true
log4j.appender.D.layout = org.apache.log4j.PatternLayout
log4j.appender.D.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n
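A `RollingFileAppender` keeps the active file plus at most `MaxBackupIndex` rolled-over backups, so the `D` appender settings above cap the log directory at roughly:

$$
(\text{MaxBackupIndex} + 1) \times \text{MaxFileSize} = (100 + 1) \times 100\ \text{KB} = 10\,100\ \text{KB} \approx 9.9\ \text{MB}
$$

A file can grow slightly past `MaxFileSize` before it rolls over, so treat this as an approximate bound.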

Implementation

(This part of the code downloads an image from a remote server and saves it locally.)

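import java.io.File;
import java.io.IOException;
import java.util.UUID;

import org.apache.commons.io.FileUtils;
import org.apache.http.HttpEntity;
import org.apache.http.client.ClientProtocolException;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.entity.ContentType;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.log4j.Logger;

public class DownloadImg {

    private static Logger logger = Logger.getLogger(DownloadImg.class);

    private static String IMGURL = "http://img16.3lian.com/gif2016/q7/20/88.jpg";

    public static void main(String[] args) {
        logger.info("Start downloading image " + IMGURL);
        CloseableHttpClient httpClient = HttpClients.createDefault();
        HttpGet httpGet = new HttpGet(IMGURL);
        // Connect timeout 5 s, socket (read) timeout 8 s
        RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
        httpGet.setConfig(config);
        CloseableHttpResponse response = null;
        try {
            response = httpClient.execute(httpGet);
            if (response == null) {
                logger.info(IMGURL + ": no response");
                return;
            }
            if (response.getStatusLine().getStatusCode() == 200) { // server responded OK
                HttpEntity entity = response.getEntity();
                // ContentType.get() returns null if the response has no Content-Type header
                ContentType contentType = ContentType.get(entity);
                if (contentType == null) {
                    logger.info(IMGURL + ": no Content-Type, image skipped");
                    return;
                }
                String blogImagesPath = PropertiesUtil.getValue("blogImages"); // image storage root (read from crawler.properties)
                String dateDir = DateUtil.getCurrentDatePath(); // one folder per day
                String uuid = UUID.randomUUID().toString(); // unique file name
                // File suffix from the MIME type, e.g. image/jpeg -> jpeg (parameters such as charset are dropped)
                String mimeType = contentType.getMimeType();
                String suffix = mimeType.substring(mimeType.indexOf('/') + 1);
                logger.info(suffix);
                String imgPath = blogImagesPath + dateDir + "/" + uuid + "." + suffix;
                // Creates missing parent folders and writes the response body to disk
                FileUtils.copyInputStreamToFile(entity.getContent(), new File(imgPath));
            } else {
                logger.info(IMGURL + ": unexpected HTTP status " + response.getStatusLine().getStatusCode());
            }
        } catch (ClientProtocolException e) {
            logger.error(IMGURL + ": ClientProtocolException", e);
        } catch (IOException e) {
            logger.error(IMGURL + ": IOException", e);
        } catch (Exception e) {
            logger.error(IMGURL + ": unexpected exception", e);
        } finally {
            if (response != null) {
                try {
                    response.close();
                } catch (IOException e) {
                    logger.error("Failed to close the response", e);
                }
            }
            try {
                httpClient.close();
            } catch (IOException e) {
                logger.error("Failed to close the HTTP client", e);
            }
        }
        logger.info("Finished downloading image " + IMGURL);
    }
}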
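Splitting the raw `Content-Type` header on `/` (as `getValue().split("/")[1]` does) breaks on values such as `image/png; charset=UTF-8` or `image/svg+xml`, and on responses with no header at all. Where HttpCore's `ContentType` is not at hand, a plain-Java sketch of a more tolerant suffix derivation could look like this; `SuffixSketch.suffixFromContentType` and its fallback parameter are hypothetical.

```java
import java.util.Locale;

// Hypothetical helper: derive a file suffix from a Content-Type header value.
// Drops parameters ("; charset=..."), strips structured-syntax suffixes ("svg+xml" -> "svg"),
// and returns the caller's fallback when the header is missing or malformed.
class SuffixSketch {

    static String suffixFromContentType(String contentType, String fallback) {
        if (contentType == null) {
            return fallback;
        }
        // Keep only the MIME type part before any ';'
        String mimeType = contentType.split(";", 2)[0].trim().toLowerCase(Locale.ROOT);
        int slash = mimeType.indexOf('/');
        if (slash < 0 || slash == mimeType.length() - 1) {
            return fallback;
        }
        String subtype = mimeType.substring(slash + 1);
        int plus = subtype.indexOf('+');
        if (plus > 0) {
            subtype = subtype.substring(0, plus);
        }
        return subtype;
    }

    public static void main(String[] args) {
        System.out.println(suffixFromContentType("image/jpeg", "img"));                // jpeg
        System.out.println(suffixFromContentType("image/png; charset=UTF-8", "img"));  // png
        System.out.println(suffixFromContentType("image/svg+xml", "img"));             // svg
        System.out.println(suffixFromContentType(null, "img"));                        // img
    }
}
```

The suffix is still taken from the server's declared type, so a server that mislabels its images will produce a mislabeled file; checking the file's magic bytes would be the stricter option.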

Blog crawler code

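import java.io.File;
import java.io.IOException;
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.UUID;

import org.apache.commons.io.FileUtils;
import org.apache.http.HttpEntity;
import org.apache.http.client.ClientProtocolException;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.entity.ContentType;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;
import org.apache.log4j.Logger;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

public class BlogCralerStart {

    private static Logger logger = Logger.getLogger(BlogCralerStart.class); // logger

    // "Latest articles" list on the CSDN home page
    private static String HOMEURL = "https://www.csdn.net/nav/newarticles"; // home page URL

    // HTTP client shared by the requests of a crawl run
    private static CloseableHttpClient httpClient;

    private static Connection con = null;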

    /**
     * Fetches the home page and parses its content
     */
    public static void parseHomePage() {
        logger.info("Start crawling home page " + HOMEURL);
        httpClient = HttpClients.createDefault();
        HttpGet httpGet = new HttpGet(HOMEURL);
        // Connect timeout 5 s, socket (read) timeout 8 s
        RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
        httpGet.setConfig(config);
        CloseableHttpResponse response = null;
        try {
            response = httpClient.execute(httpGet);
            if (response == null) {
                logger.info(HOMEURL + ": no response");
                return; // without this, the status check below would throw NullPointerException
            }
            if (response.getStatusLine().getStatusCode() == 200) { // server responded OK
                HttpEntity entity = response.getEntity();
                String homeUrlPageContent = EntityUtils.toString(entity, "utf-8");
                // System.out.println(homeUrlPageContent);
                parseHomeUrlPageContent(homeUrlPageContent);
            } else {
                logger.info(HOMEURL + ": unexpected HTTP status " + response.getStatusLine().getStatusCode());
            }
        } catch (ClientProtocolException e) {
            logger.error(HOMEURL + ": ClientProtocolException", e);
        } catch (IOException e) {
            logger.error(HOMEURL + ": IOException", e);
        } finally {
            if (response != null) {
                try {
                    response.close();
                } catch (IOException e) {
                    logger.error("Failed to close the response", e);
                }
            }
            if (httpClient != null) {
                try {
                    httpClient.close();
                } catch (IOException e) {
                    logger.error("Failed to close the HTTP client", e);
                }
            }
        }
        logger.info("Finished crawling home page " + HOMEURL);
    }

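    /**
     * Extracts the article links from the home page HTML and crawls each article
     * @param homeUrlPageContent home page HTML
     */
    private static void parseHomeUrlPageContent(String homeUrlPageContent) {
        Document doc = Jsoup.parse(homeUrlPageContent);
        // Title links in the home page article feed (matches CSDN's page layout at the time of writing)
        Elements aEles = doc.select("#feedlist_id .list_con .title h2 a");
        for (Element aEle : aEles) {
            // System.out.println(aEle.attr("href"));
            String blogUrl = aEle.attr("href");
            if (null == blogUrl || "".equals(blogUrl)) {
                logger.info("Article link is empty; not crawled or inserted into the database!!!");
                continue;
            }
            parseBlogUrl(blogUrl);
        }
    }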

    /**
     * Fetches an article page and extracts its title and body
     * @param blogUrl article URL
     */
    private static void parseBlogUrl(String blogUrl) {
        logger.info("Start crawling article " + blogUrl);
        // Reuse the shared client opened in parseHomePage(), which closes it when the crawl ends;
        // creating a new client here would overwrite the shared reference and leak the old client
        HttpGet httpGet = new HttpGet(blogUrl);
        RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
        httpGet.setConfig(config);
        CloseableHttpResponse response = null;
        try {
            response = httpClient.execute(httpGet);
            if (response == null) {
                logger.info(blogUrl + ": no response");
                return; // without this, the status check below would throw NullPointerException
            }
            if (response.getStatusLine().getStatusCode() == 200) { // server responded OK
                HttpEntity entity = response.getEntity();
                String blogContent = EntityUtils.toString(entity, "utf-8");
                // Parse the article page
                parseBlogContent(blogContent, blogUrl);
            } else {
                logger.info(blogUrl + ": unexpected HTTP status " + response.getStatusLine().getStatusCode());
            }
        } catch (ClientProtocolException e) {
            logger.error(blogUrl + ": ClientProtocolException", e);
        } catch (IOException e) {
            logger.error(blogUrl + ": IOException", e);
        } finally {
            if (response != null) {
                try {
                    response.close();
                } catch (IOException e) {
                    logger.error("Failed to close the response: IOException", e);
                }
            }
        }
        logger.info("Finished crawling article " + blogUrl);
    }

    /**
     * Parses an article page (title and body) and inserts it into t_article
     * @param blogContent article page HTML
     * @param blogUrl article URL, stored in the orUrl column
     */
    private static void parseBlogContent(String blogContent, String blogUrl) {
        Document doc = Jsoup.parse(blogContent);
        // The selector starts at the #mainBox id because an id is meant to be unique within a page,
        // which makes the path unambiguous. An id is not required: pages without one can be
        // matched with class or tag selectors instead.
        Elements titleELes = doc.select("#mainBox main .blog-content-box .article-header-box .article-header .article-title-box h1");
        // logger.info("Article title: " + titleELes.get(0).html());
        // Title
        if (titleELes.size() == 0) {
            logger.info("Article title is empty; not inserted into the database");
            return;
        }
        String title = titleELes.get(0).html();
        // Body
        Elements contentEles = doc.select("#article_content");
        // logger.info("Article body: " + contentEles.get(0).html());
        if (contentEles.size() == 0) {
            logger.info("Article body is empty; not inserted into the database");
            return; // without this, contentEles.get(0) below would throw IndexOutOfBoundsException
        }
        Element contentEle = contentEles.get(0);
        String blogContentBody = contentEle.html();
        // Image URLs: only images inside the article body, not the page's avatars, icons, and ads
        Elements imgELes = contentEle.select("img");
        List<String> imgUrlList = new LinkedList<String>();
        for (Element imgELe : imgELes) {
            String src = imgELe.attr("src");
            if (!"".equals(src)) {
                imgUrlList.add(src);
            }
        }
        if (imgUrlList.size() > 0) {
            // If the body contains images, download them as well
            // and point the stored article at the local copies
            Map<String, String> replaceUrlMap = downloadImgList(imgUrlList);
            blogContentBody = replaceContent(blogContentBody, replaceUrlMap); // rewrite the body, not the whole page
        }
        // now() is a MySQL built-in function
        // Columns of t_article:
        // id           int       ID
        // title        varchar   title
        // content      longtext  body
        // summary      varchar   summary
        // crawlerDate  datetime  creation (crawl) time
        // clickHit     int       click count
        // typeId       int       category
        // tags         varchar   tags
        // orUrl        varchar   original URL
        // state        int       status
        // releaseDate  datetime  publish time
        logger.info(title);
        String sql = "insert into t_article(id,title,content,summary,crawlerDate,clickHit,typeId,tags,orUrl,state,releaseDate) values(null,?,?,null,now(),0,1,null,?,0,null)";
        // try-with-resources closes the PreparedStatement after each insert
        try (PreparedStatement ps = con.prepareStatement(sql)) {
            ps.setObject(1, title);
            ps.setObject(2, blogContentBody);
            ps.setObject(3, blogUrl);
            if (ps.executeUpdate() == 0) {
                logger.info("Database insert failed!!!");
            } else {
                logger.info("Database insert succeeded");
            }
        } catch (SQLException e) {
            logger.error("Database error: SQLException", e);
        }
    }

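    /**
     * Rewrites a crawled article so that each original image URL points at its local copy
     * @param blogContent HTML to rewrite
     * @param replaceUrlMap original image URL -> local file path
     * @return the rewritten HTML
     */
    private static String replaceContent(String blogContent, Map<String, String> replaceUrlMap) {
        for (Map.Entry<String, String> entry : replaceUrlMap.entrySet()) {
            // Literal (non-regex) replacement of every occurrence
            blogContent = blogContent.replace(entry.getKey(), entry.getValue());
        }
        return blogContent;
    }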

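    /**
     * Downloads remote images to the local disk
     * @param imgUrlList image URLs
     * @return map of original image URL -> local file path
     */
    private static Map<String, String> downloadImgList(List<String> imgUrlList) {
        Map<String, String> replace = new HashMap<String, String>();
        RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
        for (String imgUrl : imgUrlList) {
            CloseableHttpResponse response = null;
            try {
                // Inside the try: an invalid URL throws IllegalArgumentException,
                // which should skip this image instead of aborting the whole crawl
                HttpGet httpGet = new HttpGet(imgUrl);
                httpGet.setConfig(config);
                // Reuse the shared client instead of opening (and never closing) a new one per image
                response = httpClient.execute(httpGet);
                if (response == null) {
                    logger.info(imgUrl + ": no response");
                } else if (response.getStatusLine().getStatusCode() == 200) { // server responded OK
                    HttpEntity entity = response.getEntity();
                    // ContentType.get() returns null if the response has no Content-Type header
                    ContentType contentType = ContentType.get(entity);
                    if (contentType == null) {
                        logger.info(imgUrl + ": no Content-Type, image skipped");
                        continue;
                    }
                    String blogImagesPath = PropertiesUtil.getValue("blogImages"); // image storage root (read from crawler.properties)
                    String dateDir = DateUtil.getCurrentDatePath(); // one folder per day
                    String uuid = UUID.randomUUID().toString(); // unique file name
                    // File suffix from the MIME type, e.g. image/jpeg -> jpeg
                    String mimeType = contentType.getMimeType();
                    String suffix = mimeType.substring(mimeType.indexOf('/') + 1);
                    logger.info(suffix);
                    String imgPath = blogImagesPath + dateDir + "/" + uuid + "." + suffix;
                    FileUtils.copyInputStreamToFile(entity.getContent(), new File(imgPath));
                    // remote URL -> local path
                    replace.put(imgUrl, imgPath);
                } else {
                    logger.info(imgUrl + ": unexpected HTTP status " + response.getStatusLine().getStatusCode());
                }
            } catch (ClientProtocolException e) {
                logger.error(imgUrl + ": ClientProtocolException", e);
            } catch (IOException e) {
                logger.error(imgUrl + ": IOException", e);
            } catch (Exception e) {
                logger.error(imgUrl + ": unexpected exception", e);
            } finally {
                if (response != null) {
                    try {
                        response.close();
                    } catch (IOException e) {
                        logger.error("Failed to close the response", e);
                    }
                }
            }
        }
        return replace;
    }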

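    /**
     * Initialization: opens the database connection and starts the crawl
     */
    public static void init() {
        DbUtil dbUtil = new DbUtil();
        try {
            con = dbUtil.getCon(); // open the connection
            parseHomePage();
        } catch (Exception e) {
            logger.error("Crawl aborted (database connection failed or unexpected error)!!", e);
        } finally {
            try {
                if (con != null && !con.isClosed()) {
                    con.close();
                }
            } catch (SQLException e) {
                logger.error("Failed to close the database connection!!", e);
            }
        }
    }

    public static void main(String[] args) {
        // parseHomePage(); // crawl the home page only
        init(); // crawl the articles and store them
    }
}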
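The crawler hands each raw `src` value straight to `HttpGet`, so protocol-relative values such as `//host/a.png` or relative paths such as `../img/a.png` cannot be fetched as-is. A minimal sketch that resolves a `src` against the article URL with `java.net.URI`; `ImgUrlSketch.toAbsolute` is a hypothetical helper, and returning `null` for unusable values is an assumption of this sketch.

```java
import java.net.URI;

// Hypothetical helper: turn an <img src> value into an absolute URL that HttpGet can fetch.
// Handles absolute, protocol-relative ("//host/path"), root-relative ("/path"),
// and relative ("../a.png") src values.
class ImgUrlSketch {

    // Returns the absolute URL, or null if src is empty or not a valid URI
    static String toAbsolute(String pageUrl, String src) {
        if (src == null || src.trim().isEmpty()) {
            return null;
        }
        try {
            // URI.resolve applies the RFC 2396 reference-resolution rules
            return URI.create(pageUrl).resolve(src.trim()).toString();
        } catch (IllegalArgumentException e) {
            // e.g. a src containing spaces or other illegal characters
            return null;
        }
    }

    public static void main(String[] args) {
        String page = "https://blog.csdn.net/user/article/details/1";
        System.out.println(toAbsolute(page, "//img-blog.csdnimg.cn/a.png")); // https://img-blog.csdnimg.cn/a.png
        System.out.println(toAbsolute(page, "/images/x.png"));               // https://blog.csdn.net/images/x.png
        System.out.println(toAbsolute(page, "http://other.com/x.jpg"));      // http://other.com/x.jpg
    }
}
```

If this is wired into the crawler, keep the original `src` as the key of `replaceUrlMap` so `replaceContent` still finds it in the HTML, and use the resolved URL only for the download. With jsoup, parsing the page via `Jsoup.parse(blogContent, blogUrl)` and reading `imgELe.absUrl("src")` is an alternative way to obtain an absolute URL.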