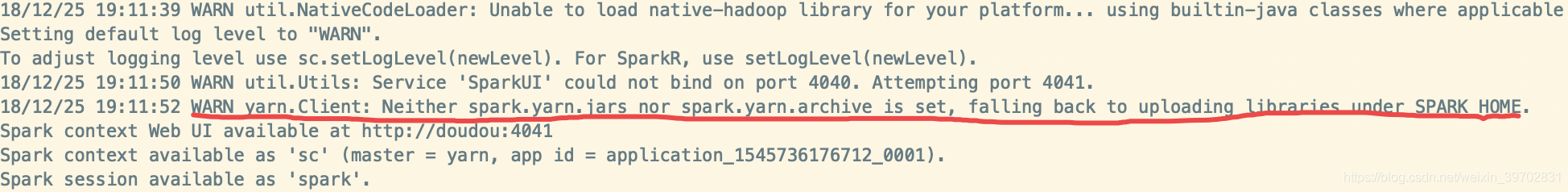

If you run Spark on YARN, you have almost certainly seen this warning: `Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.`

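P.S. If you are wondering why the screenshot looks like this, the machine was running low on memory at the time. It does not affect the problem we are solving here.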

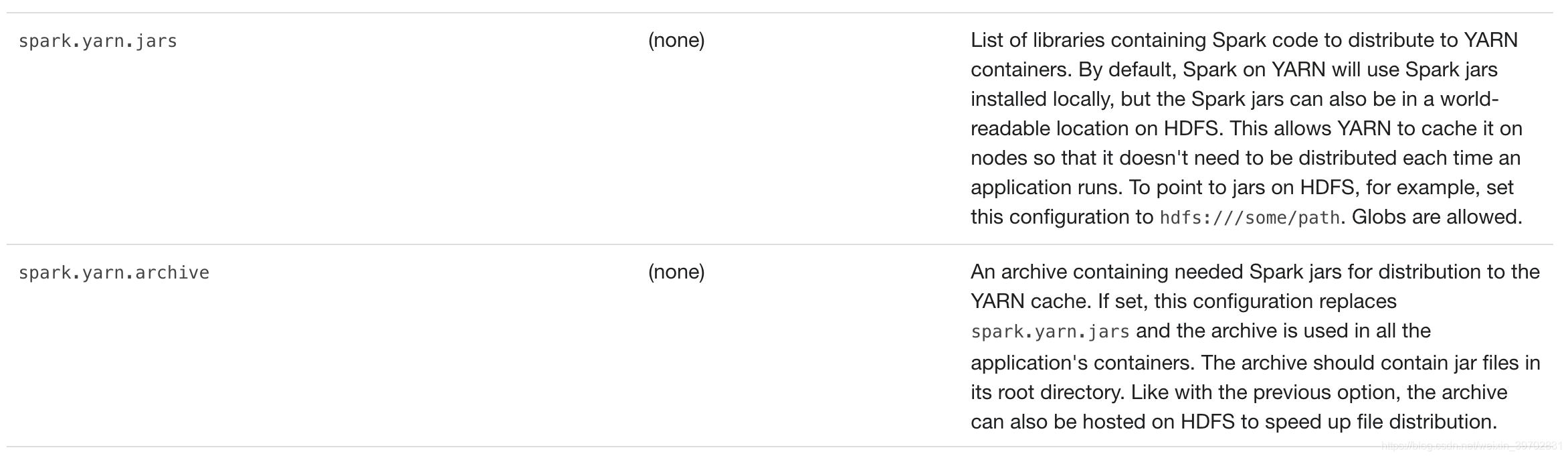

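Since the warning tells us that `spark.yarn.jars` and `spark.yarn.archive` are not set, let's set them. But what exactly are they? As I mentioned in the previous post, any property starting with `spark.` can be set either in `SparkConf` or in the `spark-defaults.conf` file, so these two are presumably configuration properties as well. Let's see whether the official documentation says anything about them: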

http://spark.apache.org/docs/latest/running-on-yarn.html

Take a look at the relevant part of that page in the figure below.

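That explains it. Spark on YARN needs Spark's own jars inside the YARN containers, and when neither property is set, every job submission packs the jars under the Spark installation into an archive and uploads it to HDFS. So let's upload the jars to HDFS once by hand and then point `spark.yarn.jars` at that HDFS path.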
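To make the precedence concrete, here is a simplified Python model of the choice the YARN client makes. It is a sketch, not Spark's code: the function name `spark_libs_source`, the returned labels, and the archive path in the example are hypothetical. The rule it encodes comes from the Running on YARN documentation: `spark.yarn.archive`, if set, replaces `spark.yarn.jars`, and with neither set the client falls back to packaging the jars under SPARK_HOME.

```python
from typing import Mapping, Optional


def spark_libs_source(conf: Mapping[str, str]) -> str:
    """Hypothetical model of where a YARN submission gets Spark's runtime jars.

    `conf` holds spark.* properties, whether they came from SparkConf,
    spark-defaults.conf, or --conf on the command line.
    """
    archive: Optional[str] = conf.get("spark.yarn.archive")
    if archive:
        # spark.yarn.archive takes precedence over spark.yarn.jars.
        return f"archive:{archive}"
    jars: Optional[str] = conf.get("spark.yarn.jars")
    if jars:
        # A comma-separated list; each entry may contain a glob.
        return "jars:" + ",".join(j.strip() for j in jars.split(","))
    # This branch corresponds to the "Neither spark.yarn.jars nor
    # spark.yarn.archive is set" warning: upload the local jars every time.
    return "upload:$SPARK_HOME/jars"
```

For example, `spark_libs_source({})` returns `"upload:$SPARK_HOME/jars"`, which is the situation that triggers the warning at the top of this post.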

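Note that the path has to be written as `hdfs:///…`. At first I left out the `hdfs` prefix and used a plain `/…/…` path, and the application just sat in the ACCEPTED state.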
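Why would a path without `hdfs://` misbehave? As far as I can tell from Spark's source, `Utils.resolveURI` turns a path with no URI scheme into an absolute `file:` URI, so `/spark/*` is looked up on the local disk of the submitting machine rather than on HDFS. An `hdfs:///…` URI with an empty authority instead takes the NameNode address from Hadoop's `fs.defaultFS`. The Python sketch below models only that rule; `resolve_jar_location` is a hypothetical helper and it assumes a POSIX path layout.

```python
import os
from urllib.parse import urlparse


def resolve_jar_location(entry: str, default_fs: str) -> str:
    """Rough model of how a spark.yarn.jars entry is resolved.

    Assumption: no scheme means the *local* filesystem, and an hdfs://
    URI without a host falls back to fs.defaultFS.
    """
    parsed = urlparse(entry)
    if not parsed.scheme:
        # "/spark/*" -> "file:/spark/*": a local path on the client machine.
        return "file:" + os.path.abspath(entry)
    if parsed.scheme == "hdfs" and not parsed.netloc:
        # "hdfs:///spark/*" -> host and port taken from fs.defaultFS.
        return default_fs.rstrip("/") + parsed.path
    # Fully qualified URIs are used as given.
    return entry
```

With `default_fs="hdfs://doudou:8020"`, the entry `/spark/*` resolves to `file:/spark/*`, while `hdfs:///spark/*` resolves to `hdfs://doudou:8020/spark/*`, the same location as the fully qualified URI used in the commands below.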

First, I uploaded every jar under `$SPARK_HOME/jars` to the `/spark` directory on HDFS, and then ran the following command:

```shell
spark-submit \
  --master yarn \
  --deploy-mode client \
  --class doudou.scala.wc.WCApp \
  --conf spark.yarn.jars=hdfs://doudou:8020/spark/ \
  spark.sql-1.0-SNAPSHOT.jar
```

The console then showed:

```text
18/12/27 13:13:58 INFO yarn.Client: Submitting application application_1545827265217_0003 to ResourceManager
18/12/27 13:13:58 INFO impl.YarnClientImpl: Submitted application application_1545827265217_0003
18/12/27 13:13:58 INFO cluster.SchedulerExtensionServices: Starting Yarn extension services with app application_1545827265217_0003 and attemptId None
18/12/27 13:13:59 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:13:59 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: N/A
     ApplicationMaster RPC port: -1
     queue: root.test
     start time: 1545887638566
     final status: UNDEFINED
     tracking URL: http://doudou:8288/proxy/application_1545827265217_0003/
     user: test
```

Followed by:

```text
18/12/27 13:14:00 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:01 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:02 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:03 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:04 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:05 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:06 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:07 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:08 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:09 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:10 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:11 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:12 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:13 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:14 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:15 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:16 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:17 INFO yarn.Client: Application report for application_1545827265217_0003 (state: ACCEPTED)
18/12/27 13:14:18 INFO yarn.Client: Application report for application_1545827265217_0003 (state: FAILED)
18/12/27 13:14:18 INFO yarn.Client:
     client token: N/A
     diagnostics: Application application_1545827265217_0003 failed 10 times due to AM Container for appattempt_1545827265217_0003_000010 exited with exitCode: 1
For more detailed output, check application tracking page:http://doudou:8288/proxy/application_1545827265217_0003/Then, click on links to logs of each attempt.
```

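YARN tried ten times to start the ApplicationMaster, none of the attempts got past ACCEPTED, and in the end the application simply failed. So why did every attempt fail?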

To see the actual error, run `yarn logs -applicationId application_1545827265217_0003`:

```text
Container: container_1545827265217_0003_10_000001 on doudou_38383
===================================================================
LogType:stderr
Log Upload Time:Thu Dec 27 13:14:18 +0800 2018
LogLength:87
Log Contents:
Error: Could not find or load main class org.apache.spark.deploy.yarn.ExecutorLauncher
LogType:stdout
Log Upload Time:Thu Dec 27 13:14:18 +0800 2018
LogLength:0
Log Contents:

Container: container_1545827265217_0003_07_000001 on doudou_38383
===================================================================
LogType:stderr
Log Upload Time:Thu Dec 27 13:14:18 +0800 2018
LogLength:87
Log Contents:
Error: Could not find or load main class org.apache.spark.deploy.yarn.ExecutorLauncher
LogType:stdout
Log Upload Time:Thu Dec 27 13:14:18 +0800 2018
LogLength:0
Log Contents:
```
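With ten attempts, the aggregated output gets long. A small filter can confirm that every container failed for the same reason. The Python sketch below assumes the plain-text layout shown above (a `Container: <id> on <host>` header, then `LogType:<name>` sections) and that errors appear as lines starting with `Error:`. Both helper names are hypothetical, and other Hadoop versions may format `yarn logs` output differently.

```python
import re
from typing import Dict, List, Optional, Set


def stderr_errors(yarn_logs: str) -> Dict[str, List[str]]:
    """Map each container ID to the 'Error:' lines found in its stderr section."""
    errors: Dict[str, List[str]] = {}
    container: Optional[str] = None
    log_type: Optional[str] = None
    for raw in yarn_logs.splitlines():
        line = raw.strip()
        header = re.match(r"Container: (\S+)", line)
        if header:
            # A new container starts; forget the previous LogType.
            container = header.group(1)
            log_type = None
            errors.setdefault(container, [])
            continue
        if line.startswith("LogType:"):
            log_type = line[len("LogType:"):]
            continue
        if container and log_type == "stderr" and line.startswith("Error:"):
            errors[container].append(line)
    return errors


def distinct_errors(yarn_logs: str) -> Set[str]:
    """Collapse all containers' errors into the set of distinct messages."""
    return {msg for msgs in stderr_errors(yarn_logs).values() for msg in msgs}
```

If `distinct_errors` returns a single message, every attempt died the same way, which is what the excerpt above shows.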

Every attempt failed with the same error: `Could not find or load main class org.apache.spark.deploy.yarn.ExecutorLauncher`.

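The JVM could not find or load the main class `org.apache.spark.deploy.yarn.ExecutorLauncher`, which is the ApplicationMaster class Spark launches in client mode and which ships inside Spark's own jars. So the jars we uploaded were clearly not being picked up at all. After some searching online, I found that the value needs a trailing asterisk to match every file in the directory: `spark.yarn.jars=hdfs://doudou:8020/spark/*`.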
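Why does the asterisk matter? The Running on YARN docs say globs are allowed in `spark.yarn.jars`, and as far as I can tell from the Spark 2.x YARN client, each non-`local:` entry is expanded with Hadoop's `globStatus` and then filtered down to entries that are files. A bare directory "matches" only itself, and a directory is not a file, so nothing gets shipped. The Python sketch below reproduces that behaviour on the local filesystem as an analogy; `files_matched` is a hypothetical helper, not part of Spark.

```python
import glob
import os
from typing import List


def files_matched(pattern: str) -> List[str]:
    """Expand `pattern` and keep only regular files (directories are dropped)."""
    return sorted(p for p in glob.glob(pattern) if os.path.isfile(p))
```

For a directory `d` holding a few jars, `files_matched(d + "/")` returns an empty list (the only match is the directory itself), while `files_matched(d + "/*")` returns every jar, mirroring the difference between the two `spark.yarn.jars` values in this post.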

Let's try again with that change:

```shell
spark-submit \
  --master yarn \
  --deploy-mode client \
  --class doudou.scala.wc.WCApp \
  --conf "spark.yarn.jars=hdfs://doudou:8020/spark/*" \
  spark.sql-1.0-SNAPSHOT.jar
```

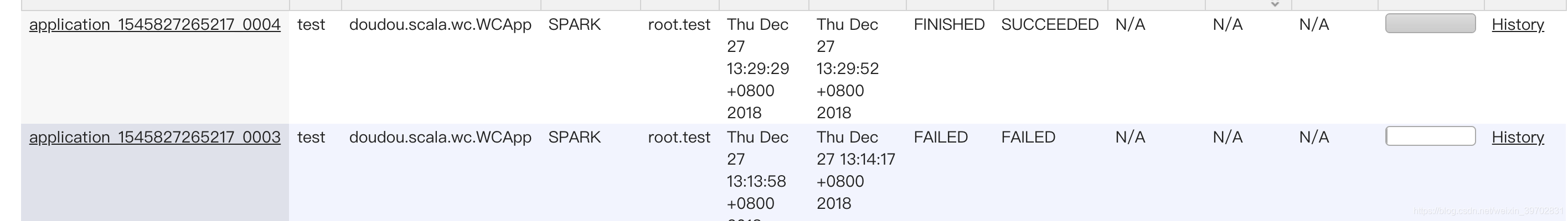

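This time it appears to work. In the console we can see YARN copying jars from the HDFS path we specified to other locations (I didn't manage to grab a screenshot in time). Next, let's check the YARN web UI.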

The application at the bottom is my first submission, which failed; the one at the top ran successfully.

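But, and here is the key point, when I opened the logs I found that even though the job succeeded, there was still a warning, an `ExitCodeException exitCode=1`. After searching online, it seemed to mean that the executors were asking for more memory than YARN's default container configuration allows. So I tried adjusting a few settings and submitted with these properties: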

```shell
spark-submit \
  --master yarn \
  --deploy-mode client \
  --class doudou.scala.wc.WCApp \
  --conf "spark.yarn.jars=hdfs://doudou:8020/spark/*" \
  --driver-memory 800M \
  --executor-memory 800M \
  --num-executors 1 \
  --total-executor-cores 1 \
  spark.sql-1.0-SNAPSHOT.jar
```

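With that, the problem was gone. I reduced the number of executors and set the executor memory to 800M. Note that the default `spark.executor.memory` is 1g, so 800M is actually a decrease unless `spark-defaults.conf` sets something else. My reading is that the resources I was requesting exceeded what YARN was configured to hand out. Strictly speaking, each executor runs in its own container, so there are two limits. Per container, executor memory plus overhead must fit within `yarn.scheduler.maximum-allocation-mb`. In total, all containers must fit within the memory the NodeManagers offer (`yarn.nodemanager.resource.memory-mb`). Also, `--total-executor-cores` only applies to standalone and Mesos clusters and has no effect on YARN; the YARN equivalent is the per-executor `--executor-cores`. (This is also worth revisiting later as a tuning topic.)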
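A rough way to sanity-check requests like these is to add up what YARN is actually asked for. The Python sketch below assumes Spark's documented default overhead of max(384 MB, 10% of the heap) per container, a client-mode ApplicationMaster sized by `spark.yarn.am.memory` (default 512m), dynamic allocation turned off, and a scheduler that rounds every request up to a multiple of 1024 MB (`yarn.scheduler.minimum-allocation-mb` under the CapacityScheduler, `yarn.scheduler.increment-allocation-mb` under the FairScheduler). None of these values were checked on the cluster in this post, and the function and constant names are hypothetical.

```python
import math

OVERHEAD_MIN_MB = 384      # assumed default minimum memory overhead per container
OVERHEAD_FACTOR = 0.10     # assumed default overhead as a fraction of the heap
YARN_ROUNDING_MB = 1024    # assumed scheduler rounding unit


def container_mb(heap_mb: int) -> int:
    """Approximate YARN container size Spark requests for one JVM heap."""
    overhead = max(OVERHEAD_MIN_MB, int(OVERHEAD_FACTOR * heap_mb))
    requested = heap_mb + overhead
    # The scheduler rounds the request up to a multiple of its allocation unit.
    return math.ceil(requested / YARN_ROUNDING_MB) * YARN_ROUNDING_MB


def yarn_footprint_mb(num_executors: int, executor_mb: int, am_mb: int = 512) -> int:
    """Total memory for the executor containers plus the client-mode AM container."""
    return num_executors * container_mb(executor_mb) + container_mb(am_mb)
```

Under those assumptions, the defaults (two executors of 1g) come to `yarn_footprint_mb(2, 1024)`, i.e. 5120 MB, while the final command comes to `yarn_footprint_mb(1, 800)`, i.e. 3072 MB. Both 800M and 1g round up to the same 2048 MB container, so in this model the saving comes from running one executor instead of two. `--driver-memory` does not appear because in client mode the driver runs on the submitting machine, outside YARN.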