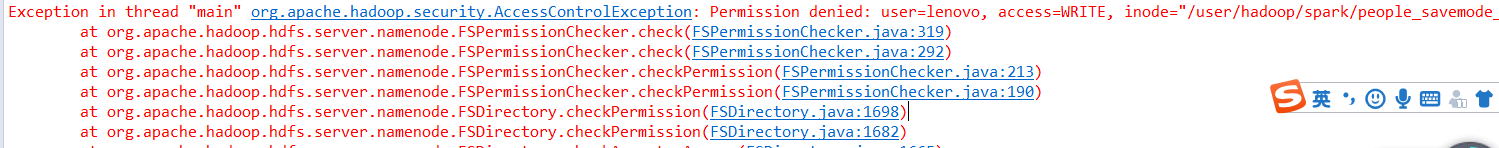

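Permission denied when saving a file. A pitfall I ran into: saving results to HDFS failed because the user had no write permission. The fix was to change the permissions so that the output could be written to the target directory.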

The command used to change the permissions:

* `$HADOOP_HOME/bin/hdfs dfs -chmod 777 /user`

The exception that was thrown:

* `Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=Mypc, access=WRITE, inode="/":fan:supergroup:drwxr-xr-x`
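To see why the write is rejected, it helps to read the `inode` part of the exception message as `path:owner:group:mode`. The following is a minimal sketch of the owner/group/other check, not HDFS's actual implementation: the class `HdfsPermissionCheck` and the method `canWrite` are hypothetical names, superuser bypass and ACLs are ignored, and it assumes the local user `Mypc` belongs to no HDFS group.

```java
import java.util.Collections;
import java.util.Set;

public class HdfsPermissionCheck {

    /**
     * Simplified POSIX-style check (hypothetical helper, not a Hadoop API).
     * mode looks like "drwxr-xr-x": a type flag followed by the
     * owner, group, and other triplets.
     */
    static boolean canWrite(String user, Set<String> userGroups,
                            String owner, String group, String mode) {
        String triplet;
        if (user.equals(owner)) {
            triplet = mode.substring(1, 4);   // owner class
        } else if (userGroups.contains(group)) {
            triplet = mode.substring(4, 7);   // group class
        } else {
            triplet = mode.substring(7, 10);  // other class
        }
        return triplet.charAt(1) == 'w';
    }

    public static void main(String[] args) {
        // Values taken from the exception: inode="/":fan:supergroup:drwxr-xr-x
        String owner = "fan";
        String group = "supergroup";

        // Mypc is neither the owner nor (by assumption) in supergroup,
        // so the "other" triplet r-x applies and the write is denied.
        System.out.println(canWrite("Mypc", Collections.emptySet(),
                owner, group, "drwxr-xr-x"));

        // After chmod 777 the "other" triplet becomes rwx.
        System.out.println(canWrite("Mypc", Collections.emptySet(),
                owner, group, "drwxrwxrwx"));
    }
}
```

In this sketch `chmod 777` works because it grants write access to every user class. That is convenient on a test cluster but very permissive, so a narrower mode or owner on the output directory may be preferable elsewhere.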

    package cn.spark.study.sql;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.sql.DataFrame;
    import org.apache.spark.sql.SQLContext;
    import org.apache.spark.sql.SaveMode;

    /**
     * SaveMode example
     *
     * @author 张运涛
     */
    public class SaveModeTest {

        @SuppressWarnings("deprecation")
        public static void main(String[] args) {
            // Point Hadoop at a local installation (needed when running on Windows).
            System.setProperty("hadoop.home.dir", "E:\\hadoop-2.7.1");

            SparkConf conf = new SparkConf().setMaster("local").setAppName("SaveModeTest");
            JavaSparkContext sc = new JavaSparkContext(conf);
            SQLContext sqlContext = new SQLContext(sc);

            DataFrame peopleDF = sqlContext.read().format("json")
                    .load("hdfs://zhang:9000/user/hadoop/spark/people.json");

            /*
             * Permission denied when saving. A pitfall I ran into: the user had no
             * write permission on HDFS. Fixed by changing the permissions:
             *
             *   $HADOOP_HOME/bin/hdfs dfs -chmod 777 /user
             *
             * Exception in thread "main" org.apache.hadoop.security.AccessControlException:
             * Permission denied: user=Mypc, access=WRITE,
             * inode="/":fan:supergroup:drwxr-xr-x
             */
            // save(path, source, mode) is deprecated since Spark 1.4; the equivalent is
            // peopleDF.write().format("json").mode(SaveMode.Append).save(path).
            peopleDF.save("hdfs://zhang:9000/user/people_savemode_test", "json", SaveMode.Append);
        }
    }