Metropolis Light Transport

MLT generates a sequence of light-carrying paths through the scene, where each path is found by mutating the previous path in some manner. These mutations are done in a way that ensures that the overall distribution of sampled paths is proportional to the contribution the paths make to the image being generated. Such a distribution of paths can in turn be used to generate an image of the scene. Given the flexibility of the Metropolis sampling method, there are relatively few restrictions on the types of mutations that can be applied; in general, it is possible to apply unusual sampling techniques designed to sample otherwise difficult-to-find light-carrying paths. Many such sampling techniques that can be used with MLT can’t easily be applied to other Monte Carlo light transport algorithms without introducing bias.

MLT has another important advantage over previous unbiased approaches to image synthesis in that it performs local exploration: when a path that makes a large contribution to the image is found, it’s easy to sample other paths that are similar to that one by making small perturbations to it. When a function has a small value over most of its domain and a large contribution in only a small subset of it, local exploration amortizes the expense (in samples) of the search for the important region by taking a number of samples from this part of the path space. This property makes MLT a reasonably robust light transport algorithm: while it is about as efficient as other unbiased techniques (e.g., path tracing or bidirectional ray tracing) for relatively straightforward lighting problems, it distinguishes itself in more difficult settings where most of the light transport happens along a small fraction of all of the possible paths through the scene.

How to calculate a pixel in MLT

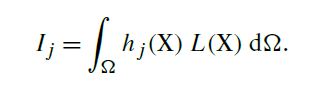

First, we define the image contribution function such that, for an image whose pixels are indexed by j, each pixel $I_j$ has a value that is the integral of the product of the pixel’s image reconstruction filter $h_j$ and the radiance $L$ that contributes to the image:

$$I_j = \int_\Omega h_j(X)\, L(X)\, \mathrm{d}\Omega$$

Here $\Omega$ denotes the domain of the sample vectors $X$. Generally, the filter function $h_j$ depends only on the two components of $X$ that give the image sample position. Further, the value of $h_j$ for any particular pixel is usually zero for the vast majority of samples $X$ due to the filter’s finite extent.

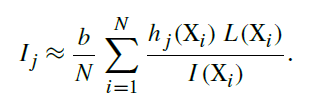

If N samples $X_i$ are generated from some distribution, $X_i \sim p(X)$, then the standard Monte Carlo estimate of $I_j$ is

$$I_j \approx \frac{1}{N} \sum_{i=1}^{N} \frac{h_j(X_i)\, L(X_i)}{p(X_i)}$$

Because L is a spectrally valued function, Metropolis can’t be applied directly to generating samples from its distribution; there is no unambiguous notion of what it means to generate samples according to L’s distribution. We can, however, define a scalar contribution function $I(X)$ to be the function that defines the distribution of samples that are taken with Metropolis sampling. It is desirable that this function be large when L is large so that the distribution of samples has some relationship to the important regions of L. As such, using the luminance of the radiance value is a good choice for the scalar contribution function. In general, any function that is nonzero when L is nonzero will generate correct results, just possibly not as efficiently as a function that is more directly proportional to L.

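Given a suitable scalar contribution function, $I(X)$, Metropolis generates a sequence of samples $X_i$ from $I$’s distribution, the normalized version of $I$ over the sample domain $\Omega$:

$$X_i \sim \frac{I(X)}{\int_\Omega I(X)\, \mathrm{d}\Omega}$$

(Here $I(X)$ is the luminance of the radiance $L(X)$.)

The pixel values can thus be computed as

$$I_j \approx \frac{1}{N} \sum_{i=1}^{N} \frac{h_j(X_i)\, L(X_i)}{I(X_i)} \left( \int_\Omega I(X)\, \mathrm{d}\Omega \right)$$

The integral of $I$ over the entire domain can be computed using a traditional approach like path tracing. If this value is denoted by $b$, with $b = \int_\Omega I(X)\, \mathrm{d}\Omega$, then each pixel’s value is given by

$$I_j \approx \frac{b}{N} \sum_{i=1}^{N} \frac{h_j(X_i)\, L(X_i)}{I(X_i)}$$

(Here b is the total luminance of the whole image.)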

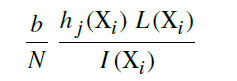

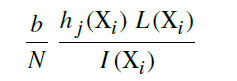

In other words, we can use Metropolis sampling to generate samples $X_i$ from the distribution of the scalar contribution function $I$. For each sample, the pixels it contributes to (based on the extent of the pixel filter function $h$) have the value

$$\frac{b}{N}\, \frac{h_j(X_i)\, L(X_i)}{I(X_i)}$$

added to them. Thus, brighter pixels have larger values than dimmer pixels due to more samples contributing to them (as long as the ratio $L(X_i)/I(X_i)$ is generally of the same magnitude). The Film::Splat() method defined in Section 7.8.1 is used to accumulate these contributions.
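The estimator can be checked on a toy problem. The following is a minimal sketch, not pbrt code: the "image" is two box-filtered pixels covering [0, 1), the radiance is a scalar ToyL(x) = x, and the contribution function ToyI(x) = 0.25 + x is deliberately not proportional to it. All names here (ToyL, ToyI, Uniform01, RenderToyImage) and the choice of independent uniform proposals are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical 1D integrand standing in for the radiance L(X).
inline double ToyL(double x) { return x; }
// Hypothetical scalar contribution function; nonzero wherever ToyL is nonzero.
inline double ToyI(double x) { return 0.25 + x; }

// Uniform value in [0, 1) built directly from the 32-bit generator output.
inline double Uniform01(std::mt19937 &rng) { return rng() / 4294967296.0; }

// Estimates the integral of ToyL over each of nPixels equal-width bins of
// [0, 1) using Metropolis samples distributed proportionally to ToyI.
std::vector<double> RenderToyImage(int nPixels, int nBootstrap, int nSamples,
                                   uint32_t seed) {
    assert(nPixels > 0 && nBootstrap > 0 && nSamples > 0);
    std::mt19937 rng(seed);

    // Estimate b, the integral of ToyI over [0, 1), from uniform samples.
    double sumI = 0.0;
    for (int i = 0; i < nBootstrap; ++i) sumI += ToyI(Uniform01(rng));
    const double b = sumI / nBootstrap;

    std::vector<double> pixels(nPixels, 0.0);
    double x = 0.5;  // arbitrary starting state
    for (int i = 0; i < nSamples; ++i) {
        // Independent uniform proposal (symmetric), so the acceptance
        // probability reduces to the ratio of contribution values.
        double y = Uniform01(rng);
        double a = std::min(1.0, ToyI(y) / ToyI(x));
        if (Uniform01(rng) < a) x = y;
        // Box filter: the sample adds (b / N) * L / I to its own bin only.
        int j = std::min(nPixels - 1, int(x * nPixels));
        pixels[j] += (b / nSamples) * ToyL(x) / ToyI(x);
    }
    return pixels;
}
```

With enough samples the two bins should approach the exact integrals of x over [0, 0.5) and [0.5, 1), which are 0.125 and 0.375. Unlike the renderer discussed here, this sketch records only the chain's current state and does not use the expected-values weighting.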

Purpose of the main MetropolisRenderer functions:

1.

struct PathSample {

BSDFSample bsdfSample;

float rrSample;

};

struct LightingSample {

BSDFSample bsdfSample;

float lightNum;

LightSample lightSample;

};

struct MLTSample {

MLTSample(int maxLength) {

cameraPathSamples.resize(maxLength);

lightPathSamples.resize(maxLength);

lightingSamples.resize(maxLength);

}

CameraSample cameraSample;

float lightNumSample, lightRaySamples[5];

vector<PathSample> cameraPathSamples, lightPathSamples;

vector<LightingSample> lightingSamples;

};

Purpose:

Figure 15.29: Contributions of Members of the MLTSample Structure to the Definition of a

Path through the Scene. The cameraSample defines the camera ray leaving the camera, and the

cameraPathSamples define how to sample the outgoing directions at the vertices of the camera path.

At each camera path vertex, the corresponding lightingSamples sample is used for a direct lighting

calculation. If bidirectional path tracing is being used, then lightNumSample and lightRaySamples are

used to generate a ray leaving a light source, and lightPathSamples are used to sample outgoing

directions at light path vertices.

2. static uint32_t GeneratePath(const RayDifferential &r,

const Spectrum &a, const Scene *scene, MemoryArena &arena,

const vector<PathSample> &samples, PathVertex *path,

RayDifferential *escapedRay, Spectrum *escapedAlpha)

Purpose: (GeneratePath() uses the samples to generate PathVertex entries; in other words, it turns sample values into path vertices.)

The GeneratePath() function below takes an initial ray (sampled from the camera or a light) and a vector

of PathSamples that it uses to sample a path through the scene. At each vertex of the

path, an instance of the PathVertex structure is initialized to store the information about

the surface intersection at the corresponding vertex. Figure 15.30 illustrates some of the

values stored.

3. Spectrum MetropolisRenderer::PathL(const MLTSample &sample, const Scene *scene, MemoryArena &arena, const Camera *camera, const Distribution1D *lightDistribution, PathVertex *cameraPath, PathVertex *lightPath, RNG &rng)

Purpose: (Given the MLTSample argument, which can be thought of as a bundle of many sample values including the CameraSample, i.e., the pixel position, PathL() generates the camera path vertices and light path vertices and computes how much radiance those vertices contribute to the pixel that the CameraSample corresponds to.)

PathL() is the method that computes the radiance L(X) for a given vector of sample values, which represents a light-carrying path as a sequence of points on surfaces in the scene. This method first uses the GeneratePath() function to convert the PathSamples for the camera and possibly a light source into one or two paths over surfaces in the scene before computing the radiance they transport. The cameraPath and lightPath parameters should be allocated by the caller to each have length at least equal to MetropolisRenderer::maxDepth.

4.

static void LargeStep(RNG &rng, MLTSample *sample, int maxDepth,

float x, float y, float t0, float t1, bool bidirectional) {

// Do large step mutation of _cameraSample_

sample->cameraSample.imageX = x;

sample->cameraSample.imageY = y;

sample->cameraSample.time = Lerp(rng.RandomFloat(), t0, t1);

sample->cameraSample.lensU = rng.RandomFloat();

sample->cameraSample.lensV = rng.RandomFloat();

for (int i = 0; i < maxDepth; ++i) {

// Apply large step to $i$th camera _PathSample_

PathSample &cps = sample->cameraPathSamples[i];

cps.bsdfSample.uComponent = rng.RandomFloat();

cps.bsdfSample.uDir[0] = rng.RandomFloat();

cps.bsdfSample.uDir[1] = rng.RandomFloat();

cps.rrSample = rng.RandomFloat();

// Apply large step to $i$th _LightingSample_

LightingSample &ls = sample->lightingSamples[i];

ls.bsdfSample.uComponent = rng.RandomFloat();

ls.bsdfSample.uDir[0] = rng.RandomFloat();

ls.bsdfSample.uDir[1] = rng.RandomFloat();

ls.lightNum = rng.RandomFloat();

ls.lightSample.uComponent = rng.RandomFloat();

ls.lightSample.uPos[0] = rng.RandomFloat();

ls.lightSample.uPos[1] = rng.RandomFloat();

}

if (bidirectional) {

// Apply large step to bidirectional light samples

sample->lightNumSample = rng.RandomFloat();

for (int i = 0; i < 5; ++i)

sample->lightRaySamples[i] = rng.RandomFloat();

for (int i = 0; i < maxDepth; ++i) {

// Apply large step to $i$th light _PathSample_

PathSample &lps = sample->lightPathSamples[i];

lps.bsdfSample.uComponent = rng.RandomFloat();

lps.bsdfSample.uDir[0] = rng.RandomFloat();

lps.bsdfSample.uDir[1] = rng.RandomFloat();

lps.rrSample = rng.RandomFloat();

}

}

}

Purpose:

(LargeStep() regenerates every sample value in the MLTSample argument. The caller specifies imageX and imageY directly, so the function can produce the sample data needed for a particular pixel.)

The MetropolisRenderer applies two different mutations to the MLTSample values. The first (the “large step” mutation) computes new uniform random samples for all of the sample values in X. Recall from Section 13.4.2 that it is important that there be greater than zero probability that every possible sample value be proposed; this is taken care of by the large step mutation. In general, the large step mutation helps us widely explore the entire state space without getting stuck on local “islands.”

5.

static inline void mutate(RNG &rng, float *v, float min = 0.f,

float max = 1.f) {

if (min == max) { *v = min; return; }

Assert(min < max);

float a = 1.f / 1024.f, b = 1.f / 64.f;

static const float logRatio = -logf(b/a);

float delta = (max - min) * b * expf(logRatio * rng.RandomFloat());

if (rng.RandomFloat() < 0.5f) {

*v += delta;

if (*v >= max) *v = min + (*v - max);

}

else {

*v -= delta;

if (*v < min) *v = max - (min - *v);

}

if (*v < min || *v >= max) *v = min;

}

static void SmallStep(RNG &rng, MLTSample *sample, int maxDepth,

int x0, int x1, int y0, int y1, float t0, float t1,

bool bidirectional) {

mutate(rng, &sample->cameraSample.imageX, x0, x1);

mutate(rng, &sample->cameraSample.imageY, y0, y1);

mutate(rng, &sample->cameraSample.time, t0, t1);

mutate(rng, &sample->cameraSample.lensU);

mutate(rng, &sample->cameraSample.lensV);

// Apply small step mutation to camera, lighting, and light samples

for (int i = 0; i < maxDepth; ++i) {

// Apply small step to $i$th camera _PathSample_

PathSample &eps = sample->cameraPathSamples[i];

mutate(rng, &eps.bsdfSample.uComponent);

mutate(rng, &eps.bsdfSample.uDir[0]);

mutate(rng, &eps.bsdfSample.uDir[1]);

mutate(rng, &eps.rrSample);

// Apply small step to $i$th _LightingSample_

LightingSample &ls = sample->lightingSamples[i];

mutate(rng, &ls.bsdfSample.uComponent);

mutate(rng, &ls.bsdfSample.uDir[0]);

mutate(rng, &ls.bsdfSample.uDir[1]);

mutate(rng, &ls.lightNum);

mutate(rng, &ls.lightSample.uComponent);

mutate(rng, &ls.lightSample.uPos[0]);

mutate(rng, &ls.lightSample.uPos[1]);

}

if (bidirectional) {

mutate(rng, &sample->lightNumSample);

for (int i = 0; i < 5; ++i)

mutate(rng, &sample->lightRaySamples[i]);

for (int i = 0; i < maxDepth; ++i) {

// Apply small step to $i$th light _PathSample_

PathSample &lps = sample->lightPathSamples[i];

mutate(rng, &lps.bsdfSample.uComponent);

mutate(rng, &lps.bsdfSample.uDir[0]);

mutate(rng, &lps.bsdfSample.uDir[1]);

mutate(rng, &lps.rrSample);

}

}

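}

Purpose:

(SmallStep() also recomputes every sample value in the MLTSample, but it draws the size of each perturbation from an exponential distribution, which favors small changes while still allowing larger ones. In other words, each new sample value differs only slightly from the previous one.)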

The SmallStep() function applies a small mutation to each sample value, with the size of the mutation drawn from an exponential distribution as defined by Equation (13.9): $r = b\, e^{-\log(b/a)\, \xi}$, where $\xi$ is a uniform random number in $[0, 1)$, giving a sample in the range [a, b]. In mutate(), a = 1/1024 and b = 1/64, and the result is scaled by the width of the value's range. The advantage of sampling with an exponential distribution like this is that it naturally tries a variety of mutation sizes. It preferentially makes small mutations, close to the minimum magnitudes specified, which help locally explore the path space in small areas of high contribution where large mutations would tend to be rejected. On the other hand, because it also can make larger mutations, it also avoids spending too much time in a small part of the path space in cases where larger mutations have a good likelihood of acceptance.
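The perturbation can be isolated into two small functions. This is a sketch under stated assumptions rather than the renderer's code: MutationSize and PerturbWrapped are hypothetical names, the random numbers are passed in explicitly so that the behavior is easy to inspect, and the value range is fixed to [0, 1).

```cpp
#include <cassert>
#include <cmath>

// Perturbation size r = b * exp(-log(b / a) * xi): xi = 0 gives b and
// xi = 1 gives a, so uniform xi spreads sizes evenly on a log scale.
inline float MutationSize(float xi, float a = 1.f / 1024.f,
                          float b = 1.f / 64.f) {
    return b * std::exp(-std::log(b / a) * xi);
}

// Applies a perturbation of the given size to v in [0, 1), wrapping around
// at the ends of the interval. `up` selects the direction of the step.
inline float PerturbWrapped(float v, float size, bool up) {
    assert(v >= 0.f && v < 1.f && size >= 0.f && size < 1.f);
    if (up) {
        v += size;
        if (v >= 1.f) v -= 1.f;
    } else {
        v -= size;
        if (v < 0.f) v += 1.f;
    }
    // Guard against round-off pushing the result outside [0, 1).
    if (v < 0.f || v >= 1.f) v = 0.f;
    return v;
}
```

Wrapping around, rather than clamping, keeps the mutation symmetric: a step from v to v' is exactly as likely as the step back, which is what lets the transition densities cancel in the acceptance probability.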

How MetropolisRenderer generates the image

1. void MetropolisRenderer::Render(const Scene *scene)

void MetropolisRenderer::Render(const Scene *scene) {

PBRT_MLT_STARTED_RENDERING();

if (scene->lights.size() > 0) {

int x0, x1, y0, y1;

camera->film->GetPixelExtent(&x0, &x1, &y0, &y1);

float t0 = camera->shutterOpen, t1 = camera->shutterClose;

Distribution1D *lightDistribution = ComputeLightSamplingCDF(scene);

if (directLighting != NULL) {

PBRT_MLT_STARTED_DIRECTLIGHTING();

// Compute direct lighting before Metropolis light transport

if (nDirectPixelSamples > 0) {

LDSampler sampler(x0, x1, y0, y1, nDirectPixelSamples, t0, t1);

Sample *sample = new Sample(&sampler, directLighting, NULL, scene);

vector<Task *> directTasks;

int nDirectTasks = max(32 * NumSystemCores(),

(camera->film->xResolution * camera->film->yResolution) / (16*16));

nDirectTasks = RoundUpPow2(nDirectTasks);

ProgressReporter directProgress(nDirectTasks, "Direct Lighting");

for (int i = 0; i < nDirectTasks; ++i)

directTasks.push_back(new SamplerRendererTask(scene, this, camera, directProgress,

&sampler, sample, false, i, nDirectTasks));

std::reverse(directTasks.begin(), directTasks.end());

EnqueueTasks(directTasks);

WaitForAllTasks();

for (uint32_t i = 0; i < directTasks.size(); ++i)

delete directTasks[i];

delete sample;

directProgress.Done();

}

camera->film->WriteImage();

PBRT_MLT_FINISHED_DIRECTLIGHTING();

}

// Take initial set of samples to compute $b$

PBRT_MLT_STARTED_BOOTSTRAPPING(nBootstrap);

RNG rng(0);

MemoryArena arena;

vector<float> bootstrapI;

vector<PathVertex> cameraPath(maxDepth, PathVertex());

vector<PathVertex> lightPath(maxDepth, PathVertex());

float sumI = 0.f;

bootstrapI.reserve(nBootstrap);

MLTSample sample(maxDepth);

for (uint32_t i = 0; i < nBootstrap; ++i) {

// Generate random sample and path radiance for MLT bootstrapping

float x = Lerp(rng.RandomFloat(), x0, x1);

float y = Lerp(rng.RandomFloat(), y0, y1);

LargeStep(rng, &sample, maxDepth, x, y, t0, t1, bidirectional);

Spectrum L = PathL(sample, scene, arena, camera, lightDistribution,

&cameraPath[0], &lightPath[0], rng);

// Compute contribution for random sample for MLT bootstrapping

float I = ::I(L);

sumI += I;

bootstrapI.push_back(I);

arena.FreeAll();

}

float b = sumI / nBootstrap;

PBRT_MLT_FINISHED_BOOTSTRAPPING(b);

Info("MLT computed b = %f", b);

// Select initial sample from bootstrap samples

float contribOffset = rng.RandomFloat() * sumI;

rng.Seed(0);

sumI = 0.f;

MLTSample initialSample(maxDepth);

for (uint32_t i = 0; i < nBootstrap; ++i) {

float x = Lerp(rng.RandomFloat(), x0, x1);

float y = Lerp(rng.RandomFloat(), y0, y1);

LargeStep(rng, &initialSample, maxDepth, x, y, t0, t1,

bidirectional);

sumI += bootstrapI[i];

if (sumI > contribOffset)

break;

}

// Launch tasks to generate Metropolis samples

uint32_t nTasks = largeStepsPerPixel;

uint32_t largeStepRate = nPixelSamples / largeStepsPerPixel;

Info("MLT running %d tasks, large step rate %d", nTasks, largeStepRate);

ProgressReporter progress(nTasks * largeStepRate, "Metropolis");

vector<Task *> tasks;

Mutex *filmMutex = Mutex::Create();

Assert(IsPowerOf2(nTasks));

uint32_t scramble[2] = { rng.RandomUInt(), rng.RandomUInt() };

uint32_t pfreq = (x1-x0) * (y1-y0);

for (uint32_t i = 0; i < nTasks; ++i) {

float d[2];

Sample02(i, scramble, d);

tasks.push_back(new MLTTask(progress, pfreq, i,

d[0], d[1], x0, x1, y0, y1, t0, t1, b, initialSample,

scene, camera, this, filmMutex, lightDistribution));

}

EnqueueTasks(tasks);

WaitForAllTasks();

for (uint32_t i = 0; i < tasks.size(); ++i)

delete tasks[i];

progress.Done();

Mutex::Destroy(filmMutex);

delete lightDistribution;

}

camera->film->WriteImage();

PBRT_MLT_FINISHED_RENDERING();

}

Details:

a.

// Take initial set of samples to compute $b$

PBRT_MLT_STARTED_BOOTSTRAPPING(nBootstrap);

RNG rng(0);

MemoryArena arena;

vector<float> bootstrapI;

vector<PathVertex> cameraPath(maxDepth, PathVertex());

vector<PathVertex> lightPath(maxDepth, PathVertex());

float sumI = 0.f;

bootstrapI.reserve(nBootstrap);

MLTSample sample(maxDepth);

for (uint32_t i = 0; i < nBootstrap; ++i) {

// Generate random sample and path radiance for MLT bootstrapping

float x = Lerp(rng.RandomFloat(), x0, x1);

float y = Lerp(rng.RandomFloat(), y0, y1);

LargeStep(rng, &sample, maxDepth, x, y, t0, t1, bidirectional);

Spectrum L = PathL(sample, scene, arena, camera, lightDistribution,

&cameraPath[0], &lightPath[0], rng);

// Compute contribution for random sample for MLT bootstrapping

float I = ::I(L);

sumI += I;

bootstrapI.push_back(I);

arena.FreeAll();

}

float b = sumI / nBootstrap;

PBRT_MLT_FINISHED_BOOTSTRAPPING(b);

inline float I(const Spectrum &L) {

return L.y();

}

Purpose:

(The main job here is to compute $b = \int_\Omega I(X)\, \mathrm{d}\Omega$. At the same time, bootstrapI stores the I value produced by each sample, i.e., the luminance of its radiance.)

In order to compute the integral of the scalar contribution function, $b = \int_\Omega I(X)\, \mathrm{d}\Omega$, we can use the sample generation and evaluation routines implemented previously: the LargeStep() function is called to generate uniform random samples over the sample space domain, and PathL() computes the value of the function for each one. The sumI variable accumulates the sum of the scalar contribution functions. This fragment also stores the contribution of each sample in the bootstrapI array; these will be used in a second pass through the samples, below, to select the initial sample for rendering.

b.

float contribOffset = rng.RandomFloat() * sumI;

rng.Seed(0);

sumI = 0.f;

MLTSample initialSample(maxDepth);

for (uint32_t i = 0; i < nBootstrap; ++i) {

float x = Lerp(rng.RandomFloat(), x0, x1);

float y = Lerp(rng.RandomFloat(), y0, y1);

LargeStep(rng, &initialSample, maxDepth, x, y, t0, t1,

bidirectional);

sumI += bootstrapI[i];

if (sumI > contribOffset)

break;

}

Purpose:

(Put most simply, this fragment produces an initialSample.)

Here we select an initial sample with probability proportional to its contribution relative to the sum of all of the sample contributions computed above. We can do this without explicitly computing a normalized CDF by selecting a uniform random value between zero and the contribution sum and then looping over all of the path samples from the start until we find the one whose contribution causes the accumulated sum of contributions to pass this value. We don’t need to save all of the sample values generated during the first pass; instead we re-seed the random number generator to the same seed as before and then apply the same mutations to the MLTSample; the resulting sample will then be the appropriate one. (Calling rng.Seed(0) makes the random number generator produce the same sequence of random numbers as in the first pass.)
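Both ideas in this step, proportional selection with a running sum and replaying a seeded stream instead of storing it, can be sketched on their own. SelectProportional and ReplayValue are hypothetical helpers, and std::mt19937 stands in for the renderer's RNG; neither is part of the original code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Returns the index of the first entry whose running sum exceeds u * total,
// so entry i is chosen with probability weights[i] / total. u is in [0, 1).
inline size_t SelectProportional(const std::vector<float> &weights, float u) {
    assert(!weights.empty() && u >= 0.f && u < 1.f);
    float total = 0.f;
    for (float w : weights) total += w;
    const float offset = u * total;
    float sum = 0.f;
    for (size_t i = 0; i < weights.size(); ++i) {
        sum += weights[i];
        if (sum > offset) return i;
    }
    return weights.size() - 1;  // fallback, e.g. when every weight is zero
}

// Regenerates the index-th value of a seeded stream without storing the
// earlier ones: re-seed, then draw the same number of values again.
inline uint32_t ReplayValue(uint32_t seed, size_t index) {
    std::mt19937 rng(seed);
    uint32_t value = 0;
    for (size_t i = 0; i <= index; ++i) value = uint32_t(rng());
    return value;
}
```

The trade-off is memory for time: only one float per bootstrap sample is kept, at the cost of regenerating the samples up to the selected index.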

c. The rest of the code simply launches the MLTTasks, whose work is done in MLTTask::Run().

2. MLTTask::Run()

void MLTTask::Run() {

PBRT_MLT_STARTED_MLT_TASK(this);

// Declare basic _MLTTask_ variables and prepare for sampling

PBRT_MLT_STARTED_TASK_INIT();

uint32_t nPixels = (x1-x0) * (y1-y0);

uint32_t nPixelSamples = renderer->nPixelSamples;

uint32_t largeStepRate = nPixelSamples / renderer->largeStepsPerPixel;

Assert(largeStepRate > 1);

uint64_t nTaskSamples = uint64_t(nPixels) * uint64_t(largeStepRate);

uint32_t consecutiveRejects = 0;

uint32_t progressCounter = progressUpdateFrequency;

// Declare variables for storing and computing MLT samples

MemoryArena arena;

RNG rng(taskNum);

vector<PathVertex> cameraPath(renderer->maxDepth, PathVertex());

vector<PathVertex> lightPath(renderer->maxDepth, PathVertex());

vector<MLTSample> samples(2, MLTSample(renderer->maxDepth));

Spectrum L[2];

float I[2];

uint32_t current = 0, proposed = 1;

// Compute _L[current]_ for initial sample

samples[current] = initialSample;

L[current] = renderer->PathL(initialSample, scene, arena, camera,

lightDistribution, &cameraPath[0], &lightPath[0], rng);

I[current] = ::I(L[current]);

arena.FreeAll();

// Compute randomly permuted table of pixel indices for large steps

uint32_t pixelNumOffset = 0;

vector<int> largeStepPixelNum;

largeStepPixelNum.reserve(nPixels);

for (uint32_t i = 0; i < nPixels; ++i) largeStepPixelNum.push_back(i);

Shuffle(&largeStepPixelNum[0], nPixels, 1, rng);

PBRT_MLT_FINISHED_TASK_INIT();

for (uint64_t s = 0; s < nTaskSamples; ++s) {

// Compute proposed mutation to current sample

PBRT_MLT_STARTED_MUTATION();

samples[proposed] = samples[current];

bool largeStep = ((s % largeStepRate) == 0);

if (largeStep) {

int x = x0 + largeStepPixelNum[pixelNumOffset] % (x1 - x0);

int y = y0 + largeStepPixelNum[pixelNumOffset] / (x1 - x0);

LargeStep(rng, &samples[proposed], renderer->maxDepth,

x + dx, y + dy, t0, t1, renderer->bidirectional);

++pixelNumOffset;

}

else

SmallStep(rng, &samples[proposed], renderer->maxDepth,

x0, x1, y0, y1, t0, t1, renderer->bidirectional);

PBRT_MLT_FINISHED_MUTATION();

// Compute contribution of proposed sample

L[proposed] = renderer->PathL(samples[proposed], scene, arena, camera,

lightDistribution, &cameraPath[0], &lightPath[0], rng);

I[proposed] = ::I(L[proposed]);

arena.FreeAll();

// Compute acceptance probability for proposed sample

float a = min(1.f, I[proposed] / I[current]);

// Splat current and proposed samples to _Film_

PBRT_MLT_STARTED_SAMPLE_SPLAT();

if (I[current] > 0.f) {

if (!isinf(1.f / I[current])) {

Spectrum contrib = (b / nPixelSamples) * L[current] / I[current];

camera->film->Splat(samples[current].cameraSample,

(1.f - a) * contrib);

}

}

if (I[proposed] > 0.f) {

if (!isinf(1.f / I[proposed])) {

Spectrum contrib = (b / nPixelSamples) * L[proposed] / I[proposed];

camera->film->Splat(samples[proposed].cameraSample,

a * contrib);

}

}

PBRT_MLT_FINISHED_SAMPLE_SPLAT();

// Randomly accept proposed path mutation (or not)

if (consecutiveRejects >= renderer->maxConsecutiveRejects ||

rng.RandomFloat() < a) {

PBRT_MLT_ACCEPTED_MUTATION(a, &samples[current], &samples[proposed]);

current ^= 1;

proposed ^= 1;

consecutiveRejects = 0;

}

else

{

PBRT_MLT_REJECTED_MUTATION(a, &samples[current], &samples[proposed]);

++consecutiveRejects;

}

if (--progressCounter == 0) {

progress.Update();

progressCounter = progressUpdateFrequency;

}

}

Assert(pixelNumOffset == nPixels);

// Update display for recently computed Metropolis samples

PBRT_MLT_STARTED_DISPLAY_UPDATE();

int ntf = AtomicAdd(&renderer->nTasksFinished, 1);

int64_t totalSamples = int64_t(nPixels) * int64_t(nPixelSamples);

float splatScale = float(double(totalSamples) / double(ntf * nTaskSamples));

camera->film->UpdateDisplay(x0, y0, x1, y1, splatScale);

if ((taskNum % 8) == 0) {

MutexLock lock(*filmMutex);

camera->film->WriteImage(splatScale);

}

PBRT_MLT_FINISHED_DISPLAY_UPDATE();

PBRT_MLT_FINISHED_MLT_TASK(this);

}

Details:

a.

// Compute randomly permuted table of pixel indices for large steps

uint32_t pixelNumOffset = 0;

vector<int> largeStepPixelNum;

largeStepPixelNum.reserve(nPixels);

for (uint32_t i = 0; i < nPixels; ++i) largeStepPixelNum.push_back(i);

Shuffle(&largeStepPixelNum[0], nPixels, 1, rng);

Purpose:

(This initializes largeStepPixelNum, which holds the indices of all pixels in random order.)

Each pixel in the image should receive a single proposed large step mutation for each

task. So that the transition densities between samples for large step mutations are uniform,

the order in which pixels are visited by the large step mutations should be randomized.

(It should also be different in different tasks.) The bookkeeping for this is handled by

assigning each pixel an index and randomly shuffling an array of the pixel indices. The

code that calls LargeStep() below will in turn convert pixel indices back to (x, y) pixel

values.

b.

PBRT_MLT_STARTED_MUTATION();

samples[proposed] = samples[current];

bool largeStep = ((s % largeStepRate) == 0);

if (largeStep) {

int x = x0 + largeStepPixelNum[pixelNumOffset] % (x1 - x0);

int y = y0 + largeStepPixelNum[pixelNumOffset] / (x1 - x0);

LargeStep(rng, &samples[proposed], renderer->maxDepth,

x + dx, y + dy, t0, t1, renderer->bidirectional);

++pixelNumOffset;

}

else

SmallStep(rng, &samples[proposed], renderer->maxDepth,

x0, x1, y0, y1, t0, t1, renderer->bidirectional);

PBRT_MLT_FINISHED_MUTATION();

Purpose:

(This fragment sits inside the loop for (uint64_t s = 0; s < nTaskSamples; ++s). Because of the test bool largeStep = ((s % largeStepRate) == 0);, every largeStepRate iterations perform one LargeStep and largeStepRate - 1 SmallSteps. Given what SmallStep does, this implements the local exploration described earlier: when a path that makes a large contribution to the image is found, it’s easy to sample other paths that are similar to that one by making small perturbations to it.

Also, when LargeStep runs, the x and y values (imageX, imageY) are taken from the largeStepPixelNum array, i.e., from a randomly ordered pixel index.)

The implementation of the Metropolis sampling routine in the following fragments follows

the pseudo-code that uses the expected values technique from Section 13.4.1: a

mutation is proposed, the value of the function for the mutated sample and the acceptance

probability are computed, the contributions of both the new and old samples are

recorded, and the proposed mutation is randomly accepted based on its acceptance

probability.

The implementation here regularly alternates between the large step and the small step

mutations, taking one large step and then largeStepRate-1 small steps, another large

step, and so forth. Although it could instead randomly choose between the two mutations

with corresponding probabilities, with the approach implemented here we ensure that

this task will perform exactly one large step mutation for each pixel.

When a large step mutation does occur, the (x, y) image location to pass to the large

step is found in a two-step process. First, the current index in the shuffled pixel index

array is converted to an integer (x, y) pixel coordinate. Next, an offset in $[0, 1]^2$ is added

to the integer pixel coordinate. The same offset is used for all of the large steps in this

task; the offset values are computed by the code that launches the task and stored in

the MLTTask::dx, MLTTask::dy member variables. To compute these values, the code that

launches MLTTasks computes a low-discrepancy sampling pattern in $[0, 1]^2$, with one

sample for each task. Thus, in the aggregate when all of the tasks have finished, not only

will each pixel have received the same number of proposed large step mutations, but also

their (x, y) pixel locations will be well distributed within each pixel’s area.
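The index bookkeeping is easy to check in isolation. The sketch below uses hypothetical helpers (PixelPos, LargeStepPosition, CountLargeSteps) that mirror the arithmetic in the loop; it is meant as an illustration of the schedule, not as a replacement for the task code.

```cpp
#include <cassert>
#include <cstdint>

struct PixelPos { float x, y; };

// Converts a linear pixel index into a continuous image position inside the
// pixel extent starting at (x0, y0) with width x1 - x0, then adds the
// per-task offset (dx, dy).
inline PixelPos LargeStepPosition(uint32_t pixelIndex, int x0, int x1, int y0,
                                  float dx, float dy) {
    assert(x1 > x0);
    const uint32_t width = uint32_t(x1 - x0);
    int x = x0 + int(pixelIndex % width);
    int y = y0 + int(pixelIndex / width);
    return PixelPos{x + dx, y + dy};
}

// Counts how many iterations of a task's sample loop are large steps when a
// large step is taken every largeStepRate iterations.
inline uint64_t CountLargeSteps(uint32_t nPixels, uint32_t largeStepRate) {
    assert(largeStepRate > 0);
    const uint64_t nTaskSamples = uint64_t(nPixels) * largeStepRate;
    uint64_t count = 0;
    for (uint64_t s = 0; s < nTaskSamples; ++s)
        if (s % largeStepRate == 0) ++count;
    return count;
}
```

Because the loop runs nPixels * largeStepRate times and takes a large step on every multiple of largeStepRate, the count always equals nPixels, which is what the Assert(pixelNumOffset == nPixels) at the end of MLTTask::Run() verifies.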

c.

// Compute contribution of proposed sample

L[proposed] = renderer->PathL(samples[proposed], scene, arena, camera,

lightDistribution, &cameraPath[0], &lightPath[0], rng);

I[proposed] = ::I(L[proposed]);

Purpose:

(The proposed sample was generated above; this fragment computes its radiance and luminance.)

Given the proposed sample, the previously defined PathL() and I() methods compute

the sample’s radiance value and scalar contribution.

d.

// Compute acceptance probability for proposed sample

float a = min(1.f, I[proposed] / I[current]);

// Splat current and proposed samples to _Film_

PBRT_MLT_STARTED_SAMPLE_SPLAT();

if (I[current] > 0.f) {

if (!isinf(1.f / I[current])) {

Spectrum contrib = (b / nPixelSamples) * L[current] / I[current];

camera->film->Splat(samples[current].cameraSample,

(1.f - a) * contrib);

}

}

if (I[proposed] > 0.f) {

if (!isinf(1.f / I[proposed])) {

Spectrum contrib = (b / nPixelSamples) * L[proposed] / I[proposed];

camera->film->Splat(samples[proposed].cameraSample,

a * contrib);

}

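}

Purpose:

(This directly evaluates the per-sample contribution $\frac{b}{N}\,\frac{L(X_i)}{I(X_i)}$ from the pixel estimate, once for the current sample and once for the proposed one.)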

Since all of the mutations here are symmetric, the transition probabilities cancel when

computing the acceptance probability and so the acceptance probability for the proposed

sample is found using Equation (13.8).

The contributions for the proposed and current samples are computed using Equation (15.21) and the two samples are splatted into the image using the Film::Splat() method. Before being provided to the film, they are scaled with weights based on the expected values optimization introduced in Section 13.4.1. Note that Film::Splat() is responsible for incorporating the contribution of the filter function $h_j(X)$ before storing the sample’s contribution in the pixels that it contributes to.
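The weights applied to the two splats can be written out as a small function. This is a sketch with scalar inputs: SplatWeights and ExpectedValueWeights are hypothetical names, and treating a zero-contribution current sample as "always accept" is an assumption of the sketch rather than something stated in the text.

```cpp
#include <algorithm>
#include <cassert>

struct SplatWeights {
    float accept;    // acceptance probability a
    float current;   // multiplies L[current] before splatting
    float proposed;  // multiplies L[proposed] before splatting
};

inline SplatWeights ExpectedValueWeights(float Icurrent, float Iproposed,
                                         float b, float nPixelSamples) {
    assert(Icurrent >= 0.f && Iproposed >= 0.f && nPixelSamples > 0.f);
    SplatWeights w;
    // Assumption of this sketch: a zero-contribution current sample always
    // accepts the proposal.
    w.accept = (Icurrent > 0.f) ? std::min(1.f, Iproposed / Icurrent) : 1.f;
    const float scale = b / nPixelSamples;
    // The current sample is recorded with weight (1 - a), the proposal with a.
    w.current = (Icurrent > 0.f) ? (1.f - w.accept) * scale / Icurrent : 0.f;
    w.proposed = (Iproposed > 0.f) ? w.accept * scale / Iproposed : 0.f;
    return w;
}
```

For example, with b = 4, nPixelSamples = 2, I[current] = 2 and I[proposed] = 1, the acceptance probability is 0.5 and the two weights are 0.5 and 1.0. Both samples contribute on every iteration regardless of whether the proposal is later accepted, which is the point of the expected-values technique.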

e.

// Randomly accept proposed path mutation (or not)

if (consecutiveRejects >= renderer->maxConsecutiveRejects ||

rng.RandomFloat() < a) {

PBRT_MLT_ACCEPTED_MUTATION(a, &samples[current], &samples[proposed]);

current ^= 1;

proposed ^= 1;

consecutiveRejects = 0;

}

else

{

PBRT_MLT_REJECTED_MUTATION(a, &samples[current], &samples[proposed]);

++consecutiveRejects;

}

Purpose:

Finally, the proposed mutation is either accepted or rejected, based on the computed

acceptance probability a (and the fixed limit of successive rejections that are allowed).

If the mutation is accepted, then the values of the current and proposed indices are

exchanged; because these variables can only have the values zero or one, taking

the exclusive-OR of each of them with one does this efficiently.
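The accept/reject step, including the cap on consecutive rejections, can be sketched as a function over a small state struct. ChainState and AcceptOrReject are hypothetical names introduced for this illustration, and the uniform random value is passed in so the behavior is deterministic.

```cpp
#include <cassert>
#include <cstdint>

struct ChainState {
    uint32_t current = 0, proposed = 1;  // indices into two-element arrays
    uint32_t consecutiveRejects = 0;
};

// Returns true if the proposal was accepted. u is a uniform value in [0, 1).
inline bool AcceptOrReject(ChainState &s, float a, float u,
                           uint32_t maxConsecutiveRejects) {
    assert(s.current + s.proposed == 1);
    if (s.consecutiveRejects >= maxConsecutiveRejects || u < a) {
        s.current ^= 1;   // 0 <-> 1
        s.proposed ^= 1;  // 1 <-> 0
        s.consecutiveRejects = 0;
        return true;
    }
    ++s.consecutiveRejects;
    return false;
}
```

Swapping indices instead of copying samples means an accepted mutation costs two XOR operations, and the next proposal simply overwrites the slot that held the old current sample.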

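Summary:

The key point is that when a pixel's radiance is computed, every sample that lands in the pixel contributes the radiance it has gathered. As the code above shows, every largeStepRate loop iterations perform one LargeStep and largeStepRate - 1 SmallSteps. A SmallStep only perturbs the current sample slightly around its current pixel position, which produces the effect described earlier: when a path that makes a large contribution to the image is found, it’s easy to sample other paths that are similar to that one by making small perturbations to it. Only after the SmallSteps are done does a LargeStep jump to the next pixel to gather its radiance.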